Higher education has long been a vehicle for economic mobility and the primary center for workforce skill development. But alongside recognition of the many individual and societal benefits of postsecondary education has come a sharper focus on the individual and societal costs of financing it. In light of national conversations about rising student loan debt and repayment, there have been increasing calls to improve higher education accountability and to interrogate the value of different higher education programs.

The U.S. Department of Education recently requested feedback on a policy proposal to create a list of “low-financial-value” higher education programs. The Department hopes the list will highlight programs that do not provide substantial financial benefits to students relative to the costs incurred, with the aim of (1) steering students away from those programs and (2) pressuring institutions on the list to improve the value of those programs, on either the cost or the benefit side. Drawing on my comments to the Department, in this piece I outline the key considerations when measuring the value of a college education, the implications of those decisions for which programs the list will flag, and how the Department’s efforts can be more effective at achieving its goals.

Why create a list of low-financial-value programs?

Ultimately, whether college will “pay off” is highly individualized, dependent on students’ earnings potential absent education, how they fund the education, and some combination of effort and luck that will determine their post-completion employment. What value does a federal list of “low-financial-value” programs provide students beyond their own knowledge of these factors?

First, it is challenging for students to evaluate the cost of college given that the “sticker price” that colleges list rarely reflects the “net price” most students actually pay after accounting for financial aid. Many higher education institutions employ a “high cost, high aid” model that results in students paying wildly different prices for the same education. Colleges are supposed to provide “net price calculators” on their websites to help students estimate their actual expenses, but a recent report from the U.S. Government Accountability Office found that only 59% of colleges provided any net price estimate and only 9% estimated net price accurately. When students do not have accurate estimates of costs, they are vulnerable to making suboptimal enrollment decisions.

Second, it is difficult for students to estimate the benefits of postsecondary education. While on average individuals earn more as they accrue more education—with associate degree holders earning $7,800 more each year than those with a high school diploma and bachelor’s degree holders earning $21,200 more each year than those with an associate degree—that return varies substantially across fields of study within each level of education and across institutions within those fields of study. Yet students rarely have access to this program-specific information when making their enrollment decisions.

The Department has focused on developing a list of “low-financial-value” programs from an individual, monetary perspective. But it is important to note there are non-financial costs and benefits to society, as well as to individuals. Many careers have high value to society but do not typically pay high wages. Higher education institutions cannot control the local labor market, and there is a risk that in response to the proposed list, institutions would simply cut “low-financial-value” programs, worsening labor shortages in some key professions. For example, wages are notoriously low in the early education sector, where labor shortages and high turnover rates have significant negative effects on student outcomes. Flagging postsecondary programs that result in slightly higher wages for their childcare graduates is less productive than policy efforts to ensure pay adequate to attract and retain workers in this crucial profession.

How to measure the value of a college education?

This is not the first time the Department has proposed holding programs accountable for their graduates’ employment outcomes. The most analogous effort has been the measurement of “gainful employment” (GE) for career programs. As the Biden administration prepares to release a new gainful employment rule in spring 2023, elements of that effort offer a starting point for the current accountability initiative. Specifically, the proposed GE rules, which use both the previously calculated debt-to-earnings ratio and a new “high school equivalent” benchmark for outcomes, provide a framework for evaluating the broader set of programs and credential levels proposed under the “low-financial-value” effort.

Setting Benefits Benchmarks

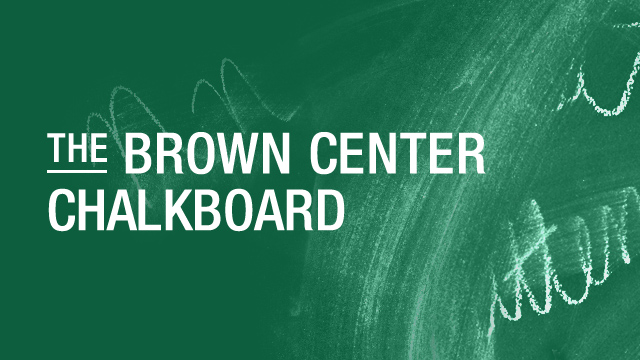

The primary financial benefits of a postsecondary education are greater employment stability and higher wages. The U.S. Census Bureau’s Post-Secondary Employment Outcomes (PSEO) data, produced in partnership with states, measure both outcomes, though wage data only include those earning above a “minimum wage” threshold and coverage varies across states. With those caveats, I use PSEO to examine outcomes for programs in the four states reporting data for more than 75% of graduates (Indiana, Montana, Texas, and Virginia), limiting the analysis to programs with at least 40 graduates. The Department is deliberating on which benchmark to measure outcomes against, and here I examine how programs would stack up against two potential wage benefit benchmarks: 1) earning more than 225% of the federal poverty level ($28,710, which is similar to a $25,000 benchmark frequently proposed); and 2) earning more than the average high school graduate ($36,600). These benchmarks are compared against the reported earnings of a program’s median graduate; programs where the median graduate’s earnings fail to meet the benchmark are at risk of being labeled a “low-financial-value” program.
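The two earnings benchmarks reduce to simple threshold comparisons. The following is a minimal sketch of that comparison, not the Department's method or the analysis behind the figures here: the function name and the sample programs are hypothetical, and only the two dollar thresholds come from the text.

```python
# Benchmarks quoted in the text (annual dollars).
POVERTY_BENCHMARK = 28_710      # 225% of the federal poverty level
HIGH_SCHOOL_BENCHMARK = 36_600  # average high school graduate earnings


def failed_benchmarks(median_earnings: float) -> list[str]:
    """Return the names of the earnings benchmarks a program fails.

    A program fails a benchmark when its median graduate does not earn
    more than the benchmark amount.
    """
    failed = []
    if median_earnings <= POVERTY_BENCHMARK:
        failed.append("225% of poverty")
    if median_earnings <= HIGH_SCHOOL_BENCHMARK:
        failed.append("high school earnings")
    return failed


# Hypothetical programs, for illustration only (not PSEO data).
sample_programs = {
    "Certificate A": 26_000,
    "Associate B": 33_500,
    "Bachelor's C": 48_000,
}
results = {name: failed_benchmarks(pay) for name, pay in sample_programs.items()}

# Gap between the two benchmarks, and the poverty level implied by the 225% figure.
benchmark_gap = HIGH_SCHOOL_BENCHMARK - POVERTY_BENCHMARK
implied_poverty_level = POVERTY_BENCHMARK / 2.25

for name, failed in results.items():
    print(name, failed or "passes both")
```

Because the second threshold sits $7,890 above the first, any program failing the poverty benchmark also fails the high school benchmark, while a program such as the hypothetical "Associate B" fails only the higher one.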

Many certificate programs produce low wages

As illustrated in Figure 1, while only 2.8% of all programs fail the first benchmark of 225% of the federal poverty level, 15% of postsecondary programs fail the second benchmark against high school graduate earnings. Failure rates vary across credential levels, with certificates being most likely to produce low wages. Though nearly all bachelor’s and master’s degree programs meet both benchmarks, 3% of associate degrees, 6% of long-term certificates (one to two years) and 10% of short-term certificates (less than a year) fail to produce median earnings above 225% of the federal poverty level, and more than a third of certificate programs have median graduate earnings below that of an average high school graduate.

That no master’s programs fail a high-school earnings benchmark is not surprising: the counterfactual for master’s program graduates is the earnings from holding a bachelor’s degree, not the earnings from a high school degree. However, calculating a “bachelor’s degree equivalent” benchmark would be challenging given wide variation in the returns to bachelor’s degrees. That difficulty motivates the need to consider additional outcomes (e.g., employment) and to contextualize benefits with cost when assessing the value of master’s programs.

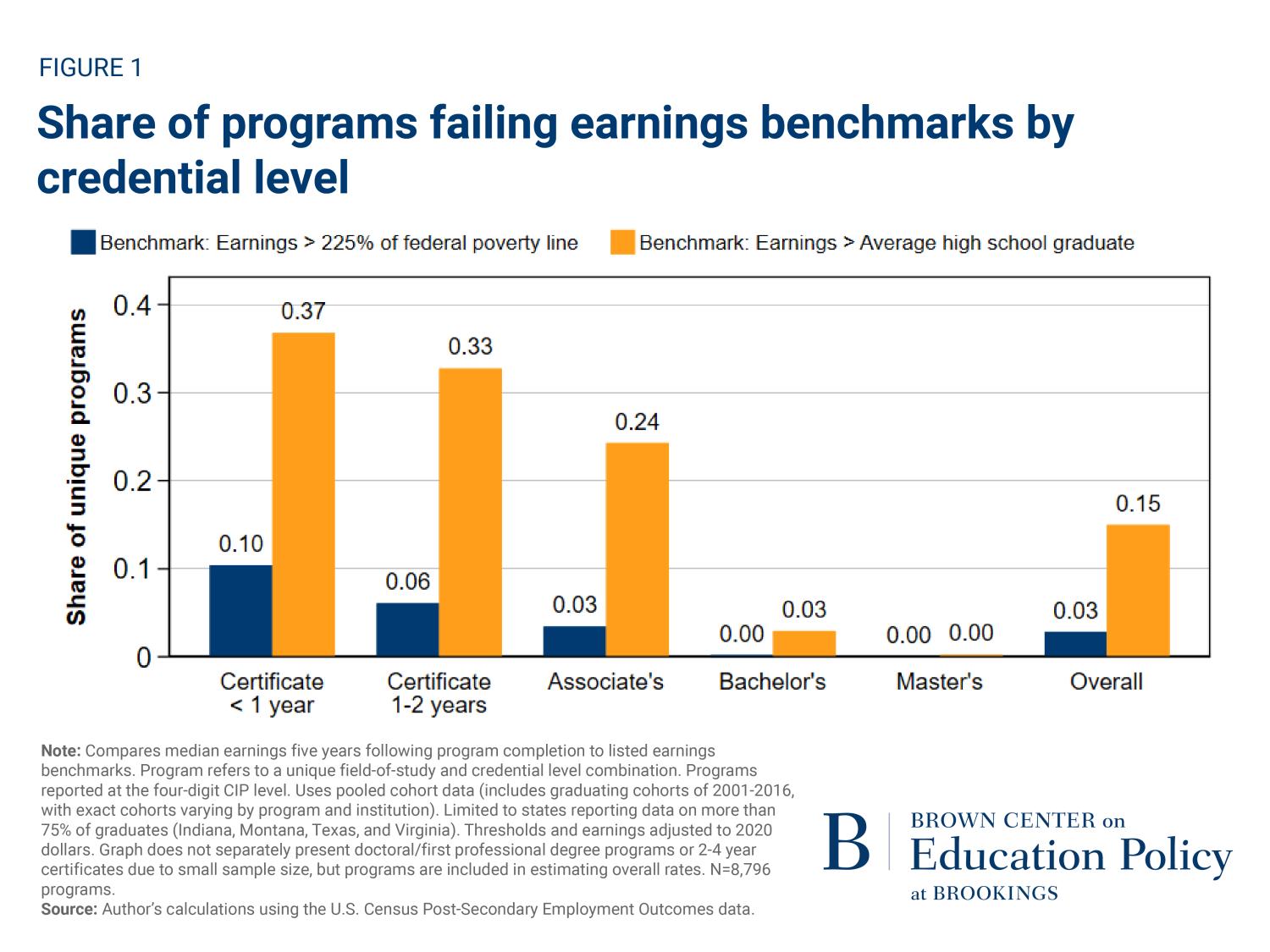

More programs pass employment benchmarks

I next constructed a “high school equivalency” employment benchmark of more than 50% or 60% of graduates employed (in any field) five years after graduation. In Figure 2, I show that while fewer programs fail employment benchmarks than the earnings thresholds, many certificate programs see a substantial share of their graduates unemployed. About one fifth of short-term certificate programs fail to see 60% or more of their graduates employed five years after graduation.

Programs with comparatively worse earnings outcomes are not always those with worse employment outcomes. For example, about two thirds of short-term certificates in Family/Human Development programs (typically early childhood education programs) have median graduate earnings below 225% of the federal poverty level, but only 9% of those programs fail the employment benchmark, mirroring research finding many short-term certificates lead to employment stability, even if they do not result in high wages. Conversely, while virtually no master’s programs failed the earnings threshold, about 4% of master’s programs result in fewer than 50% of graduates employed.

Cost-Benefit Comparison

While graduates’ earnings and employment are important outcomes, there are many programs where graduates meet these thresholds but perhaps not enough to justify the cost of the program, hence the Department’s intent to incorporate college costs in constructing a “low-financial-value” list. The Department could measure college costs in two ways—how much students pay up front (e.g., average net price) and how much they repay over the course of their lifetime (e.g., debt repayment, or a debt-to-earnings ratio as used in gainful employment rules). Each has advantages and disadvantages. Program-level cost of attendance estimates impose additional reporting burdens on institutions and don’t include the ongoing costs of loan interest. Debt-to-earnings ratios use more easily available data (and are already used for gainful employment) but only for borrowers and require complicated amortization decisions about what repayment plans to use.

These seemingly wonky decisions could result in substantially different debt-to-earnings estimates and would result in significant differences in which schools appear on a “low-financial-value” list. While the latest proposed income-driven repayment (IDR) plan is still under construction, the use of IDR plans has increased over time—from 11% to 24% of undergraduate-only borrowers and from 6% to 39% of graduate borrowers between 2010 and 2017. Under the proposed IDR plan, many students would have zero expected monthly payments, which other scholars have flagged would also eliminate the utility of the “cohort default rate” accountability measure. Using the standard repayment plan in accountability efforts is likely still the preferred option but would result in programs being flagged for having a higher debt-to-earnings ratio than their graduates actually face given these more affordable repayment options.
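To see why the choice of repayment plan matters, compare a fixed amortized payment with a stylized income-driven payment for the same borrower. This is a sketch under loud assumptions: the loan balance, interest rate, income, protected-income amount, and payment share below are all hypothetical values chosen for illustration, and the income-driven formula is a simplification rather than the terms of any actual or proposed plan.

```python
def standard_monthly_payment(balance: float, annual_rate: float, years: int) -> float:
    """Fixed monthly payment that fully amortizes a loan over `years`."""
    n = years * 12          # number of monthly payments
    r = annual_rate / 12    # monthly interest rate
    if r == 0:
        return balance / n
    return balance * r / (1 - (1 + r) ** -n)


def income_driven_payment(annual_income: float, protected_income: float, share: float) -> float:
    """Stylized income-driven payment: a share of income above a protected amount."""
    return max(0.0, share * (annual_income - protected_income)) / 12


# All inputs are hypothetical, chosen only to illustrate the mechanics.
balance, rate = 30_000, 0.05
income = 27_000

standard = standard_monthly_payment(balance, rate, years=10)
idr = income_driven_payment(income, protected_income=28_710, share=0.05)

monthly_income = income / 12
de_standard = standard / monthly_income  # debt-to-earnings under standard repayment
de_idr = idr / monthly_income            # debt-to-earnings under the stylized IDR formula

print(f"standard: ${standard:,.2f}/month, ratio {de_standard:.1%}")
print(f"income-driven: ${idr:,.2f}/month, ratio {de_idr:.1%}")
```

With these inputs the same borrower shows a double-digit debt-to-earnings ratio under standard repayment and a ratio of zero under the stylized income-driven formula, which is the gap between measured and experienced burden described above.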

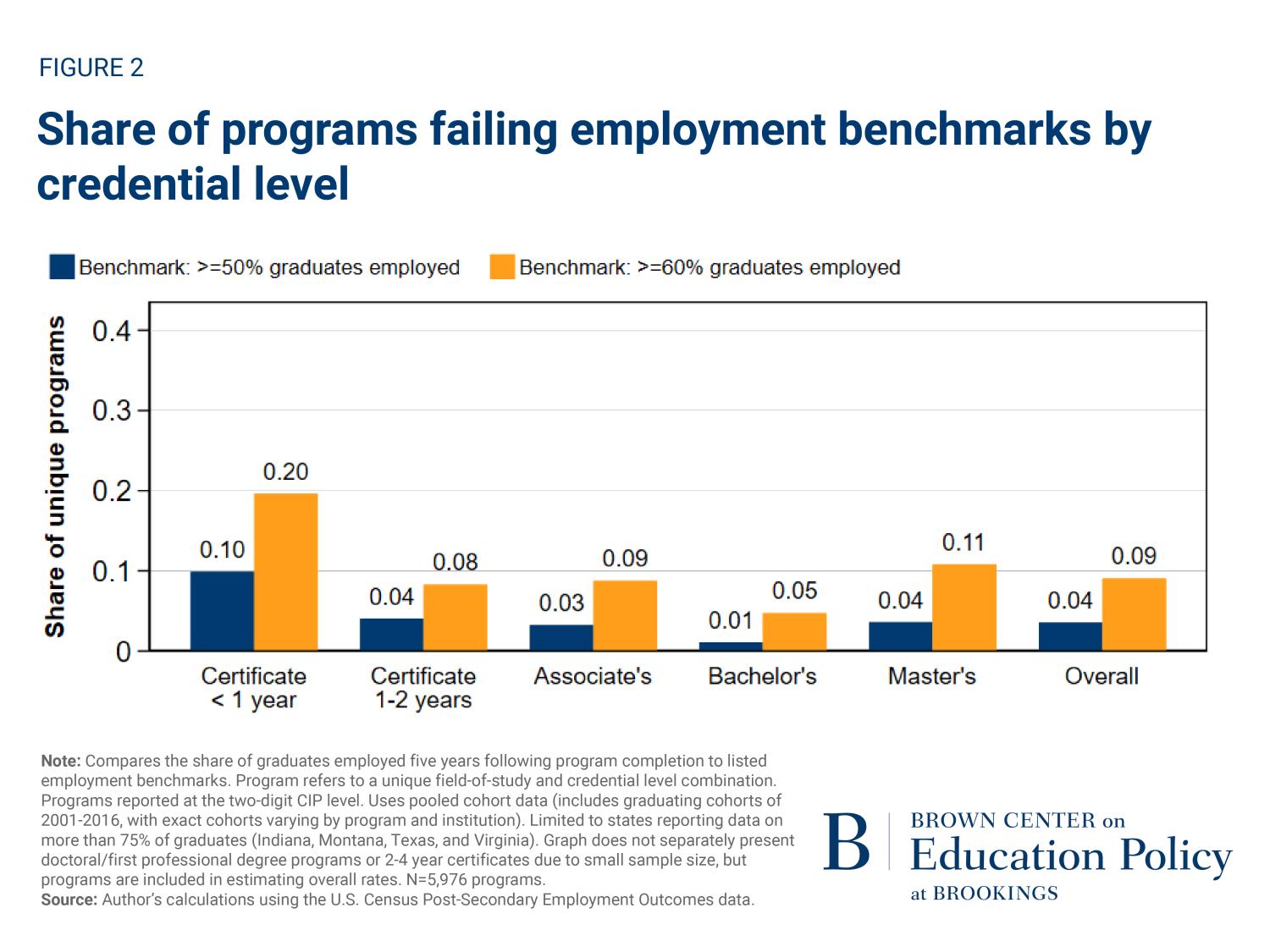

Even after deciding on a repayment plan, there are important decisions to make about acceptable benchmark levels. GE rules offer two potential debt-to-income thresholds: debt comprising 8% to 12% of graduates’ monthly income (dubbed the “warning zone”) and 12% or more of monthly income (the GE failing rate). The College Scorecard reports limited program-level earnings and debt data. Using the latest field-of-study data, I examined the share of programs with at least 40 graduates and with non-suppressed debt and earnings data that failed those thresholds. I also calculated a more lenient benchmark of debt exceeding 20% of monthly income (since prior GE rules measured debt and earnings on a different timeline and sample than College Scorecard).
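The three thresholds can be read as bands on a single ratio of monthly debt payment to monthly income. Here is a minimal sketch of that classification; the function name, the labels, the handling of values exactly on a boundary, and the example figures are my assumptions for illustration, not part of the GE rules or the College Scorecard.

```python
def debt_to_earnings_zone(monthly_debt: float, monthly_income: float) -> str:
    """Classify a program by its ratio of monthly debt payment to monthly income."""
    if monthly_income <= 0:
        raise ValueError("monthly_income must be positive")
    ratio = monthly_debt / monthly_income
    if ratio > 0.20:
        return "fails lenient 20% benchmark"
    if ratio >= 0.12:
        return "fails GE threshold"
    if ratio >= 0.08:
        return "warning zone"
    return "passes"


# Hypothetical programs: (median monthly debt payment, median monthly income).
examples = {
    "Program W": (150, 3_000),  # ratio 5%
    "Program X": (300, 3_000),  # ratio 10%
    "Program Y": (450, 3_000),  # ratio 15%
    "Program Z": (900, 3_000),  # ratio 30%
}
zones = {name: debt_to_earnings_zone(debt, income) for name, (debt, income) in examples.items()}

for name, zone in zones.items():
    print(name, zone)
```

A program that fails the lenient 20% benchmark necessarily fails the 12% GE threshold as well, so the function reports only the most severe band.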

Here I see a reversal in the profile of institutions feeling accountability pressure. While all bachelor’s degree programs produced median earnings above the minimal poverty benchmark (recall Figure 1), Figure 3 shows they are more likely than subbaccalaureate programs to be in the warning zone for debt-to-earnings ratios, with 17% of the programs reporting median debt that exceeds 8% of median graduate earnings. Notably, many graduate-level programs fail even the more lenient benchmark, with 60% of first professional degree programs leaving graduates with monthly debt payments exceeding 20% of earnings. First professional degrees include law, medicine, pharmaceutical science, and veterinary medicine. These programs do produce high earnings but also high debt—though there is variance even within field of study.

In Table 1, I highlight the median income and debt for the three most common professional degree programs, looking separately by whether they pass or fail a 20% debt-to-earnings ratio. There are limitations to this analysis—many programs do not have data available in the College Scorecard. However, coverage is higher for first professional degree programs and the sample for these programs is similar to the number of accredited programs in the U.S. (e.g., my data includes 156 law programs, and the American Bar Association has accredited 199 law programs).

Table 1. Median wages and debt at first professional degree programs

| Program | Debt <20% income: monthly wages | Debt <20% income: monthly debt | Debt <20% income: N programs | Debt >20% income: monthly wages | Debt >20% income: monthly debt | Debt >20% income: N programs | Low vs. high DE programs: wage difference | Low vs. high DE programs: debt difference |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Law | $7,468 | $1,087 | 95 | $5,371 | $1,558 | 61 | $2,097 | $(471) |
| Medicine | $6,174 | $1,059 | 7 | $5,627 | $2,107 | 89 | $547 | $(1,048) |
| Pharmaceutical Science | $9,502 | $1,151 | 67 | $10,564 | $2,441 | 19 | $(1,062) | $(1,290) |

Note: Compares median graduate earnings three years after completing highest credential to the median estimated payment for Stafford and Grad PLUS loan debt disbursed at that institution, for the first professional degree programs with the largest number of programs reporting data. Restricts sample to programs reporting at least 40 graduates to the Integrated Postsecondary Education Data System and those with non-suppressed debt and earnings data. Programs reported at the four-digit CIP level.

Limitations notwithstanding, the table illustrates the different wage and debt profiles that graduates encounter even within the same fields. In law and medicine, programs that pass my lenient debt-to-earnings threshold tend to have both higher wages and lower debt, while in pharmaceutical science the programs that pass the threshold have both lower wages and lower debt. There are many law and pharmaceutical science programs that pass the threshold, while fewer medicine programs do. These graduate-level comparisons are where a “low-financial-value” list could have a significant impact on students’ decision making: students are more likely to be geographically mobile for graduate studies and should know not all programs result in similar levels of financial stability. Further, sharing the raw wage and debt data as I do in Table 1 alongside metrics such as a debt-to-earnings ratio can help students better understand their investment, because students accumulate substantial debt for first professional degrees and a ratio might mask the magnitude of the underlying wage and debt figures.
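The arithmetic behind Table 1 can be reproduced directly from its reported medians. The sketch below recomputes the wage and debt differences and adds two derived figures that are my own illustration rather than part of the table: the ratio of each group's median debt to its median wages, and the share of programs in the lower-debt group. Note the assumption involved: a ratio of group medians is not the same as the median of program-level ratios, so these values are only indicative.

```python
# (monthly wages, monthly debt, number of programs) as reported in Table 1.
table1 = {
    "Law": {"below_20": (7468, 1087, 95), "above_20": (5371, 1558, 61)},
    "Medicine": {"below_20": (6174, 1059, 7), "above_20": (5627, 2107, 89)},
    "Pharmaceutical Science": {"below_20": (9502, 1151, 67), "above_20": (10564, 2441, 19)},
}

summary = {}
for field, groups in table1.items():
    low_wage, low_debt, low_n = groups["below_20"]
    high_wage, high_debt, high_n = groups["above_20"]
    summary[field] = {
        # Differences are lower-debt group minus higher-debt group, as in the table.
        "wage_difference": low_wage - high_wage,
        "debt_difference": low_debt - high_debt,
        # Derived for illustration: ratio of group medians, not a program-level ratio.
        "ratio_below_20": round(low_debt / low_wage, 3),
        "ratio_above_20": round(high_debt / high_wage, 3),
        "share_below_20": round(low_n / (low_n + high_n), 3),
    }

for field, row in summary.items():
    print(field, row)
```

Laying the dollar figures next to the ratios shows why both are worth reporting: pharmaceutical science programs above the 20% line have higher median wages than those below it, a pattern the ratio alone would not reveal.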

To what end? Considerations for List Dissemination and Impact

The Department of Education expects the proposed list of “low-financial-value” programs will provide prospective students with insights into which programs will not “pay off” and which they should be cautious about pursuing. However, evidence from previous Department accountability efforts indicates this list is unlikely to meaningfully affect students’ enrollment decisions. One analysis of the College Affordability and Transparency Center (CATC) lists found no effect on institutional behavior or student application patterns at schools flagged for having large year-over-year increases in costs. When the Department rolled out the College Scorecard, reporting detailed college cost and anticipated earnings information through a well-designed dashboard, researchers found schools with higher reported costs did not experience any change in SAT score submissions, and while schools with higher reported graduate earnings did receive slightly more SAT score submissions, those effects were concentrated among students attending private high schools and high schools with a lower share of students receiving free/reduced price lunch. In other words, the information appeared to primarily benefit students already well positioned to navigate college enrollment decisions.

Insights from behavioral science can inform how the Department can best design and share this information with students in order to steer students to more informed postsecondary enrollment decisions:

- First, information should be proactive. Rather than hoping students will incorporate the “low-financial-value” list into their decision-making, the Department should engage in an outreach campaign to provide this information to students. For example, the Department could mail a copy of the “low-financial-value” list to anyone who files the Free Application for Federal Student Aid (FAFSA).

- Second, information should be personalized, particularly to students’ geography. At minimum, any online display of these programs should be filterable by geography. Ideally, any Department proactive dissemination efforts would customize information by geography as well.

- Third, information should be actionable: students should know what to do with this list. If the Department has specific recommendations on how students should behave based on this information, it should make them clear.

The Department has high hopes for this accountability effort, and it is in its best interest to design and disseminate information in a way that ensures students and families can easily understand it. If the list cannot demonstrate an impact on students’ enrollment decisions, it is unlikely that programs will respond in any meaningful way to “improve” their value.

The capacity for impact

On the surface, measuring the costs and benefits of college may seem to be a straightforward exercise. In practice, doing so requires several nuanced decisions about what to include in that formula. This analysis suggests that a pure “high school equivalency” wage benefit would be more likely to flag credentials and associate degree programs, and that a slightly higher annual wage threshold (a difference of ~$8,000) results in a dramatic increase in the share of programs flagged—going from 3% to 24% of associate degree programs. Few prior accountability efforts have focused on employment rates and doing so would include many more bachelor’s and master’s degree programs on the list. The Department will likely look to gainful employment rules to determine a cost-benefit comparison. The GE debt-to-earnings ratio would flag a smaller share of credential programs relative to just using a high school equivalency benchmark and would flag a substantial share of graduate programs—nearly all first professional degree programs would be in the “warning zone” for typical GE rules. Regardless of the exact metrics the Department selects, if the hope is to affect student enrollment and put pressure on institutions to improve their value, the Department should carefully attend to list design and proactive dissemination.

There is broad bipartisan consensus that the financing of higher education is in dire need of reform. Accountability will necessarily play a role in those reform efforts, though it is unclear to what extent the proposed “low-financial-value” list will provide that accountability. The devil is in the details. Seemingly small decisions about which costs and benefits to include, for whom, and over what timeline matter for the conclusions we draw about higher education outcomes. If done well, this list has the potential to provide useful information to students in a complex college enrollment decision. Researchers, higher education leaders, and legislators have provided their advice to the Department on how to execute this policy, and I am eager to see how the Department incorporates that advice.

The Brookings Institution is committed to quality, independence, and impact.

We are supported by a diverse array of funders. In line with our values and policies, each Brookings publication represents the sole views of its author(s).