On September 29, 2021, the United States and the European Union’s (EU) new Trade and Technology Council (TTC) held their first summit. It took place in the old industrial city of Pittsburgh, Pennsylvania, under the leadership of the European Commission’s Vice-President, Margrethe Vestager, and U.S. Secretary of State Antony Blinken. Following the meeting, the U.S. and the EU declared their opposition to artificial intelligence (AI) that does not respect human rights and referenced rights-infringing systems, such as social scoring systems.1 During the meeting, the TTC clarified that “The United States and European Union have significant concerns that authoritarian governments are piloting social scoring systems with an aim to implement social control at scale. These systems pose threats to fundamental freedoms and the rule of law, including through silencing speech, punishing peaceful assembly and other expressive activities, and reinforcing arbitrary or unlawful surveillance systems.”2

The implicit target of the criticism was China’s “social credit” system, a big data system that uses a wide variety of data inputs to assess a person’s social credit score, which determines social permissions in society, such as buying an air or train ticket.3 The critique by the TTC indicates that the U.S. and the EU disagree with China’s view of how authorities should manage the use of AI and data in society.4 The TTC can therefore be viewed as the beginning steps towards forming an alliance around a human rights-oriented approach to the development of artificial intelligence in democratic countries, which contrasts with authoritarian countries such as Russia and China. However, these different approaches may lead to technological decoupling, conceptualized as national strategic decoupling of otherwise interconnected technologies such as 5G, hardware such as computer chips, and software such as operating systems. Historically, the advent of the world wide web created an opportunity for the world to be interconnected as one global digital ecosystem. Growing mistrust between nations, however, has caused a rise in digital sovereignty, which refers to a nation’s ability to control its digital destiny and may include control over the entire AI supply chain, from data to hardware and software. A consequence of the trend toward greater digital sovereignty—which then drives the trend further—is increasing fear of being cut off from critical digital components such as computer chips and a lack of control over the international flow of citizens’ data. These developments threaten existing forms of interconnectivity, causing markets for high technology to fragment and, to varying degrees, retrench back into the nation state.

To understand the extent to which we are moving towards varying forms of technological decoupling, this article first describes the unique positions of the European Union, United States, and China concerning regulation of data and the governance of artificial intelligence. It then discusses the implications of these different approaches for technological decoupling and for specific AI policies, such as the U.S. Algorithmic Accountability Act, the EU’s AI Act, and China’s regulation of recommender engines.

Europe: A holistic AI governance regime

The EU has, in many ways, been a frontrunner in data regulation and AI governance. The European Union’s General Data Protection Regulation (GDPR), which went into effect in 2018, set a precedent for regulating data. This is seen in how the legislation has inspired other acts, e.g., the California Consumer Privacy Act (CCPA) and China’s Personal Information Protection Law (PIPL). The EU’s AI Act (AIA), which could go into effect by 2024, also constitutes a new and groundbreaking risk-based regulation of artificial intelligence, which, together with the Digital Markets Act (DMA) and Digital Services Act (DSA), creates a holistic approach to how authorities seek to govern the use of AI and information technology in society.

The EU AI Act establishes a horizontal set of rules for developing and using AI-driven products, services, and systems within the EU. The Act is modelled on a risk-based approach that moves from unacceptable risks (e.g., social credit scoring and use of facial recognition technologies for real-time monitoring of public spaces), to high risk (e.g., AI systems used in hiring and credit applications), to limited risk (e.g., a chatbot), to little or no risk (e.g., AI-enabled video games or spam filters). While AI systems that pose unacceptable risks are banned outright, high-risk systems will be subject to conformity assessments, including independent audits and new forms of oversight and control. Limited risk systems are subject to transparency obligations, such as user-facing information when interacting with a chatbot. In contrast, little or no risk systems remain unaffected by the AI Act.5
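The tiered structure described above can be pictured as a simple lookup from risk tier to obligation. The following is a minimal sketch for illustration only: the names `RiskTier`, `OBLIGATIONS`, `EXAMPLE_TIERS`, and `obligations_for` are hypothetical, the tier assignments merely restate the examples given in this article, and nothing here represents a legal classification procedure.

```python
from enum import Enum


class RiskTier(Enum):
    """The four tiers named in the article (labels are illustrative)."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "little or no"


# Consequence attached to each tier, paraphrased from the article.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: "banned outright",
    RiskTier.HIGH: "conformity assessment, independent audit, added oversight",
    RiskTier.LIMITED: "transparency obligations toward users",
    RiskTier.MINIMAL: "unaffected by the Act",
}

# Example use cases taken from the article; a real classification would
# depend on the legal text, not on a lookup table like this one.
EXAMPLE_TIERS = {
    "social credit scoring": RiskTier.UNACCEPTABLE,
    "real-time facial recognition in public spaces": RiskTier.UNACCEPTABLE,
    "hiring system": RiskTier.HIGH,
    "credit application system": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
    "AI-enabled video game": RiskTier.MINIMAL,
}


def obligations_for(use_case: str) -> str:
    """Return a one-line summary of the tier and consequence for a use case."""
    tier = EXAMPLE_TIERS[use_case]
    return f"{use_case}: {tier.value} risk -> {OBLIGATIONS[tier]}"


if __name__ == "__main__":
    for case in EXAMPLE_TIERS:
        print(obligations_for(case))
```

The point of the sketch is that obligations attach to the tier rather than to the individual system, which is what makes the approach horizontal across sectors.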

The EU Digital Markets Act (DMA) attempts, among other things, to ensure that digital platforms that possess so-called gatekeeper functions, in their access to and control of large swaths of consumer data, do not exploit their data monopolies to create unequal market conditions. The implicit goal is to increase (European) innovation, growth, and competitiveness.

Similarly, the EU Digital Services Act (DSA) seeks to give consumers more control over what they see online. This means, for example, better information about why specific content is recommended through recommender engines and the possibility of opting out of recommender-based profiling. The new rules aim to protect users from illegal content and to tackle harmful content, such as political or health-related misinformation. In effect, this carves out new responsibilities for very large platforms and search engines to engage in some forms of content moderation. Gatekeeper platforms are thus considered responsible for mitigating risks such as disinformation or election manipulation, balanced against restrictions on freedom of expression, and are subject to independent audits.

The aim of these new laws is not only to ensure that the rights of EU citizens are upheld in the digital space but also to make sure that European companies have a better opportunity to compete against large U.S. tech firms. One way of doing this is to mandate compatibility requirements between digital products and services. Such compatibility requirements have already required Apple to change its charger standard from 2024 onward,6 and could also require greater interoperability between messaging services such as Apple’s iMessage, Meta’s WhatsApp, Facebook Messenger, Google Chat, and Microsoft Teams.7 While increased interoperability could increase the vulnerability and complexity of security-related issues, instituting such changes would arguably make it harder for companies to secure market share and continue their network-driven forms of dominance.

At the same time, the EU is trying to build ties to U.S. tech companies by opening an office in the heart of Silicon Valley headed by Gerard de Graaf, the European Commission’s director of digital economy, who is expected to establish closer contact with companies such as Apple, Google, and Meta.8 The strategic move by the EU is also going to serve as a mechanism to ensure that American tech companies comply with new European rules such as the AIA, DMA, and DSA.

Concerning semiconductors, European Commission President Ursula von der Leyen announced the European Chips Act in February 2022, intending to make the EU a leader in semiconductor manufacturing.9 By 2030, the European share of global semiconductor production is expected to more than double, increasing from 9% to 20%. The European Chips Act is a response to the U.S. CHIPS and Science Act and China’s ambitions to achieve digital sovereignty through the development of semiconductors. Semiconductors are the cornerstone of all computers and, thus, are integral for developing artificial intelligence. Strategic policies such as the European Chips Act suggest that control over the computer-based part of the AI value chain and the politicization of high-tech development will only become more important in coming years.

The largest tech companies—Apple, Amazon, Google, Microsoft, Alibaba, Baidu, Tencent, and others—are mostly found in the U.S. and China, not in Europe. To address this imbalance, the EU aims to set the regulatory agenda for public governance of the digital space. The new regulations aim to ensure that international companies comply with European rules while strengthening the EU’s resolve to obtain digital sovereignty.

US: A light-touch approach to AI governance

The United States’ approach to artificial intelligence is characterized by the idea that companies, in general, must remain in control of industrial development and governance-related criteria.10 So far, the U.S. federal government has opted for a hands-off approach to governing AI in order to create an environment free of burdensome regulation. The government has repeatedly stated that “burdensome” rules and state regulations often are considered “barriers to innovation,”11,12 which must be reduced, for example, in areas such as autonomous vehicles.

The U.S. also takes a different approach than the EU and China in the area of data regulation. The U.S. has not yet drawn up any national policy on data protection, unlike the EU, where in 2018 the GDPR introduced a harmonized set of rules across the EU. By comparison, only five out of 50 U.S. states (California, Colorado, Connecticut, Utah, and Virginia) have adopted comprehensive data legislation.13 As a result, California’s Consumer Privacy Act (CCPA), effective in 2020, has, to some extent, become the U.S.’s de facto data regulation.14 The GDPR in many ways served as a model for CCPA, which requires companies to give consumers increased privacy rights, including the right to access and delete any personal data, the right to opt out of having data sold, and the right to be free from online discrimination.

Section 230 of the Communications Decency Act protects platforms from liability for content posted. Under current law, liability for content remains with users who post it.15 In part due to this focus on users rather than platforms, in the U.S. there is little oversight of recommender engines that rank, organize, and determine the visibility of information across search engines and social media platforms. Content moderation is a thorny issue, however. On the one hand, there is an argument to be made for platforms to engage in content moderation to avoid overly discriminatory and harmful behavior online. On the other hand, states such as Texas and Florida, among others, are passing laws prohibiting tech companies from “censoring” users, which are enacted to protect their constituents’ rights to free speech.16 The counterargument made by platforms is that their content moderation decisions, as well as their use of recommender engines, are a form of expression that should be protected by the First Amendment, which defends American citizens and companies from government restraints on speech.17

While the United States takes a laissez-faire approach to regulating artificial intelligence, which tends to be fragmented at the state level, new industrial policy initiatives are aimed explicitly at strengthening certain aspects of the AI supply chain. One example is the CHIPS and Science Act, where Democrats and Republicans have come together to create new incentives for producing semiconductors on American soil.18 Based on the idea of digital sovereignty, the CHIPS and Science Act marks a shift in U.S. industrial policy to address renewed concerns over maintaining U.S. technological leadership in the face of fast-growing competition from China.

When it comes to using artificial intelligence in the public sector, the United States has experienced significant opposition from civil society, especially to law enforcement’s use of facial recognition technologies (FRT), for example, from the American Civil Liberties Union (ACLU).19 Again, the U.S. approach has been fragmented. Several cities—such as Boston, Minneapolis, San Francisco, Oakland, and Portland—have banned government agencies, including the police, from using FRT. “It does not work. African Americans are 5-10 times more likely to be misidentified,” said Alameda Council member John Knox White, who helped ban facial recognition in Oakland in 2019.20

In the United States, a March 2021 report by the country’s National Security Commission on Artificial Intelligence (NSCAI) defined the “AI race” (between China and the United States) as a value-based competition in which China must be seen as a direct competitor.21 In the report, NSCAI went further and recommended creating so-called “choke points” that limit Chinese access to American semiconductors to stall progress in some areas of technological development.22 Some of these “choke points” were seen in August 2022, when the U.S. Department of Commerce banned Nvidia from selling its A100, A100X, and H100 computer graphics processing units (GPUs) to customers in China, in a move intended to slow China’s progress in semiconductor development and prevent advanced chips from being used for military applications in China. The Department of Commerce justified the move by saying it was meant to “keep advanced technologies out of the wrong hands,” while Nvidia has signaled that it will have serious consequences for its global sales of semiconductors.23

Over the years, however, many Chinese researchers have contributed to important breakthroughs in AI-related research in the United States. U.S. corporate research labs such as Microsoft Research Asia (MSRA), headquartered in Beijing, have also played a crucial role in nurturing Chinese talent in AI. Several former MSRA researchers have gone on to spearhead China’s technological development in leading companies such as Baidu.24 Against the background of growing mistrust between the United States and China, these forms of cooperation are suffering, prompting a rethinking of existing ties in areas of technological collaboration.

Over the long run, ongoing technological decoupling could contribute to a bifurcation of digital ecosystems. The Bureau of Industry and Security’s (BIS) Entity List arguably contributes to these developments by restricting listed entities from doing business with U.S. enterprises. In terms of software, this is already happening. Google, for example, stopped providing access to its Android operating system (OS) to Huawei after the company was placed on the Entity List. Huawei’s smartphone sales subsequently plummeted on international markets due to a sudden lack of access to the Android OS and app store, which hurt interoperability between hardware, apps, and services.25 As a result, Huawei has doubled down on developing its own proprietary operating system, HarmonyOS, for use across its products.26

In terms of AI-related regulation, the U.S. Algorithmic Accountability Act was reintroduced in 2022, but it has not been approved in either the Senate or the House of Representatives, where it was first introduced in 2019. Should the Act be passed, it would require companies that develop, sell, and use automated systems to be subject to new rules related to transparency and when and how AI systems are used. In the absence of national legislation, some states and cities have started to implement their own regulations, such as New York City’s Law on Automated Employment Decision Tools. The law stipulates that any automated hiring system used on or after January 1, 2023, in NYC, must undergo a bias audit consisting of an impartial evaluation by an independent auditor, including testing to assess potential disparate impact on some groups.27
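One common way to test an automated hiring tool for disparate impact is to compare selection rates across groups. The sketch below is illustrative only: the function names and sample counts are hypothetical, and the 0.8 threshold follows the four-fifths rule of thumb used in U.S. employment contexts, which is an assumption here rather than a requirement this article attributes to the New York City law.

```python
def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Map each group to selected / total, given (selected, total) counts."""
    rates = {}
    for group, (selected, total) in outcomes.items():
        if total <= 0:
            raise ValueError(f"group {group!r} has no applicants")
        rates[group] = selected / total
    return rates


def impact_ratios(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Divide each group's selection rate by the highest group's rate."""
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no group has any selections")
    return {group: rate / highest for group, rate in rates.items()}


def flagged_groups(outcomes: dict[str, tuple[int, int]],
                   threshold: float = 0.8) -> list[str]:
    """Return groups whose impact ratio falls below the (assumed) threshold."""
    ratios = impact_ratios(outcomes)
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


if __name__ == "__main__":
    # Hypothetical counts: (candidates selected, candidates assessed).
    sample = {"group_a": (50, 100), "group_b": (30, 100)}
    print(impact_ratios(sample))
    print(flagged_groups(sample))
```

A ratio well below 1.0 for a group does not by itself establish discrimination; it signals where an independent auditor would look more closely.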

China: A budding AI governance regime

China’s approach to AI legislation is evolving rapidly and is heavily based on central government guidance. The implementation of China’s national AI strategy28 in 2017 was a crucial step in moving the country from a lax governance regime to establishing stricter enforcement mechanisms across data and algorithmic oversight. In 2021, China implemented the Personal Information Protection Law (PIPL), a national data regulation inspired by the GDPR. PIPL entails that companies operating in China must classify and store their data locally within the country, an element critical in establishing digital sovereignty. Under the law, companies that process data categorized as “sensitive personal information” must seek separate consent from these individuals, state why they process this data, and explain any effects of data-related decision-making. Like the GDPR, PIPL gives China’s consumers increased rights while companies have become subject to stricter national oversight and data-related controls, enhancing trust in the digital economy.

In terms of AI regulation, China oversees recommender engines through the “Internet Information Service Algorithmic Recommendation Management Provisions,”29 which went into effect in March 2022, the first regulation of its kind worldwide. The law gives users new rights, including the ability to opt out of using recommendation algorithms and delete user data. It also creates higher transparency regarding where and how recommender engines are used. The regulation goes further, however, with its content moderation provisions, which require private companies to actively promote “positive” information that follows the official line of the Communist Party. It includes promoting patriotic, family-friendly content and focusing on positive stories aligned with the party’s core values.30 Extravagance, over-consumption, antisocial behavior, excessive interest in celebrities, and political activism are subject to stricter control: Platforms are expected to intervene actively and regulate this behavior.31 Therefore, China’s regulation of recommendation algorithms goes far beyond the digital space by dictating what type of behavior China’s central government considers favorable or not in society.

Unlike in the United States, Chinese regulations put the responsibility on private companies to moderate, ban, or promote certain types of content. However, China’s regulation of recommender engines can be complicated, both for companies to implement and for regulators to enforce, because the law often may be interpreted arbitrarily.32 The regulation could further accelerate the decoupling of practices for companies operating both in China and in international markets.

In terms of innovation, China’s central government has strengthened private partnerships with China’s leading technology companies. Several private companies, including Baidu, Alibaba, Huawei, and SenseTime, among others, have been elevated to “national champions” or informally to members of China’s “national AI team”33 responsible for strengthening China’s AI ecosystem.34

The result is that technology giants such as Baidu, Alibaba, and others have moved into the upper echelons of China’s centrally planned economy. And precisely because of these companies’ importance to the social and economic development of the country, the government is bringing them closer to the long-term strategic goals of the Communist Party. These developments include experimenting with mixed forms of ownership, for example where government agencies acquire minority stakes in private companies through state-run private equity funds and then fill board seats with members of the Communist Party.35 Other measures include banning sectors that do not live up to the Party’s long-term priorities. One of these was China’s for-profit educational technology sector, which was banned in 2021 because the party wanted to curb inequality in education.36

In China, the state is playing a central and growing role in adopting facial recognition technologies to monitor public spaces. According to Chinese government estimates, up to 626 million facial recognition cameras were installed in the country by 2020.37 Huge public sector demand has, not surprisingly, contributed to making China a world leader in developing AI related to facial recognition. Meanwhile, pushback by civil society continues to play a marginal role in China compared to the United States, which makes it more difficult for the population to question the government’s use of AI in society.

While the U.S. and the EU have only recently launched new initiatives and industrial policies explicitly aimed at semiconductors, China has long nurtured its chip industry. In 2014, for instance, the National Integrated Circuit Industry Investment Fund was established to make China a world leader in all segments of the chip supply chain by 2030.38 While China still lags far behind the U.S. in semiconductor development, it is an area of the AI value chain that receives continued attention from China’s central government, as it is critical for the country’s ambitions of achieving AI leadership by 2030.

Regarding how AI intersects with social values, China’s latest five-year plan states that technological development aims to promote social stability.39 Artificial intelligence should therefore be seen as a social control tool in “the great transformation of the Chinese nation,”40 which implies maintaining a balance between social control and innovation.41

The desire for self-sufficiency

The ideological differences between the three great powers could have broader geopolitical consequences for managing AI and information technology in the years to come. Control over strategic resources, such as data, software, and hardware, has become paramount to decisionmakers in the United States, the European Union, and China, resulting in a neo-mercantilist-like approach to governance of the digital space. Resurfacing neo-mercantilist ideas are most visible in the ways that trade in semiconductors is being curtailed, but they are also apparent in discussions over international data transfers, resources linked to cloud computing, the use of open-source software, and so on. These developments seem to increase fragmentation, mistrust, and geopolitical competition, as we have seen in the case of communication technologies such as 5G. The United States, Canada, the United Kingdom, Australia, and several European countries have excluded Chinese 5G providers, such as Huawei and ZTE, due to growing mistrust about data security and the fear of surveillance of citizens by China’s central government.42

As technological decoupling deepens, China will seek to maintain its goal of achieving self-sufficiency and technical independence, especially from high-tech products originating in the United States. As recently as May 2022, China’s central government ruled that central government agencies and state-subsidized companies must replace computers from foreign-owned manufacturers within two years.43 That includes phasing out Windows OS, which will be replaced by Kylin OS, developed by China’s National University of Defense Technology.

Regarding open-source code repositories such as GitHub (owned by Microsoft), China has also signaled that it seeks to diminish its reliance on foreign-developed open-source software. In 2020, for instance, the Ministry of Industry and Information Technology (MIIT) publicly endorsed Gitee as the country’s domestic alternative to GitHub.44 While the development of leading open-source deep learning frameworks continues to be led by U.S. technology enterprises (e.g., TensorFlow from Google and PyTorch from Meta), Chinese alternatives developed by national champions, such as PaddlePaddle (Baidu) and MindSpore (Huawei), are growing in scope and importance within China. These developments illustrate that achieving self-sufficiency in open-source software, such as deep learning frameworks, is on the political agenda of China’s central government, feeding into its long-term desire for achieving digital sovereignty.

Certain U.S. policies, such as placing a growing number of Chinese companies on the BIS Entity List, will make it more difficult for China’s central government to rely on strategic technical components from the United States as part of the country’s economic growth strategy, thus incentivizing China to continue toward its goal of achieving technological self-sufficiency. These developments mean that previous forms of cooperation across the Pacific, e.g., in terms of academic research and corporate R&D, are quietly diminishing. These developments may complicate the possibilities for finding new international solutions to harmonization of AI use and legislation.

While the U.S. and EU diverge on AI regulation, focused on self-regulation versus comprehensive regulation of the digital space, respectively, they continue to share a fundamental approach to artificial intelligence based on respect for human rights. This approach is now slowly being operationalized to condemn the use of AI for social surveillance and control purposes, as witnessed in China, Russia, and other authoritarian countries. To some extent, “American” and “European” values are evolving into an ideological mechanism that aims to ensure a human rights-centered approach to the role and use of AI.45 Put differently, an alliance is currently forming around a human rights-oriented view of socio-technical governance, which is embraced and encouraged by like-minded democratic nations. This view strongly informs how public sector authorities should relate to and handle the use of AI and information technology in society.

Where are we headed?

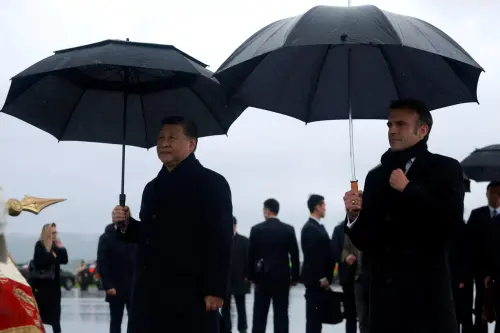

On May 15, 2022, the United States and EU TTC held its second summit, this time in Saclay, a suburb of Paris and one of France’s leading research and business clusters. Secretary Blinken and Vice President Vestager met again to promote transatlantic cooperation and democratic approaches to trade, technology, and security. The meeting ultimately strengthened the strategic relationship across the Atlantic in several specific areas, including engaging in more detailed information exchange on exports of critical technology to authoritarian regimes such as Russia. The United States and the EU will also engage in greater coordination of developing evaluation and measurement tools that contribute to credible AI, risk management, and privacy-enhancing technologies. A Strategic Standardization Information (SSI) mechanism will also be set up to enable greater exchange of information on international technology standards—an area in which China is expanding its influence. In addition, an early warning system is being discussed to better predict and address potential disruptions in the semiconductor supply chain. This discussion includes developing a transatlantic approach to continued investment in long-term security in supply for the EU/U.S. market.46

While the TTC is slowly cementing the importance of the U.S. and the EU’s democratic transatlantic alliance in artificial intelligence, the gap between the U.S. and China seems to widen. The world is, therefore, quietly moving away from a liberal orientation based on global interoperability, while technological development increasingly is entangled in competition between the governments of the United States and China. These developments diminish the prospects for finding international forms of cooperation on AI governance,47 and could contribute to a Balkanization of technological ecosystems. The result, already partially underway, would be the emergence of a “Chinese” network and its digital ecosystem, a U.S. one, and a European one, each with its own rules and governing idiosyncrasies. In the long run, this may mean that it will be much more difficult to agree on how more complicated forms of artificial intelligence should be regulated and governed. At present, the EU and China do seem to agree on taking a more active approach to regulating AI and digital ecosystems relative to the U.S. This could change, however, if the U.S. were to pass the Algorithmic Accountability Act. Like the EU AI Act, the Algorithmic Accountability Act requires organizations to perform impact assessments of their AI systems before and after deployment, including providing more detailed descriptions of data, algorithmic behavior, and forms of oversight.

Should the U.S. choose to adopt the Algorithmic Accountability Act, the regulatory approaches of the EU and the U.S. would be better aligned. Even though regulatory regimes may align over time, the current trajectory of digital fragmentation between the EU and US on one side, and China on the other, is set to continue under the current political climate.

Undoubtedly, AI will continue to revolutionize society in the coming decades. However, it remains uncertain whether the world’s countries can agree on how technology should be implemented for the greatest possible societal benefit. As stronger forms of AI continue to emerge across a wider range of use cases, securing AI alignment at the international level could be one of the most significant challenges of the 21st century.

Footnotes

- U.S.-E.U. Trade and Technology Council. “US-EU Trade and Technology Council Inaugural Joint Statement.” White House Briefing Room Statements and Releases. September 29, 2021. https://www.whitehouse.gov/briefing-room/statements-releases/2021/09/29/u-s-eu-trade-and-technology-council-inaugural-joint-statement/

- Ibid.

- Kuo, L. “China bans 23m from buying travel tickets as part of ‘social credit’ system.” The Guardian. March 1, 2019. https://www.theguardian.com/world/2019/mar/01/china-bans-23m-discredited-citizens-from-buying-travel-tickets-social-credit-system

- AI governance is defined as public and private management of artificial intelligence, from innovation to use and regulation.

- European Commission. “Regulatory framework proposal on artificial intelligence.” https://digital-strategy.ec.europa.eu/en/policies/regulatory-framework-ai

- Guarascio, F. “Apple forced to change charger in Europe as EU approves overhaul.” Reuters. October 4, 2022. https://www.reuters.com/technology/eu-parliament-adopts-rules-common-charger-electronic-devices-2022-10-04/.

- Deutsch, J. “EU Rules on Messaging Apps Raise Alarms on Personal Privacy.” Bloomberg. July 19, 2022. https://www.bloomberg.com/news/articles/2022-07-20/whatsapp-imessage-face-privacy-mess-over-eu-messaging-rules

- Feingold, S. “Why the European Union is opening a Silicon Valley ’embassy’.” World Economic Forum. August 16, 2022. https://www.weforum.org/agenda/2022/08/why-the-european-union-is-opening-a-silicon-valley-embassy/

- European Commission. “Digital sovereignty: Commission proposes Chips Act to confront semiconductor shortages and strengthen Europe’s technological leadership.” Press release on February 8, 2022. https://ec.europa.eu/commission/presscorner/detail/en/IP_22_729

- Cath, C., Wachter, S., Mittelstadt, B., Taddeo, M., and Floridi, L. “Artificial Intelligence and the ‘Good Society’: the US, EU, and UK approach.” Science and Engineering Ethics 24, no. 2: 505–528. https://doi.org/10.1007/s11948-017-9901-7

- The White House Office of Science and Technology Policy. “Summary of the 2018 White House Summit on Artificial Intelligence for American Industry.” May 10, 2018. https://trumpwhitehouse.archives.gov/wp-content/uploads/2018/05/Summary-Report-of-White-House-AI-Summit.pdf

- National Institute for Standards and Technology. “US Leadership In AI: A Plan for Federal Engagement in Developing Technical Standards and Related Tools.” US Department of Commerce. https://www.nist.gov/system/files/documents/2019/08/10/ai_standards_fedengagement_plan_9aug2019.pdf; Vought, R. “Guidance for Regulation of Artificial Intelligence Applications Introduction.” Executive Office of the President, Office Of Management and Budget. November 17, 2020. https://www.whitehouse.gov/wp-content/uploads/2020/11/M-21-06.pdf

- National Conference of State Legislatures. “State Laws Related to Digital Privacy.” July 6, 2022. https://www.ncsl.org/research/telecommunications-and-information-technology/state-laws-related-to-internet-privacy.aspx

- Klosowski, T. “The State of Consumer Data Privacy Laws in the US (And Why It Matters).” New York Times. Wirecutter, September 6, 2021. https://www.nytimes.com/wirecutter/blog/state-of-privacy-laws-in-us/

- Citron, DK, and Wittes, B. “The Problem Isn’t Just Backpage: Revising Section 230 Immunity.” 2 Georgetown Law Technology Review 453, July 2018. https://georgetownlawtechreview.org/the-problem-isnt-just-backpage-revising-section-230-immunity/GLTR-07-2018/

- Lima, C. “Republicans are leaning on the courts to target social media, but the losses are piling up.” Washington Post, May 24, 2022. https://www.washingtonpost.com/politics/2022/05/24/republicans-are-leaning-courts-target-social-media-losses-are-piling-up/

- Oremus, W. “Want to regulate social media? The First Amendment may stand in the way.” Washington Post, May 30, 2022. https://www.washingtonpost.com/technology/2022/05/30/first-amendment-social-media-regulation/

- Kharpal, A. “First 100 days: Biden keeps Trump-era sanctions in tech battle with China, looks to friends for help.” CNBC, April 29, 2021. https://www.cnbc.com/2021/04/29/biden-100-days-china-tech-battle-sees-sanctions-remain-alliances-made.html

- American Civil Liberties Union. “The Fight to Stop Face Recognition Technology.” Accessed November 14, 2022. https://www.aclu.org/news/topic/stopping-face-recognition-surveillance.

- East Bay Citizen. “Alameda approves facial-recognition technology policy ban.” https://ebcitizen.com/2019/12/23/alameda-approves-facial-recognition-technology-policy-ban-will-seek-ordinance/

- National Security Commission on Artificial Intelligence. “Final report.” March 2021. https://www.nscai.gov/wp-content/uploads/2021/03/Full-Report-Digital-1.pdf

- Nellis, S. “US panel recommends export ‘choke points’ to prevent Chinese dominance in semiconductors.” Reuters, March 1, 2021. https://www.reuters.com/article/us-usa-china-semiconductors-idUSKCN2AT39H

- Nellis, S., and L. Lee. “U.S. officials order Nvidia to halt sales of top AI chips to China.” Reuters. September 1, 2022. https://www.reuters.com/technology/nvidia-says-us-has-imposed-new-license-requirement-future-exports-china-2022-08-31/

- Kaye, K. “Microsoft helped build AI in China. Chinese AI helped build Microsoft.” Protocol. November 2, 2022. https://www.protocol.com/enterprise/us-china-ai-microsoft-research

- Kharpal, A. “From No. 1 to No. 6, Huawei smartphone shipments plunge 41% as U.S. sanctions bite.” CNBC. January 28, 2021. https://www.cnbc.com/2021/01/28/huawei-q4-smartphone-shipments-plunge-41percent-as-us-sanctions-bite.html

- Zhou, W. “Harmony OS 3 is Huawei’s latest move in the building of a ‘walled garden’ ecosystem to rival Apple’s.” Technode. July 28, 2022. https://technode.com/2022/07/28/harmony-os-3-is-huaweis-latest-move-in-the-building-of-a-walled-garden-ecosystem-to-rival-apples/

- Crowell. “New York City Issues Proposed Regulations on Law Governing Automated Employment Decision Tools.” October 14, 2022. https://www.crowell.com/NewsEvents/AlertsNewsletters/all/New-York-City-Issues-Proposed-Regulations-on-Law-Governing-Automated-Employment-Decision-Tools#:~:text=October%2014%2C%202022&text=Local%20Law%20144%2C%20which%20is,the%20use%20of%20such%20tool.

- Webster, G, R. Creemers, E. Kania, and P. Triolo. “Full Translation: China’s ‘New Generation Artificial Intelligence Development Plan,’” DigiChina, Stanford University. https://digichina.stanford.edu/work/full-translation-chinas-new-generation-artificial-intelligence-development-plan-2017/

- Creemers, R., G. Webster, and H. Toner. “Translation: Internet Information Service Algorithmic Recommendation Management Provisions – Effective March 1, 2022.” DigiChina. Stanford University, January 10, 2022. https://digichina.stanford.edu/work/translation-internet-information-service-algorithmic-recommendation-management-provisions-effective-march-1-2022/

- Huld, A. “China’s Sweeping Recommendation Algorithm Regulations in Effect from March 1.” China Briefing. January 6, 2022. https://www.china-briefing.com/news/china-passes-sweeping-recommendation-algorithm-regulations-effect-march-1-2022/

- Ibid.

- Ibid.

- Jing, M., and S. Dai. “China recruits Baidu, Alibaba and Tencent to AI ‘national team’.” South China Morning Post. November 21, 2017. https://www.scmp.com/tech/china-tech/article/2120913/china-recruits-baidu-alibaba-and-tencent-ai-national-team

- Larsen, B. C. “Drafting China’s National AI Team for Governance.” New America. November 18, 2019. https://www.newamerica.org/cybersecurity-initiative/digichina/blog/drafting-chinas-national-ai-team-governance/

- Yang, Y., and B. Goh. “Beijing took stake and board seat in key ByteDance domestic entity this year.” Reuters. August 17, 2021. https://www.reuters.com/world/china/beijing-owns-stakes-bytedance-weibo-domestic-entities-records-show-2021-08-17/

- Koty, AC. “More Regulatory Clarity After China Bans For-Profit Tutoring in Core Education.” China Briefing. September 27, 2021. https://www.china-briefing.com/news/china-bans-for-profit-tutoring-in-core-education-releases-guidelines-online-businesses/

- Keegan, M. “Big Brother is watching: Chinese city with 2.6m cameras is world’s most heavily surveilled.” The Guardian. December 2, 2019. https://www.theguardian.com/cities/2019/dec/02/big-brother-is-watching-chinese-city-with-26m-cameras-is-worlds-most-heavily-surveilled

- Tao, L. “How China’s ‘Big Fund’ is helping the country catch up in the global semiconductor race.” South China Morning Post. May 10, 2018. https://www.scmp.com/tech/enterprises/article/2145422/how-chinas-big-fund-helping-country-catch-global-semiconductor-race

- Webster G, R. Creemers, E. Kania, P. Triolo. “Full Translation: China’s ‘New Generation Artificial Intelligence Development Plan,’” DigiChina, Stanford University. August 1, 2017. https://digichina.stanford.edu/work/full-translation-chinas-new-generation-artificial-intelligence-development-plan-2017/

- Ibid.

- Hine, E., and Floridi, L. “Artificial Intelligence with American Values and Chinese Characteristics: A Comparative Analysis of American and Chinese Governmental AI Policies.” AI & Society. June 25, 2022. https://doi.org/10.1007/s00146-022-01499-8

- Sevastopulo D., E. White, and J. Kerr. “Canada to ban Chinese telecoms Huawei and ZTE from 5G networks.” Financial Times. May 19, 2022. https://www.ft.com/content/2534ca85-b08e-4f78-88f3-c04770b41a02 Wiley Law. “Biden Signs Secure Equipment Act, Requires FCC to Ban Covered Chinese Communications Equipment from Obtaining Equipment Authorizations.” November 15, 2021. https://www.wiley.law/alert-Biden-Signs-Secure-Equipment-Act-Requires-FCC-to-Ban-Covered-Chinese-Communications-Equipment-from-Obtaining-Equipment-Authorizations BBC News. “Huawei and ZTE handed 5G network ban in Australia.” August 23, 2018. https://www.bbc.com/news/technology-45281495 BBC News. “Huawei ban: UK to impose early end to use of new 5G kit.” November 30, 2020. https://www.bbc.com/news/business-55124236 Noyan, O. “EU countries keep different approaches to Huawei on 5G rollout.” Euractiv. May 20, 2021. https://www.euractiv.com/section/digital/news/eu-countries-keep-different-approaches-to-huawei-on-5g-rollout/

- Yanping L., Yuan G. “China Orders Government, State Firms to Dump Foreign PCs.” Bloomberg. May 5, 2022. https://www.bloomberg.com/news/articles/2022-05-06/china-orders-government-state-firms-to-dump-foreign-pcs

- Liao, R. “China is building a GitHub alternative called Gitee.” TechCrunch. August 21, 2020. https://techcrunch.com/2020/08/21/china-is-building-its-github-alternative-gitee/

- Hine, E., and Floridi, L. “Artificial Intelligence with American Values and Chinese Characteristics: A Comparative Analysis of American and Chinese Governmental AI Policies.” AI & Soc (2022). https://doi.org/10.1007/s00146-022-01499-8

- The White House. “FACT SHEET: U.S.-EU Trade and Technology Council Establishes Economic and Technology Policies & Initiatives.” White House Briefing Room Statements and Releases. May 16, 2022. https://www.whitehouse.gov/briefing-room/statements-releases/2022/05/16/fact-sheet-u-s-eu-trade-and-technology-council-establishes-economic-and-technology-policies-initiatives/

- Larsen, B. C. “Governing Artificial Intelligence: Lessons from the United States and China.” PhD diss., Copenhagen Business School, 2022. https://research.cbs.dk/en/publications/governing-artificial-intelligence-lessons-from-the-united-states-