Companies increasingly rely on an extended workforce (e.g., contractors, gig workers, professional service firms, complementor organizations, and technologies such as algorithmic management and artificial intelligence) to achieve strategic goals and objectives.1 When we ask leaders to describe how they define their workforce today, they mention a diverse array of participants, beyond just full- and part-time employees, all contributing in various ways. Many of these leaders observe that their extended workforce now comprises 30-50% of their entire workforce. For example, Novartis has approximately 100,000 employees and counts more than 50,000 other workers as external contributors.2 Businesses are also increasingly using crowdsourcing platforms to engage external participants in the development of products and services.3,4 Managers are thinking about their workforce in terms of who contributes to outcomes, not just in terms of workers’ employment arrangements.5

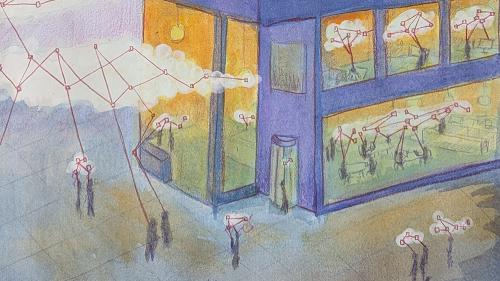

Our ongoing research on workforce ecosystems demonstrates that managing work across organizational boundaries with groups of interdependent actors in a variety of employment relationships creates new opportunities and risks for both workers and businesses.6 These are not subtle shifts. We define a workforce ecosystem as:7

A structure that encompasses actors, from within the organization and beyond, working to create value for an organization. Within the ecosystem, actors work toward individual and collective goals with interdependencies and complementarities among the participants.

The emergence of workforce ecosystems has implications for management theory, organizational behavior, social welfare, and public policy. In particular, issues surrounding work and worker flexibility, equity, and data governance and transparency pose substantial opportunities for policymaking.

At the same time, artificial intelligence (AI)—which we define broadly to include machine learning and algorithmic management—is playing an increasingly large role within the corporate context. The widespread use of AI is already displacing workers through automation, augmenting human performance at work, and creating new job categories.

What’s more, AI is enabling, driving, and accelerating the emergence of workforce ecosystems. Workforce ecosystems are incorporating human-AI collaboration on both physical and cognitive tasks and introducing new dependencies among managers, employees, contingent workers, other service providers, and AI.

Clearly, policy needs to consider how AI-based automation will affect workers and the labor market more broadly. However, focusing only on the effects of automation without considering the impact of AI on organizational and governance structures understates the extent to which AI is already influencing work, workers, and the practice of management. Policy discussions also need to consider the implications of human-AI collaborations and AI that enhances human performance (such as generative AI tools). Policymakers require a much more nuanced and comprehensive view of the dynamic relationship between workforce ecosystems and AI. To that end, this policy brief presents a framework that addresses the convergence of AI and workforce ecosystems.

Within workforce ecosystems, the use of AI is changing the design of work, the supply of labor, the conduct of work, and the measurement of work and workers. Examining AI-related shifts in four categories—Designing Work, Supplying Workers, Conducting Work, and Measuring Work and Workers—reveals a variety of policy implications. We explore these policy considerations, highlighting themes of flexibility, equity, and data governance and transparency. Furthermore, we offer a broad view of how a shift toward workforce ecosystems and the increasing use of AI is influencing the future of work.

AI and Workforce Ecosystems: A Framework

Workforce ecosystems consist of workforce participants inside and outside organizations crossing all organizational levels and functions and spanning all product and service development and delivery phases. Strikingly, AI usage within workforce ecosystems is increasing and simultaneously accelerating their emergence and growth. The increasing shift toward workforce ecosystems creates new opportunities to leverage AI, and the increased use of AI further amplifies the move toward workforce ecosystems.

In this brief, we present a typology to better understand the interaction between the continuing emergence of AI and the ongoing evolution of workforce ecosystems. With this framework, we aim to assist policymakers in making sense of changes accompanying AI’s growth. The typology includes four categories highlighting four areas in which AI is impacting workforce ecosystems: Designing Work, Supplying Workers, Conducting Work, and Measuring Work and Workers. Each of the four categories suggests distinct (if related) policy implications.

One overarching implication of this discussion is that policy for work-related AI applications is not limited to addressing automation. Despite the clear need for policy to consider implications arising from the use of AI to automate jobs and displace workers, it is insufficient to focus policy discussions only on automation and not fully consider changes in which human work is augmented by AI and in which humans and AI collaborate. Discussions omitting these factors run the risk of understating the current and future influence of AI on work, workers, and the practice of management.

Policy related to AI in workforce ecosystems should balance workers’ interests in sustainable and decent jobs with employers’ interests in productivity and economic growth. If such policy is designed well, there is tremendous potential to leverage AI to improve working conditions, worker safety, and worker mobility/flexibility, and to work more collectively and intelligently.8 The goal of these policy refinements should be to allow businesses to meet competitive challenges while limiting the risk of dehumanizing workers, discrimination, and inequality. Policy can offer incentives to limit the use of AI in low value-added contexts, such as for automation of work with small efficiency gains, while promoting higher value-added uses of AI that increase economic productivity and employment growth.9

Designing Work

The growing use of AI has a profound effect on work design in workforce ecosystems. A greater supply of AI affects how organizations design work while changes in work design drive greater demand for AI. For example, modern food delivery platforms like GrubHub and DoorDash use AI for sophisticated scheduling, matching, rating, and routing, which has essentially redesigned work within the food delivery industry. Without AI, such crowd-based work designs would not be possible. These technologies and their impact on work design reach beyond food delivery into other supply chains wherever complex delivery systems exist. Similarly, AI-driven tools enable larger, flatter, more integrated teams because entities can coordinate and collaborate more effectively. For workforce ecosystems, this means organizations can more seamlessly integrate external workers, partner organizations, and employees as they strive to meet strategic goals.

On the flip side, changes in work design drive increasing demand for AI. For example, as jobs are disaggregated into tasks and work becomes more modular and/or project-based, algorithms can help humans become more effective.10 As companies refine their approach to designing work, they gain access to more data (e.g., in medical research and marketing analytics) and AI becomes even more valuable.

Policy concerns associated with U.S. business’s increasing reliance on contingent labor date back at least to the 1994 Dunlop Commission.11 Companies do not want to overcommit to hiring full-time workers with skills that will soon become obsolete and thus prefer to rely on contingent labor in many cases. They design work for maximum flexibility and productivity but not necessarily for maximum economic security for workers.12 The shift in employment away from (full- and part-time) payroll to more flexible categories (e.g., contingent workers such as long-term contractors or short-term gig workers) tends to increase the income and wealth gap between workers in full- and part-time employed positions and those in contracted roles by affecting what leverage and protections are available for various classes of workers.13

Notably, contingent work has a direct relationship with “precarious work.” Precarious work has been defined as work that is “uncertain, unstable, and insecure and in which employees bear the risks of work […] and receive limited social benefits and statutory protections.”14 This is likely to affect workers of different skills in different ways, leading not only to income and wealth inequality but also to human capital inequality as workers with different skill levels have more or less control over their wages. For example, a highly skilled data scientist may command a premium and may work for more than one client. In the shipping industry, most of the workers who maintain and operate commercial vessels are contractors, but they are less likely to command a premium or to be able to offer their services to multiple clients. Flexible, platform-based work arrangements can result in precarious work arrangements for some workers while giving flexibility, higher wages, and the ability to hyper-specialize to others. This creates human capital inequality. The difference may depend on already existing discrepancies like class, race, and gender, and thus further amplify income and wealth inequality.

The growing sophistication of AI makes it easier for managers to source, vet, and hire contingent labor. This new role for AI enables managers to design work in new ways. Instead of focusing on hiring employees and filling in skill gaps with full-time labor, managers are increasingly turning to external talent markets and staffing platforms as a source of shorter-term, skills-based engagements to achieve outcomes. Managers can disaggregate existing jobs into component tasks and then use AI to access external contributors with specific skills to accomplish those tasks.

Policy considerations for designing work

These changes in work design affect policies for tax, labor, and technology. Federal and state governments should consider developing more inclusive and flexible policies that support all kinds of employment models so workers receive equal protection and benefits based on the value they create, not the employment status they hold. If workers are to be afforded protections that ensure sustainable, safe, and healthy work environments, the same protections should be available to all workers regardless of whether they are employees or contingent workers. Unemployment insurance should be modernized to expand eligibility to include workers who do not work (or seek work) full-time and to provide flexible, partial unemployment benefits.

Today, firms themselves may be willing to be more flexible and creative with compensation and benefits schemes, but they sometimes only have limited opportunities to do so because of labor regulation constraints. Modernized unemployment and other labor policies would potentially increase contingent workers’ access to reasonable earning opportunities, social safety nets, and benefits. Beyond unemployment insurance, other benefits including retirement savings contributions, health insurance, and medical, family, and parental leaves are similarly restricted to full-time workers for historical reasons (although the restrictions vary across geographic regions). Policies should be updated to allow portability of benefits between employers and improve access to assistance, which would dampen the income volatility faced by many contingent workers.

Supplying Workers

By using AI to increase the supply of workers of more types (e.g., contractors, gig workers) through improved communication, coordination, and matching, workforce ecosystems can grow more easily, effectively, and efficiently. At the same time, the growth of workforce ecosystems increases the demand for all kinds of workers, leading to more demand for AI to help increase and manage worker supply.

Organizations increasingly require a variety of workers to engage in multiple ways (full-time, part-time, as professional service providers, as long- and short-term contractors, etc.). They can use AI to assist in sourcing these workers, for example, by using both internal and external labor platforms and talent marketplaces to find and match workers more effectively.15 Using AI that includes enhanced matching functions, scheduling, recruiting, planning, and evaluations increases access to a diverse corps of workers. Organizations can use AI to more effectively build workforce ecosystems that both align with specific business needs and help meet diversity goals.

Increasing the use of AI can have both negative and positive consequences for supplying workers. For example, it can perpetuate or reduce bias in hiring.16 Similarly, AI systems can help ensure pay equity (by identifying and correcting gender differences in pay for similar jobs) or contribute to inequity throughout the workforce ecosystem by, for example, amplifying the value of existing skills while reducing the value of other skills.17 In workforce ecosystems where certain skills are becoming more highly valued, AI can efficiently and objectively verify and validate existing skills and find opportunities for workers to gain new skills. However, on the negative side, such public worker evaluations can lead to lasting consequences when errors are introduced into the verification process and workers have little recourse for correcting them.18

While supplying work is distinct from designing work, the boundaries between the two are porous. For example, an organization may redesign a job into modular pieces and then use an AI-powered talent marketplace to source workers to accomplish these smaller jobs. An organization could break one job into 10 discrete tasks and engage 10 people instead of one via an online labor market such as Amazon Mechanical Turk or Upwork.

Further, if an organization can increasingly use AI to effectively source workers (including human and technological workers such as software bots), the organization can design work to leverage a more abundant, diverse, and flexible worker supply. Because organizations can increasingly find people (and partner organizations) to engage for shorter-term, specific assignments, they can more easily build complex and interconnected workforce ecosystems to accomplish business objectives.

Policy considerations for supplying workers

Policy plays multiple roles in AI-enabled workforce ecosystems related to supplying workers. We consider three sets of issues: tax policy favoring capital over labor investment; relatively inflexible existing educational policies associated with training and development; and collective bargaining.

First, policy shapes incentives for automation relative to human labor. Current U.S. tax policy has relatively high taxation of labor and relatively lower taxation of capital, which can favor automation.19 While this can benefit the remaining workers in heavily automated industries, it can provide incentives to organizations to invest in automation technologies that displace human workers. These automation investments are unlikely to be effectively constrained by taxes on robots, however.20 We need policy incentives that actually make investments in human capital and labor more attractive. These could include tax incentives for upskilling and reskilling both employees and external contributors, creating decent jobs programs, or developing programs to calibrate investments in automation and human labor.21

Second, public and private organizations can collaborate more closely on worker training and continuous learning. Organizations can build relationships across communities to provide training, reskilling, and lifelong learning for workers, especially because current regulations in some geographies, including the U.S., preclude organizations from providing training to contractual workers.22 Public-private partnerships can help enable good jobs and fair work arrangements, provide career opportunities to workers, and add economic benefits for employers. Education needs to become more flexible to provide workers with fresh skills beyond, and in some cases in place of, college. AI can be utilized not only to decompose jobs into component tasks but also to provide support for team formation and career management.23 Digital learning and digital credential and reputation systems are likely to play a key role in enabling a more flexible and comprehensive worker supply. All of these measures would support the continued growth and success of workforce ecosystems across industries and economies.

Finally, policymakers should clarify the role that collective bargaining can serve in negotiating issues such as the use of technology, safety, privacy concerns, plans to expand automation, and training and access to training (e.g., paid time off to complete training) among others. Ideally, these benefits can be expanded to include all workers across an ecosystem, not just those in traditional full-time employment.

Conducting Work

In workforce ecosystems, humans and AI work together to create value, with varying levels of interdependency and control over one another. As stated by MIT Professor Thomas Malone:24

People have the most control when machines act only as tools; and machines have successively more control as their roles expand to assistants, peers, and, finally, managers.

Policy should cover the full range of interactions that exist when humans and AI collaborate. Although these categories—assistants, peers, and managers—clearly overlap, each type of working relationship suggests new policy demands for conducting work.

AI-as-Assistant: AI supports individual performance within workforce ecosystems. Businesses are increasingly relying on augmented reality/virtual reality (AR/VR) technologies, for instance, to enhance individual and team performance. These technologies promise to improve worker safety in some workplace environments.25 However, new technologies also promise to allow AI-enabled workplace avatars to interact, bringing very human predilections, both prosocial and antisocial, into digital environments.26

AI-as-Peer: Humans and AI increasingly work together as collaborators in workforce ecosystems, using complementary capabilities to achieve outcomes: 60% of human workers already see AI as a co-worker.27 In hospitals, radiologists and AI work together to develop more accurate radiologic interpretations than either alone could accomplish. At law firms, algorithms are taking over elements of the arduous process of due diligence for mergers and acquisitions, analyzing thousands of documents for relevant terms, freeing associates to focus on higher-value assignments.28

AI-as-Manager: AI is already being used to direct a wide range of human behaviors in the workplace, deciding, for example, who to hire, promote, or reassign. Uber uses algorithms to assign and schedule rides, set wages, and track performance, and AI may direct a warehouse worker’s hand movement with haptic feedback based on motion sensors. AI is also being used in surveillance applications, which can be considered a form of supervision or management.29

Policy considerations for conducting work

To address issues related to AI as an assistant or peer, the U.S. needs regulation for workplace safety when humans collaborate with AI agents and robots. These regulations will likely cut across existing government regulatory structures. For example, if AI assistants or robots on a factory floor need to meet cybersecurity requirements to ensure worker safety, are these standards set by the Occupational Safety and Health Administration (OSHA) or some other body? In OSHA’s A-Z website index, there is currently no mention of cybersecurity.

A key issue with AI-as-manager is that AI decisions may appear opaque and confusing, leaving workers guessing about how and why certain decisions were made and what they can do when bad data skew decisions. For example, unreasonable passengers may give low marks to rideshare drivers, which in turn adversely affects drivers’ income opportunities. Policymakers could pass rules to increase transparency for workers about how algorithmic management decisions are made. Such rules could force employers and online labor platform businesses to disclose which data is used for which decisions. This would be helpful to counteract the current information asymmetry between platforms and workers.

Finally, policymakers need to consider how existing anti-discrimination rules intended to regulate human decisions can be applied to algorithms and human-AI teams. Currently, algorithm-based discrimination is difficult to verify and prove given the absence of independent reviews and outside audits.30,31 Such audits could help address (and possibly alleviate) unintended consequences when algorithms inadvertently exploit natural human frailties and use flawed data sets. Policymakers could mandate outside audits, establish which data can be used, support research that attempts to assess algorithmic properties, promote research on both algorithmic fairness and machine learning algorithms with provable attributes, and analyze the economic impact of human and AI collaboration. Additionally, policies seeking to reduce discrimination may need to wrestle with which bias, a human’s or an algorithm’s, is the most important to minimize.

Measuring Work and Workers

Firms are increasingly using AI to measure behaviors and performance that were once impossible to track. Advanced measurement techniques have the potential to generate efficiency gains and improve conditions for workers, but they also risk dehumanizing workers and increasing discrimination in the workplace. AI’s ability to reduce the cost of data collection and analysis has greatly expanded the range of possible monitoring to include location, movement, biometrics, and affect, as well as verbal and non-verbal communication. For example, AI can predict mood, personality, and emergent leadership in group meetings.32 Workers may experience such tools as intrusive even if the monitoring itself is lawful and even if workers do not directly experience the surveillance.

At the same time, workers can use newly available AI systems to assess their performance in real-time and prescribe efficient actions, balance stress, and improve performance.33 Fine-grained, real-time measures may be particularly useful because they can improve processes that support collective intelligence.34 For example, AI that detects emotional shifts on phone calls may enable pharmacists to deal more effectively with customer aggravations;35 biometric sensors for workers in physical jobs can detect strenuous movements and reduce the risk of injury.36 Workers may welcome AI that augments performance and improves safety. On the other hand, a firm’s desire to utilize AI for work and worker measurement poses a risk of treating workers more like machines than humans and introducing AI-based discrimination.

Policy considerations for measuring work and workers

Policymakers need to recognize that AI is changing the nature of surveillance beyond the regulatory scope of the Electronic Communications Privacy Act of 1986 (ECPA), which is the only federal law that directly governs the monitoring of electronic communications in the workplace.37 Surveillance affects not only traditional employees but also contingent workers participating in workforce ecosystems. And, in many cases, contracted workers may be subject to more, and more intrusive, monitoring than other workers, especially when working in remote locations. Three specific areas stand out as particularly relevant.

Transparency: To ensure decent work, data transparency is especially crucial as tracking workers (both inside a physical location and also digitally for remote workers) can be disrespectful and violate their privacy. Currently, it is rarely clear to workers what types of data are being used to measure their performance and determine compensation and task assignment. Stories abound in which workers try to game the system by figuring out how to get the most lucrative assignments.38 Policymakers need to establish legitimate purposes for data collection and use as well as guidelines for how these need to be shared with workers. They must address the risks of invasive work surveillance and discriminatory practices resulting from algorithmic management and AI systems. Guidelines for data security, privacy, ownership, sharing, and transparency should be much more specifically addressed across regulatory environments.

AI Bias: Bias in algorithmic management within traditional organizations and workforce ecosystems can arise from three sources: (a) data that is used to train AI that may include human biases; (b) biased decisionmaking by software developers (who may reflect a narrow portion of the population); and (c) AI that is too rigid to detect situations in which different behavior is warranted (e.g., swerving to avoid a pothole may indicate attentive rather than inattentive driving). To further complicate matters, AI itself can develop software, which might introduce other biases.

Equity: Employment arrangements become increasingly flexible and fluid in workforce ecosystems, and worker employment status can determine the type of monitoring. Contingent workers in a workforce ecosystem, for example, might be monitored in ways that employees performing similar tasks would not be. Similar inequities exist even among employees. For instance, with the growth of remote work, various types of monitoring of all employees seem to be on the rise; however, employees working from home may be subject to surveillance different from that applied to those in the office.39 Indeed, the threat of surveillance can be used to encourage a return to the workplace. Aside from the question of whether organizational culture can benefit from a threat-induced return to work, there is a substantive question about whether businesses should be allowed to selectively protect or exploit privacy among employees performing similar jobs. To address possible discriminatory practices, policymakers need to establish rules for legitimate data collection and use and for equitable protections of privacy in different work arrangements. At the same time, those policies need to be carefully balanced against the need for work and worker flexibility, innovation, and economic growth.

Conclusion

Corporate uses of AI are transforming the design and conduct of work, the supply of labor, and the measurement of work and workers. At the same time, companies are increasingly dependent on a wide range of actors, employees and beyond, to accomplish work. The intersection of these two trends has more consequential and broad policy implications than automation in the workplace.

Today, many of the protections and benefits workers receive still depend on their classification as an employee versus a contingent worker. We need policies that can:

- anticipate and account for a variety of work arrangements to ensure safety and equity for workers across categories;

- accommodate increasingly novel work arrangements that support and protect all workers;

- balance workers’ desires for decent jobs with organizations’ needs for sustainability and economic growth.

All of this needs to be accomplished while policymakers keep a careful eye on unintended consequences. Both AI technologies and firm practices are developing rapidly, making it difficult to predict which future work arrangements may be most successful in which circumstances. Hence, decisionmakers should strive to develop policies that increase rather than constrain innovation for future work arrangements that benefit both workers and organizations. Policymakers should explicitly allow experimentation and learning while limiting regulatory complexity associated with AI in workforce ecosystems.

Footnotes

- Altman, Elizabeth J., David Kiron, Jeff Schwartz, and Robin Jones. 2021. “The Future of Work Is Through Workforce Ecosystems.” MIT Sloan Management Review. https://sloanreview.mit.edu/article/the-future-of-work-is-through-workforce-ecosystems/

- Altman, Elizabeth J., David Kiron, Robin Jones, and Jeff Schwartz. 2022. “Orchestrating Workforce Ecosystems: Strategically Managing Work Across and Beyond Organizational Boundaries.” MIT Sloan Management Review. https://sloanreview.mit.edu/projects/orchestrating-workforce-ecosystems/

- Riedl, C., and Anita Williams Woolley. 2016. “Teams vs. crowds: A field test of the relative contribution of incentives, member ability, and emergent collaboration to crowd-based problem solving performance.” Academy of Management Discoveries 3, no. 4: 382-403. https://doi.org/10.5465/amd.2015.0097

- Fulker, Z., and Christoph Riedl. 2023. “Who wants to cooperate-and why? Attitude and perception of crowd workers in online labor markets.” arXiv. Working paper. https://doi.org/10.48550/arXiv.2301.08808.

- Altman et al., “The Future of Work Is Through Workforce Ecosystems.”

- Altman, Elizabeth J., David Kiron, Jeff Schwartz, and Robin Jones. 2023. Workforce Ecosystems. Management on the Cutting Edge. Cambridge, MA: MIT Press. https://mitpress.mit.edu/9780262047777/workforce-ecosystems/

- Altman et al., Workforce Ecosystems.

- Westby, S. and Christoph Riedl. 2023. “Collective Intelligence in Human-AI Teams: A Bayesian Theory of Mind Approach.” Proceedings of Thirty-Seventh AAAI Conference on Artificial Intelligence. https://arxiv.org/abs/2208.11660.

- Acemoglu, Daron. 2021. “Harms of AI.” National Bureau of Economic Research Working Paper 29247. September 2021. http://doi.org/10.3386/w29247.

- Jesuthasan, Ravin, and John W. Boudreau. 2022. Work without Jobs: How to Reboot Your Organization’s Work Operating System. Management on the Cutting Edge. Cambridge, MA: MIT Press. https://mitpress.mit.edu/9780262545969/work-without-jobs/

- U.S. Commission on the Future of Worker-Management Relations. 1994. Final Report. https://ecommons.cornell.edu/bitstream/handle/1813/79039/DunlopCommissionFutureWorkerManagementFinalReport.pdf

- Parker, Sharon, Frederick P. Morgeson, and Gary Johns. 2017. “One Hundred Years of Work Design Research: Looking Back and Looking Forward.” Journal of Applied Psychology 102, no. 3: 403-420. https://doi.org/10.1037/apl0000106

- Autor, D., David Mindell, and Elisabeth Reynolds. 2020. “The Work of the Future.” MIT Task Force on the Work of the Future. https://workofthefuture.mit.edu/research-post/the-work-of-the-future-building-better-jobs-in-an-age-of-intelligent-machines/.

- Kalleberg, Arne L., and Steven P. Vallas, eds. 2017. “Probing Precarious Work: Theory, Research, and Politics.” In Precarious Work, 31:1–30. Research in the Sociology of Work. Emerald Publishing Limited. https://doi.org/10.1108/S0277-283320170000031017.

- Schrage, Michael, Jeff Schwartz, David Kiron, Robin Jones, and Natasha Buckley. 2020. “Opportunity Marketplaces: Aligning Workforce Investment and Value Creation in the Digital Enterprise.” MIT Sloan Management Review. https://sloanreview.mit.edu/projects/opportunity-marketplaces/

- Engler, A. 2021. “Auditing employment algorithms for discrimination.” Brookings Institution, March 12, 2021. https://www.brookings.edu/research/auditing-employment-algorithms-for-discrimination/

- Autor, D., David Mindell, and Elisabeth Reynolds. 2020. “The Work of the Future: Building Better Jobs in an Age of Intelligent Machines.” MIT Task Force on the Work of the Future. https://workofthefuture.mit.edu/research-post/the-work-of-the-future-building-better-jobs-in-an-age-of-intelligent-machines/

- Pallais, Amanda. 2014. “Inefficient Hiring in Entry-Level Labor Markets.” American Economic Review 104, no. 11 (November 2014): 3565–99. https://doi.org/10.1257/aer.104.11.3565.

- Acemoglu, Daron, Andrea Manera, and Pascual Restrepo. 2020. “Does the US Tax Code Favor Automation?” Brookings Papers on Economic Activity. March 18, 2020. https://www.brookings.edu/bpea-articles/does-the-u-s-tax-code-favor-automation/.

- Seamans, Robert. 2021. “Tax Not the Robots.” Brookings Institution. August 25, 2021. https://www.brookings.edu/research/tax-not-the-robots/.

- Goldberg, Kalman, Jannett Highfill, and Michael McAsey. 1998. “Technology Choice: The Output and Employment Tradeoff.” The American Journal of Economics and Sociology 57, no. 1 (January 1998): 27-46. https://www.jstor.org/stable/i277647.

- IRS Revenue Ruling 87-41 sets out 20 factors that help determine if there is an employer-employee relationship. These are nicely summarized on the Virginia Employment Commission website. Factor #2 explains that a contractor should not receive training from the purchaser of their services. Additionally, the IRS publishes guidelines related to determining if a person is an employee or a contractor.

- Fulker, Z., and Christoph Riedl. “Who wants to cooperate-and why? Attitude and perception of crowd workers in online labor markets.” Working paper. https://arxiv.org/abs/2301.08808.

- Malone, Thomas W. 2018. “How Human-Computer ‘Superminds’ Are Redefining the Future of Work.” MIT Sloan Management Review, May 21, 2018. https://sloanreview.mit.edu/article/how-human-computer-superminds-are-redefining-the-future-of-work/.

- Park, Chan-Sik, and Hyeon-Jin Kim. “A Framework for Construction Safety Management and Visualization System.” Automation in Construction, Augmented Reality in Architecture, Engineering, and Construction, 33 (August 1, 2013): 95–103. https://doi.org/10.1016/j.autcon.2012.09.012.

- Taylor, Timothy. 2022. “Preventing Sexual Harassment In The Metaverse Workplace.” Law360. May 23, 2022. https://www.law360.com/employment-authority/articles/1494398/preventing-sexual-harassment-in-the-metaverse-workplace

  Shen, M. 2022. “Sexual harassment in the metaverse? Woman alleges rape in virtual world.” USA Today, February 1, 2022. https://www.usatoday.com/story/tech/2022/01/31/woman-allegedly-groped-metaverse/9278578002/

- Ransbotham, Sam, David Kiron, François Candelon, Shervin Khodabandeh, and Michael Chu. 2022. “Achieving Individual — and Organizational — Value With AI.” MIT Sloan Management Review, October 31, 2022. https://sloanreview.mit.edu/projects/achieving-individual-and-organizational-value-with-ai/.

- Ransbotham et al. “Achieving Individual — and Organizational — Value With AI.”

- Chokshi, N. 2019. “How Surveillance Cameras Could Be Weaponized With A.I.” New York Times. June 13, 2019. https://www.nytimes.com/2019/06/13/us/aclu-surveillance-artificial-intelligence.html

  Aloisi, Antonio, and Elena Gramano. “Artificial Intelligence Is Watching You at Work: Digital Surveillance, Employee Monitoring, and Regulatory Issues in the EU Context.” Comparative Labor Law & Policy Journal 41, no. 1 (Fall 2019): 95-122. https://heinonline.org/HOL/LandingPage?handle=hein.journals/cllpj41&div=8&id=&page=

- Lazer, D. M., A. Pentland, D.J. Watts, S. Aral, S. Athey, N. Contractor, and C. Wagner. 2020. “Computational social science: Obstacles and opportunities.” Science 369, no. 6507: 1060-1062. https://doi.org/10.1126/science.aaz8170.

- See the Algorithmic Justice League for more information on this topic.

- Zhang, Lingyu, Indrani Bhattacharya, Mallory Morgan, Michael Foley, Christoph Riedl, Brooke Foucault Welles, and Richard J. Radke. 2020. “Multiparty Visual Co-Occurrences for Estimating Personality Traits in Group Meetings.” In Proceedings of 2020 IEEE Winter Conference on Applications of Computer Vision (WACV), 2074–83. https://doi.org/10.1109/WACV45572.2020.9093642.

- Westby, S. and Christoph Riedl. 2023. “Collective Intelligence in Human-AI Teams: A Bayesian Theory of Mind Approach.” Proceedings of Thirty-Seventh AAAI Conference on Artificial Intelligence. https://arxiv.org/abs/2208.11660.

- Riedl, Christoph, Young Ji Kim, Pranav Gupta, Thomas W. Malone, and Anita Williams Woolley. “Quantifying Collective Intelligence in Human Groups.” Proceedings of the National Academy of Sciences 118, no. 21 (May 25, 2021): e2005737118. https://doi.org/10.1073/pnas.2005737118.

- Ransbotham et al. “Achieving Individual — and Organizational — Value With AI.”

- Patel, V., Chesmore, A., Legner, C.M. and Pandey, S. 2022. “Trends in Workplace Wearable Technologies and Connected-Worker Solutions for Next-Generation Occupational Safety, Health, and Productivity.” Advanced Intelligent Systems 4, no. 1. https://doi.org/10.1002/aisy.202100099.

- Electronic Communications Privacy Act of 1986. 18 U.S.C. §§ 2510-2523.

  According to the Society for Human Resource Management, “Congress passed [the ECPA] in 1986 as an amendment to the federal Wiretap Act. Whereas the Wiretap Act restricted only the interception and monitoring of oral and wire communications, the ECPA extended those restrictions to electronic communications such as e-mail.”

- Bressler, Becca, Latif Nasser, and Barry Lam. 2022. “Gigaverse.” Radiolab, August 26, 2022. Podcast, 51:22. https://radiolab.org/episodes/gigaverse.

- Ward, Alysa. “Bringing Work Home: Emerging Limits on Monitoring Remote Employees.” The National Law Review, October 31, 2022. https://www.natlawreview.com/article/bringing-work-home-emerging-limits-monitoring-remote-employees.

The Brookings Institution is committed to quality, independence, and impact.

We are supported by a diverse array of funders. In line with our values and policies, each Brookings publication represents the sole views of its author(s).