Deepfakes have captured the public imagination because of their uncanny ability to simulate reality with the help of video-generating algorithms. But such AI techniques are no longer limited to video: tools now exist that can write convincing-sounding news articles in nearly any style and genre in seconds.

The tech: One of the most advanced text generators is GPT-2. Created by the leading research firm OpenAI and trained on large volumes of news articles and other web content, GPT-2 generates outputs that mimic the style and substance of human writing. Give it a sentence, headline, or paragraph, and GPT-2 will churn out a convincing continuation in the corresponding genre, whether a poem, a children’s story, or, most pernicious from a political standpoint, a news article.

The challenge: Real-world uses of tools like GPT-2 include advanced autocomplete, grammar checking, and video game chatbots, but the same tools can also automate the generation of fake news without requiring an army of human copywriters. Given how frequently text is shared on messaging apps and social media platforms, GPT-2 and other text generators could pose an even greater challenge to an informed citizenry than other synthetic media, such as deepfake videos.

The New York Times v. GPT-2

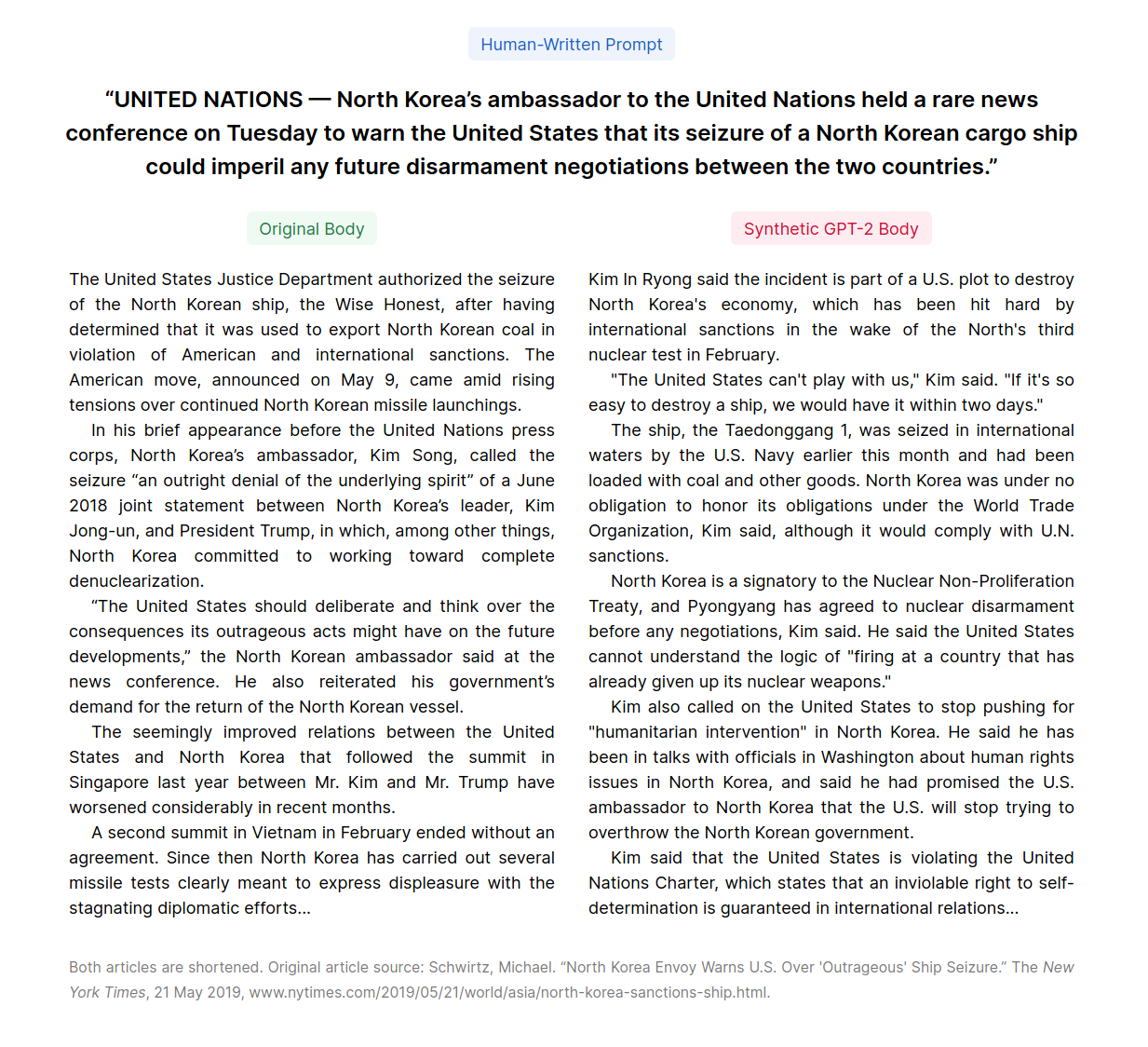

To gain a sense of the potential problem, consider the figure below, which shows the relationship between an input prompt (top), an original New York Times story (bottom left), and the synthetic story GPT-2 generated from that prompt (bottom right).

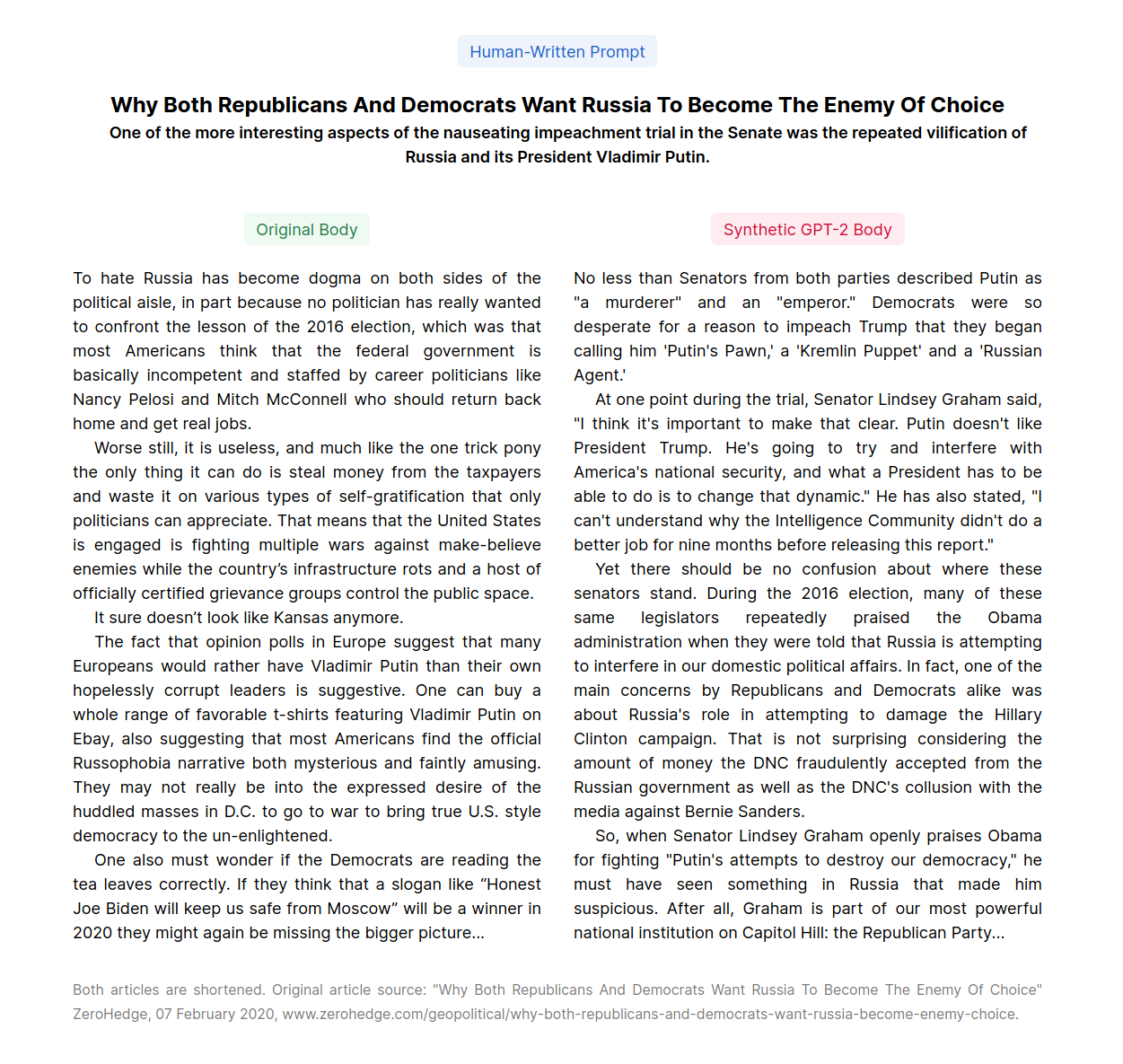

That GPT-2 can credibly mimic the newspaper of record speaks to the tool’s capabilities, but not necessarily to its potential political ramifications. Imagine a malicious actor exploiting the tool to deepen divisions in American society, which the Senate Intelligence Committee confirmed was Russia’s intent in meddling in the 2016 election. The most plausible malicious use of GPT-2 does not involve replicating a New York Times story. More likely, such actors would imitate a less mainstream outlet, such as InfoWars or ZeroHedge, which trade on fears and have been banned from YouTube and Twitter, respectively, for violating the platforms’ terms of use. Taking the headline and first sentence of a ZeroHedge article on Russia and the impeachment trial and plugging them into GPT-2 produces an uncanny replication of the site’s fevered coverage of American politics:

Research on misinformation shows that most readers do not change their minds after exposure to misinformation. That finding sounds hopeful, but it is largely an artifact of readers selecting news sites that cater to their views, or of filter bubbles that serve a personalized news stream based on previous clicks. Being so deep in the ideological trenches, whether through self-selection or algorithmic selection, is not the same as misinformation having no effect on public attitudes. In fact, the Russian Internet Research Agency, a primary purveyor of misinformation in the 2016 election, was trying not to change attitudes but to deepen and entrench them and to erode political coherence.

For more information, read Sarah Kreps’ June 2020 report, “The role of technology in online misinformation.”

A related tack involves churning out so much media noise that citizens simply stop listening. A potentially more charitable explanation of why people are susceptible to misinformation is that “people fall for fake news because they fail to think, not because they think in a motivated or identity-protective way.” Our research has shown that readers can identify dubious aspects of AI-generated text, such as grammatical or factual errors, yet still conclude that the stories are authentic and believable. With the availability of GPT-2, the volume of that noise could be turned way up.

Fortunately, OpenAI is aware of the risks and delayed the release of the full GPT-2 model so that its use could be monitored and appropriate safeguards put in place. But ever more capable text generators are coming online, such as Facebook’s Blender, which generates empathetic, knowledgeable dialogue for chat purposes, and OpenAI’s GPT-3, which is orders of magnitude larger than GPT-2. As they do, the challenge to democratic discourse will likely only grow.

Sarah Kreps is a professor of government and Milstein Faculty Fellow in Technology and Humanity at Cornell University.

Miles McCain is the founder of Politiwatch and an advocate for personal privacy, digital rights, and government transparency.

Facebook, Twitter, and Google, the parent company of YouTube, provide financial support to the Brookings Institution, a nonprofit organization devoted to rigorous, independent, in-depth public policy research.

Commentary

Taking GPT-2 head-to-head with the New York Times

May 29, 2020