China’s new “Personal Information Protection Law” aims to protect individuals, society, and national security from harms stemming from abuse and mishandling of personal information — targeting both the private sector and government functions, writes Brookings scholar Jamie Horsley, alongside other experts. This article originally appeared in the journal DigiChina.

On August 20, China’s National People’s Congress (NPC) Standing Committee passed China’s first comprehensive data privacy law, the “Personal Information Protection Law” (PIPL, translated by DigiChina), which will take effect November 1. The law has a history stretching back more than 15 years to an earlier, aborted legislative effort, and it continued to evolve through the first and second published drafts, straight through to the final text.

This article focuses on the newest developments that emerged when the final text of the PIPL was released and explains the context around these developments. DigiChina’s previous coverage of the law, based on draft language that in many cases has not substantively changed, includes:

- A translation of the first published draft of the PIPL (Oct. 21, 2020);

- “China’s Draft Privacy Law Both Builds On and Complicates Its Data Governance” (Dec. 14, 2020);

- “Personal Data, Global Effects: China’s Draft Privacy Law in the International Context” (Jan. 4, 2021);

- “How Will China’s Privacy Law Apply to the Chinese State?” (Jan. 26, 2021);

- A translation of the second review draft of the PIPL (April 29, 2021);

- An analysis of changes between the first and second drafts (May 3, 2021);

- “Top Scholar Zhou Hanhua Illuminates 15+ Years of History Behind China’s Personal Information Protection Law” (June 8, 2021); and

- A translation of the final PIPL (Aug. 20, 2021).

In digesting the new law, two overriding points are important to keep in mind. First, the PIPL is a framework law that is not intended to provide granular detail on the majority of the policy matters it covers, but rather sets out broad principles, objectives, mandates, and responsibilities. To make these generalities concrete and specific, regulators such as the Cyberspace Administration of China (CAC) will draft and issue implementing regulations, and standards-setting organizations will issue technical standards and specifications. This is why the PIPL is much shorter and less detailed than its main international counterpart, the European General Data Protection Regulation (GDPR), and why detailed answers to regulatory questions, let alone the question of how the law may be enforced, may be months or years away. Indeed, regulations issued this month on “critical information infrastructure” added missing detail to provisions of the Cybersecurity Law more than four years after it entered into force.

Second, while the PIPL addresses many issues frequently discussed in the context of personal privacy worldwide, it does not directly address “privacy” (隐私), which is a separate concept in Chinese law. The law’s focus is on protecting individuals, society, and national security from harms stemming from abuse and mishandling of personal information — targeting both the private sector and government functions.

Below, we discuss changes included in the final law regarding automated decision-making, minors’ personal information, cross-border data transfers, data portability, government handling of personal information, post-mortem data rights, and different obligations for large and small data handlers.

Explicit Ban on Automated Decision-Making for Price Discrimination

In recent years, a trend of businesses secretly using big data to enable differential treatment of consumers, including price discrimination, has been a growing public concern. This so-called “big data swindling” (大数据杀熟) consists of “a mix of dark patterns and dynamic pricing that online platforms employ to exploit users’ differential willingness to pay for goods and services,” according to the scholar Shazeda Ahmed. The practice is explicitly banned in the final text of the PIPL.

The second draft of the law already addressed automated decision-making, requiring transparency, fairness, and justice in decisions, as well as explanation of the process when the impact on individuals’ rights and interests could be significant. It also gave people the ability to opt out of algorithmic targeting or automated decision-making.

Article 24 of the final PIPL additionally requires personal information handlers to “not engage in unreasonable differential treatment of individuals in trading conditions” and specifically prohibits price discrimination through automated decision-making. In addition, the final law requires opt-out methods to be “convenient.”

The PIPL defines “automated decision-making” as “the activity of using computer programs to automatically analyze or assess personal behaviors, habits, interests, or hobbies, or financial, health, credit, or other status, and make decisions [based thereupon]” (Article 73). Banning the use of this kind of activity for differential treatment in consumer markets aligns with and puts a high level of legal authority behind recent regulatory moves that also appear aimed at data-driven market discrimination.

The State Administration for Market Regulation (SAMR), China’s central consumer protection regulator, in recent weeks released two proposed regulations: the Provisions on Prohibited Acts of Improper Online Competition 《禁止网络不正当竞争行为规定》 and amendments to the existing Provisions on Administrative Sanctions Against Price-related Illegal Activities 《价格违法行为行政处罚规定(修订征求意见稿)》. Both ban business entities from using big data and algorithms to analyze consumer behavior and offer unreasonably differential trading conditions.

While these moves signal serious efforts to tackle an increasingly recognized problem, uncertainties remain as to how the rules can be put into practice and effectively protect consumers from data-enabled discrimination. One key challenge lies in the technical capacity of regulators to police source code. While several high-profile accusations of algorithmically driven price discrimination have been made against prominent Chinese ride-hailing and travel platforms, the accusations were based on conclusions drawn by consumers after using the platforms, rather than on identification of code that was explicitly written for this purpose. Regulators now face the considerable challenge of sorting out how best to determine that algorithmic price discrimination has taken place.

Personal Information of Minors Under 14 Protected as ‘Sensitive Personal Information’

While the second draft of the PIPL included a parental consent requirement to collect personal data on minors under age 14, the final text of the PIPL goes one step further: Now, the personal data of minors under 14 is regarded as “sensitive personal information,” a category that carries extra protection and procedures and includes other “information on biometric characteristics, religious beliefs, specially-designated status, medical health, financial accounts, [and] individual location tracking” (Article 28).

This “sensitive” designation means, in addition to the typical privacy law requirement to obtain a parent or guardian’s consent for handling minors’ data, China’s PIPL limits processing of minors’ data to when “there is a specific purpose and a need to fulfill, and under circumstances of strict protection measures” (Article 28). It also ups transparency requirements. Personal information handlers are already required to provide certain information, including the types of data and purpose for which it is to be used, for regular personal information. Because it is designated “sensitive,” handling minors’ data additionally requires notice “of the necessity and influence on the individual’s rights and interests,” with limited exceptions (Article 30). Further, personal information handlers are required to establish dedicated rules specifically for processing minors’ data (Article 31).

Technology’s impact on minors has been a perennial concern for the Chinese government. For two decades, online gaming addiction among children has been a special focus, and scrutiny of the gaming industry has intensified in recent years. In 2019, a new notice restricted children from playing online games between 10 p.m. and 8 a.m. This June, China also implemented an amended Law on the Protection of Minors to protect children under 16 against addiction to online games, videos, and social networks. These developments underscore the Chinese government’s desire to put more restrictions on the gaming industry and reduce negative effects on children, and this new addition to the PIPL supports this aim by requiring companies to handle minors’ personal information with care.

New Potential Grounds, and New Requirements, for Cross-Border Data Transfers

Since the Cybersecurity Law was finalized in 2016, both domestic and foreign companies have faced uncertainty about how the transfer of personal data from China to other jurisdictions would be regulated. The PIPL provides a more detailed framework, and the final text includes a new provision explicitly allowing cross-border transfer of personal information when treaties or international agreements are in place. At the same time, the final text also imposes a new requirement on personal information exporters to ensure data protection standards are met after transfer.

There are signals that the Chinese government is interested in international agreements on data transfers. The PIPL itself encourages Chinese official participation in international personal data protection rulemaking and the promotion of “mutual recognition of personal information protection rules [or norms]” (Article 12). In a recent DigiChina interview, Professor Zhou Hanhua of the Chinese Academy of Social Sciences advocated for “avoid[ing] the balkanization of digital products through an international arrangement on the flow of information.” Under the final PIPL, if such agreements emerge in the form of treaties or international agreements that China participates in, those accords would be grounds for allowing the transfer of personal information abroad (Article 38). It remains unclear, however, whether Chinese regulators are seeking to facilitate interoperability between Chinese privacy law and other jurisdictions, to bring the international privacy playbook more in line with China’s approach, or both.

Regardless of the legal justification for a transfer — be it the new possibility of international deals or the existing grounds for transfer such as passing security assessments or concluding a contract with the receiving side that conforms to CAC standards — data exporters face a new requirement.

Under Article 38, regardless of which legal basis one relies upon to transfer personal data abroad, data exporters must “adopt necessary measures to ensure that foreign receiving parties’ personal information handling activities reach the standard of personal information protection provided in” the PIPL. There remains great uncertainty, however, as to how enforcing authorities might evaluate whether the PIPL’s standard has been met. Some commentators have speculated that this provision could evolve into something like the GDPR’s regime under which other whole jurisdictions are deemed to have “adequate” protections for transfer, but there is scant evidence for this approach so far, and there are numerous ways this provision could develop as detailed regulations are formulated.

A New Data Portability Requirement

In its final form, the PIPL gives individuals a new right to data portability: in essence, the ability to extract their data from one platform or service and store or use it elsewhere. Article 45 requires that personal information handlers comply with people’s requests to transfer their data to another handler, so long as the transfer meets conditions to be set by the CAC.

This addition is relevant to recent Chinese developments in antitrust. Internet platforms have leveraged large troves of user data to lock users into their platforms and fend off emerging competitors. It is widely believed that increasing data portability will enhance market competition, benefitting consumers and fueling innovation. For instance, an antitrust guideline published earlier this year considers data-related user switching costs in the assessment of market entry barriers.

Data portability in practice is easier said than done. Personal information may be delivered in a format that is neither machine-readable nor easily transferable to a competing firm’s products. Smaller firms may face a disproportionately high cost when servicing data portability requests. Furthermore, the personal information of one person may contain that of another — a problem that is particularly acute when it comes to social media, for instance in chat histories or photos.

Questions Remain Regarding PIPL’s Application to the Chinese State

The final law maintains most provisions contained in its first draft that specifically apply to and purport to constrain, as well as empower, the Chinese state, and it raises questions regarding the applicability of other provisions. The second draft of the PIPL removed without explanation a provision requiring state organs to not disclose or provide others with personal information they handle, except as provided by law and administrative regulations or with the individual’s consent. In principle, state organs are nonetheless subject to nondisclosure obligations of all personal information handlers set forth in Article 25, while disclosure of personal information held by government entities is subject to enforceable State Council regulations. The final text further deleted references to consent in Article 35, perhaps because state organs are presumed to always handle personal information as necessary to fulfill statutory duties and therefore should be exempted from the consent requirement under Article 13. Nonetheless, if state organs do collect or otherwise handle personal information outside the scope of their statutory authority, individuals may benefit from new language in Article 50 that specifies they have the right to file a lawsuit to enforce their information rights, which should cover administrative litigation against government handlers.

Questions remain about the extent to which state organs will in fact be required to comply with personal information handler responsibilities, including requirements set forth in Chapter V to appoint a personal information protection officer; conduct audits and impact assessments; report leaks and other risks; and establish compliance structures and publish social responsibility reports under Article 58. Will, for example, large public-facing platforms like Credit China, as well as non-public, data-rich platforms such as the National Development and Reform Commission’s National Credit Information Sharing Platform that service other government departments and authorized users, be covered by such obligations?

The final text names the Constitution in Article 1 as the legal basis for protecting personal information, something Chinese administrative law scholars had advocated to afford stronger public law protection of personal information, including by and against the State. Nonetheless, given all the questions raised by the PIPL as to its practical impact on state organs, perhaps a more significant step would be enactment of a law that specifically regulates personal information handling by state organs.

Post-Mortem Data Rights Refined

Compared to the second draft, Article 49 in the final PIPL limits the powers of the next of kin over the deceased’s personal information. While the previous draft would have handed the next of kin broad authority to exercise the personal information rights of the deceased, the final version adds language limiting their exercise to “their own lawful, legitimate interests,” signaling that these rights are not to be abused. The final version also clarifies that an individual can override these next of kin powers by making arrangements before they die.

Size Matters When It Comes to Data Handlers

The final text of the PIPL more clearly differentiates between the obligations of large data handlers and smaller data handlers. Specifically, it imposes extra requirements on large platforms, clarifies regulatory authority over mobile apps’ data activities, and implies smaller operations should be afforded greater flexibility in compliance.

In addition to the enhanced requirements for large platforms that were already addressed in the second draft (see DigiChina’s analysis of the second draft), Article 58 newly requires them to “establish and complete personal information protection compliance structures and systems according to State regulations” and “abide by the principles of openness, fairness, and justice; formulate platform rules; and clarify the standards for intra-platform product or service providers’ handling of personal information and their personal information protection duties.”

Then, Article 61 adds a new provision expressly authorizing China’s data regulators to “organiz[e] evaluation of the personal information protection situation such as procedures used, and publish the evaluation results.”

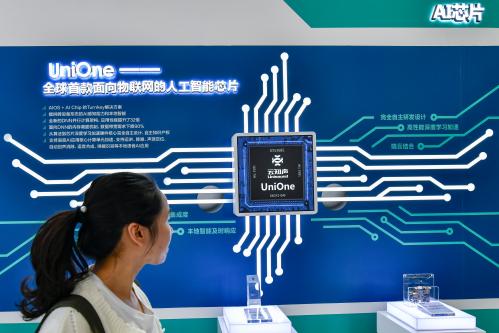

The final PIPL reflects a tightening regulatory environment in China over mobile apps. Since 2019, the Chinese government has pursued sweeping enforcement actions against apps’ mishandling of personal data. Just in the last few months, authorities released the Provisions on the Scope of Necessary Personal Information for Common Types of Mobile Internet Applications (《常见类型移动互联网应用程序必要个人信息范围规定》) and draft Interim Provisions on the Protection and Management of Personal Information in Mobile Internet Applications (《移动互联网应用程序个人信息保护管理暂行规定(征求意见稿)》). Platforms that provide hosting services for mobile apps are also required to roll out management programs to regulate them. By embedding these requirements in a law at the top level of China’s legislative hierarchy, the PIPL confirms China’s regulatory determination to tackle data mishandling by mobile apps and provides a robust legal basis for future regulatory and enforcement actions.

Meanwhile, the PIPL implicitly recognizes the potentially heavy compliance burdens for small data handlers who may pose comparatively limited risks to individuals. Article 62 authorizes regulators to “formulate specialized personal information protection rules and standards for small-scale personal information handlers,” but the PIPL does not define “small-scale.”

Conclusion

The final text of the PIPL is the culmination of years of legislative work and policy debate in China. Although it retains most of the major features of the first NPC draft, published just over a year before the final version is to take effect on November 1, the law underwent significant revisions.

The latest developments, examined above, are indicative of ongoing policy thinking and debate, and many of the subtleties will continue to be hashed out as regulators construct detailed rules atop the law’s framework. In combination with the Data Security Law, which takes effect September 1, and the ongoing evolution of the regime around the Cybersecurity Law, Chinese policymakers and interested parties inside China and abroad still have their work cut out for them.

Seven major changes in China’s finalized Personal Information Protection Law

August 23, 2021