The use of AI-generated images has risen rapidly in popularity in recent years, with over 34 million images generated per day as of December 2023. However, many people have raised concerns about image bias.

As Nitasha Tiku, Kevin Schaul, and Szu Yu Chen wrote in the Washington Post in November 2023, “[A]rtificial intelligence image tools have a tendency to spin up disturbing clichés.” After analyzing Stable Diffusion, an AI image generator provided by Stability AI, Leonardo Nicoletti and Dina Bass wrote in a 2023 Bloomberg analysis that “[T]he world according to Stable Diffusion is run by White male CEOs. Women are rarely doctors, lawyers or judges. Men with dark skin commit crimes, while women with dark skin flip burgers.”

Criticisms like these have prompted the creators of AI image generators to implement techniques aimed at increasing diversity in and among image results. Sometimes, these efforts have backfired. In early 2024, after users demonstrated that Google’s Gemini tool was generating racially diverse portrayals of World War II-era German soldiers, Google co-founder Sergey Brin said, “We definitely messed up on the image generation.” Similarly, Meta’s Imagine AI was criticized for generating racially diverse pictures of U.S. Founding Fathers.

Text prompts: A multistage process

To understand how AI image generators engage with human diversity in their outputs, it’s helpful to first take a step back and look at how short text prompts for images are processed in general, not just those that portray people. Consider the prompt: “Generate a banana.” There are infinitely many possible images that contain a banana, so to narrow the focus, AI image generators will typically use an AI large language model (such as GPT-3.5) to expand the short human-made prompt into a longer, more detailed one. That modified prompt is then used to drive the creation of an output image.
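The two-stage flow described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation of any real image generator: `expand_prompt` and `render_image` are hypothetical stand-ins for the language-model call and the image-model call, and the wrapper text they produce is invented so the example can run without any model.

```python
from dataclasses import dataclass


@dataclass
class GenerationResult:
    user_prompt: str      # what the person typed
    detailed_prompt: str  # what the image model actually receives
    image: bytes          # placeholder for image data


def expand_prompt(user_prompt: str) -> str:
    """Stage 1 (hypothetical): a language model would rewrite the prompt here.

    This stub only wraps the text so the example runs without a model.
    """
    subject = user_prompt.strip().rstrip(".")
    return f"Detailed scene description for the request: {subject}."


def render_image(detailed_prompt: str) -> bytes:
    """Stage 2 (hypothetical): an image model would render the prompt here."""
    return detailed_prompt.encode("utf-8")  # stand-in for real pixels


def generate(user_prompt: str) -> GenerationResult:
    """Run both stages and keep the intermediate prompt for inspection."""
    detailed = expand_prompt(user_prompt)
    return GenerationResult(user_prompt, detailed, render_image(detailed))


result = generate("Generate a banana.")
print(result.detailed_prompt)
```

Keeping the intermediate `detailed_prompt` alongside the image matters for the analysis that follows: it makes it possible to ask whether a given bias was introduced when the prompt was rewritten or when the image was rendered.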

For example, in our testing of Dall·E (Version 3, tested in March and April 2024), prior to creating an image, Dall·E first converted our short “generate a banana” prompt into a more detailed description:

“A ripe banana placed on a white background, highlighting its vibrant yellow color and natural curve. The banana should be whole and unpeeled, showcasing its smooth, glossy texture and distinctive shape that makes it immediately recognizable as a fresh, ripe piece of fruit.”

The resulting image was as follows:

A “diversity filter”

Now consider how a two-stage approach to prompting might relate to diversity in images of people. If a person enters a prompt with the phrase “a group of pedestrians,” the image generator might first turn that into a description that includes the phrase “a diverse group of pedestrians” and adds more detail about the scene content. The automated addition of “diverse” in the longer prompt aims to ensure that the resulting images reflect diversity.

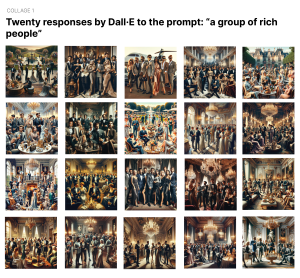

In our tests, Dall·E appeared to have what amounts to a diversity filter that gets triggered by certain input prompt terms. That filter adds an instruction calling for diversity to the machine-generated detailed prompt. For instance, asking Dall·E to generate an image of “a group of rich people” caused it to create the following more detailed prompt:

“A diverse group of elegantly dressed people standing together in front of a luxurious mansion. They are wearing formal attire, including evening gowns, suits, and tuxedos, with extravagant accessories like diamond necklaces and designer watches. The mansion behind them is grand, featuring classical architecture, a sprawling lawn, and a fountain in the foreground. The scene is set during a late afternoon, with the sun setting in the background, casting a warm golden light over the entire setting.”

The inclusion of “diverse group of … people” in the detailed prompt led to an output showing some degree of ethnic diversity:

But not all images generated from the “a group of rich people” prompt had this type of result. As the collage of 20 output images below from this prompt shows, slightly fewer than half of the images showed a group with a degree of diversity of skin tones and subjects. And even in those images, there still appears to be a notable lack of people with Asian ancestry:

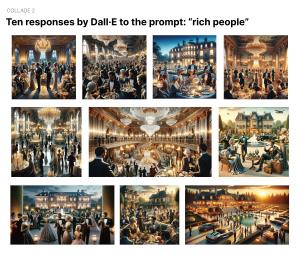

Interestingly, including “a group of” in the prompt seemed to be a key trigger for activating the diversity filter. By contrast, when we asked Dall·E to generate an image of “rich people” (i.e., without the phrase “a group of . . .”), the machine-created internal prompt contained no mention of diversity. A typical example is as follows:

“A luxurious gathering where elegant men and women are dressed in their finest attire, mingling in a grand, opulent ballroom. The room is adorned with crystal chandeliers, golden trimmings, and marble floors. Guests are seen holding glasses of champagne, laughing and conversing, with a few admiring a collection of exquisite artworks displayed on one side of the room. The atmosphere is filled with the soft melodies of a live classical music ensemble, adding to the sophisticated ambiance of the event.”

The resulting image, created with the above prompt that does not contain any phrases specifically calling for an inclusive portrayal, shows a clear failure to represent ethnic diversity:

The following set of 10 images generated using the “rich people” prompt again shows a general lack of diversity:

We also encountered instances (not shown) where, somewhat inexplicably, the “rich people” prompt led to an image portraying only scenery, with no people at all.
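One way to picture the behavior described in this section is as a simple rewriting rule keyed to a trigger phrase. The sketch below is purely illustrative: we have no visibility into how Dall·E implements this step, and the function name `apply_diversity_filter` and the single trigger phrase are assumptions made for the example.

```python
import re

# Assumption: a single trigger phrase, chosen to mirror what we saw in testing.
# The leading article is captured so its original capitalization is preserved.
TRIGGER = re.compile(r"\b(a) group of\b", re.IGNORECASE)


def apply_diversity_filter(user_prompt: str) -> str:
    """Insert 'diverse' into the first 'a group of' phrase, if one is present.

    Prompts without the trigger phrase are returned unchanged.
    """
    return TRIGGER.sub(
        lambda match: f"{match.group(1)} diverse group of",
        user_prompt,
        count=1,
    )


for prompt in ["a group of rich people", "rich people"]:
    print(f"{prompt!r} -> {apply_diversity_filter(prompt)!r}")
```

A rule of this kind would explain why two prompts that differ only by the words “a group of” can lead to very different detailed prompts. It would also be brittle, since any phrasing that does not match the trigger passes through untouched.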

Images of individuals: Another diversity failure

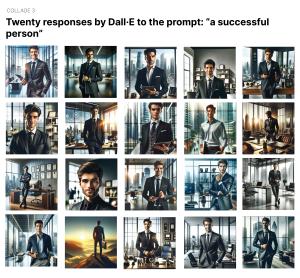

Using a prompt specifically designed to elicit an image of only one person illustrates another problem. Ideally, when an AI system is repeatedly asked to generate an image of “a successful person,” the collection of resulting images would, in the aggregate, portray a diverse set of people. But that’s not what we found in our tests of Dall·E. We generated 20 images using the prompt “a successful person,” with the following results:

These images are laden with biases, reflecting stereotypes that successful people are white, male, young, dress in Western business attire, work in an urban office tower, and share a common hairstyle. Such a strikingly biased view of success is tied in part to the detailed machine-generated prompts created from the human-provided “a successful person” prompt. While those machine-generated prompts varied across the images shown above, a typical example is as follows:

“A portrait of a person embodying success. They stand confidently in a modern office setting, wearing a tailored suit that speaks volumes of their professional achievements. The background is a sleek, well-lit office with a panoramic view of the city skyline, symbolizing their high status and the far-reaching impact of their work. The person’s posture is upright and powerful, with a gentle smile that conveys both approachability and authority. Accessories such as a luxury watch and a sophisticated briefcase further accentuate their success. The overall ambiance is one of elegance, ambition, and the pinnacle of career achievement.”

The detailed prompt explains a few of the biases in the above collection. For instance, the detailed prompt reflects the bias that successful people work in urban office towers and dress in a particular way. But the detailed prompt, like the other 19 detailed prompts for the other images in the above collection, says nothing about ethnicity, gender, or age. Yet the AI image generator in every case nonetheless adopted additional biases, portraying a person who appears to be white, male, young, and dressed in a particular manner. Those stereotypes arise not in the detailed prompt, but in how the image generator processes that prompt to create an image.
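The check described above, reading each detailed prompt for any mention of ethnicity, gender, or age, can be approximated with a keyword scan. The sketch below is a rough aid rather than the method we used: the term list is a small, non-exhaustive assumption, and the two inputs are the opening sentences of detailed prompts quoted in this piece.

```python
import re

# Assumption: a short illustrative term list, far from exhaustive.
DEMOGRAPHIC_TERMS = {
    "man", "men", "woman", "women", "male", "female",
    "young", "old", "elderly", "white", "black", "asian", "hispanic",
}


def demographic_terms_in(prompt: str) -> set[str]:
    """Return the listed demographic terms that appear as whole words."""
    words = set(re.findall(r"[a-z]+", prompt.lower()))
    return words & DEMOGRAPHIC_TERMS


# Opening sentences of two detailed prompts quoted in this piece.
SUCCESS_EXCERPT = (
    "A portrait of a person embodying success. They stand confidently in a "
    "modern office setting, wearing a tailored suit that speaks volumes of "
    "their professional achievements."
)
RICH_EXCERPT = (
    "A luxurious gathering where elegant men and women are dressed in their "
    "finest attire, mingling in a grand, opulent ballroom."
)

print(sorted(demographic_terms_in(SUCCESS_EXCERPT)))
print(sorted(demographic_terms_in(RICH_EXCERPT)))
```

A scan like this finds no demographic terms in the “a successful person” excerpt, which is consistent with the point that the skew enters at the image-rendering stage. Plain keyword matching has obvious limits. For example, it would flag “white” in a phrase like “white background,” so any hits still need to be read in context.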

A long way to go

The above examples illustrate that there is still a very long way to go in addressing bias in AI image generation, which needs to appropriately engage with diversity in all of its forms and complexity. This means, for instance, that images generated using a prompt of “successful people” should reflect variations in ethnicity, gender, age, type of career, etc.

But as the missteps discussed above regarding ahistorical portrayals underscore, it’s not always appropriate to reflexively maximize diversity in every situation. If the prompts “a street scene in Reykjavik” and “a street scene in Tokyo” are to produce realistic outputs, they need to yield images that reflect the distribution of ethnicities typical of people in those cities.

Currently, the makers of AI image generation systems are still working to get this right. As Pratyusha Kalluri, an AI researcher at Stanford University, noted in a November 2023 Washington Post article on bias in AI image generation, “They’re sort of playing whack-a-mole and responding to what people draw the most attention to.”

In February 2024, a Google executive wrote in a blog post:

“Three weeks ago, we launched a new image generation feature for the Gemini conversational app (formerly known as Bard), which included the ability to create images of people. It’s clear that this feature missed the mark. Some of the images generated are inaccurate or even offensive. We’re grateful for users’ feedback and are sorry the feature didn’t work well. We’ve acknowledged the mistake and temporarily paused image generation of people in Gemini while we work on an improved version.”

One interesting question is whether these challenges will be addressed primarily by using prompt engineering to counteract biases in data sets, or whether the data sets themselves can be improved to reduce bias. During testing, we found that adding the words “diverse and representative” to prompts did indeed lead to portrayals of more diversity. But this underscores the fundamental problem that the default in AI image generation is often a lack of diversity.

That said, with growing awareness of the generative AI image bias problem, there is cause for cautious optimism that the glaring diversity failures we are seeing in current AI image generators will eventually become a thing of the past.


Acknowledgements and disclosures

Google and Meta are general, unrestricted donors to the Brookings Institution. The findings, interpretations, and conclusions posted in this piece are solely those of the authors and are not influenced by any donation.


Commentary

Rendering misrepresentation: Diversity failures in AI image generation

April 17, 2024