Imagine that you have just had a heart attack. An ambulance arrives to transport you to a hospital emergency room. Your ambulance driver asks you to choose between two hospitals, Hospital A and Hospital B. At Hospital A, the mortality rate for heart attack patients is 75 percent. At Hospital B, the mortality rate is just 20 percent. But mortality rates are imperfect measures, based on a finite number of admissions. If neither rate were “statistically significantly” different from average, would you be indifferent about which hospital you were taken to?

Don’t ask your social scientist friends to help you with your dilemma. When asked for expert advice, they apply the rules of classical hypothesis testing, which accept a difference as statistically significant only if a difference that large would arise by chance no more than 5 percent of the time when there is no true difference. (For examples, see Schochet and Chiang (2010), Hill (2009), and Baker et al. (2010).) In many areas of science, it makes sense to assume that a medical procedure does not work, that a vaccine is ineffective, or that the existing theory is correct until the evidence is very strong that the original presumption (the null hypothesis) is wrong. That is why the classical hypothesis test places the burden of proof so heavily on the alternative hypothesis and preserves the null hypothesis until the evidence is overwhelmingly to the contrary. But that’s not the right standard to use in choosing between two hospitals.

In 1945, Herbert Simon published a classic article in the Journal of the American Statistical Association pointing out that the hypothesis testing framework is not suited to many common decisions. He argued that when decision-makers face an immediate choice between two options, when the cost of mistakenly passing over the better option is about the same whichever option that turns out to be (i.e., when the costs are symmetric), and when it is infeasible to postpone the decision until more data are available, the optimal decision rule is to choose the option with the better odds of success, even if the difference is not “statistically significant.”
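To make Simon’s point concrete for the hospital example, here is a minimal Python sketch. The admission counts are hypothetical (the text gives only the rates): 3 of 4 patients die at Hospital A and 1 of 5 at Hospital B. It also uses a slightly different test from the one in the opening question, asking whether the two hospitals differ from each other rather than whether each differs from average. Fisher’s exact test fails to reject equal mortality at the 5 percent level, while Simon’s rule simply picks the hospital with the lower observed mortality.

```python
from math import comb


def fisher_exact_two_sided(deaths_a, n_a, deaths_b, n_b):
    """Two-sided Fisher's exact test p-value for deaths at two hospitals.

    Sums the probabilities of every 2x2 table with the same margins that is
    no more likely than the observed table.
    """
    total_deaths = deaths_a + deaths_b
    n = n_a + n_b

    def weight(k):
        # Hypergeometric numerator for a table with k deaths at hospital A;
        # the common denominator comb(n, total_deaths) is applied at the end.
        return comb(n_a, k) * comb(n_b, total_deaths - k)

    observed = weight(deaths_a)
    lo = max(0, total_deaths - n_b)
    hi = min(n_a, total_deaths)
    # Integer weights avoid floating-point ties when comparing tables.
    extreme = sum(weight(k) for k in range(lo, hi + 1) if weight(k) <= observed)
    return extreme / comb(n, total_deaths)


def simon_choice(deaths_a, n_a, deaths_b, n_b):
    """Simon's rule under symmetric costs: pick the lower observed mortality.

    Ties go to hospital B (an arbitrary choice for this sketch).
    """
    return "A" if deaths_a / n_a < deaths_b / n_b else "B"


# Hypothetical counts: 75 percent mortality at A, 20 percent at B.
p_value = fisher_exact_two_sided(3, 4, 1, 5)
print(f"p = {p_value:.3f}")                      # p = 0.206, not significant at 0.05
print("Simon's rule:", simon_choice(3, 4, 1, 5))  # Simon's rule: B
```

The test leaves you “indifferent,” yet the observed rates still favor Hospital B, which is the option Simon’s rule selects.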

Improper use of the hypothesis testing paradigm can lead to costly mistakes. A good example is the teacher tenure decision. There are at least two ways to think about the problem. Under the hypothesis testing paradigm, one would start with the hypothesis that an incumbent teacher is average and deny tenure only when the evidence against that presumption was beyond a reasonable doubt. Let’s call this the “average until proven below average” formulation.

Following Professor Simon, a second approach would be to recognize that a tenure decision implicitly involves a choice between two teachers: the incumbent teacher and an anonymous novice teacher. We would compare the two teachers’ predicted effectiveness and choose the option with the better predicted outcome. Effectively, we would promote the incumbent only when their predicted effectiveness is “better than an average novice,” and we would not promote when hiring a new teacher seems the better bet, however large or small the predicted gain.
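The two formulations can be written as two small decision functions. This is a sketch under stated assumptions: value-added is in student test-score standard deviations, each teacher comes with a standard error, and “predicted effectiveness” is supplied from elsewhere (for example, a shrunken estimate). The `Teacher` type, the field names, and all numbers below are hypothetical illustrations, not the authors’ code or data.

```python
from dataclasses import dataclass


@dataclass
class Teacher:
    # Hypothetical fields for illustration only.
    estimate: float   # measured value-added (student test-score SD units)
    std_error: float  # standard error of that estimate
    predicted: float  # predicted future effectiveness (e.g., a shrunken estimate)


def tenure_if_not_proven_below_average(t: Teacher, z: float = 1.96) -> bool:
    """'Average until proven below average': deny only if the whole 95% CI is below zero."""
    upper_bound = t.estimate + z * t.std_error
    return upper_bound >= 0.0


def tenure_if_better_than_novice(t: Teacher, novice_mean: float) -> bool:
    """'Better than an average novice': deny if predicted effectiveness is below the novice mean.

    A tie goes to the incumbent, since only teachers *less* effective than the
    average novice are turned down.
    """
    return t.predicted >= novice_mean


# Hypothetical incumbent whose confidence interval still includes zero.
incumbent = Teacher(estimate=-0.10, std_error=0.08, predicted=-0.04)
print(tenure_if_not_proven_below_average(incumbent))  # True
print(tenure_if_better_than_novice(incumbent, -0.02))  # False
```

The two rules disagree for this teacher: the confidence interval (about -0.26 to 0.06) includes zero, so the first rule grants tenure, while the predicted effectiveness of -0.04 falls below the hypothetical novice mean of -0.02, so the second rule does not.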

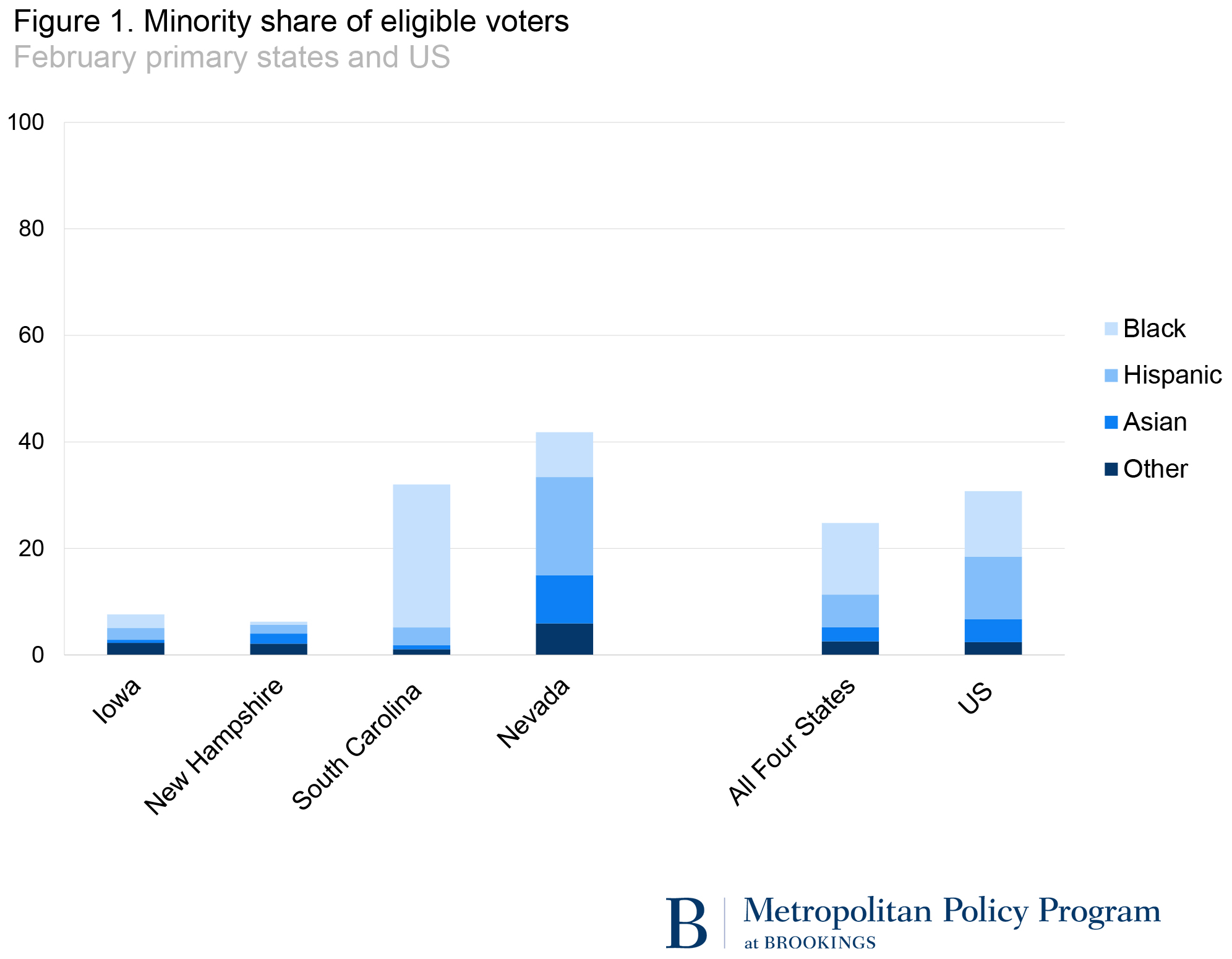

Douglas Staiger and I estimated value-added for teachers in three large urban school districts to explore the implications of these two criteria. We focused on the subset of teachers for whom we could calculate value-added for 6 or more years. The results are portrayed in the graph above. The horizontal axis reports the percentile of teachers’ value-added in a single year. The vertical axis reports the boundaries of the 95 percent confidence interval (in blue) and the 25th through 75th percentiles of teachers’ average value-added over their careers (in red).

Measures of the effectiveness of individual teachers are sufficiently “noisy” that only a few percent of teachers will have measured effectiveness “statistically significantly” different from average. As pictured in the graph above, the 95 percent confidence intervals include zero (the effectiveness of the average teacher) throughout most of the distribution. Zero is excluded only for a few percent of teachers at the top of the distribution and for a subset of teachers in the bottom percentile. As a result, under the “average until proven below average” criterion, only a subset of the bottom 1 percent of teachers would be denied tenure. More than 99 percent of all teachers would earn tenure.

The “better than an average novice” criterion would yield a very different result. We would turn down any teacher with predicted effectiveness less than that of the average novice teacher (portrayed by the horizontal line slightly below zero in the graph above). The threshold is crossed at the 25th percentile, where median value-added is equivalent to that of the average novice. For teachers below the 25th percentile, predicted effectiveness is below that of the average novice. Applying the “better than an average novice” criterion, therefore, we would turn down the bottom 25 percent of teachers and instead hire novice teachers to teach in their place.
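Why do the two rules turn down such different shares of teachers? A stylized normal model makes the mechanics visible. Assume, purely for illustration, that a teacher’s single-year value-added equals true effectiveness plus independent noise, both normally distributed, with a hypothetical reliability `r` (the share of single-year variance that is signal), and standardize single-year scores to have SD 1. The hypothesis-testing rule denies tenure when the upper confidence bound falls below zero; the novice rule denies tenure when the shrunken prediction `r * x` falls below the novice mean. Shrinking by `r` is the textbook best linear predictor under this model, not necessarily the method used in the study, and the inputs are made up rather than taken from the three districts.

```python
from math import sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal: mean 0, SD 1


def share_denied_by_test(reliability: float, z: float = 1.96) -> float:
    """Share of teachers whose whole confidence interval lies below zero.

    Single-year scores x have SD 1, so the noise SD is sqrt(1 - reliability).
    Denial requires x + z * noise_sd < 0.
    """
    noise_sd = sqrt(1.0 - reliability)
    return STD_NORMAL.cdf(-z * noise_sd)


def share_denied_by_novice_rule(reliability: float, novice_gap_sd: float) -> float:
    """Share of teachers whose predicted effectiveness falls below the average novice.

    The novice mean sits novice_gap_sd SDs of *true* effectiveness below zero.
    True effectiveness has SD sqrt(reliability) on the standardized scale.
    """
    novice_mean = -novice_gap_sd * sqrt(reliability)
    cutoff = novice_mean / reliability  # deny when reliability * x < novice_mean
    return STD_NORMAL.cdf(cutoff)


# Hypothetical inputs: reliability 0.4, novices 0.3 SD below the average teacher.
print(f"test rule denies   {share_denied_by_test(0.4):.1%}")
print(f"novice rule denies {share_denied_by_novice_rule(0.4, 0.3):.1%}")
```

Under these made-up inputs the test rule denies roughly 6 percent of teachers and the novice rule roughly 32 percent. The gap arises because the test rule demands that noise be ruled out, while the novice rule only asks which bet is better.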

But which criterion would yield better results for children? To compare the two rules, we focus on teachers within the “zone of disagreement,” the range of value-added where the two criteria produce different decisions. The zone extends from the 1st percentile through the 25th. As portrayed in the figure, teachers in this zone went on to produce very poor results for children. Even among those whose predicted effectiveness equaled that of the average novice (at the 25th percentile), 75 percent had career value-added worse than the average teacher, and teachers lower in the zone performed worse still. Among those who would have been turned down by the “better than an average novice” criterion but saved by the “average until proven below average” criterion, 86 percent ended up with average career value-added worse than the average teacher. The hypothesis testing paradigm would have us save the 14 percent of teachers who eventually performed above average, at the expense of the children taught by the 86 percent who were below average (often far below).
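The 86 percent figure is a conditional share: among teachers in the zone of disagreement, the fraction whose career value-added turned out below average. A Monte Carlo sketch shows how such a share is computed under a hypothetical normal model. It simulates true effects and noisy single-year scores, keeps the teachers the test rule would tenure but the novice rule would not, and counts how many have below-average true effectiveness. The reliability, the novice gap, normality, and the use of true effectiveness as a stand-in for career average value-added are all illustrative assumptions, so the result will not reproduce the authors’ 86 percent.

```python
import random
from math import sqrt


def zone_share_below_average(reliability, novice_gap_sd, n=100_000, z=1.96, seed=0):
    """Share of simulated zone-of-disagreement teachers with below-average true effects.

    Hypothetical model: true effect mu ~ N(0, reliability) and single-year
    score x = mu + noise with noise ~ N(0, 1 - reliability), so x has SD 1.
    """
    rng = random.Random(seed)  # fixed seed for reproducibility
    signal_sd = sqrt(reliability)
    noise_sd = sqrt(1.0 - reliability)
    novice_mean = -novice_gap_sd * signal_sd
    in_zone = below_average = 0
    for _ in range(n):
        mu = rng.gauss(0.0, signal_sd)
        x = mu + rng.gauss(0.0, noise_sd)
        saved_by_test = x + z * noise_sd >= 0.0          # CI still includes zero
        denied_by_novice = reliability * x < novice_mean  # shrunken prediction below novice
        if saved_by_test and denied_by_novice:
            in_zone += 1
            below_average += mu < 0.0
    if in_zone == 0:
        raise ValueError("no simulated teachers fell in the zone of disagreement")
    return below_average / in_zone


share = zone_share_below_average(0.4, 0.3)
print(f"{share:.0%} of simulated zone teachers are below average")
```

Even in this toy model, most teachers rescued by the hypothesis test are below average, because a single-year score in the zone is weak but real evidence about true effectiveness.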

There are many examples of decisions involving symmetric costs in education: Should a student choose College A or College B based on differences in college graduation rates? Should a principal hire Teacher C or Teacher D to teach fourth grade math based on a difference in value-added scores? When facing budget cuts, should a principal retain Teacher E or Teacher F based on differences in their past evaluation scores? In such cases, decision-makers would do well to choose the option with the better odds of success, even when those differences are not “statistically significant.”

References:

Baker, Eva L., Paul E. Barton, Linda Darling-Hammond, Edward Haertel, Helen F. Ladd, Robert L. Linn, Diane Ravitch, Richard Rothstein, Richard J. Shavelson, and Lorrie A. Shepard (2010). “Problems with the Use of Student Test Scores to Evaluate Teachers.” Economic Policy Institute Briefing Paper No. 27, August 2010.

Hill, Heather C. (2009). “Evaluating Value-Added Models: A Validity Argument Approach.” Journal of Policy Analysis and Management 28(4): 700–709.

Schochet, Peter Z., and Hanley S. Chiang (2010). “Error Rates in Measuring Teacher and School Performance Based on Student Test Score Gains” (NCEE 2010-4004). Washington, DC: National Center for Education Evaluation and Regional Assistance, Institute of Education Sciences, U.S. Department of Education.

Simon, Herbert A. (1945). “Statistical Tests as a Basis for ‘Yes-No’ Choices.” Journal of the American Statistical Association 40(229): 80–84.

The Brookings Institution is committed to quality, independence, and impact.

We are supported by a diverse array of funders. In line with our values and policies, each Brookings publication represents the sole views of its author(s).