Introduction

Often when anyone hears about the ethics of autonomous cars the first thing to enter the conversation is “the Trolley Problem.” The Trolley Problem is a thought experiment in which someone is presented with two situations that offer nominally similar choices and potential consequences (Foot 1967; Kamm 1989; Kamm 2007; Otsuka 2008; Parfit 2011; Thompson 1976; Thompson 1985; Unger 1996). In Situation A (known as Switch), a runaway trolley is heading down a track and will run into and kill five workmen unless an observer flips a switch and diverts the trolley down a sidetrack, where it will kill only one workman. In Situation B (known as Bridge), the observer is crossing a bridge, where she sees that the five people will be killed unless she pushes a rather large and plump individual off the bridge onto the tracks below, thereby stopping the trolley and saving the five. Most philosophers agree that it is morally permissible to kill the one in Switch, but others (including most laypeople) think that it is impermissible to push the plump person in Bridge (Kagan 1989). Both cases have the same effect: kill one to save the five. This discrepancy in intuition has led to much spilled ink over “the problem” and to an entire field of enquiry in “Trolleyology.”

Applied to autonomous cars, at first glance, the Trolley Problem seems like a natural fit. Indeed, it could be the sine qua non ethical issue for philosophers, lawyers, and engineers alike. However, if I am correct, the introduction of the Trolley is more like a death knell of any serious conversation about ethics and autonomous cars. The Trolley Problem detracts from understanding about how autonomous cars actually work, how they “think,” how much influence humans have over their decision-making processes, and the real ethical issues that face those advocating the advancement and deployment of autonomous cars in cities and towns the globe over. Instead of thinking about runaway trolleys and killing bystanders, we should have a better grounding in the technology itself. Once we have that, then we see how new—more complex and nuanced—ethical questions arise. Ones that look very little like trolleys.

I argue that we need to understand that autonomous vehicles (AVs) will be making sequential decisions in a dynamic environment under conditions of uncertainty. Once we understand that the car is not a human, and that the decision is not a single-shot, black and white one, but one that will be made at the intersection of multiple overlapping probability distributions, we will see that “judgments” about what courses of action to take are going to be not only computationally difficult, but highly context dependent and, perhaps, unknowable by a human engineer a priori. Once we can disabuse ourselves of thinking the problem is an aberrant one-off ethical dilemma, we can begin to interrogate the foreseeability of other types of ethical and social dilemmas.

This paper is organized into three parts. Part one argues that we should look at one promising area of robotic decision theory and control, Partially Observed Markov Decision Processes (POMDPs), as the most likely mathematical model an autonomous vehicle will use.1 After explaining what this model does and looks like, part two argues that the functioning of such systems does not comport with the assumptions of the Trolley Problem. This entails, then, that viewing ethical concerns about AVs from this perspective is incorrect and will blind us to more pressing concerns. Finally, part three argues that we need to interrogate what we decide are the objective and value functions of these systems. Moreover, we need to make transparent how we use the mathematical models to get the systems to learn and to make value trade-offs. For it seems absurd to place an AV as the bearer of a moral obligation not to kill anyone, but it is not absurd to interrogate the engineers who chose various objectives to guide their systems. I conclude with some observations on the types of ethical problems that will arise with AVs, none of which have the form of the Trolley.

I. It’s all about the POMDP

A Partially Observed Markov Decision Process (POMDP) is a variant of a Markov Decision Process (MDP). The MDP model is a useful mathematical model for various control and planning problems in engineering and computing. For instance, the MDP is useful in an environment that is fully observable, has discrete time intervals, and few choices of action in various conditions (Puterman 2005). We could think of an MDP model being useful in something like a game of chess or tic-tac-toe. The algorithm knows the environment fully (the board, pieces, rules) and waits for its opponent to make a move. Once that move is made, the algorithm can calculate all the potential moves it has in front of it, discounting for future moves and then taking the “best” or “optimal” decision to counter.

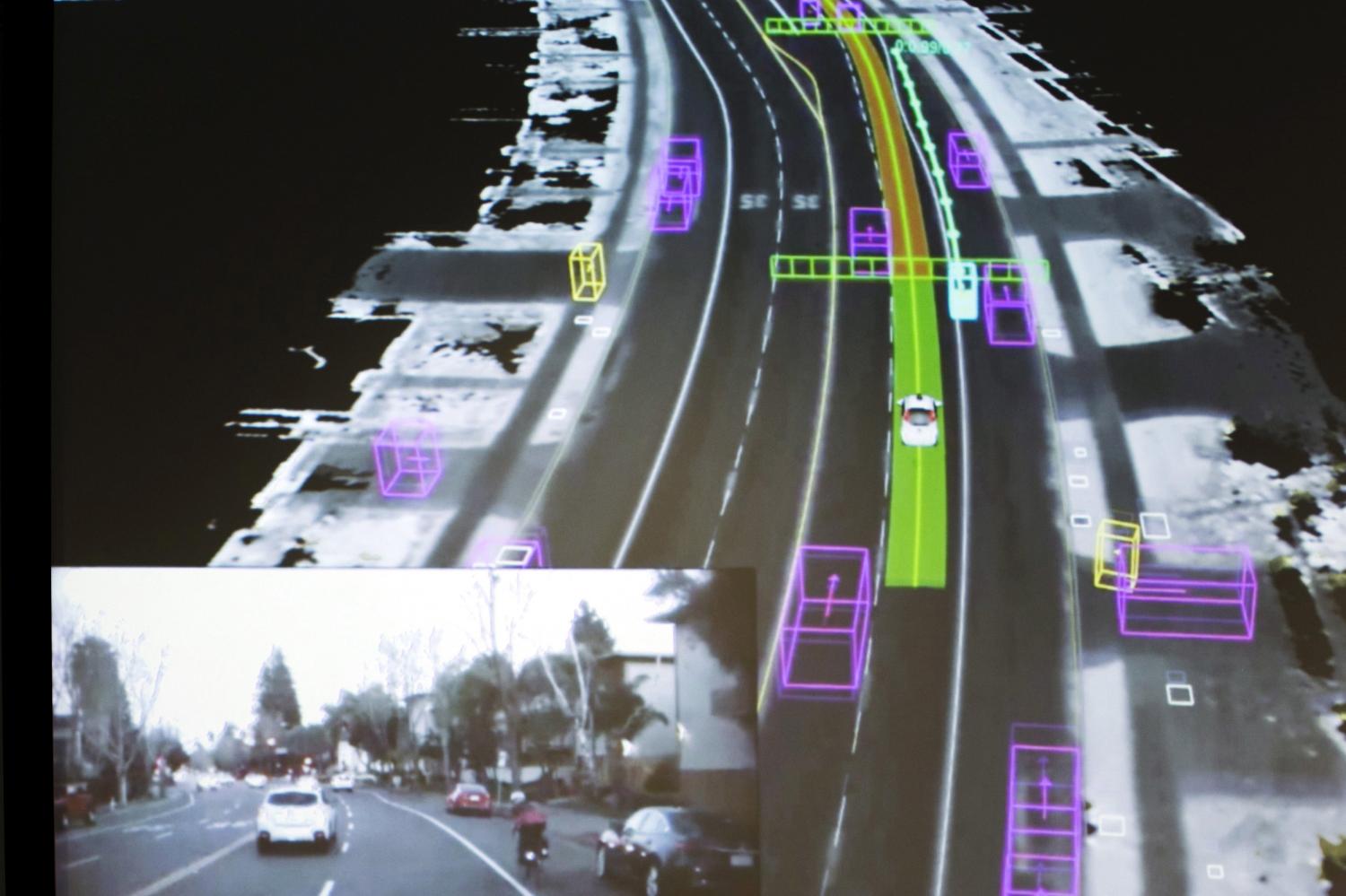
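To make the fully observable case concrete, the following is a minimal sketch of value iteration on a toy MDP. The two states, two actions, rewards, and the discount factor `gamma` are all hypothetical numbers chosen for illustration; nothing here is drawn from an actual AV system, and value iteration is only one standard way of solving a small MDP.

```python
# Hypothetical two-state, two-action MDP; every number is an illustrative assumption.
# T[s][a] is a list of (next_state, probability) pairs; R[s][a] is the immediate reward.
T = {
    0: {0: [(0, 1.0)], 1: [(1, 1.0)]},
    1: {0: [(0, 1.0)], 1: [(1, 1.0)]},
}
R = {
    0: {0: 0.0, 1: 1.0},
    1: {0: 0.0, 1: 2.0},
}


def q_value(T, R, V, s, a, gamma):
    """Immediate reward plus the discounted expected value of the next state."""
    return R[s][a] + gamma * sum(p * V[s2] for s2, p in T[s][a])


def value_iteration(T, R, gamma=0.9, tol=1e-9):
    """Repeat the Bellman backup until the values stop changing."""
    V = {s: 0.0 for s in T}
    while True:
        new_V = {s: max(q_value(T, R, V, s, a, gamma) for a in T[s]) for s in T}
        if max(abs(new_V[s] - V[s]) for s in T) < tol:
            return new_V
        V = new_V


def greedy_policy(T, R, V, gamma=0.9):
    """In each state, pick the action with the highest one-step lookahead value."""
    return {s: max(T[s], key=lambda a: q_value(T, R, V, s, a, gamma)) for s in T}


V = value_iteration(T, R)
policy = greedy_policy(T, R, V)
```

The point of the sketch is only that, when the state is known with certainty, the "best" action in each state can be computed directly from the model.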

Unfortunately, many real-world environments are not like tic-tac-toe or chess. Moreover, when we have robotic systems, like AVs, even with many sensors attached to them, the system itself cannot have complete knowledge of its environment. There is incomplete knowledge due to limitations in the range and fidelity of the sensors, occlusions, and latency (in the time it takes to process the sensor readings, the continuous, dynamic environment will have changed). Moreover, a robot in this situation makes a decision using the current observations as well as a history of previous actions and observations. In more precise terms, a system is measuring everything it can at a particular state (s), and the finite set of states S = {s1, …, sn} is the environment. When a system observes itself in s and takes an action a, it then transitions to a new state, s’, where it can take a further action, a2, giving the pair (s’, a2). The set of possible actions is A = {a1, …, ak}. Thus at any given point, a system is deciding which action to take based on its present state, its prior state (if there is one), and its expected future transitioned state. The crucial difference here is that a POMDP is working in an environment where the system (or agent) has incomplete knowledge and uncertainty, and is working from probabilities; in essence, an AV is not working from an MDP; it would more than likely be working from a POMDP.

How does a system know which action to take? There are a variety of potential ways, but I will focus on one here: reinforcement learning. For a POMDP using reinforcement learning, the autonomous vehicle learns through the receipt of some reward signal. Systems like this that use POMDPs have reward (or sometimes ‘cost’) signals that tell them which actions to pursue (or avoid). But these signals are based on probability distributions of which acts in the current state, s, will lead to more rewards in a future state, sn, discounted for future acts. Let’s take an example here to explain in easier terms. I may reason that I am tired but need to finish this paper (state), so I could decide to take a nap right now (an act in my possible set of actions), and then get up later to finish the paper. However, I also do not know if I will have restful sleep, oversleep, or feel worse when I get up, thereby frustrating my plans for my paper even more, though the thought of an immediate nap could give me an immediate reward (sleep and rest). The optimal decision (or, in POMDP speak, “policy”) could appear to be to sleep right now. However, that is not actually correct. Rather, the POMDP requires that one pick the optimal policy under conditions of uncertainty, for sequential decision tasks, and discounted for future reward states. In short, the optimal policy would be for me to instead grab a cup of coffee, finish my paper, and then go to bed early because I will actually maximize my total amount of sleep by not napping during the day and getting my work done quickly.
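The nap-versus-coffee reasoning can be sketched as a comparison of discounted returns. The reward sequences and the discount factor `gamma` below are hypothetical numbers invented for illustration, and the sketch deliberately leaves out the uncertainty about outcomes; it shows only how a larger immediate reward can lose to a plan with better later rewards.

```python
def discounted_return(rewards, gamma):
    """Sum of rewards, each weighted by gamma raised to its time step."""
    return sum((gamma ** t) * r for t, r in enumerate(rewards))


# Hypothetical reward sequences (illustrative numbers only).
nap_now = [3.0, -2.0, -2.0, 1.0]      # immediate rest, then a delayed paper and a late night
coffee_first = [-1.0, 2.0, 2.0, 4.0]  # no nap, paper finished, early night

gamma = 0.9  # assumed discount factor
nap_value = discounted_return(nap_now, gamma)
coffee_value = discounted_return(coffee_first, gamma)

# Under these assumed numbers the plan without the nap has the higher return.
best_plan = "coffee_first" if coffee_value > nap_value else "nap_now"
```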

Yet the learning really only takes place once a decision is made and there is feedback to the system. In essence, there is a requirement of some sort of system memory about past behavior and reward feedback. How robust this memory needs to be is specific to tasks and systems, but what we can say at a general level is that the POMDP needs to have a belief about its actions based on posterior observations and their corresponding distributions.2 In short, the belief “is a sufficient statistic of the history,” without the agent actually having to remember every single possible action and observation (Spaan 2012).
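One way to see why a belief can stand in for the whole history is the standard Bayesian belief update, sketched below. The two states, the single action `"wait"`, and the transition and observation probabilities are hypothetical values chosen for illustration, not parameters of any real vehicle.

```python
def update_belief(belief, action, observation, T, O):
    """Bayes filter: b'(s') is proportional to O[a][s'][o] * sum_s T[a][s][s'] * b(s)."""
    n = len(belief)
    unnormalized = [
        O[action][s2][observation]
        * sum(T[action][s][s2] * belief[s] for s in range(n))
        for s2 in range(n)
    ]
    total = sum(unnormalized)
    if total == 0.0:
        raise ValueError("observation has zero probability under the current belief")
    return [x / total for x in unnormalized]


# Hypothetical example: state 0 = "lane clear", state 1 = "lane blocked".
T = {"wait": [[0.9, 0.1], [0.2, 0.8]]}  # T[a][s][s']: transition probabilities
O = {"wait": [[0.8, 0.2], [0.3, 0.7]]}  # O[a][s'][o]: o = 0 "reads clear", o = 1 "reads blocked"

b0 = [0.5, 0.5]                          # initial belief: no idea which state holds
b1 = update_belief(b0, "wait", 1, T, O)  # the sensor reads "blocked"
```

The updated belief `b1` is all the agent needs to carry forward; the earlier actions and observations are summarized in it.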

Yet to build an adequate learning system, there is a need for many experiences to build robust beliefs. In my case of fatigue and academic writing, if I did not have a long history of experiences with writing academic papers and fatigue, one may think that whatever decision I take is at best considered a random one (that is, it’s 50/50 as to whether I act on my best policy). Yet, since I have a very long history of academic paper writing and fatigue, as well as the concomitant rewards and costs of prior decisions, I can accurately predict which action will actually maximize my reward. I know my best policy. This is because policies, generally, map beliefs to actions (Sutton and Barto 1998; Spaan 2012).3 Yet mathematically, what we really mean is that a policy, π, is a continuous set of probability distributions over the entire set of states (S). And an optimal policy is that policy that maximizes my rewards.

That function then becomes a value function, that is, a function of how an agent’s action at its initial belief state (b0), populates its expected reward return once it receives feedback and updates its beliefs about various states and observations, and thus it improves on its policy. Then it continues this pattern, again and again and again, until it can begin to better predict what acts will maximize its reward. Ostensibly, this structure permits a system to learn how to act in a world that is uncertain, noisy, messy, and not fully observable. The role of the engineer is to define the task or objective in such a way that the artificial agent following a POMDP model can take a series of actions and learn which ones correspond with the correct observations about its state of the world and act accordingly, despite uncertainty.
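In standard notation, this value function can be written as follows. The symbols are assumptions introduced here for illustration: $\pi$ is the policy, $\gamma \in [0, 1)$ is a discount factor, $b_t$ is the belief at step $t$, and $R(s, a)$ is the reward for taking action $a$ in state $s$.

```latex
% Expected reward of acting on belief b
R(b, a) = \sum_{s \in S} b(s)\, R(s, a)

% Value of following policy \pi from the initial belief b_0
V^{\pi}(b_0) = \mathbb{E}\!\left[ \sum_{t=0}^{\infty} \gamma^{t}\, R\big(b_t, \pi(b_t)\big) \,\middle|\, b_0 \right]

% An optimal policy maximizes that value
\pi^{*} = \arg\max_{\pi} V^{\pi}(b_0)
```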

The AV world will undoubtedly make greater use of POMDPs in its software architectures. While there is a myriad of computational techniques to choose from, probabilistic ones like POMDPs have proven themselves amongst the leading candidates to build and field autonomous robots. As Thrun (2000) explains, “the probabilistic approach […] is the idea of representing information through probability densities” around the areas of perception and control, and “probabilistic robotics has led to fielded systems with unprecedented levels of autonomy and robustness.” For instance, Cunningham et al. recently used POMDPs to create a multi-policy decision-making process for autonomous driving that estimated when to pass a slower vehicle as well as how to merge into traffic, taking into account driving preferences such as reaching goals quickly and rider comfort (Cunningham et al. 2015).

It is well known, however, that POMDPs are computationally inefficient and that as the complexity of a problem increases, some problems may be intractable. To account for this, most researchers make certain assumptions about the world and the mathematics to make the problems computable, or they use heuristic approximations to ease policy searches (Hausrecht 2000). Yet when one approximates, in any sense, there is no guarantee that a system will act in a precisely optimal manner. However we manipulate the mathematics, we pay a cost in one domain or another: either computationally or for the pursuit of the best outcomes.

The important thing to note in all of this discussion is that any AV that is running on a probabilistic method like a set or architecture of POMDPs is going to be doing two things. First, it is not making “decisions” with complete knowledge, such as in the Trolley case. Rather, it is choosing a probability of some act changing a state of affairs. In essence, decisions are made in dynamic conditions of uncertainty. Second, this means that for AVs controlled by a learning system to operate effectively, substantial amounts of “episodes” or training in various environments under various similar and different situations are required for them to make “good” decisions when they are fielded on a road. That is, they need to be able to draw from a very rich history of interactions to extrapolate out from them in a forward-looking and predictive way. However, since they are learning systems, there is no guarantee that something completely novel and unforeseen will not confuse an AV or cause it to act in unforeseeable ways. In the case of the Trolley, we can immediately begin to see the differences between how a bystander may reason and how an AV does.

II. The follies of trolleys

Patrick Lin (2017) defends the use of the Trolley Problem as an “intuition pump” to get us to think about what sorts of principles we ought to be programming into AVs. He argues that using thought experiments like this “isolates and stress-tests a couple of assumptions about how driverless cars should handle unavoidable crashes, as rare as they might be. It teases out the questions of (1) whether numbers matter and (2) whether killing is worse than letting die.” Additionally, he notes that because driverless cars are the creations of humans over time, “programmers and designers of automated cars […] do have the time to get it right and therefore bear more responsibility for bad outcomes,” thereby bootstrapping some resolution to whether there was sufficient intentionality for the act to be judged as morally right or wrong.

While I agree with Lin’s assessment that many cases in philosophy are not designed for real-world scenarios, but to isolate and press upon our intuitions, this does not mean that they are well suited for all purposes. As Peter Singer notes, reducing “philosophy…to the level of solving the chess puzzle” is rather unhelpful, for “there are things that are more important” (Singer 2010). We need to take special care to see the asymmetries between cases like the Trolley Problem and algorithms that are not moral agents but make morally important decisions. The first and easiest way to see this is to acknowledge that an AV utilizing something like a POMDP in a dynamic environment is not making a decision at one point in time but is making sequential decisions. It is making a choice based on a set of probability distributions about what act will give it the highest reward function (or minimize the most cost) based upon prior knowledge, present observations and likely future states. Unlike the Trolley cases, where there is one decision to make at one point in time, this is not how autonomous cars actually operate.

Second, and more bluntly, we’d have to model trolley-like problems in a variety of situations, and train the system in those situations (or episodes) hundreds, maybe thousands, of times to get it to learn what to do in that instance. It would not just magically make the “right” decision in that instance because the math and the prior set of observations would not, in fact could not, permit it to do so. We actually have to pre-specify what “right” is for it to learn what to do. This is because the types of algorithms we use are optimizing by their nature. They seek the optimal strategy to maximize their reward function, and this learning, by the way, means that the system needs to make many mistakes.4 For instance, one set of researchers at Carnegie Mellon University refused to use simulations to teach an autonomous aerial vehicle to fly and navigate. Rather, they allowed it to crash, over 11,500 times, to learn simple self-supervised policies to navigate (Gandhi, Pinto and Gupta 2017). Indeed this learning-by-doing is exactly what much of the testing of self-driving cars in real road conditions is also directed towards: real-life learning and not mere simulations. Yet we are not asking the cars to go crashing into people or to choose whether it is better to kill five men or five pregnant women.5

Moreover, even if one decided to simulate these Trolley cases again and again, and diversify them to some sort of sufficient degree, we must acknowledge the simple but strict point that unless one already knows the right answer, the math cannot help. Also, after more than 2,000 years, I am hard pressed to find philosophers who all agree on the one way of living and the correct moral code, or even on what to do in the Trolley Problem.6 What is even worse is that if we take the opinion that our intuitions ought to guide us in finding data for these moral dilemmas, we will not, in fact, find reliable data. This can easily be seen with two simple examples that show how people do not in fact act consistently: the Allais Paradox and the Ellsberg Paradox. Both of these paradoxes challenge the basic axioms that Von Neumann and Morgenstern (1944) posited for their theory of expected utility. Expected utility theory basically states that people will choose an outcome based on whether that outcome’s expected utility is higher than all other potential outcomes. In short, it means people are utility maximizers.7 In the Allais Paradox, we find that in a given experiment people fail to actually act consistently to maximize their utility (or achieve preference satisfaction) and thus they violate the substitution axiom of the theory (Allais 1953). In the Ellsberg Paradox, people end up choosing when they cannot actually infer the probabilities that will maximize their preferences, thus violating the axioms of completeness and monotonicity (Ellsberg 1961).

One may object here and claim that utilitarianism is not the only moral theory, and that we do not in fact want utility maximizing self-driving cars. We’d rather have cars that respect rights and lives, more akin to a virtue ethics or deontological approach to ethics. But if that is so, then we have done away with the need for Trolley Problems at the outset. It is impermissible to kill anyone if that is true, despite the numbers. Or we merely state a priori that lesser evil justifications win the day, and thus in extremis we have averted the problem (Kamm 2007; Frowe 2015). Or, if we grant that self-driving cars, relying on a mathematical descendant of classic act utilitarianism, end up calculating as an act utilitarian would, then there appears to be no problem: the numbers win the day. Wait, wait, one responds, this is all too quick. Clearly we feel that self-driving cars ought not to kill anyone, the Trolley Problem stands, and they still might find themselves in situations where they have no choice but to kill someone, so who ought it to be?

Here again, I note that we are stuck in an uncomfortable position vis-à-vis the need for data and training versus the need to know what morality dictates for us as true: do we want to model moral dilemmas or do we want to solve them? If it is the former, we can do this indefinitely. We can model moral dilemmas and ask people to partake in experiments, but that only tells us the empirical reality of what those people think. And that may be a significantly different answer than what morality dictates one ought to do. If it is the latter, I am still extremely skeptical that this is the right framework for discussing the ethical quandaries that arise with self-driving cars. Perhaps the Trolley Problem is nothing more than an unsolvable distraction from the question of safety thresholds and other types of ethical questions regarding the second or third order effects of automotive automation in society.8

Indeed, if I am correct, then the entire set up of a moral dilemma for a non-moral agent to “choose” the best action is a false choice because there is no one choice that any engineer could foreseeably plan for. What is more, even if the engineer were to exhibit enough foresight and build a learning system that could pick up on subtle clues from interactions with the environment and mass amounts of data, this assumes that we have actually figured out which action is the right one to take! We’ve classified data as “good” or “bad” and fed that to a system. Yet we haven’t as human moral agents decided this at all, for there is debate in each situation about what one ought to do, as well as uncertainty. Trolley Problems are constructed in such a way where the agent has one choice, and knows with certainty what will happen should she make that choice. Moreover, that choice is constructed as a dilemma: it appears that no matter what choice she makes, she will end up doing some form of wrong. Under real-world driving conditions, this will rarely in fact be the case. And if we attempt to find a solution through the available technological means, all we have done is to show a huge amount of data to the system and have it optimize its behavior for the task it has been assigned to complete. If viewed in this way, modeling moral dilemmas as tasks and optimization appears morally repugnant.

More importantly for our purposes here, we must be explicitly clear that AI is not human. Even if an AI were a moral agent (and we agreed on what that looked like), the anthropomorphism presumed in the AV Trolley case is actually blinding us to some of the real dangers. For in classic moral philosophy Trolley cases, we assume from the outset that: (i) there is a moral agent confronted with the choice; (ii) this moral agent is self-aware with a history of acting in the world, understands concepts, and possesses enough intelligence to contextually identify when trifling constraints are trumped by significant moral ones; and (iii) this intelligence can, in some sense, balance or measure seemingly (or truly) incommensurable goods and conflicting obligations. Moreover, as Barbara Fried (2012) summarizes about the structure of Trolley Problems:

The hypotheticals typically share a number of features beyond the basic dilemma of third party harm/harm tradeoffs. These include the consequences of the available choices are stipulated to be known with certainty ex ante; that the actors are all individuals (as opposed to institutions); that the would-be victims (of the harm we impose by our actions or allow to occur by our inaction) are generally identifiable individuals in close proximity to the would-be actor(s); and that the causal chain between act and harm is fairly direct and apparent. In addition, actors usually face a one-off decision about how to act. That is to say, readers are typically not invited to consider the consequences of scaling up the moral principle by which the immediate dilemma is resolved to a large number of (or large-number) cases.

Yet not only are all the attributes noted above well beyond the present-day capabilities of any AI system, the situation in which an AV operates fails to comport with any and all of the assumptions in trolley-like cases (Roff 2017). There is a disjuncture between saying that humans will “program” the AV to make the “correct” moral choice, thereby bootstrapping the Trolley case to AVs, and claiming that an AV is a learning automaton that is sufficiently capable of making morally important decisions on the order of the Trolley Problem. Moreover, we cannot just throw up our hands and ask what the best consequences will render, for in that case there is no real “problem” at issue: if one is a consequentialist, save the five over the one, no questions asked.

It is unhelpful to continue to focus on, and to insist that, the Trolley Problem exhausts the moral landscape for ethical questions with regard to AVs and their deployment. All AIs can do is to bring into relief existing tensions in our everyday lives that we tend to assume away. This may be due to our human limitations in seeing underlying structures and social systems because we cannot take in such large amounts of data. AI, however, is able to find novel patterns in large data and plan based on that data. This data may reflect our biases, or it may simply be an aggregation of whatever situations the AV has encountered. The only thing AVs require of humans is to make explicit what tasks we require of them and what the rewards and objectives are; we do not require the AV to tell us that these are our goals, rewards and objectives. Unfortunately, this distinction is not something that is often made explicit.

Rather, the debate often oscillates between whether the human agents ought to “program” the right answer, or whether the learning system can in fact arrive at the morally correct answer. If it is the former, we must admit it ignores the fact that a learning system does not operate in such straightforward terms. It is a learning system that will be constrained by its sensors, its experience, and the various architectures and sub-architectures of its system. But it will be acting in real time, away from its developers, and in a wide and dynamic environment, and so the human(s) responsible for its behavior will have, at best, mediated and distanced (if any) responsibility for that system’s behaviors (Matthais 2004).

If it is the latter, I have attempted to show here that the learning AV does not possess the types of qualities relevant for the aberrant Trolley case. Even if we were to train it on large amounts and variants of the Trolley case, there will always be situations that can arise that may not produce the decision estimated or intended by the human. This is simple mathematics. For one can only make generalizations about behaviors, particularly messy human behaviors that may give rise to AV Trolley-like cases, when there is a significantly large dataset (we call this the law of large numbers). Unfortunately, this means that there is no way to know what one individual data point (or driver) will do in any given circumstance. And the Trolley case is always the unusual individual outlier. So, while there is value to be found in thinking through the moral questions related to Trolley-like cases, there are also limits to it, particularly with regard to decision weights, policies and moral uncertainty.

III. The value functions

If we agree that the Trolley Problem offers little guidance on the wider social issues at hand, particularly the value of a massive change and scientific research, then we can begin to acknowledge the wide-ranging issues that society will face with autonomous cars. As Kate Crawford and Ryan Calo (2016) explain, “autonomous systems are [already] changing workplaces, streets and schools. We need to ensure that those changes are beneficial, before they are built further into the infrastructure of everyday life.” In short, we need to identify the values that we want to actualize through the engineering, design and deployment of technologies, like self-driving cars. There is thus a double entendre at work here: we know that the software running these systems will be trying to maximize their value functions, but we also need to ensure that they are maximizing society’s too.

So what are the values that we want to maximize with autonomous cars? Most obviously, we want cars to be better drivers than people. With over 5.5 million crashes per year and over 30,000 deaths in the U.S. alone, safety appears to be the primary motivation for automating driving. Over 40 percent of fatal crashes involve “some combination of alcohol, distraction, drug involvement and/or fatigue” (Fagnant and Kockelman 2015). That means that if everyone were using self-driving vehicles, at least in the U.S., there could be a reduction of at least 12,000 fatalities per year. Ostensibly, saving life is a paramount value.9

But exactly how this occurs, as well as the attendant effects of policies, infrastructure choices, and technological development are all value loaded endeavors. There is not simply an innovative technological “fix” here. We cannot “encode” ethics and wash our hands of it. The innovation, rather, needs to come from the intersection of humanities, social sciences, and policy, working alongside engineering. This is because the values that we want to uphold must first be identified, contested, and understood. Richard Feynman famously said, “I cannot create that which I do not understand.” Meaning, we cannot create, or perhaps better, recreate those things of which we are ignorant.

Indeed, I would go so far as to push Crawford and Calo in their use of the word “infrastructure” and suggest that it is in fact the normative infrastructure that is of greatest importance. Normative here has two meanings that we ought to keep in mind: (i) the philosophical or moral “ought;” and (ii) the Foucauldian “normalization” approach that identifies norms as those concepts or values that seek to control and judge our behavior (Foucault 1975). These are two very different notions of “normative,” but both are crucially important for the identification of value and the creation of value functions for autonomous technologies.

From the moral perspective, one must be able to identify all those moral values that ought to be operationalized in not merely the autonomous vehicle system, but the adjudication methods that one will use when these values come into conflict. This is not, as some might think, a return to the Trolley Problem. Rather, it is a technological value choice on how one decides to design a system to select a course of action. In multi-objective learning systems, there often occur situations where objectives (that is, tasks or behaviors to accomplish) conflict with one another, are correlated, or are even endogenous. The engineer must design a way of prioritizing particular objectives or creating a system for tradeoffs, such as whether to conserve energy or to maintain comfort (Moffaert and Nowé 2014).10 How they do so is a matter of mathematics, but it is also a choice about whether they are privileging particular kinds of mathematics that in turn privilege particular kinds of behaviors (such as satisficing).
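To illustrate how such a trade-off becomes an explicit design choice, the sketch below uses a weighted sum (linear scalarization), which is only one simple technique among several and is assumed here for illustration rather than attributed to any cited work. The candidate actions, objective scores, and weights are all hypothetical.

```python
def scalarize(objectives, weights):
    """Collapse several objective scores into one number using fixed weights."""
    return sum(weights[name] * value for name, value in objectives.items())


def choose_action(candidates, weights):
    """Pick the candidate action whose weighted score is highest."""
    return max(candidates, key=lambda a: scalarize(candidates[a], weights))


# Hypothetical per-action scores on three objectives (higher is better).
candidates = {
    "accelerate_smoothly": {"energy_saved": 0.8, "comfort": 0.9, "speed": 0.3},
    "accelerate_hard": {"energy_saved": 0.2, "comfort": 0.4, "speed": 0.9},
}

# Two hypothetical weightings an engineer might choose between.
comfort_first = {"energy_saved": 0.3, "comfort": 0.6, "speed": 0.1}
speed_first = {"energy_saved": 0.1, "comfort": 0.1, "speed": 0.8}

choice_a = choose_action(candidates, comfort_first)
choice_b = choose_action(candidates, speed_first)
```

The same candidate actions yield different behavior under the two weightings, which is the sense in which the weights themselves encode a value judgment made by the designer.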

Additionally, shifting focus away from tragic and rare events like Trolley cases allows us to open up more systemic and “garden variety” problems that we need to consider for reducing harm and ensuring safety. As Allen Wood (2011) argues, most people would never have to face a Trolley case if there were safer trolleys, no access to switches for passersby, and good signage to “prevent anyone from being in places where they might be killed or injured by a runaway train or trolley.” In short, we need to think about use, design, and interaction in the daily experience of consumers, users, or bystanders of the technology. We must understand how AVs could change the design, layout and make-up of cities and towns, and what effects those may have on everything from access to basic resources to increasing forms of inequality.

From the Foucauldian perspective, things become slightly more interesting, and this is where I think many of the ethical concerns begin to come into view. The norms that govern how we act, the assumptions we make about the appropriateness of the actions or behaviors of others, and the value that we assign to those judgments are here a matter of empirical assessment (Foucault 1971; Foucault 1975). For instance, public opinion surveys are conduits telling us what people “think” about something. Less obvious, however, are the ways in which we subtly adjust our behavior, without speaking or, in some instances, even thinking, in response to cultural and societal cues. These are the kinds of norms Foucault is concerned about. These kinds of norms are the ones that show up in large datasets, in biases, in “patterns of life.” And it is these kinds of norms, which are the hardest ones to identify, that are the stickiest ones to change.

How this matters for autonomous vehicles lies in the assumptions that engineers make about human behavior, human values, or even what “appropriate” looks like. From a value-sensitive design (VSD) standpoint, one may consider not only the question of lethal harm to passengers or bystanders, but also a myriad of values like privacy, security, trust, civil and political rights, emotional well-being, environmental sustainability, beauty, social capital, fairness, and democratic value. For VSD seeks to encapsulate not only the conceptual aspects of the values a particular technology will bring (or affect), but also how “technological properties and underlying mechanisms support or hinder human values” (Friedman, Kahn, Borning 2001).

But one will note that in all of these choices, Trolley Problems have no place. For instance, many of the social and ethical implications of AVs can be extremely subtle or simply obvious. Note the idea recently circulated by the firm Deloitte: AVs will impact retail and goods delivery services (Deloitte 2017). As it argues, retailers will attempt to use AVs to increase catchment areas, provide higher levels of customer service by sending cars to customers, cut down on delivery time, or act as “neighborhood centers” akin to a mobile corner store that delivers goods to one’s home. In essence, retailers can better cater to their customers and “nondrivers are not ‘forced’ to take the bus, subway, train or bike anymore […] and this will impact footfall and therefore (convenience) stores” (Deloitte 2017).

Yet this foreseen benefit from AVs may only apply to already affluent individuals living in reasonable proximity to affluent retail outlets. It certainly will struggle to find economic incentives in “food deserts” where low-income individuals without access to transport live at increasingly difficult distances from supermarkets or grocery stores (Economic Research Service U.S. Department of Agriculture 2017). That these individuals currently do not possess transport and suffer from a lack of access to fresh foods and vegetables does not bode well for their ability to pay for automation and delivery, or to pay perhaps increased prices for the luxury of being ferried to and fro. This may in fact have more deleterious effects on poverty and the widening gap between the rich and poor, increasing rather than decreasing the areas now considered “food deserts.”

To be sure, there is much speculation about how AVs will actually provide net benefits for society. Many reports, from a variety of perspectives, estimate that AVs will ensure that all the parking garages are turned into beautiful parks and garden spaces (Marshall 2017), and the elderly, disabled and vulnerable have access to safe and reliable transport (Madia 2017, Anderson 2014, West 2016, Bertoncello and Wee 2015, UK House of Lords 2017). But less attention appears to be paid to how the present limitations of the technology will require substantial reformulation to urban planning, infrastructure, and the lives and communities of those around (and absent from) AVs. Hyper-loops for AVs, for example, may require pedestrian overpasses, or, as one researcher suggests, “electric fences,” to keep pedestrians from crossing at the street level (Scheider 2017). Others suggest that increased adoption of AVs will need to be cost and environmentally beneficial, so they will need to be communal and operated in larger ride shares (Small 2017). If this is so, then questions about the presence of surveillance and intervention for potential crimes, harassment, or other offensive behavior would seem to arise.

All of these seemingly small side or indirect effects of AVs will normalize usage, engender rules of behavior, systems of power, and place particular values over others. In the Foucauldian sense, the adoption and deployment of the AV will begin to change the organization and construction of “collective infrastructure” and this will require some form of governmental rationality—an imposition of structures of power—on society (Foucault 1982). For this sort of urban planning, merely to permit the greater adoption of AVs is a political choice; it will enable a “certain allocation of people in space, a canalization of their circulation, as well as the coding of their reciprocal relations” (Foucault 1982). Thus making these types of decisions transparent and apparent to the designers and engineers of AVs will help them to see the assumptions that they make about the world and what they and others value in it.

Conclusion

Ethics is all around us because it is a practical activity for human behavior. From all of the decisions that humans make, from the mundane to the morally important, there are values that affect and shape our common world. If viewed from this perspective, humans are constantly engaging in a sequential decision-making problem, trading off values all the time—not making one-off decisions intermittently. As I have tried to argue here, thinking about ethics in this one-shot problem, extremely tragic case scenario, is unhelpful at best. It distracts us from identifying the real safety problems and value tradeoffs we need to be considering with the adoption of new technologies throughout society.

In the case of AVs, we ought to consider how the technology actually works, how the machine actually makes decisions. Once we do this, we see that the application of Trolleyology to this problem is not only a distraction, it is a fallacy. We aren’t looking at the tradeoffs correctly, for we have multiple competing values that may be incommensurable. It is not whether a car ought to kill one to save five, but how the introduction of the technology will shape and change the rights, lives, and benefits of all those around it. Thus, the set-up of a Trolley Problem for AVs ought to be considered a red herring for anyone considering the ethical implications of autonomous vehicles, or even AI generally, because the aggregation of goods/harms in Trolley cases doesn’t travel to the real world in that way. They fail to scale, they are incommensurate, they are ridden with uncertainty and causality is fairly tricky when we want to consider second- and third-order effects. Thus, if we want to get serious about ethics and AVs, we need to flip the switch on this case.

Acknowledgements

I owe special thanks to Patrick Lin, Adam Henschke, Ryan Jenkins, Kate Crawford, Ryan Calo, Sean Legassick, Iason Gabriel and Raia Hadsell. Thank you all for your keen minds, insights, and feedback.

Reference List

Allais, Par M. (1953). “Le Comportement de L’Homme Rationnel Devant Le Risque: Critique Des Postulats et Axiomes De L’Ecole Americaine” Econometrica, 21(4): 503-546.

Anderson, James M. (2014). “Self-Driving Vehicles Offer Potential Benefits, Policy Challenges for Lawmakers” Rand Corporation, Santa Monica, CA. Available online at: https://www.rand.org/news/press/2014/01/06.html. Accessed 17 January 2018.

Bertoncello, Michele and Dominik Wee. (2015). “Ten Ways Autonomous Driving Could Redefine the Automotive World” McKinsey & Company, June. Available online at: https://www.mckinsey.com/industries/automotive-and-assembly/our-insights/ten-ways-autonomous-driving-could-redefine-the-automotive-world. Accessed 15 January 2018.

Crawford, Kate and Ryan Calo. (2016). “There is a Blind Spot in AI Research” Nature, Vol. 538, October. Available online at: https://www.nature.com/polopoly_fs/1.20805!/menu/main/topColumns/topLeftColumn/pdf/538311a.pdf. Accessed 15 January 2018.

Cunningham, Alexander G., Enric Galceran, Ryan Eustice, and Edwin Olson. (2015). “MPDM: Multipolicy Decision-Making in Dynamic, Uncertain Environments for Autonomous Driving” IEEE International Conference on Robotics and Automation (ICRA), Seattle, USA.

Economic Research Service (ERS), U.S. Department of Agriculture (USDA). (2017). Food Access Research Atlas, Available online at: https://www.ers.usda.gov/data-products/food-access-research-atlas/. Accessed 17 January 2018.

Ellsberg, Daniel. (1961). “Risk, Ambiguity, and the Savage Axioms” The Quarterly Journal of Economics, 75(4): 643-669.

Fagnant, Daniel J. and Kara Kockelman. (2015). “Preparing a Nation for Autonomous Vehicles: Opportunities, Barriers and Policy Recommendations.” Transportation Research Part A, Vol. 77: 167-181.

Foot, Philippa. (1967). “The Problem of Abortion and the Doctrine of Double Effect” Oxford Review, Vol. 5: 5-15.

Foucault, Michel. (1971). The Archaeology of Knowledge and the Discourse on Language, (Trans.) A. M. Sheridan Smith, (1972 edition), New York, NY: Pantheon Books.

——. (1975). Discipline & Punish: The Birth of the Prison (1977 edition), New York, NY: Vintage Books.

——. (1982). “Interview with Michel Foucault on ‘Space, Knowledge, and Power’” from Skyline, in (Ed.) Paul Rabinow, The Foucault Reader (1984), New York, NY: Pantheon Books.

Fried, Barbara. (2012). “What Does Matter? The Case for Killing the Trolley Problem (Or Letting it Die)” The Philosophical Quarterly, 62(248): 506.

Frowe, Helen. (2015). “Claim Rights, Duties, and Lesser Evil Justifications” The Aristotelian Society, 89(1): 267-285.

Gandhi, Dhiraj, Lerrel Pinto, and Abhinav Gupta. (2017). “Learning to Fly by Crashing” Available online at: https://arxiv.org/pdf/1704.05588.pdf. Accessed 18 January 2018.

Goldman, A.I. (1979). “What is Justified Belief?” in (Ed.) G.S. Pappas, Justification and Knowledge, Philosophical Studies Series in Philosophy, Vol. 17, Dordrecht: Springer.

Hausrecht, Milos. (2000) “Value-Function Approximations for Partially Observable Markov Decision Processes” Journal of Artificial Intelligence Research, Vol. 13: 33-94.

Kagan, Shelly. (1989). The Limits of Morality, Oxford: Oxford University Press.

Kamm, Frances. (1989). “Harming Some to Save Others” Philosophical Studies, Vol. 57: 227-260.

——. (2007). Intricate Ethics: Rights, Responsibilities, and Permissible Harm, Oxford: Oxford University Press.

Lin, Patrick. (2017). “Robot Cars and Fake Ethical Dilemmas” Forbes Magazine, 3, April. Available online at: https://www.forbes.com/sites/patricklin/2017/04/03/robot-cars-and-fake-ethical-dilemmas/#3bdf4f2413a2. Accessed 12 January 2018.

Madia, Eric. (2017). “How Autonomous Cars and Buses Will Change Urban Planning (Industry Perspective)” Future Structure, 24 May. Available online at: http://www.govtech.com/fs/perspectives/how-autonomous-cars-buses-will-change-urban-planning-industry-perspective.html. Accessed 16 January 2018.

Marshall, Aarian. (2017). “How to Design Streets for Humans—and Self-Driving Cars” Wired Magazine, 30 October. Available online at: https://www.wired.com/story/nacto-streets-self-driving-cars/. Accessed 22 November 2017.

Otsuka, Michael. (2008). “Double Effect, Triple Effect and the Trolley Problem: Squaring the Circle in Looping Cases” Utilitas, 20(1): 92-110.

Parfit, Derek. (2011). On What Matters, Vol. 1 and 2. Oxford: Oxford University Press.

Puterman, Martin L. (2005). Markov Decision Processes: Discrete Stochastic Dynamic Programming, London: John Wiley & Sons.

Roff, Heather M. (2017). “How Understanding Animals Can Help Us to Make the Most out of Artificial Intelligence” The Conversation, 30 March. Available online at: https://theconversation.com/how-understanding-animals-can-help-us-make-the-most-of-artificial-intelligence-74742. Accessed 12 December 2017.

Science and Technology Select Committee, United Kingdom House of Lords (2016-2017). “Connected and Autonomous Vehicles: The Future?” Government of the United Kingdom. Available online at: https://publications.parliament.uk/pa/ld201617/ldselect/ldsctech/115/115.pdf. Accessed 15 January 2018.

Singer, Peter. (2010). Interview in Philosophy Bites, (Eds.) Edmonds, D. and N. Warburton, Oxford: Oxford University Press.

Small, Andrew. (2017). “The Self-Driving Dilemma” CityLab Available online at: https://www.citylab.com/transportation/2017/05/the-self-driving-dilemma/525171/. Accessed 15 January 2018.

Spaan, Matthijs. (2012). “Partially Observable Markov Decision Processes” in (Eds). M.A. Wiering and M. van Otterlo, Reinforcement Learning: State of the Art, London: Springer Verlag.

Sutton, Richard and Andrew Barto. (1998). Reinforcement Learning: An Introduction, Cambridge: MIT Press.

Thompson, Judith Jarvis. (1976). “Killing, Letting Die and the Trolley Problem” The Monist, 59(2): 204-217.

——. (1985). “The Trolley Problem” The Yale Law Journal, 95(6): 1395-1415.

Thrun, Sebastian. (2000). “Probabilistic Algorithms in Robotics” AI Magazine, 21(4).

Tuinder, Marike. (2017). “The Impact on Retail” Deloitte.

Unger, Peter. (1996). Living High and Letting Die, Oxford: Oxford University Press.

Van Moffaert, Kristof and Ann Nowé. (2014). “Multi-Objective Reinforcement Learning Using Sets of Pareto Dominating Policies” Journal of Machine Learning Research, Vol. 15: 3663-3692.

Von Neumann, John and Oskar Morgenstern. (1944). Theory of Games and Economic Behavior, Princeton, NJ: Princeton University Press.

West, Darrell M. (2016). “Securing the Future of Driverless Cars” Brookings Institution, 29 September Available online at: https://www.brookings.edu/research/securing-the-future-of-driverless-cars/. Accessed 15 January 2018.

Wood, Allen. (2011). “Humanity as an End in Itself” in (Ed.) Derek Parfit, On What Matters, Vol. 2, Oxford: Oxford University Press.

Footnotes

- There are of course many machine learning techniques one could use, and for the sake of brevity I am simplifying various artificial intelligence techniques, like deep learning, and other technical details to focus on a classic case of the POMDP. In no way is this meant to be a panacea.

- There are techniques for learning memoryless policies. However, it is uncertain how well these would scale, particularly in the AV case. Of course, the word belief is anthropomorphic in this sense, but the system will have a memory of past actions and some estimation of likely future outcomes. We can liken this to Goldman’s explanation of a “belief-forming-process” (Goldman 1979).

- We might also just want to call belief the relevant information in the state the agent finds itself in, so the belief is the state/action pair with the memory of prior state/action pairs.

- One could object and say that the model could be specified in an expert system. However, this approach would be very brittle to novel circumstances and does not get the benefit of adaptive learning and experimentation. I thank Mykel Kochenderfer for pressing me on this point.

- Moral Machine is a project out of Massachusetts Institute of Technology that aims to take discussion of ethics and self-driving cars further “by providing a platform for 1) building a crowd-sourced picture of human opinion on how machines should make decisions when faced with moral dilemmas, and 2) crowd-sourcing assembly and discussion of potential scenarios of moral consequence.” Unfortunately, the entire premise for this, as I am arguing here, is faulty. Cf. http://moralmachine.mit.edu.

- Experimental philosophers have issued surveys to reveal what people’s preferences would be in a Trolley case. While a majority agrees that one should throw the switch, this does not mean that the majority opinion on this matter identifies the morally correct action to take. The very limited case sampled (the classic kill one to save the five) is contrived. Thus even if we were to model a variety of such cases, it is highly unlikely that an AV would encounter any one of them, let alone that the surveys would provide moral cover for the action taken. Cf. https://philpapers.org/surveys/results.pl.

- Of course, one could also object that decision theory is normative and not descriptive. But this objection seems misplaced, as if people thought it prescriptive there would not be so much work on the descriptive side of the equation, particularly in the fields of economics and political science.

- I thank Ryan Calo and Kate Crawford for pushing me on these points and the helpful.

- There are some who may argue that if someone has wronged another or poses a wrongful lethal threat to another, they have forfeited their right not to be lethally harmed in response. If that is so, then there appears to be something weightier than “life” at work in this balance, for one will certainly perish. Rather, what seems of greater value in this scenario is that of rights and justice.

- It also seems amusing that the acronym for their approach to solving this problem is a “MORL” (multi objective reinforcement learning) algorithm.