As we enter the third decade of the 21st century—the digital century—it is time for the public interest to reassert itself. Thus far, the digital entrepreneurs have been making the rules about the digital economy. Early in this decade We the People must reassert a visible hand on the tiller of digital activity.

Thoughtful reflection on the first two decades of the digital century must ask, “Is this the best we can do with our marvelous new capabilities?” Do the invasion of personal privacy, the propagation of misinformation, and the dominance of digital markets by a handful of companies represent a positive step forward?

Will public policy intervene to protect personal privacy? Can our leaders act to preserve the idea of a competition-based economy? And while we catch up after decades of ignoring digital policy challenges, will we also look ahead and establish public interest expectations for the new developments, such as artificial intelligence, that digital technology makes possible?

In the 18th century, Adam Smith wrote of the “invisible hand” that governed markets. In the 19th and 20th centuries, the excesses of industrial capitalism resulted in the regulatory imposition of a “visible hand” acting in the public interest.1 The subsequent combination of capitalism and regulatory guardrails had the laudable effect of allowing the industrial economy to soar while protecting consumers and competition. At the beginning of the third decade of the digital century, it is time to introduce the visible hand of regulatory oversight into the information economy.

The Jurassic Park test

Since the mid-20th century, two forces have been advancing on parallel paths. Back in 1965, Moore’s Law forecast the exponential growth in the computing power of microchips. Thirteen years later, the first commercial cellular network launched. Early in the 21st century, these two developments combined to produce powerful, low-cost computing distributed by ubiquitous networks.

The job of a microchip is to collect, compute, and communicate digital information. Linking those capabilities across a ubiquitous network allowed aggregated data to feed software algorithms. The most profitable algorithms are those that draw on information about both markets and individual lives.

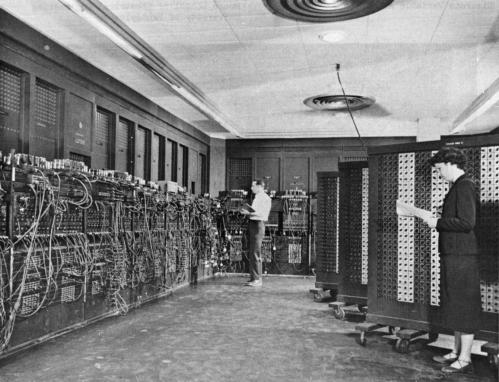

Algorithms themselves are not new. Basically, they are recipes of instructions. What is new is that the computing power necessary for sophisticated algorithms has become affordable and connected. The processing power once available only from multimillion-dollar supercomputers is now small and inexpensive enough to fit in your pocket or purse. That smartphone (which debuted in the first decade of this century) is, in turn, connected to most of the other computers in the world.

But what have we done with this awe-inspiring breakthrough? The defining aspect of the revolution thus far has been the use of personal information to sell advertisements and to pollute the democratic process with misinformation, disinformation, and malinformation.

In the classic movie Jurassic Park, Dr. Ian Malcolm (played by Jeff Goldblum) tells those who have released dinosaurs into the modern world, “Your scientists were so preoccupied with whether or not they could that they didn’t stop to think if they should.” It is an admonition applicable to the entrepreneurs who harnessed connected computing power to collect and monetize personal information. It was possible, so they did it.

The motto of the first decades of the digital century was “Move fast and break things.” Consideration of the consequences of such activities was not a priority.

Moving fast and breaking things before the public or policymakers could appreciate the consequences, let alone catch up, has worked well for the digital companies. They may not have considered the consequences of their actions, but those consequences are now our challenges. It may be impossible to put the genie back in the bottle, but at least we can teach it some manners through regulatory oversight.

A “Fourth Industrial Revolution”?

The World Economic Forum has dubbed the digital era the “fourth industrial revolution.” But is it really? It is a revolution, to be sure, but its effect is anti-industrial. Thanks to digital technology, the engine of economic growth is no longer the manufacturing of hard goods, but the manipulation of data to produce digital soft goods.

Yes, digital technology has been applied to improve those manufacturing activities that remain. Indeed, it has created new production enterprises such as 3D printing. But we have not seen a new “industrial revolution” that approaches anything near the scale of the original. Productivity growth in making things, in fact, lags behind what was achieved during the real industrial revolution.

In his masterful The Rise and Fall of American Growth, economist Robert Gordon observed that the average annual growth in productivity per hour dropped from 2.8% in the mid-20th century to 1.6% as the digital era emerged.2 While there was a productivity burst between 1996 and 2004 thanks to low-cost computing and the internet, growth then slowed. It is hardly a new “industrial revolution” when, between 1970 and 2014, the average annual growth in productivity per hour was below that of the post-Civil War era.

What digital technology has produced is a consumer services revolution. Professor Gordon describes this development as “simultaneously dazzling and disappointing.” The dazzling new capabilities of digital technology “have tended to be channeled into a narrow sphere of human activity having to do with entertainment, communications, and the collection and processing of information” rather than improving broad-based economic productivity.3

While there have been some amazing productive advances—from 3D printing to 5G networks and robotics to smart cities—they do not define the era. What defines it is how we have lost our privacy so that our data could be used to crush local businesses, monopolize new markets, and make truth a casualty of propaganda.

If this isn’t the kind of “revolution” we want, then the beginning of the third decade in the digital century is the time to do something about it.

Moore’s Law forecasts what’s next

While we are busy rebalancing the effects digital technology produced in earlier decades, we must also anticipate what’s next. We are still early in the revolution of low-cost computing and ubiquitous connectivity. As the carnival barker cried, “You ain’t seen nothin’ yet!”

For over 50 years, the forecast of Intel co-founder Gordon Moore that the computing power of the microprocessor would double every 18 to 24 months has been the driving force in the digital world. While some believe Moore’s Law must inevitably slow, it is unassailable that computing power will continue to grow up and to the right.

What makes this trajectory important is this: the increase in computing power during the next 10 years will vastly exceed what we saw in the last decade. It was the previous expansion of computing capabilities that created our current tumult. Far from stopping or slowing, that tumult-creating power will grow exponentially.
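A back-of-the-envelope calculation shows why steady doubling makes the coming decade’s gain dwarf the last one. The sketch below assumes, for illustration only, that computing power follows a clean exponential, P(t) = P0 · 2^(t/T), with a constant doubling period T taken from the 18-to-24-month range cited above; real hardware progress is lumpier than this, and the function names are hypothetical.

```python
# Back-of-the-envelope sketch. Assumption: computing power follows a clean
# exponential, P(t) = p0 * 2 ** (t / doubling_years). Real chip progress is
# lumpier; this only illustrates the arithmetic of repeated doubling.

def computing_power(t: float, doubling_years: float, p0: float = 1.0) -> float:
    """Relative computing power t years from today (t = 0 is today)."""
    return p0 * 2 ** (t / doubling_years)


def decade_gain_ratio(doubling_years: float) -> float:
    """How many times more power the next 10 years add than the last 10 years did."""
    last_decade = computing_power(0, doubling_years) - computing_power(-10, doubling_years)
    next_decade = computing_power(10, doubling_years) - computing_power(0, doubling_years)
    return next_decade / last_decade


if __name__ == "__main__":
    for months in (18, 24):
        ratio = decade_gain_ratio(months / 12)
        print(f"Doubling every {months} months: next decade adds about {ratio:.0f}x the last decade's gain")
```

Under this simplified model the ratio works out to exactly 2^(10/T): 32 times for a 24-month doubling period and roughly 100 times for an 18-month one. That is the sense in which the increase over the next 10 years “will vastly exceed” the last.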

One of the applications of that computing power will be to further optimize the networks that connect us and our computers. New wireless networks are beginning to rival wired networks in speed and latency. Fifth-generation (5G) wireless technology is itself a manifestation of Moore’s Law, as computers and software replace hardware to make the networks function.

5G promises to deliver data 10 to 100 times faster than its predecessor and with minimal latency (the time between a request and response). During the new decade we will be inundated with commercials and news reports about 5G—yet work has already begun on the next iteration: 6G. The 6G standard is expected to be completed before the end of the decade and to herald even greater innovations, possibly even moving beyond the internet protocol that has heretofore driven digital technology.

As we struggle to reinsert the public interest into the digital economy, we cannot lose the awareness that the computing and communication revolutions that necessitated this intervention will continue. And because that innovation will occur at a faster pace, the visible hand must also be a flexible hand, allowing policy to respond to what is presently unimaginable but sure to come.

Who makes the rules?

“Digital technology has gone longer with less regulation than any other major technology before it,” Microsoft President Brad Smith observed. “This dynamic is no longer sustainable, and the tech sector will need to step up and exercise more responsibility while governments catch up by modernizing tech policies.”

Tech companies have avoided regulation through the mantra of “permissionless innovation.” The wonders they were creating would be stifled, it was argued, by government oversight. As chairman of the Federal Communications Commission, I frequently heard the criticism “You can’t regulate the internet” from both Republicans and Democrats. It was as if some kind of magic spell of digital creativity would be broken by the expectation of social responsibility.

The mantra of magical creativity worked. The effect was that when government failed to act, the companies that moved fast to break things before anyone caught on were able to make the rules themselves.

The fact that it is the innovators who make the rules is unsurprising and consistent with history. Those with the vision have always set the course—until that course infringes on the rights of others and the public interest. Regulation was introduced into the industrial economy in the late 19th and early 20th centuries to impose public interest guardrails on previously unfettered corporate decisions. The rules, vehemently opposed by the businesses of the time, protected consumers and competition and ended up preserving capitalism by protecting it from its excesses.

Unfortunately, the rules and structures created to govern industrial activities are inadequate to the needs of the digital economy. It is not that consumer protection and competition are no longer applicable goals; they most certainly are. But the differences between the information economy and the industrial economy necessitate different implementations.

Federal government agencies created in the industrial era were designed to oversee markets that used finite hard assets, such as raw materials, to create new hard assets in the form of finished products. The digital economy uses a different model.

The capital assets of the industrial economy were finite; there was only so much coal, oil, or ore, and it was consumed by being used. The capital asset of data is infinite because it is not consumed by being used. What’s more, data assets are iterative: data is used to create new digital products, which in turn produce new data that is used for new products that create still more data, in a never-ending process.

Expecting an industrial-era regulatory agency to assimilate the effects of these differences complicates the imposition of a visible hand. The statutory provisions governing these agencies were written to address the industrial issues of decades ago. The last meaningful update of the Communications Act governing the nation’s networks, for instance, was in 1996, when the internet meant AOL and screeching modems. The jurisprudential interpretations of those old statutes were similarly based on industrial fact sets. And then there is the human element. The good and dedicated staff of these agencies have spent their professional lives following industrial precedents; suddenly transforming into digital-age regulators is an unnatural act in defiance of muscle memory.

The tech companies are correct, however, in their concern that current approaches to regulation are too rigid and too slow to adapt at internet speed. In expecting the companies to change their behavior, the government will have to modernize not just its policies but also how it operates.

The agencies of government, having been established in the industrial era, adopted the rules-based bureaucratic management techniques of that time. For companies, however, the digital century brought agile management practices to replace rigid, rules-based corporate management. When I was running a software company in the 1980s, producing a digital product was essentially the same as producing an automobile: the product moved through a fixed sequence of steps until it reached the end of production. In a software-driven world, however, that kind of management has been replaced by “agile development,” in which rapid changes in technology mean the product is never finished. Every time your smartphone or computer updates its software, for instance, you are seeing agile management at work.

The creative destruction of capitalism has forced corporate management to change and adopt agility. There is no such incentive in government management. If we hope to provide meaningful oversight of the information economy, that regulation is going to have to become as agile as the activities it oversees.

Going forward

The beginning of the third decade of the digital century is an opportunity to reassess not only the consequences of the earlier decades, but also how we proceed. Will the era defined by the loss of our privacy, the crushing of local businesses, the monopolization of markets, and a tsunami of propaganda now be extended into a future of machine learning (ML) and artificial intelligence (AI)?

Having realized the “permissionless innovation” argument has run its course, the digital companies have switched to a new message when it comes to ML and AI. Yes, rules are necessary, but the innovators should regulate themselves. The new mantra is all about “ethical principles” and “responsible practices” for AI, so long as those rules and practices are defined by the companies.

We were lulled into the “it’s complicated and only we can understand those complications” argument once before. We should not fall for the same claim twice. The third digital decade should begin with a full-throated discussion about legally enforceable standards for the use of ML and AI.

The companies that want us to let them make the rules about AI are the same companies that brought us the current problems of a digital economy. And they are using the same assets: information about each of us.

At its core, AI is nothing more than algorithms that are able to access and analyze databases to make a good prediction. We have already seen how computing power and digital networks will expand in the next decade. The result will drive new, more powerful algorithms. The data feeding those calculations resides in the hands of the companies that have already used it to sell the ability to influence our behavior.

The decisions we make in the coming few years will determine whether the wonders of AI will be dominated by the same companies that dominate social media, search, and e-commerce. These companies have a double incentive to use the information they collect from us to dominate AI. First, it helps maintain dominance in their core business of targeting us. Second, they can dominate the AI future through control of the essential input: the huge amounts of data they hold on each of us.

Thus far, we have voluntarily agreed to allow a handful of companies to surreptitiously collect personal information in quantities that would make Big Brother blush. We have no idea how that information is being used or with whom it is shared. But this is just the tip of the iceberg as we consider an AI future. Establishing rules for the collection and use of that information in the world we know today is a beginning. But absent rules for the AI economy, we are destined to find ourselves back in the hands of those who moved fast to break things before society could catch up to the consequences.

As we enter the third decade of the digital century, it is time for the public interest to reassert itself. Such a reassertion requires not just repairing the problems we have allowed to develop over the last two decades, but also looking ahead, understanding the path of technology, and applying the lessons we have learned.


Footnotes

- Alfred D. Chandler Jr.’s Pulitzer Prize-winning book The Visible Hand: The Managerial Revolution in American Business (Harvard University Press, 1977) focused on middle management as the most important force in the market economy. Here, I have redefined the term as the regulatory structure needed for the 21st century.

- Robert Gordon, The Rise and Fall of American Growth (Princeton University Press, 2016), p. 14.

- Ibid., p. 7.

The Brookings Institution is committed to quality, independence, and impact.

We are supported by a diverse array of funders. In line with our values and policies, each Brookings publication represents the sole views of its author(s).