The contours of acceptable online speech, and the appropriate mechanisms to ensure meaningful online communities, are among the most contentious policy debates in America today. Moderating content that is not per se illegal but that likely creates significant harm has proven particularly divisive. Many on the left insist digital platforms haven’t done enough to combat hate speech, misinformation, and other potentially harmful material, while many on the right argue that platforms are doing far too much, to the point where “Big Tech” is censoring legitimate speech and effectively infringing on Americans’ fundamental rights. As Congress weighs new regulation for digital platforms, and as states like Texas and Florida create social media legislation of their own, the importance and urgency of the issue are only set to grow.

Yet unfortunately the debate over free speech online is also being shaped by fundamentally incorrect understandings of the First Amendment. As the law stands, platforms are private entities with their own speech rights; hosting content is not a traditional government role that makes a private actor subject to First Amendment constraints. Nonetheless, many Americans erroneously believe that the content-moderation decisions of digital platforms violate ordinary people’s constitutionally guaranteed speech rights. With policymakers at all levels of government working to address a diverse set of harms associated with platforms, the electorate’s mistaken beliefs about the First Amendment could add to the political and economic challenges of building better online speech governance.

In a new paper, I examine the nature of that difficulty in more detail. In a nationally representative survey of Americans, I show that commonly held but inaccurate beliefs about the scope of First Amendment protections are linked to lower support for content moderation. I also show that presenting accurate information on the First Amendment to respondents can backfire, leading to lower support for content moderation. These results highlight the challenge of developing widely supported content moderation regimes.

An experimental approach

To better understand the challenges facing content moderation regimes and the task of building public support for them, I designed a survey experiment to address the following questions:

- How common are inaccurate beliefs about the scope of First Amendment speech protections?

- Do such inaccurate beliefs correlate with lower support for content moderation?

- If so, does education about the limited scope of First Amendment speech protection increase support for platforms to engage in content moderation?

To yield insight into those questions, survey participants were randomly assigned to one of three groups. The first, or control group, was asked to judge a common content moderation scenario in which a platform suspended a user for persistently sharing false information. The second group was given a quiz about the First Amendment and the Constitution, and asked to judge the same content moderation scenario as the control group. The third group received training on the First Amendment, and then was asked to complete the same quiz as the second group and judge the same scenario as the control group. By establishing baseline support for content moderation through the control group, the survey treatments make it possible to establish how an understanding of the First Amendment relates to support for content moderation. (For more on the design, see here.)
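For readers who want to see the mechanics, the random assignment described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the code used in the study: the arm names, the `assign_arms` helper, the sample size of 900, the equal assignment probabilities, and the exact ordering of quiz and scenario within each arm are all hypothetical.

```python
import random
from collections import Counter

# Hypothetical arm names mapped to the steps each group completes.
# The step ordering within an arm is an assumption based on the description above.
ARMS = {
    "control": ["scenario"],
    "quiz": ["quiz", "scenario"],
    "training": ["training", "quiz", "scenario"],
}


def assign_arms(participant_ids, seed=0):
    """Assign each participant to one of the three arms with equal probability."""
    rng = random.Random(seed)  # fixed seed so the assignment is reproducible
    arm_names = list(ARMS)
    return {pid: rng.choice(arm_names) for pid in participant_ids}


# Illustrative sample of 900 participant IDs (not the study's actual sample size).
assignments = assign_arms(range(900))
print(Counter(assignments.values()))
```

Because assignment depends only on the random draw and not on anything about the participant, differences in support between the arms can be attributed to the quiz or the training rather than to who ended up in each group.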

The results of the experiment were striking. Fifty-nine percent of participants answered the constitutional question incorrectly when not given any training. This group (those who got the question wrong without training) also indicated lower support for the platform’s decision to suspend the user. On a 5-point scale ranging from strongly disagree to strongly agree, participants who answered the question wrong had a mean level of support of 4.3 versus 4.5 for participants who answered the question correctly, a small absolute difference but a statistically significant one. More importantly, far fewer of the participants who answered the quiz question incorrectly expressed the highest level of support for content moderation: 55% versus 70% for those who got it right. Overall, misunderstanding of the First Amendment was thus both very common and linked to lower support for content moderation.

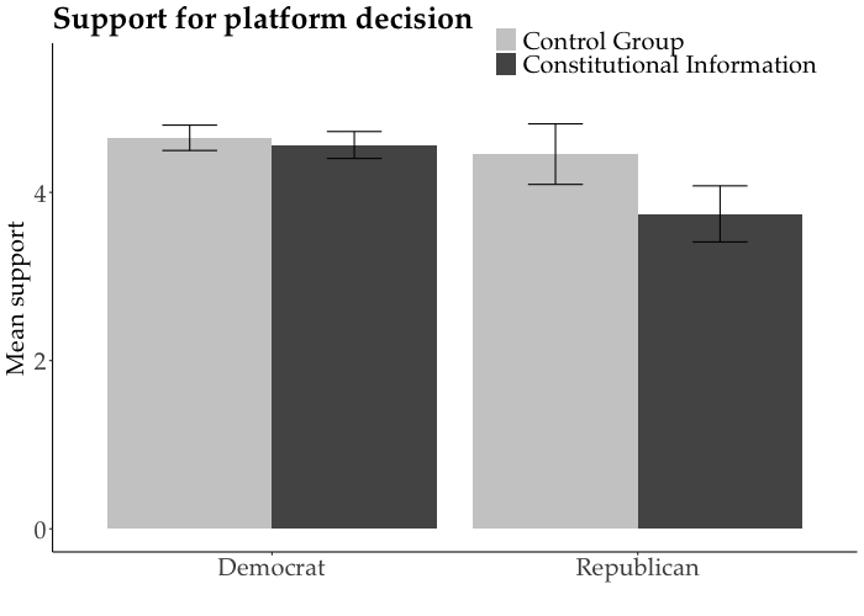

Given that linkage, providing educational training on the First Amendment could in theory increase support for content moderation. However, perhaps the most interesting result of the survey is that such training actually lowered support for content moderation. Indeed, those exposed to the constitutional training demonstrated significantly lower support for content moderation than those in the control group. This substantial decrease in support was linked to Republican identity, as shown in the figure below.

Since survey participants received the educational intervention at random, we can draw strong inferences that exposure to the educational intervention reduced support for content moderation, at least for individuals who identify as Republican. Such backfire effects have been documented across a wide range of domains and are often linked to partisan affiliations. Although the results are consistent with that literature, they also highlight a broader concern: Targeted interventions can backfire on judgments distinct from those directly targeted by the intervention. In this case, an intervention about the Constitution modified support for content moderation.

Reasonable skepticism

There are a number of plausible explanations for this backfire effect. For example, it could be that Republicans show a backfire effect precisely because they are made aware through the educational intervention that privately hosted digital venues lack constitutional protections. Put differently, Republicans may be more likely to have constitutional misunderstandings that make them comfortable with content moderation and are also therefore more likely to shift their opinion against content moderation when exposed to a targeted education. It would make sense for respondents to reduce their support for content moderation once they find that there is less legal recourse than expected when such moderation becomes oppressive. In other words, it is reasonable to be more skeptical of private decisions about content moderation once one becomes aware that the legal protections for online speech rights are less than one had previously assumed.

One could speculate that these results also help explain a trend in recent polling, which indicates that support for more regulation of tech companies is waning, particularly among Republicans. Could it be that the public has grown more aware of the constitutional limits of what the government can feasibly regulate and therefore more skeptical of proposed regulatory measures? The effects of increased constitutional awareness may be unintuitive and unpredictable, and my findings may generalize to explain how the public judges proposed lawmaking to regulate platforms, although future work would have to confirm this speculation.

Policy ramifications

These results highlight an undertheorized difficulty of developing widely acceptable content moderation regimes. Misunderstanding the scope of the First Amendment is correlated with lower support for content moderation, yet providing accurate information about the First Amendment can reduce support for content moderation. Content moderation advocates and policymakers alike should carefully factor the possibility of backfire effects into their advocacy. An effective political strategy must account for what ordinary people believe about the state of the law, even if those beliefs are incorrect.

Indeed, such a strategy is particularly important given that inaccurate beliefs about the First Amendment are not the only misapprehension present in debates about content moderation. For example, Republican politicians and the American public alike express the belief that platform moderation practices favor liberal messaging, despite strong empirical evidence to the contrary. Many Americans likely hold such views at least in part due to strategically misleading claims by prominent politicians and media figures, a particularly worrying form of misinformation. Any effort to improve popular understandings of the First Amendment will therefore need to build on related strategies for countering widespread political misinformation.

The road to broad support for content moderation—and by extension, a safe and usable online environment—is likely to be long. Yet if we’re ever to arrive at better governance of online speech, we need to get our facts straight first.

Aileen Nielsen is a Ph.D. student at ETH Zurich’s Center for Law & Economics and a fellow in Law & Tech.

Commentary

Misunderstandings of the First Amendment hobble content moderation

June 6, 2022