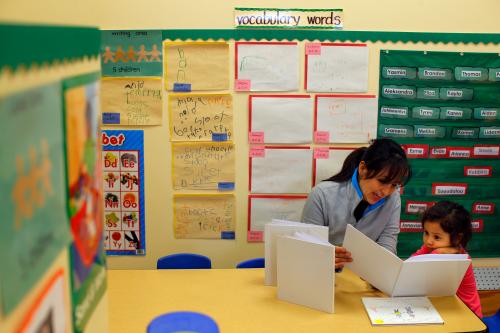

Few would argue with the premise that more attention and funding are needed for the care and development of young children in this country, or with the value of investing early in childhood development, supporting parents, and establishing pre-K programs of the highest quality.

In a recent Brookings blog, education experts Andres Bustamante, Kathy Hirsh-Pasek, Deborah Lowe Vandell, and Roberta Michnick Golinkoff make these suggestions to the incoming Secretary of Education Betsy DeVos.

As one component of their proposal, the authors assert that strong research demonstrates impressive effects of preschool attendance. However, the full body of evidence on the long-term effects of preschool does not support this claim, nor do the cherry-picked studies the authors cite.

Older studies of the Abecedarian project, Perry Preschool, and the Chicago Parent Child Centers continue to be the primary sources cited for the argument that early childhood education has long-term positive effects. The cost savings attributed to these programs are often cited as well, making investment in young children appear to be not just a moral good but also an economic one.

Contemporary preschool programs are not like these intensive small-scale demonstration programs. The assertion that the same outcomes can be achieved at scale by pre-K programs that cost less and look quite different is unsupported by any available evidence.

In fact, the evidence suggests just the opposite.

There are only two rigorous studies of pre-K participation that have followed children into third grade: the Head Start Impact Study and the Tennessee Voluntary Pre-K study. Each of these studies found that early gains at the end of pre-K were not sustained even to the end of kindergarten. The children to whom the pre-K participants were compared caught up quickly. Unexpectedly, and of real concern, the achievement of comparison children in the Tennessee study began to surpass that of the pre-K children in second and third grade.

Pre-K advocates do not like this message and are quick to dismiss the implications of these studies. But the reasons given for the dismissals are based on incorrect and misleading characterizations of each study.

Advocates dismiss the Head Start study because some of the children did not comply with their random assignment. Some who were assigned to the control group actually attended Head Start classrooms, and the reverse happened as well. These “crossovers” are common in any randomized study, and they are taken into account statistically. Analyses are conducted both by the group to which children were originally assigned and by the program they actually attended.
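To make the two kinds of analysis concrete, here is a minimal sketch. It assumes one commonly used adjustment, in which the difference by original assignment (intent-to-treat) is divided by the difference in attendance rates between the two groups; the text above does not say which method the Head Start analysts used. The function names and all numbers are hypothetical and are not taken from the study.

```python
def intent_to_treat(mean_assigned_program, mean_assigned_control):
    """Difference in average outcome by ORIGINAL random assignment."""
    return mean_assigned_program - mean_assigned_control


def effect_on_attenders(itt, attend_rate_program, attend_rate_control):
    """Rescale the intent-to-treat difference by the gap in actual attendance.

    This is an assumed, illustrative adjustment for crossovers, not a
    description of the study's own procedure.
    """
    gap = attend_rate_program - attend_rate_control
    if gap <= 0:
        raise ValueError("assignment must raise the attendance rate")
    return itt / gap


# Hypothetical numbers: test-score means of 102 and 100 by assignment;
# 85% of the program group and 15% of the control group actually attended.
itt = intent_to_treat(102.0, 100.0)
attender_effect = effect_on_attenders(itt, 0.85, 0.15)
print(f"by assignment: {itt:.2f}, for attenders: {attender_effect:.2f}")
```

Under these made-up figures the adjusted estimate is larger than the intent-to-treat difference, because the same gap is attributed to the smaller share of children whose attendance was actually changed by assignment.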

Analyses of actual participants in Head Start found no differences in achievement outcomes compared to non-attenders. But there were differences in social-emotional outcomes. The children who attended Head Start—who actually received Head Start no matter which group they were originally assigned to—were rated in the third grade as more aggressive by their parents and as having more emotional problems by their teachers.

Alternatively, pre-K advocates dismiss the Tennessee study on the grounds that the program it evaluated is low quality. The presumption is that the Tennessee program is unique and that programs elsewhere (Tulsa, Boston, and New Jersey) are higher quality.

Tennessee’s is a statewide program (TNVPK), while Boston and Tulsa’s are citywide and New Jersey’s only covers part of the state. None therefore match Tennessee’s scope. However, we have been able to compare the Tennessee program to these and others in both short-term effects and ratings of quality.

Short-term effects of pre-K participation are often evaluated using a Regression Discontinuity Design (RDD). Importantly, this design does not assess long-term effects, only those measured immediately at the end of pre-K. To date, none of the Boston, Tulsa, or New Jersey programs has measured long-term impacts with a credible research design.

To allow comparisons among programs on the magnitude of their effects, researchers use a metric called an effect size. We can compare the effect sizes from 155 TNVPK classrooms to an average of those from the Boston, Tulsa, New Jersey, and other programs.
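As an illustration of the metric, the sketch below computes one common form of effect size: the difference between two group means divided by their pooled standard deviation. This particular formula is an assumption made for illustration; the text does not specify which effect size calculation the studies used. The scores are invented and the function name is hypothetical.

```python
import math
from statistics import mean, variance


def effect_size(program_scores, comparison_scores):
    """Standardized mean difference using the pooled sample standard deviation."""
    n1, n2 = len(program_scores), len(comparison_scores)
    pooled_variance = (
        (n1 - 1) * variance(program_scores)
        + (n2 - 1) * variance(comparison_scores)
    ) / (n1 + n2 - 2)
    return (mean(program_scores) - mean(comparison_scores)) / math.sqrt(pooled_variance)


# Invented scores, for illustration only.
program = [12, 14, 16, 18, 20]
comparison = [10, 12, 14, 16, 18]
d = effect_size(program, comparison)
print(f"effect size: {d:.2f}")
```

Because the result is expressed in standard deviation units rather than raw test points, effects measured with different tests in different programs can be placed on a common scale.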

In the area of literacy (knowing the letters of the alphabet, letter-sound relations, etc.), the TNVPK program achieved a very strong effect, comparable to the average on literacy measures in the other programs. No program was strong in language, and math achievement effects were intermediate.

Figure 1: Immediate pre-K effects by programs

Clearly, in terms of short-term impacts, TNVPK children made the same progress as those in the reportedly high-quality state or citywide programs. The pattern of gains across different skill areas is also the same in Tennessee as in the other programs.

An older rating system of quality is the Early Childhood Environmental Rating Scale-Revised (ECERS-R). Based on published studies that report ECERS-R scores from pre-K and Head Start classrooms serving low-income children, the TNVPK ratings are highly similar. TNVPK classrooms received on average a similar total ECERS score to the programs in Boston, New Jersey, North Carolina, and other states.

One could argue that these scores are not high enough. All fall below the 4.5 score that Burchinal et al. argue represents higher quality. The point is that the quality of the TNVPK program by this measure is typical of all pre-K and Head Start classrooms, not dramatically lower as the critics would have you believe.

But rather than dismiss findings that do not fit a simple but favored scenario, attention should focus instead on exploring ways to improve early childhood education.

Current state-funded pre-K programs violate all three of the recommendations from Bustamante, Hirsh-Pasek, Vandell, and Golinkoff. Expanding programs strictly for 4-year-olds is “crowding out” private providers, which depend on the funding they receive for 4-year-olds to be able to care for infants and toddlers. Private programs are closing. Moreover, pre-K programs in the public schools are not set up to support parents either in their operating hours (school day, school year) or in their interactions. And finally, the scaled-up programs implemented today are linked to short-term success but then, unfortunately, to long-term fade-out or worse. A more complex vision of “high quality” is needed, along with a plan for achieving it.

Commentary

Misrepresented evidence doesn’t serve pre-K programs well

February 24, 2017