Russian interference in the 2016 U.S. election woke up Americans to the threat of disinformation, especially from Vladimir Putin’s Russia. But almost three years and many more interference campaigns later, the United States still lags in responding to malign foreign influence in the information space, argue Alina Polyakova and Daniel Fried. This article originally appeared in The Washington Post.

In a new report for the Atlantic Council, we looked into how European and U.S. authorities are addressing the challenge of disinformation — and found that the Europeans come out on top.

First, the good news. Democracies on both sides of the Atlantic have moved beyond “admiring the problem” — or reacting with a sort of existential despair in the face of a new threat — and have entered a new “trial and error” phase, testing new policy responses, technical fixes, and educational tools for strengthening resistance and building resilience against disinformation.

The European Union has taken the lead in crafting a response. Last December, the E.U. launched an Action Plan Against Disinformation based on principles of transparency and accountability (wisely, the plan was not based on content control). It increased funding to identify and expose disinformation and established a “rapid alert system” to do so in real time. Google, Facebook, Twitter and Mozilla have signed on to an E.U. voluntary Code of Practice, which tries to set some standards for fighting disinformation. Even though implementation has been slow, these are steps in the right direction and offer a solid basis for further action.

From these efforts, it’s clear that democracies might not be able to halt all disinformation, but they can limit it and, hearteningly, they can do so within democratic norms. The answer isn’t censorship or making governments arbiters of truth, but rather developing standards of Internet transparency and integrity, limiting space for impersonators, pushing social media companies to develop common standards and, though this is especially hard, addressing algorithmic bias that rewards the sensational and lurid. As democracies learned during the Cold War, we need not become them to fight them.

Now for the bad news. The United States lags behind the E.U. in terms of strategic framing of the challenge and policy actions to deal with it.

At a basic level, it remains unclear who in the U.S. government owns this problem. For example, the State Department’s Global Engagement Center has been tasked with countering state-sponsored disinformation, and it has begun to fund research and development of counter-disinformation tools while supporting civil society groups and independent media on the front lines of the threat in Europe.

The GEC, however, has no mandate in the United States — that’s the purview of the Department of Homeland Security. DHS has focused on protecting election infrastructure, such as updating or replacing electronic voting machines, hardening security around voter-data storage and harmonizing information-sharing among local, state, and federal election authorities. That’s all good, but it doesn’t address disinformation. So who is taking steps to ensure disinformation within the United States does not skew results?

For its part, Congress has proposed legislation that would increase ad transparency, govern data use and establish an interagency “fusion” cell to coordinate U.S. government responses. Again, these are all good ideas but, so far, none has passed.

Congress’s primary response has been to hold high-profile public hearings with the social media platforms. These hearings have raised public awareness of algorithmic bias, online advertising and private data use. But they have also revealed policymakers’ lack of knowledge about digital technologies, and they haven’t resulted in solutions.

Meanwhile, as the United States has failed to get a grip and Europe has proceeded cautiously, adversaries have evolved, adapted and advanced. Russia won’t be the last state to use disinformation for malign purposes: Other states with an interest in undermining democracies — such as Iran, North Korea and China — are learning from the Russian playbook.

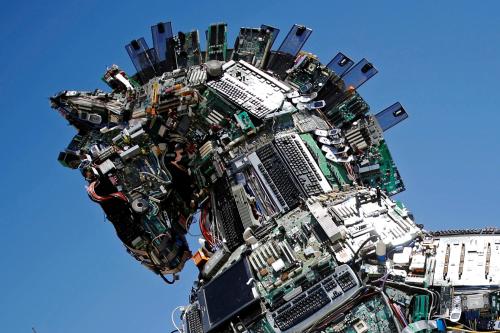

Disinformation tactics are shifting. Automated bots may have been at the cutting edge of the 2016 disinformation campaigns, but not anymore. New impersonation techniques allow foreign purveyors of disinformation to slip into, and thus distort and manipulate, domestic social media dialogue. The old Soviet technique of infiltrating authentic social groups is being updated for the 21st century, obscuring the difference between real debate and external manipulation.

As state and nonstate actors deploy new technologies and adapt to responses, exposure will become even more difficult. “Deepfakes” and other forms of “synthetic media” will only grow in sophistication. A primitive, distorted video of House Speaker Nancy Pelosi reached millions of people in hours. Higher-quality video and audio deception that is available at low cost and harder to detect in real time will be a dangerous weapon in the emerging age of disinformation.

State-sponsored disinformation campaigns are upping their game, and they won’t be limited to election cycles. Democracies are still playing catch-up — the United States barely so.

It’s time for the United States to step up, work with Europe and bring together like-minded governments, social media companies and civil society groups to learn from one another. With resources, time, attention and especially political will, we can develop a democratic defense against disinformation.

Europe is starting to tackle disinformation. The US is lagging.

June 20, 2019