This report from The Brookings Institution’s Artificial Intelligence and Emerging Technology (AIET) Initiative is part of “AI Governance,” a series that identifies key governance and norm issues related to AI and proposes policy remedies to address the complex challenges associated with emerging technologies.

Tax policy analysis is a well-developed field with a robust body of research and extensive modeling infrastructure across think tanks and government agencies. Because tax policy affects everyone, and especially wealthy people, it attracts both a lot of attention and substantial research funding (notably from individual foundations like those of Peter G. Peterson and the Koch brothers). In addition to empirical studies, organizations like the Urban-Brookings Tax Policy Center and the Joint Committee on Taxation produce microsimulations of tax policy to comprehensively model thousands of levers of policymaking. However, because it is difficult to guess how people will react to changing public policy scenarios, these models are limited in how much they account for individual behavioral factors. Although it is far from certain, artificial intelligence (AI) might be able to help address this notable deficiency in tax policy, and recent work has highlighted this possibility.

A team of researchers from Harvard and Salesforce developed an AI system designed to propose new tax policies, which they call the AI economist. While the results of their initial analysis are not destined for the U.S. Code, the approach they are proposing is potentially quite meaningful. Most current tax policy models infer how people would respond to a change in policy based on the results of prior research. In the AI economist approach, though, the actions of the computational economic participants were instead learned from a simplified game economy. The researchers did this using a type of AI called reinforcement learning.

Reinforcement learning

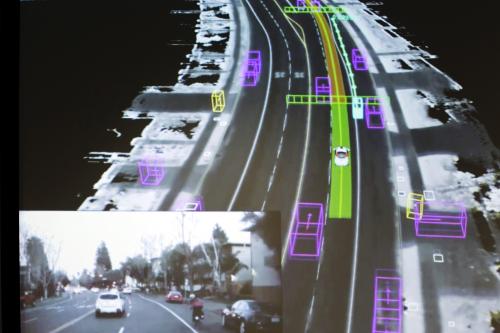

A specific subfield of AI, called reinforcement learning, has been driving advances in the modeling of complex behavior. Reinforcement learning systems are beating human players in ancient games and modern video games alike, and enabling robotic movements that are decidedly uncanny. Reinforcement learning works by encouraging random exploration of possible actions, then rewarding the actions that lead to a positive outcome. In a simple game of Pong (a virtual version of ping pong), the exploration would entail moving the paddle left and right, with a reward for each time the program manages to return the ball. The AI then learns to choose the actions that, in similar past situations, led to the preferred outcome. Over time, the randomness gives way to intentionality, and the AI will competently return every volley. This process can give rise to surprisingly advanced strategies that have previously been reserved for human critical thinking. For example, in OpenAI’s hide-and-seek system, AI agents learned to manipulate the game mechanics beyond the expectations of the human designers.
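The explore-then-reward loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the method used by any system mentioned in this report: the three-column game, the function names `train` and `greedy_policy`, and the parameter values are all hypothetical, and the game is reduced to a single move per round so that a simple value table is enough.

```python
import random

ACTIONS = (-1, 0, 1)  # move the paddle left, stay put, move right

def train(episodes=5000, epsilon=0.1, learning_rate=0.1, seed=0):
    """Learn which paddle move returns the ball in a three-column toy game."""
    rng = random.Random(seed)
    # q[(ball, action)] estimates the reward for `action` when the ball is
    # heading for column `ball`; every estimate starts at zero.
    q = {(ball, a): 0.0 for ball in range(3) for a in ACTIONS}
    for _ in range(episodes):
        ball = rng.randrange(3)  # column where the ball will land
        paddle = 1               # the paddle always starts in the middle
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)  # random exploration
        else:
            # Exploit: pick the action with the highest current estimate.
            action = max(ACTIONS, key=lambda a: q[(ball, a)])
        reward = 1.0 if paddle + action == ball else 0.0
        # Nudge the estimate toward the reward that was just observed.
        q[(ball, action)] += learning_rate * (reward - q[(ball, action)])
    return q

def greedy_policy(q):
    """Pick the highest-valued action for each ball position."""
    return {ball: max(ACTIONS, key=lambda a: q[(ball, a)]) for ball in range(3)}

policy = greedy_policy(train())
print(policy)
```

After training, the learned policy should move the paddle toward the ball in each case. Early rounds are dominated by guesses, and the rewarded moves gradually take over, which is the shift from randomness to intentionality in miniature.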

The ‘AI Economist’ approach—from games to game theory

The Harvard-Salesforce researchers took this approach from games to game theory, building a simplified world where artificial agents can collect resources (stone and wood) and then make money by building houses or selling the goods among themselves. The agents differ in their level of skill, which has the dual result of incentivizing specialization and also creating economic inequity. Rather than hard-coding the behavior of these agents, the researchers defined an optimal outcome as a mix of money and leisure time, then had the agents learn what choices made them the best off. As it did with hide-and-seek, reinforcement learning produced the kinds of nuanced activities that we see in humans, in this case economic activities like tax avoidance strategies. This behavioral complexity is intriguing, since it may offer a way to generate more realistic economic actors in complex models.
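To make the "mix of money and leisure time" concrete, here is a small sketch of an agent trading income against effort. Everything in it is an assumption for illustration: the quadratic effort cost, the names `utility` and `best_hours`, and the numbers are hypothetical and are not the utility function from the paper. A grid search stands in for learning, purely to show what the agent is optimizing.

```python
def utility(hours, skill, tax_rate, effort_cost=1.0):
    """Hypothetical utility: after-tax income minus a rising cost of effort.

    The effort cost is a stand-in for lost leisure; it grows with the
    square of hours worked, so each extra hour is more costly than the last.
    """
    after_tax_income = (1.0 - tax_rate) * skill * hours
    return after_tax_income - 0.5 * effort_cost * hours ** 2

def best_hours(skill, tax_rate, max_hours=16):
    """Search whole hours from 0 to max_hours for the highest utility."""
    return max(range(max_hours + 1), key=lambda h: utility(h, skill, tax_rate))

# A higher-skilled agent earns more per hour, so working is worth more.
for skill in (4.0, 10.0):
    for tax_rate in (0.1, 0.5):
        print(skill, tax_rate, best_hours(skill, tax_rate))
```

Under these assumptions, raising the tax rate lowers the hours an agent chooses to work, and higher-skilled agents work more at any given rate. A reinforcement learning agent would have to discover this trade-off through trial and error rather than by direct search.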

The system also created AI policy regulators, which adjusted marginal tax rates to try to maximize both economic efficiency and equity. The associated paper presents this as a major contribution (its title is “The AI Economist”), and it is an interesting proposal. Of course, nothing is an entirely new idea, and the idea of reinforcement learning for setting policies of a simulated economy has been considered as far back as 2004. Further, there already exist approaches to evaluate many different tax systems with far more complexity than only setting marginal rates for income bands.

Instead, it is the AI actors in the economy that deserve further consideration. There have been dramatic improvements in deep learning and reinforcement learning, best exemplified by AlphaGo’s dramatic victory over world Go champion Lee Sedol in 2016. Applying modern reinforcement learning to simulated economies is genuinely compelling. However, this new approach raises challenges new and old. To understand the potential value of the reinforcement learning approach to learning behaviors, it’s important to understand how existing tax models work, and why behavioral effects are a weakness in their design.

Behavior in modern tax models

If you’re a reader of Brookings publications, you’ve likely seen the results of the current generation of microsimulation models. Every four years, these models are used to evaluate major tax proposals from presidential campaigns. This leads to a bevy of articles comparing, for example, the proposals from Bernie Sanders, Hillary Clinton, and Donald Trump in 2016. The most sophisticated tax models, such as that of the Urban Institute–Brookings Institution Tax Policy Center (TPC), have thousands of variable inputs that can be adjusted to simulate changes in individual income and payroll tax policy. Once these are set, the model calculates the tax liability of each person in a dataset of anonymized tax returns. You can think of this like running a representative sample of households through TurboTax. This can be used to estimate how a given policy impacts the distribution of the tax burden and federal revenue.
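The "run every household through TurboTax" idea can be sketched as follows. This is a toy version under stated assumptions: the `TaxReturn` record, the bracket schedule, and the sample data are hypothetical, and a real microsimulation model such as TPC's handles thousands of provisions rather than a single rate schedule.

```python
from dataclasses import dataclass

@dataclass
class TaxReturn:
    income: float  # taxable income on the sampled return
    weight: float  # number of households this record represents

# Hypothetical schedule: (lower threshold, marginal rate), sorted ascending.
BRACKETS = [(0.0, 0.10), (10_000.0, 0.20), (50_000.0, 0.30)]

def tax_liability(income, brackets):
    """Apply each marginal rate only to the income inside its band."""
    tax = 0.0
    for i, (lower, rate) in enumerate(brackets):
        upper = brackets[i + 1][0] if i + 1 < len(brackets) else float("inf")
        if income > lower:
            tax += (min(income, upper) - lower) * rate
    return tax

def total_revenue(returns, brackets):
    """Weighted sum of liabilities across the sample."""
    return sum(r.weight * tax_liability(r.income, brackets) for r in returns)

sample = [TaxReturn(income=5_000.0, weight=2.0),
          TaxReturn(income=60_000.0, weight=1.0)]
print(total_revenue(sample, BRACKETS))
```

Changing an entry in `BRACKETS` and rerunning `total_revenue` is the simplest form of a policy simulation. Note that nothing in this calculation lets the households change their income in response, which is the limitation the following paragraphs discuss.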

While these models can simulate a wide variety of income and payroll tax policies, they are more limited in their accounting of individual behavioral changes. They are largely based on administrative data from tax returns, which do not provide insight into how taxpayers may react to tax policy. This administrative tax data can tell you how much money most Americans made, and how many families received tax credits like the EITC or those for children and child care. However, this data alone cannot tell us how these credits impacted decisions to work or enabled access to child care.

To do that, the models use estimates of how individuals respond to taxation from empirical research. For instance, the TPC model assumes there will be small reductions in income reported as marginal tax rates go up, with larger behavioral changes for higher-income earners. The TPC model also accounts for other important behaviors, like the effect of taxes on capital-gains realizations and the choice between taking the standard or itemized deduction. Still, the list of behavioral effects is short relative to all the ways individuals could respond to taxation. This is true of all models, including those used by the federal government (e.g., the Joint Committee on Taxation and Congressional Budget Office).
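One common way to express this kind of behavioral adjustment is an elasticity of reported income with respect to the net-of-tax rate (one minus the marginal rate). The sketch below assumes that form; the function names, the income threshold, and the elasticity values of 0.2 and 0.5 are hypothetical placeholders and are not the parameters used by the TPC model.

```python
def reported_income(income, old_rate, new_rate, elasticity):
    """Scale income by the change in the net-of-tax rate, raised to the elasticity.

    With an elasticity of zero, income does not respond to taxes at all;
    larger values mean reported income falls more when the rate rises.
    """
    net_of_tax_ratio = (1.0 - new_rate) / (1.0 - old_rate)
    return income * net_of_tax_ratio ** elasticity

def elasticity_for(income, threshold=200_000.0):
    """Hypothetical rule: higher earners respond more strongly."""
    return 0.5 if income > threshold else 0.2

# Raise the marginal rate from 30% to 40% for two illustrative earners.
for income in (80_000.0, 500_000.0):
    adjusted = reported_income(income, 0.30, 0.40, elasticity_for(income))
    print(income, round(adjusted, 2), round(1.0 - adjusted / income, 4))
```

In this sketch, both earners report somewhat less income after the rate increase, and the proportional drop is larger for the higher earner. Each behavior a model accounts for needs its own empirically estimated adjustment of this kind, which is why the list of modeled behaviors tends to be short.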

Small adjustments in policy will result in small behavioral effects, so the models are relatively accurate with changes close to the baseline policy. However, as changes become more dramatic, the behavioral impacts may become far greater, and thus, it’s much harder to assess the accuracy of the model estimates.

Two challenges to learning microeconomic behavior

The limited accounting of microeconomic behavior is a known and significant weakness in the current generation of microsimulation models for tax policy. Therefore, it is appealing that the “AI Economist” paper presents a potential way to model complex behavior of economic actors.

Still, there are two large hurdles before this promise can be fulfilled: creating a realistic virtual economy and generating human-like behavior in AI agents.

Building a realistic virtual economy

For the resulting behavior to be meaningful, the gamified economy has to be far more realistic. The simplified version in which the AI actors collect stone and wood to build houses is not complex enough to create meaningfully realistic behaviors (this is not a criticism of the paper, which is a compelling proof-of-concept). Even the authors seem aware that the resulting tax rates from their simplified economic environment, which bounce up and down like a camel’s back, are not yet compelling.

Building a sufficiently realistic economy simulation would require an enormous amount of time. There need to be representations of more complex markets of employment, housing, education, finance, child and elder care, governments and much more. Historically, economists have been hesitant to invest much in expansive simulations of economies. There have been some efforts, such as ASPEN, a simulation model of the U.S. economy built at Sandia National Laboratories in the late 1990s. However, the public-facing models do not seem to have persisted with enough support to continue development. Plausibly, an open-source effort could attract enough communal support to continue this work, but it would also likely need long-term dedicated funding.

The dramatic increase in the collection of economic data, especially in the digital economy, could be helpful toward this goal. However, most of this data isn’t available to the public. If researchers were able to combine massive proprietary datasets from digital giants like Google, LinkedIn, Amazon, as well as credit card transaction data, a far fuller picture of the economy would emerge. Of course, consolidating this much data into one place has clear dangers to privacy, and is unfortunately more likely to result from adtech aspirations than policy research endeavors.

Although it is difficult and resource-intensive to build a sufficiently complex economic simulation that would result in believable behavior, research on video games suggests it is possible. Starting with Edward Castronova’s analysis of Everquest in 2002, economists have noted that video game markets are realistic enough to result in outsourcing, scarcity, inflation, and arbitrage. Their effects can even spill into the real world, such as beleaguered Venezuelans generating virtual gold to sell for real international currencies.

The learned economic behaviors of AI agents can only be as informative as the economic simulation is accurate. However, even an ultra-accurate virtual environment would not necessarily lead to realistic AI agents.

Constraining AI to realistic human behavior

The reinforcement learning system in AlphaGo regularly examines options 50 to 100 moves into the future—while not literally exhaustive, it certainly exceeds human capacity. This is representative of the tireless effort of modern AI. In the case of AI economic actors, this may be helpful in finding loopholes and potential tax avoidance (or even evasion) strategies in proposed tax schemes. It’s also highly individualized, meaning the behavior is specifically learned from the actor’s situation, such as their skillset and economic outlook. This may be a substantial improvement from the more universal, set-from-above behavior in more traditional agent-based modeling (though agent behavior has been allowed to evolve in the past).

On the other hand, it also means that AI actors need to be constrained in optimizing their economic situation. This isn’t to say the AI will not take leisure, since the definition of “optimal” is set by the researchers to be a mix of relaxation and earning money. However, it does mean that AI actors are preternaturally rational, maximizing their finances and leisure in a way that no human would. The goal of using AI to simulate people is to get closer to realistic human behavior, not machina economicus.

This issue was demonstrated clearly when human participants were asked to play the simulated economic game created by the AI economist team. The graphical user interface used by the subjects included additional guidance that “helped participants to better understand the economic environment” and yet they still “frequently scored lower utility than in the AI experiments.” This is not surprising. It’s reasonable to expect human behavior to be complex, partially irrational, and highly varied across individuals. While this is a substantial challenge, the constrained reinforcement learning proposed by this paper has a higher ceiling in quality than prior approaches. If future attempts improve on this approach, they may both improve accuracy in modeling small policy changes and enable believable modeling of policy environments substantially different from our own.

The long run

In pretty much any social-good application, AI does nothing on its own. However, with prudent application by domain experts, AI can lead to incremental improvements that, over time, have meaningful impact, as is true in policy research. Economists Susan Athey and Guido Imbens write, “though the adoption of [machine learning] methods in economics has been slower, they are now beginning to be widely used in empirical work.” They are referring to machine learning methods for econometric questions (such as causal inference), and less so to simulations, but it’s possible that too will change over time.

It is reasonable to assume that any adoption of reinforcement learning for economic behavior modeling will take a long time to be meaningfully applied. This is especially true since it requires more comprehensive economic simulations to be truly informative. Still, it may be that in 20 years, this approach will have considerable impact, perhaps usurping the current debate around dynamic scoring (that is, to what extent should macroeconomic factors be accounted for in microsimulation models). Many factors suggest this might be the case—rising availability of computing power and big data, the common acceptance of behavioral economics, and the broader shift away from theoretical models toward empirical economics. If it does catch on, a fundamentally more informative approach to economic analysis might lie ahead—one that could help design a better tax system or even tell us when taxes are the wrong solution to a policy problem.

The Brookings Institution is a nonprofit organization devoted to independent research and policy solutions. Its mission is to conduct high-quality, independent research and, based on that research, to provide innovative, practical recommendations for policymakers and the public. The conclusions and recommendations of any Brookings publication are solely those of its author(s), and do not reflect the views of the Institution, its management, or its other scholars.

Microsoft provides support to The Brookings Institution’s Artificial Intelligence and Emerging Technology (AIET) Initiative, and Amazon and Google provide general, unrestricted support to the Institution. The findings, interpretations, and conclusions in this report are not influenced by any donation. Brookings recognizes that the value it provides is in its absolute commitment to quality, independence, and impact. Activities supported by its donors reflect this commitment.
