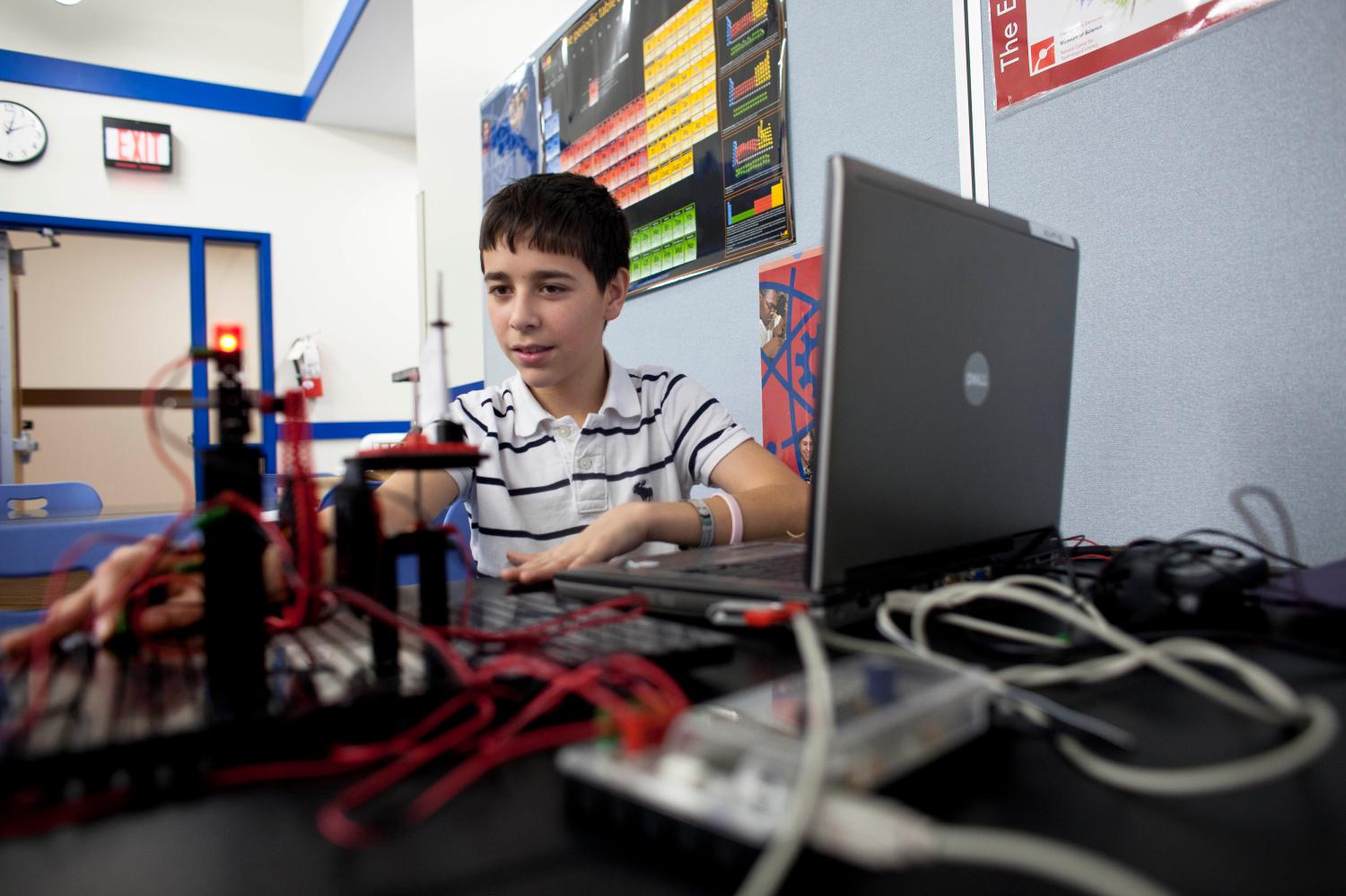

Last year’s enactment of the Every Student Succeeds Act, when viewed alongside other recent federally supported education initiatives such as the expansion of the national E-Rate program, the ConnectED initiative, and the Go Open campaign, was perceived by proponents of personalized learning as a clear victory. Their expectation is that under ESSA, educational innovations, especially ones that use technology to support effective instructional strategies, will be used increasingly in all classrooms, and more and more students will be supported in achieving their full potential.

Many of us conducting research on education technology, however, realize that the situation is much more nuanced than that. Until we know how technology can be effectively used to support individual students’ learning (often called “blended” or “personalized” learning), laws and initiatives such as the ones above—regardless of whether they increase the presence and use of technology in classrooms—will not necessarily lead to improvements in our educational system.

And what do we know about effective technology-enabled personalization? A casual glance at the evidence base shows little consensus: a steady stream of articles, videos, and research reports on blended and personalized learning continues to grow, each arguing that it is, or isn’t, effective. With all of these contradictions, what can we learn about the effectiveness of blended or personalized learning?

Researchers like me are wired to look for order in the chaos, trying to reconcile these conflicting points. But this task is easier said than done. Though this will be far from definitive, allow me to offer a few thoughts about one way I’ve found to make sense of the research in this field.

The Name Game

Differences in findings are partly due to the immaturity of this field, a major symptom of which is the lack of a clear, consistent definition of the framework under study (is this “blended learning” or “personalized learning”?). Indeed, the fact that we can’t even agree on a single term for the framework is itself a signal that we have much more work to do.

Our lack of common definitions makes it hard to tell whether irreconcilable findings are due to “cherry picking” of foundational or seminal findings to support the framework or, on the flip side, to a focus on the presence of technology alone (or some other isolated component of the framework) in order to discredit it. Perhaps a more straightforward interpretation is that the variation is simply due to the fact that blended and personalized learning is a large and complicated field, with many different technologies, many different ways to use them, and many different outcomes against which we could measure their effectiveness.

Whatever the case may be, the first step towards reconciling these conflicting takes on the evidence base may appear to be agreeing on a single definition of the framework. But in my view, arguing about this point is a distraction. Defining a framework (as opposed to a component of the framework, or a single instructional strategy) is more difficult, and more restrictive, than necessary if our goal is to support practice. Education research has shifted from studying isolated practices or strategies in vitro, to studying them in vivo, to studying them in combination. This is a good thing. However, it complicates our ability to interpret findings and assess their generalizability.

Embracing the chaos?

So if we cannot impose order through agreeing on names, must we accept the chaos and just give in to the hodgepodge of terms, definitions, and findings that exist today? Not necessarily. It is perfectly valid, and in fact preferred, to define the framework as you are studying it, both a priori, in order to measure your treatment and your comparison within your study, and in your report of findings, so that others can understand how your definition relates to theirs. As researchers, we need to be explicit, precise, and transparent about our own definition(s) of instructional technology use, no matter how much they may differ from others’ definitions.

Yet, given my proposed approach above, some may question whether we can really measure the effectiveness of blended or personalized learning without a common definition. My response is an emphatic “yes, we can!” We as researchers know this, but we also know how critical it is for others to participate. Practitioners may not understand that implementation is essential to understanding effectiveness, and that the evidence we seek to uncover actually exists in classrooms. So, we need the cooperation of educators, administrators, funders, and others in our community (e.g., developers and families) to support the production and curation of evidence.

I’m sure many of us understand that this can be daunting, since uncovering evidence is largely viewed as a “researcher’s” job in this field; but there are steps we can take to be more inclusive in our research. For example, we at The Learning Accelerator have developed a measurement agenda for multiple stakeholders, not just researchers. In Part 1 of this agenda, we have outlined different learning objectives for stakeholders to illustrate how they each can contribute to, and benefit from, this work. The Department of Education has also released a primer, as well as lessons learned from the Race to the Top District Grants for districts wanting to more fully participate in measuring effectiveness. In addition, the Department is building a web-based, interactive toolkit to help districts conduct “in house” studies of their own experiments in technology use. By including others in the research production process, we can also build the field’s capacity to consume research—increasing understanding of the role of research, and the applicability (and the limits) of findings.

Focus on theory, not names

In order to maximize the potential of laws like ESSA, and initiatives like E-Rate, ConnectED, and Go Open, knowledge about the effectiveness of innovations like blended and personalized learning is critical. In the end, though, researchers do not need to play the name game at all because theory building and testing is more important to improving practice than naming frameworks. Researchers have spent decades building the broad knowledge about how people learn, and which instructional strategies are effective; but as systems change and grow around us, our job has just begun. Education researchers are all, to some extent, applied researchers. We owe it to the field to continue theory building and testing, in a practical way, to provide educators with the evidence and the tools they need to best support all learners.

The Brookings Institution is committed to quality, independence, and impact.

We are supported by a diverse array of funders. In line with our values and policies, each Brookings publication represents the sole views of its author(s).