Few policy issues over the past several years have been as contentious as the rollout of new assessments aligned to the Common Core Standards (CCS). What began with more than 40 states working together to develop the next generation of assessments has devolved into a political mess. Fewer than 30 states remain in one of the two federally funded consortia (PARCC and Smarter Balanced), and that number continues to dwindle. Nevertheless, millions of children have begun taking new tests, either from the consortia, ACT (its Aspire series), or a new state assessment constructed to measure student performance against the CCS or other college/career-ready standards.

A key hope of these new tests is that they will overcome the weaknesses of the previous generation of state tests. Among these weaknesses were poor alignment with the standards they were designed to represent and low overall levels of cognitive demand (i.e., most items requiring simple recall or procedures, rather than deeper skills such as demonstrating understanding). There was widespread belief that these features of NCLB-era state tests sent teachers conflicting messages about what to teach, undermining the standards and leading to undesired instructional responses.

While many hope that the new tests are better than the ones they’re replacing, to date, no one has gotten “under the hood” of the new tests to see whether that’s true.[1]

Over the past year, working for the Thomas B. Fordham Institute with independent assessment expert Nancy Doorey, I have led just such a study. Ours is the first to examine the content of actual student test forms on PARCC, Smarter Balanced, and ACT Aspire, three of the most widely used assessments in the state testing market. We compared these three tests with the Massachusetts Comprehensive Assessment System (MCAS), which many believe to be among the best of the previous generation of state tests.

To evaluate these assessments, we needed a methodology that could gauge the extent to which each test truly embodied the new generation of college and career-ready standards (and the CCS in particular). To that end, we were part of a team that worked with the National Center for the Improvement of Educational Assessment to develop a brand new methodology that focuses on the content of state tests. That methodology is in turn based on the Council of Chief State School Officers’ (CCSSO) Criteria for Procuring and Evaluating High Quality Assessments, a document that laid out criteria that define high quality assessment of the CCS and other college/career-readiness standards.

I will not spend much time here discussing the methodology itself (

you can read about it in the full report

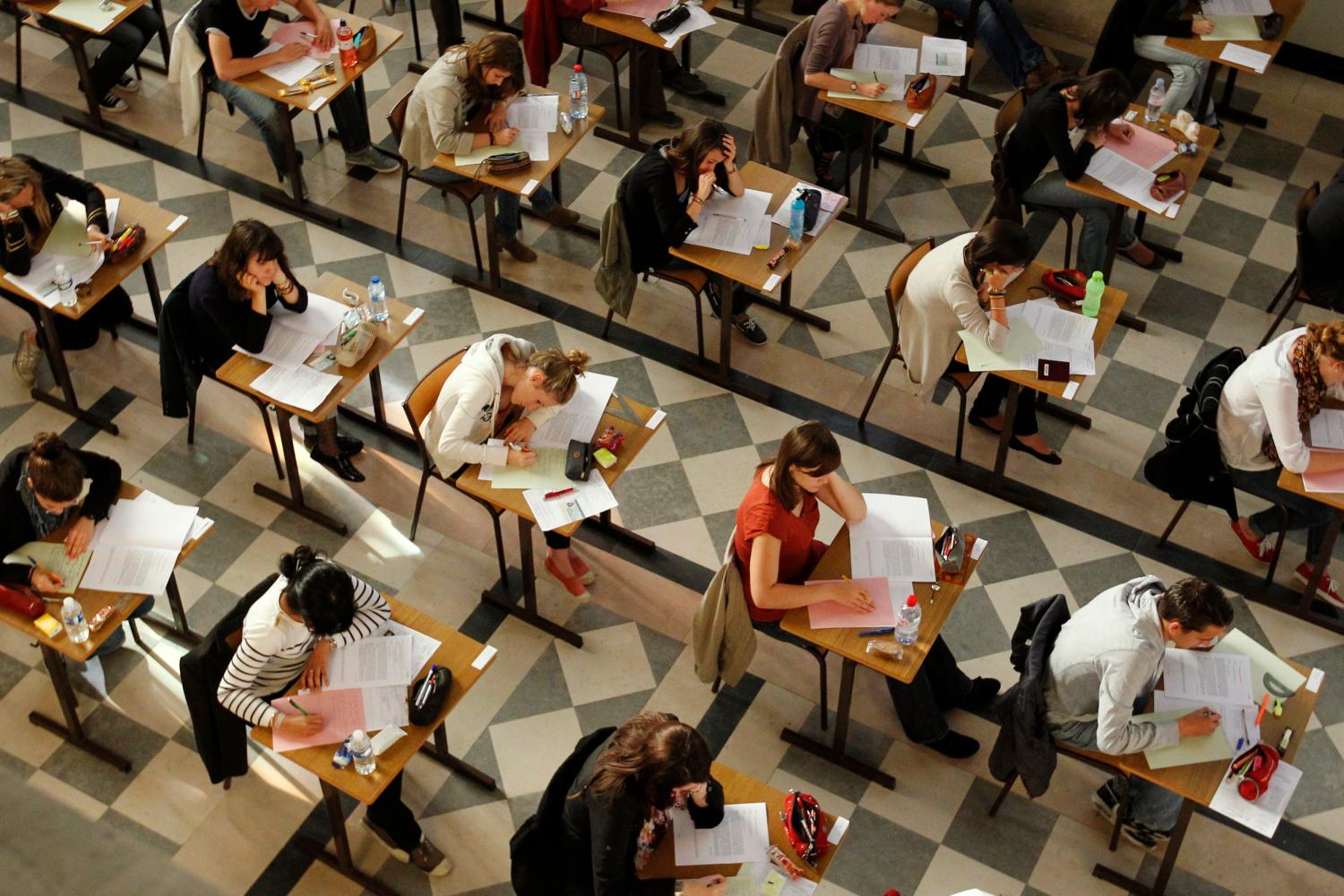

). But suffice it to say we brought together 30+ experts in k-12 teaching (math and ELA), the content areas, and assessment to evaluate each of the four tests item by item against the new methodology. The procedures involved multiple days of training and extensive, detailed rating of every item on a set of operational forms in grades 5 and 8 in math and English Language Arts (ELA). Here, I briefly summarize some of the key differences we saw across the four tests.

Our analysis found some modest differences in mathematics. For example, we found that the consortium tests were better focused on the “major work of the grade” than either MCAS or (especially) ACT. We also found that the cognitive demand of the consortium and ACT assessments generally exceeded that of prior state tests; if anything, our reviewers thought the cognitive demand of the ACT items was too high relative to the standards. Finally, reviewers found item quality was generally excellent, though there were a few items on Smarter Balanced (about one per form) that they thought had more serious mathematical or editorial issues.[2]

The differences in ELA assessments were much larger. The two consortium tests had an excellent match to the CCSSO criteria in terms of covering the key content in the ELA standards. For example, the consortium tests generally involved extended writing passages that required students to write open-ended responses drawing on an analysis of one or more text passages, whereas the MCAS writing passages did not require any sort of textual analysis (and writing was only assessed in a few grades). The consortium tests also had a superior match to the criteria in their coverage of research and vocabulary/language. Finally, the consortium tests had much more cognitively demanding tasks that met or exceeded the expectations in the standards.

Overall, reviewers were confident that each of these tests was a quality state assessment that could measure student mastery of the CCS or other college/career-ready standards. However, the consortium tests stood out in some ways, mainly in ELA. I encourage you to read the report for more details on both the methods and the results.

Of course, this is not the only kind of analysis one might do of the new state tests. For instance, researchers need to carefully investigate the validity and reliability evidence for new state tests, and there are plans for someone (other than me, thank goodness) to lead that work moving forward. Examples of that kind of work include the Mathematica predictive validity study of PARCC and MCAS and the recent work investigating mode (paper vs. online) differences in PARCC scores. We need more evidence about the quality of these new tests, whether it’s focused on the content (as in our study) or the technical properties. It is my hope that, over time, the market for state tests will reward the programs that have done the best job at instantiating the new standards. Our study provides one piece of evidence to help states make those important decisions.

[1] One previous study examined PARCC and Smarter Balanced focusing on depth of knowledge and overall quality judgments, finding the new consortium tests were an improvement over previous state tests.

[2] Because Smarter Balanced is a computer adaptive assessment, each student might receive a different form. We used two actual test forms at each grade level in each subject—one form for a student at the 40th percentile of achievement, and one at the 60th percentile—as recommended in the methodology. For PARCC and ACT we also used two forms per grade, and for MCAS we used one (because only one was administered).

The Brookings Institution is committed to quality, independence, and impact.

We are supported by a diverse array of funders. In line with our values and policies, each Brookings publication represents the sole views of its author(s).