Data scientists at Facebook recently published their research on how people consume political news on the social network. The study is noteworthy because the researchers had direct access to Facebook’s own data. It examines the factors that influence how likely liberals and conservatives are to click on cross-cutting news articles, meaning those that run counter to their beliefs. Many Americans get a significant portion of their news from Facebook, and in effect the social network is the largest news platform in the U.S. The study shows how the makeup of our social networks, the Facebook News feed algorithm, and individual user choice all influence the content people consume.

The online political bubble

Social scientists have built a large body of evidence that people tend to befriend others with similar political beliefs. The Facebook study demonstrates that this polarization phenomenon also applies to the social network. The study finds that, roughly speaking, a Facebook user has five politically like-minded friends for every one friend on the other side of the spectrum. In a democracy it is generally valuable for citizens to encounter a variety of political opinions. That principle does not, however, specify the “right” number of friends to have from across the political aisle.

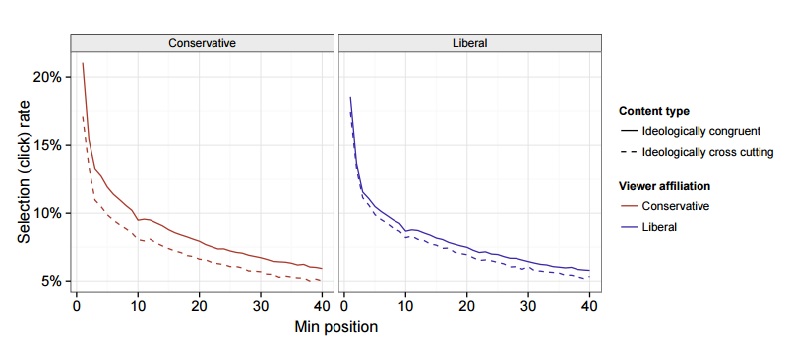

The Facebook News feed does limit the number of cross-cutting links that users encounter. The News feed algorithm ranks stories based on a variety of factors, including a user’s history of clicking on links to particular websites. If a user regularly clicks on stories from sources with a partisan leaning, then the chances of seeing a similar story increase. The News feed algorithm functions in this way to make the experience of using the website more enjoyable, but the approach also has some unintended negative consequences. The authors find that the News feed algorithm reduces exposure to politically cross-cutting content by 5 percent for conservatives and 8 percent for liberals.

The Facebook News feed algorithm

Source: Science

Consuming content to confirm your beliefs

Individual choice also plays a role in exposing Facebook users to less cross-cutting content. Users make their own decisions about the stories they want to read. Even after controlling for where the stories appear in the News feed, the authors estimate that user choice decreases the likelihood of clicking on a cross-cutting link by 17 percent for conservatives and 6 percent for liberals. The study does not present the findings in a way that fully separates the effects of the algorithm from those of individual choice, and the two factors certainly influence each other. Still, it appears that the impact of individual users’ choices is larger in magnitude than that of the News feed algorithm.

Facebook has tweaked the News feed algorithm for a variety of reasons. The company could leverage the popularity of its network to help mitigate the impact of political polarization. The website could change the algorithm to rank cross-cutting news stories more highly. It could also include cross-cutting links in the trending section of the website. Facebook is not just a social network. It’s the platform that millions of people use to learn about current events. Taking small steps to help combat political polarization will, in the long run, add to the trust that users have in Facebook.

Commentary

Political polarization on Facebook

May 13, 2015