The European Union’s (EU) AI Act (AIA) aspires to establish the first comprehensive regulatory scheme for artificial intelligence, but its impact will not stop at the EU’s borders. In fact, some EU policymakers believe it is a critical goal of the AIA to set a worldwide standard, so much so that some refer to a race to regulate AI. This framing implies that not only is there value in regulating AI systems, but that being among the first major governments to do so will have broad global impact to the benefit of the EU, a phenomenon often referred to as the “Brussels effect.” Yet, while some components of the AIA will have important effects on global markets, Europe alone will not be setting a comprehensive new international standard for AI.

The extraterritorial impact of the AIA will vary widely between sectors and applications, but individually examining the key provisions of the AIA offers insight into the extent of a Brussels effect that can be expected. Considering three core provisions of the AIA reveals that:

- AI systems in regulated products will be significantly affected around the world, demonstrating a clear Brussels effect, although this will be highly mediated by existing markets, international standards bodies, and foreign governments.

- High-risk AI systems for human services will be highly influenced if they are built into online or otherwise internationally interconnected platforms, but many AI systems that are more localized or individualized will not be significantly affected.

- Transparency requirements for AI that interacts with humans, such as chatbots and emotion detection systems, will lead to global disclosure on most websites and apps.

Overall, this analysis suggests more limited global impact from the AIA than is presented by EU policymakers. While the AIA will contribute to the EU’s already significant global influence over some online platforms, it may otherwise only moderately shape international regulation. Considering this, the EU should focus on a more collaborative approach in order to bring other governments along with its perspective on AI governance.

Introduction

The debate among EU institutions over what exactly the AIA will do (even the definition of “AI” is still being debated) continues, yet the proposal is sufficiently settled to analyze its likely international impact. The AIA will create a process for self-certification and government oversight of many categories of high-risk AI systems, impose transparency requirements on AI systems that interact with people, and attempt to ban a few “unacceptable” qualities of AI systems. Other analyses from the Brookings Institution have offered broad overviews of the legislation, but only some of its provisions are especially important for how this law will shape global algorithmic governance. Three categories of AI requirements are the most likely to have global consequences and warrant separate discussion: high-risk AI in products, high-risk AI in human services, and AI transparency requirements.

These AIA provisions will unquestionably have some extraterritorial impact, meaning they will affect the development and deployment of many AI systems around the world and further inspire similar legislative efforts. However, the idea of a Brussels effect has important implications beyond simple influence outside the EU’s borders: it specifically entails that the EU can unilaterally set rules that become worldwide standards, not through coercion, but through the appeal of its 450 million-strong consumer market.1

The Brussels effect manifests in two related forms, “de facto” and “de jure,” which can be distinguished using the case of the EU’s data privacy rules, specifically the General Data Protection Regulation (GDPR) and the ePrivacy Directive. Rather than developing separate processes for Europeans, many of the world’s websites have adopted the EU’s requirements to ask users for consent to process personal data and use cookies. This reflects the de facto Brussels effect, in which companies follow the EU’s rules everywhere in order to standardize a product or service, simplifying their business processes. This is often followed by the de jure Brussels effect, in which other countries pass formal legislation that aligns with the EU law, partly because multinational companies strongly oppose rules that would conflict with their recently standardized processes. This dynamic helps explain why these privacy regulations have led many other countries to pass conforming or similar data protection legislation.

Therefore, as with data privacy, understanding the possible effect of the AIA requires considering both of these components: What will be the international repercussions of the AIA (the de facto Brussels effect), and to what extent will it unilaterally shape international rulemaking (the de jure Brussels effect)?

EU lawmakers have cited the Brussels effect as a reason to quickly pass the AIA, in addition to its core goals of protecting EU consumers and, to a lesser extent, spurring EU AI innovation.

This idea, that being the first major government to pass horizontal AI regulation will confer a significant and lasting economic advantage, is prevalent among EU policymakers. However, a recent report from the Center for European Policy Studies argues that Europe may lose its competitive advantage in digital governance as other countries invest in digital regulatory capacity, a key enabler of the EU’s influence over global rulemaking, and catch up to the EU. Other academic research has argued that the underlying conditions of the AI market, such as the high costs of moving AI companies to the EU and the difficulty of measuring the impact of AI regulation, reduce the value of regulatory competition, in which jurisdictions aim to attract businesses through clear and effective regulations.

These broader perspectives offer reasons to be skeptical of the AIA’s global impact, but they do not analyze the AIA’s individual requirements with respect to actual business models of digital firms. Yet it is this interaction between the AIA’s specific provisions and the architecture of AI systems deployed by technology companies that suggests a more modest global impact. This paper will focus on three core components of the AIA (high-risk AI in products, high-risk AI in human services, and AI transparency requirements) and attempt to estimate their likely global impact. Notably, this requires attempting to forecast the change in corporate behavior in response to the AIA—a difficult endeavor, rife with potential for miscalculation. Despite this risk, anticipating the reactions of corporate stakeholders is necessary for evaluating and understanding the true effects of the AIA, and discerning how to build toward international consensus.

High-risk AI covered by product safety legislation

The proposed AIA covers a wide range of AI systems used in already-regulated sectors, such as aviation, automotive vehicles, boats, elevators, medical devices, industrial machinery, and more. In these cases, the requirements for high-risk AI are incorporated into the existing conformity assessment process as performed by sectoral regulators, such as the EU Aviation Safety Agency for planes, or by a mix of approved third-party organizations and a central EU body, as is the case for medical devices. This means that foreign companies selling regulated products in the EU already go through the conformity assessment process. The AIA changes only the specifics of this oversight, not its scope or process.

Still, these changes are not trivial. Broadly, companies placing AI systems into regulated products sold in the EU will have to pay at least some specialized attention to those AI systems. They will need to implement a risk management process, conform to higher data standards, document the systems more thoroughly, systematically record their actions, provide users with information about how they function, and enable human oversight and ongoing monitoring. Sectoral regulators that have been dealing with AI for a long time have likely already implemented some of these requirements, but most are likely new. The result is that AI systems within regulated products will need to be documented, assessed, and monitored on their own, rather than only as part of evaluating the broader function of the product. This sets a new and higher floor for considering AI systems in products.

In the AIA’s text, the extraterritorial scope is limited to products sold in the EU, yet there is still a high likelihood that it leads to international conformity. To continue exporting to Europe’s large consumer market, foreign companies will have to put these processes into place. Although the new rules are limited to the function of AI systems, many of these products are manufactured in large-scale industrialized processes (imagine a conveyor belt). Consistency in these conveyor belt processes means cheaper products and more competitive prices, whereas variations require different conveyor belts, plus varying testing and safety processes, and are generally more expensive. It’s therefore reasonable to assume that many foreign manufacturers will adapt to the AIA’s rules, and once they have, they will often have a strong incentive to keep any domestic laws as consistent as possible with those in the EU.

This, on its face, appears to be a classic instance of the Brussels effect, and academic research has found that the EU is most influential when it has the power to exclude products from its market, as it does here. Yet the EU unilaterally setting the rules is not a certain outcome. Three factors will reduce Europe’s influence on these new rules: existing markets, international standards bodies, and foreign governments.

First, because these new rules will affect already regulated products, many foreign companies already selling products in the EU will be monitoring these rules closely. These include major exporters of medical devices from the U.S., robotic arms from Japan, vehicles from China, and many more. These large international businesses will not allow themselves to be regulated out of the EU without any say in the matter; they will actively engage to make sure the rules don’t disadvantage their market share.

These companies will be helped by how the EU standards bodies are integrated into global standards organizations. The AIA does not directly establish specific standards for the myriad of products that use AI, as it is broadly understood that this would be impossible for any legislative body to do. Rather, the AIA delegates significant authority to the European standardization organizations (ESOs) for this task.2

Critically, the ESOs do not operate solely in the European context. There is a “high level of convergence” between the ESOs and international standards bodies that dates back decades.3 This strong connection to international standards bodies gives foreign companies a clear path to being heard as the EU standards are written. In fact, the relevant work by standards bodies inside and outside the EU has been ongoing for years, paving a long runway for global corporations to offer expertise and engage in political influence.

Lastly, foreign governments will also work to influence the standards that the ESOs develop. This is most apparent in the EU-U.S. Trade and Technology Council, which has included a working group on technology standards. It is also worth watching whether the newer EU-India Trade and Technology Council tackles AI standards. This continues a recent trend in which countries including the United States and China have become far more actively engaged in strategic approaches to international standard setting as a method of international technology competition. With active engagement and influence from international companies, global standards bodies, and foreign governments, the EU can be said only to be demanding that there be rules for AI in products; it will not be alone in setting them.

High-risk AI for human services

The second category of high-risk AI systems does not fit into the EU’s pre-existing regulatory framework but instead comprises a specified list of AI systems that affect human rights. Sometimes called “stand-alone” AI systems, these include private-sector AI applications in hiring, employee management, access to education, and credit scoring, as well as AI used by governments for access to public services, law enforcement, border control, utilities, and judicial decision-making. Recently suggested changes from the European Parliament could add insurance, medical triage, and AI systems that interact with children or affect democratic processes. The requirements on government use of AI will be important for Europe (where a broken algorithm for detecting social welfare fraud led to the resignation of the Dutch government) but will, at most, serve as inspiration for the rest of the world, not new rules. The global impact will instead fall on private-sector AI systems in human services.

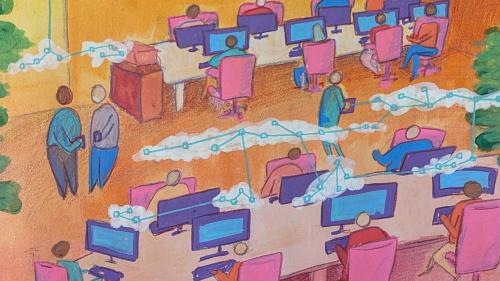

Understanding the regulation’s effect on private-sector AI in human services first requires a distinction between AI used in platforms and AI used in other software. This distinction is critical to examining the extraterritorial effects of the AIA—the more an AI system is incorporated into geographically dispersed (rather than localized) platforms, the more comprehensively the AIA’s requirements will affect its whole, rather than just its use in the EU.

LinkedIn, as an entirely interconnected platform with no geographic barriers, provides a useful example at one end of this spectrum. Many of LinkedIn’s algorithms may fall into the high-risk category of the AIA,4 including those for placing job advertisements and recommender systems for finding job candidates. These AI systems use data from LinkedIn’s network of users and their interactions, meaning it is difficult to isolate the models in any clear geographic sense. This suggests that changes to the modeling process or function, as well as the documentation and analysis of the AI systems, will be largely universal across the platform.

This also means that it would be very difficult for LinkedIn to meet both the EU standards and a hypothetical, different set of regulations from another country. Any conflicts would create an immediate problem for LinkedIn, possibly requiring it to create different risk management processes, try to minimize different metrics of bias, or keep multiple sets of records. It could even require changing the function of the website for users in different geographies, a type of change that platforms are loath to make. As a result, LinkedIn and its parent company, Microsoft, can be expected to strongly resist additional legislative requirements on AI from other countries that are incongruous with the AIA, in turn making such requirements less likely to pass. This is a clear manifestation of the Brussels effect (both de facto and de jure).

This Brussels effect becomes far less pronounced for AI in human services that is not connected to dispersed platforms but is instead built on local data and has more isolated interactions with individuals. For instance, consider a multinational company that outsources the development of AI hiring systems that analyze resumes and job candidate evaluations. This AI developer will build many different AI systems (or, more precisely, AI models) for different job openings and possibly for different geographic regions (already made necessary by, for instance, different languages). Another example is educational technology software, which may connect a network of students but will generally be deployed independently in different localities.

In these situations, it is quite likely that the developer would follow the AIA requirements only for the AI systems used in the EU, which are subject to the AIA. For the models not directly subject to the AIA, the developer might follow the AIA rules more selectively. The developer may choose to always generate and store logs that record the performance of an AI system, since once this capability is built it may be easy to apply universally and may also be seen as valuable to the company. Yet there is no reason to assume the AIA requirements will be universally followed, especially those that require significant effort for each AI system. Although there are some efficiency gains in having a consistent process for building models, code is far more flexible than a “conveyor belt” industrialized process. Further, without any possibility of oversight, as would often be the case for the non-EU models, companies may not be as scrupulous in data collection and cleaning or in model testing. Human oversight and active quality management could be especially expensive, so many companies may decline to provide them outside the EU.

Broadly speaking, the less an AI system is built into an international network or a platform, the less likely it is to be directly affected by the AIA, resulting in a lessened Brussels effect. However, this distinction between AI in platforms versus localized software is easy to blur. For example, AI systems in employee management software (which will qualify as high-risk AI) will often be built into platforms that are shared across a business. While this is not a broadly accessible online platform, any multinational business with employees in the EU that uses this software will need the AI system to be compliant with the AIA. In this case, it is not obvious what might happen. It’s possible the vendor would comply with the AIA universally so its clients can freely use its software in the EU. However, it is also possible that to cover additional costs from the AIA, the vendor would charge higher subscription rates for the software to be used in the EU.

So, will the AIA have a Brussels effect on AI in human services? It is safe to say yes for high-risk AI systems that are built into international platforms; otherwise, perhaps not as much.5 Certainly, between the AIA, the GDPR, and the recently passed duo of the Digital Services Act and the Digital Markets Act, the European Commission will have an enormously influential set of tools for governing online platforms, far beyond those of any other democratic government.

Disclosure of AI that interacts with humans

The AIA will create transparency obligations for AI systems that interact with people but are not obviously AI systems. The most immediate international repercussions will arise from chatbots used for websites and apps, although one can imagine this eventually affecting AI avatars in virtual reality, too. If a chatbot doesn’t fall into a high-risk category (as it might by advising on eligibility for a loan or public service), then the only requirement would be that people are informed that they are interacting with an AI system, rather than a human. This would cover the many chatbots in commercial phone apps (e.g., those used by Domino’s and Starbucks) and websites, likely leading to a small notification stating that the chatbot is an AI system.

On websites, it is reasonable to expect the AIA to have a notable worldwide effect. Generally, adding a disclosure that states that a chatbot is an AI system would be a trivial technical change. This requirement is therefore unlikely to convince companies to create a separate chatbot for European visitors or, alternatively, deny Europeans access to the chatbot or website—the most likely outcome is that there would be universal adoption of this disclosure. Since these companies would be generally complying with the EU law globally without being forced to do so, this would be a clear instance of the de facto Brussels effect.

Chatbots used within smartphone apps may play out differently. Many commercial apps are already designed and provided specifically for different countries due to different languages or other legal requirements. In this case, the apps within European markets will disclose, but those operating elsewhere may not feel any pressure to make this change.

The transparency requirements also apply to emotion recognition systems (which, as defined by the AIA, are rarely built into websites) and to deepfakes. In any case, while the AIA’s disclosure requirement may become widespread practice, the impact of this change will be relatively minor, even if it may be one of the more visible global effects of the AIA.

Conclusion and policy recommendations

A careful analysis of its provisions suggests only targeted extraterritorial impact and a limited Brussels effect from the AIA. The rules on high-risk AI in products will be mediated by global market forces, while the rules on high-risk AI in human services will have broad impact mainly on international platforms. Further, the transparency requirements will lead to only minor changes, and the few banned qualities of AI systems will mostly be restricted to the EU. There may be other effects, such as inspiring other nations to establish AI regulation, potentially on the same high-risk categories of AI, but this would reflect voluntary emulation rather than the more coercive side of the Brussels effect that is considered here.

Of course, the extraterritorial impact of the EU AI Act will be complex, as many businesses will respond based on their own unique circumstances and incentives, and unintended consequences are certain. This complexity makes prognostication, especially concerning the reactions of commercial actors, quite risky. However, it is worthwhile to try to anticipate the AIA’s outcomes for global governance for two reasons. First, understanding the specifics of the extraterritorial nature of the AIA is helpful for other governments to consider how they respond to the AIA, especially when implementing their own regulatory protections. Second, this analysis challenges the common European viewpoint that the economic benefits of being the “first mover” and the resulting Brussels effect are good reasons to quickly pass the AIA.

For the rest of the world, while the effects of the AIA may not be overwhelming, they still warrant consideration. Sector-specific business models and AI applications could lead to unexpected situations and possibly to conflicts with existing regulations. Working on rules for interconnected platforms will be particularly difficult, especially for those with high-risk AI systems. Other countries can be more proactive in working toward cohesion, both through the standards process and through regulatory stocktaking. For the U.S., it would be especially valuable to follow through on the November 2020 guidance from the Office of Management and Budget that asks agencies to review their own regulatory authority over AI systems. Although the deadline passed months ago, only the Department of Health and Human Services has released a thorough public accounting of its AI oversight responsibilities. Rather than waiting for the EU and the AIA, the Biden administration should instead follow through on the promise of an AI Bill of Rights.

As for the EU, there are many good reasons to pass the AIA, such as fighting fraud, reducing discrimination, and curtailing surveillance capitalism, but setting a global standard may not be one of them. This is particularly important to the EU’s economic argument for the AIA, as many European policymakers have argued that being the first government to implement a regulatory regime will, in the long term, benefit the EU companies that adopt it. This line of thinking argues that, when the EU implements a regulation, its companies adapt first. Then, when the rest of the world adopts the European approach, the EU-based companies are best prepared to succeed in a global market with rules they have already adjusted to. Yet this logic only holds if the EU rules significantly impact global markets, which, as this paper has shown, is uncertain, if not unlikely.6

This conclusion also suggests that the EU should advance the AIA on a timeline that meets Europe’s needs, rather than rushing to the finish line. The AIA has already been significantly delayed by political disputes, so if EU lawmakers see an urgent need to address AI harms (as is well demonstrated in the U.S.), then, by all means, they should press quickly ahead. However, doing so for the purpose of setting a global standard is misguided in the case of the AIA. That the AIA will not have a strong Brussels effect also has implications for the EU’s political messaging. The EU might have more to gain by signaling more openness to feedback from and cooperation with the rest of the democratic world, rather than saying it is “racing” to regulate—or else the EU may find itself truly alone at the finish line.

Microsoft is a general, unrestricted donor to the Brookings Institution. The findings, interpretations, and conclusions posted in this piece are solely those of the authors and not influenced by any donation.


Footnotes

1. Some considerations of the Brussels effect also include the normative power of EU rules, but this is set aside in this paper in order to focus on more tangible changes in law and business incentives.

2. Specifically, the duo of CEN and CENELEC are expected to take leading roles, while ETSI also appears to be angling for a part. That the AIA delegates so much authority to these standards bodies has been a consistent concern of many observers. (CEN is the European Committee for Standardisation; CENELEC is the European Committee for Electrotechnical Standardisation; ETSI is the European Telecommunications Standards Institute.) One caveat to this argument is that the AIA may give the European Commission the power to issue technical standards itself, as an alternative to CEN/CENELEC. However, this is unlikely to be a common occurrence, given how laborious standard creation is likely to be.

3. Specifically, the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC).

4. LinkedIn will also qualify as a Very Large Online Platform under the Digital Services Act, and its parent company Microsoft qualifies as a Gatekeeper under the Digital Markets Act, which could make LinkedIn, on paper, the most regulated social media website in the EU.

5. One last, albeit minor, worldwide impact of the AIA is that it may create more public transparency into complex software systems. All high-risk AI systems need to be individually registered in a public EU-wide database with a description and their intended purpose. This might be especially informative for gig worker platforms, such as ride-sharing applications. Companies such as Uber, Lyft, and Bolt use many AI systems that will qualify as high-risk under the worker-management provision, and so they will be required to register their AI systems for trip routing, matching customers to drivers, pricing and driver payments, estimating trip time, and more. Although the amount of documentation required of each AI system in the public registry is paltry, it may uncover currently unknown AI applications used by these global companies. If EU regulators follow up with investigations into some of these, it could uncover much more about the world’s most troubling AI applications.

6. There are other economic benefits to how the AIA addresses the fraudulent claims, discriminatory outcomes, and consumer harms of unregulated AI systems. It is interesting to note that European private investment in AI increased dramatically from 2020 to 2021, from $2 billion to nearly $6.5 billion. Even so, European investment was eclipsed by that of both the U.S. and China, and it remains far below their totals of $53 billion and $17 billion, respectively. Europe’s share of total worldwide AI investment also grew in the same period (from around 4.5% to just over 7%), which is notable since this occurred during the public debate about the AIA, although it is impossible to know what would have happened without the proposed legislation.

The Brookings Institution is committed to quality, independence, and impact.

We are supported by a diverse array of funders. In line with our values and policies, each Brookings publication represents the sole views of its author(s).