With the intense attention focused on large language models (LLMs) like ChatGPT, AI today is often discussed as a unitary force. But this framing obscures more than it reveals. AI is not one thing—it is a sprawling field of interlocking techniques, tools, and capabilities, developing and deploying across domains and specific applications as diverse as molecular property prediction, video super-resolution, and multilingual speech generation.

This research examines and illustrates these domains and applications. The exercise underscores a key reality: AI is neither singular nor static. Rather, it comprises dynamic capabilities of growing complexity across numerous domains, including medical diagnostics, physical robotics, game-playing agents, graph reasoning, and more. This landscape is evolving rapidly, and the result is not a single revolution or form of intelligence, but instead a layered and incrementally expanding ecosystem of capabilities.

This diversity matters, not just to the marketplace but for all aspects of AI strategies and governance. It shapes what kind of testing is needed, how risk is assessed, and how or why countries choose to regulate or adopt specific forms of AI. Treating AI as one monolithic thing in this diverse ecosystem can amount to trying to boil the ocean. The rest of this piece explores how to avoid that trap: first, by showing the breadth of AI domains and tasks; second, by unpacking how these capabilities map onto real systems and risks; and finally, by discussing the governance and sovereignty strategies that emerge once AI is treated as plural rather than singular.

Breaking down multiple AIs

To understand this diversity, we scraped an open dataset of AI models from Papers with Code’s State-of-the-Art (SOTA) leaderboard. The dataset covers over 20,000 tasks across hundreds of benchmarks. It draws on technical papers (such as arXiv preprints and AI conference proceedings), leaderboards (such as SuperGLUE, HELM, and visual question answering), and linked GitHub repositories, and it records benchmark performance across thousands of machine learning and AI tasks and models.

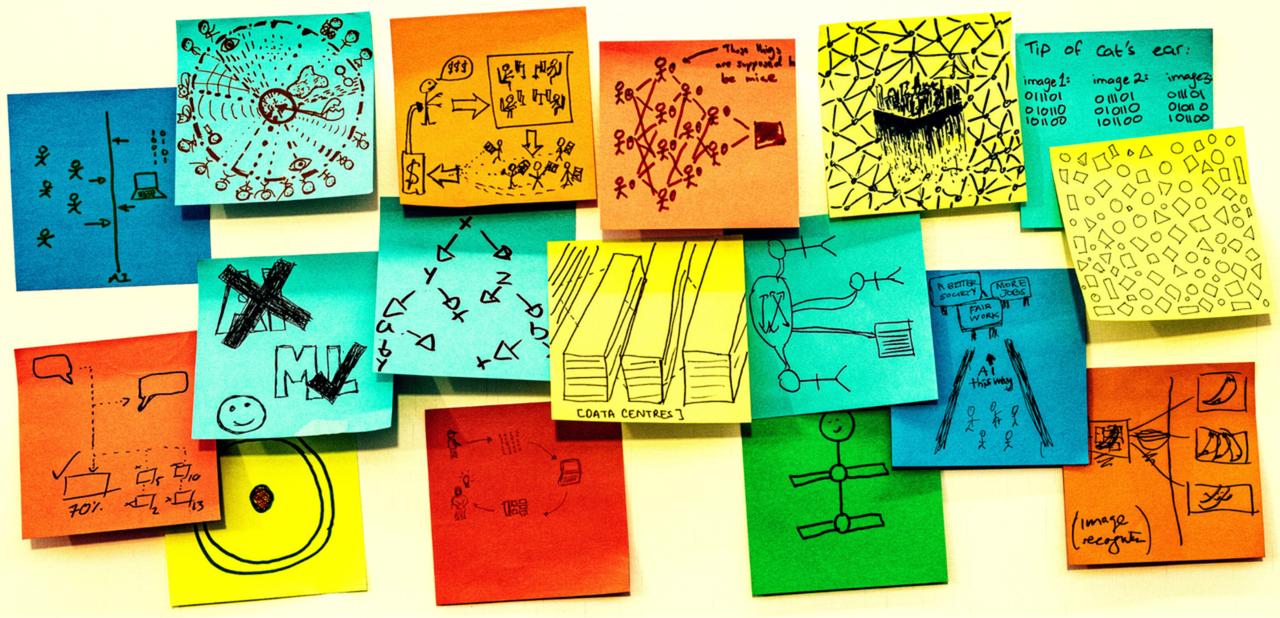

Our snapshot spans the modern deep learning era (from the 2010s onward), with denser coverage after 2015 as benchmarks proliferated. From the raw data, we first curated a hierarchical structure of AI domains, subdomains, and tasks. This taxonomy reflects how AI capabilities are evolving in both breadth and specificity. Table 1 shows this structure at a high level, describing each domain and the key research and training required for each.

In turn, Figure 1 presents a more granular treemap visualization of the specific subdomains and tasks within each domain of the full taxonomy, along with the volume of research activity within each; it is also available on a public dashboard for further exploration. The result is a bottom-up, data-driven view of AI progress, reflecting what researchers actually benchmark “in the wild,” rather than a top-down schema such as the National Institute of Standards and Technology (NIST) taxonomy or the EU AI Act large model provisions.
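As a rough illustration of the bottom-up approach described above, the sketch below nests flat task records into a domain, subdomain, and task hierarchy and rolls counts up to the domain level, which is the kind of quantity a treemap block would represent. The record layout, the subdomain labels, and every count are hypothetical placeholders chosen for illustration; they are not values from the dataset, and this is not the authors' actual pipeline.

```python
from collections import defaultdict

# Hypothetical records: (domain, subdomain, task, benchmark_count).
# Names and counts are illustrative placeholders, not values from the dataset.
RECORDS = [
    ("Computer Vision", "Video", "Video Super-Resolution", 12),
    ("Computer Vision", "Pose", "3D Pose Estimation", 8),
    ("Speech", "Generation", "Multilingual Speech Generation", 3),
    ("Graphs", "Chemistry", "Molecular Property Prediction", 5),
]


def build_taxonomy(records):
    """Nest flat records as domain -> subdomain -> task -> count."""
    tree = defaultdict(lambda: defaultdict(dict))
    for domain, subdomain, task, count in records:
        tasks = tree[domain][subdomain]
        # Sum counts if the same task appears more than once.
        tasks[task] = tasks.get(task, 0) + count
    return tree


def domain_totals(tree):
    """Roll task counts up to the domain level (one number per treemap block)."""
    return {
        domain: sum(sum(tasks.values()) for tasks in subdomains.values())
        for domain, subdomains in tree.items()
    }


taxonomy = build_taxonomy(RECORDS)
totals = domain_totals(taxonomy)

for domain, total in sorted(totals.items(), key=lambda kv: -kv[1]):
    print(f"{domain}: {total}")
```

Because the hierarchy is derived from the records themselves rather than from a predefined schema, new tasks or subdomains appear in the tree as soon as they show up in the data.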

In addition to the task diversity shown above, there is growing diversity in model offerings. Even among leading providers, there has been a shift from monolithic general-purpose systems to smaller specialized models. For example, Meta’s LLaMA 3 family and Mistral’s Mixtral 8x7B show that open models can be tuned for enterprise or domain-specific use. Google DeepMind has released not just Gemini for language but also domain-specific systems like AlphaFold for predicting protein folding and GraphCast for weather forecasting. Alibaba has released domain-specific models including QwenCoder, QwenMath, QwenAudio (for speech recognition tasks), and QwenImage (for image generation). Amazon’s enterprise models include AWS App Studio for business applications, AWS HealthScribe for generating clinical notes, and DeepFleet for robot traffic management.

These examples underscore that the future of AI is not only about scaling general-purpose models but also about developing and tailoring models to specific functions, cost profiles, and deployment needs. In this sense, it is useful to distinguish between different types of AI: so-called “narrow AI,” which is purpose-built for a defined set of tasks (e.g., AlphaFold for protein folding), and “general-purpose or generative AI,” which is trained on broad datasets to produce flexible outputs (e.g., large language models). Current development is moving in both directions—narrower, specialized systems for domain performance and broader generative models for general interaction—reinforcing that AI’s trajectory is plural rather than singular.

Multiple intelligences, multiple models, multiple strategies

The treemap (Figure 1) and the multiplicity of AI models reinforce that language models are just one slice of the broader AI stack. Vision systems, control agents, and 3D pose estimators have little to do with linguistic performance and much more to do with physical infrastructure, health care, and industrial control. While the underlying building blocks of neural networks (e.g., backpropagation, gradient descent) are common across domains, this does not mean that LLMs themselves are the drivers of progress in vision, robotics, or health care. In fact, most systems in autonomous driving or medical imaging use specialized, domain-tuned models rather than general LLMs, underscoring again the plurality of AI approaches.

The diversity of capabilities in artificial intelligence reflected in these domains and functions aligns with evolutionary analogs in human intelligence. Visual perception, including edge detection and motion sensitivity, is among the oldest cognitive functions, tracing back over 500 million years to the Cambrian explosion. Similarly, the ability to model space and time, supported in humans by structures like the hippocampus, evolved roughly 200 million years ago and is shared across many vertebrates.

By contrast, language is a far more recent adaptation, with estimates ranging from 50,000 to 200,000 years ago depending on how we define symbolic communication and syntactic structure. This historical context matters for AI: Many of the tasks we now associate with “intelligence” in machines—perception, spatial reasoning, motor control—draw on evolutionary capacities that long predate language.

Building on this evolutionary brain development, the educational psychologist Howard Gardner developed the concept of “multiple intelligences”: the idea that intelligence is not one-dimensional but a variety of attributes and skills that result in different ways of learning. He defined intelligence as a “biopsychological potential to process information that can be activated in a cultural setting to solve problems or create products that are of value in a culture.” The multiple domains of AI do much the same. Gardner identified eight different forms of intelligence: linguistic, logical-mathematical, spatial, musical, bodily-kinesthetic, interpersonal (often described as social), intrapersonal, and naturalistic (understanding of nature). LLMs clearly operate in the linguistic domain, and applications of AI are increasingly adept in the logical-mathematical, musical, and visual domains, while the technology is less developed in others. Of course, there are limits to the analogy. Biological intelligence is embodied in neurons rather than silicon, and in the case of language, AI builds upon pre-existing human linguistic artifacts. Still, if intelligence in nature evolved in multiple dimensions, why would artificial intelligence modeled on neurons be any different?

Today’s policy conversations often focus on large language and multimodal models even though AI is evolving through more specific capabilities. AI safety, alignment, and governance must take this more diverse landscape into account. A system trained for spatial reasoning or protein folding presents very different risks, affordances, and evaluation needs than one trained to generate text. These are differences that a risk-based approach should account for, though policy debates often lag in practice. Treating AI as a monolith, centered on language, risks obscuring critical dimensions of capability, safety, and policy design. As the internet scholar Milton Mueller writes, “‘artificial intelligence’ is not a single technology, but a highly varied set of machine learning applications enabled and supported by a globally ubiquitous digital ecosystem.” The governance problems we attribute to AI today (bias, misinformation, copyright, and security) were already manifest in earlier stages of the digital ecosystem, long before LLMs. What AI changes is the scope of these problems: Generative systems could amplify biases within a vast training corpus and propagate them at scale, making familiar governance challenges more diffuse and wide-reaching.

AI adoption across users, regions, and functions

The focus on large models reflects the speed at which generative AI has entered public consciousness and workplace application. As shown in Figure 4, ChatGPT reached 100 million users in just a few months, eclipsing Instagram, Facebook, and even the iPhone in terms of adoption speed. This sharp curve of accessibility and uptake is without precedent in the history of technology diffusion. It marks a shift in baseline digital expectations and technology adoption from years to days.

The universal buzz and record speed of adoption mask differences in who adopts AI, where, and for what purposes. These remain highly diverse, with actual enterprise integration varying widely across geographies. A McKinsey & Company survey found that the proportion of companies reporting the use of AI in at least one business function jumped from 55% in 2023 to 78% in 2024. Yet this growth is uneven. North America (74%), Europe (73%), and Greater China (73%) lead the way, while the rest of the world lags behind. The usage gap between generative AI and broader AI capabilities has also narrowed significantly, from 22 percentage points in 2023 to just 7 points in 2024.

At first glance, this shrinking gap might suggest that broader AI use is catching up. But in practice, surveys show that the convergence comes from the opposite direction: Generative AI tools are rapidly absorbing functions once handled by specialized systems (e.g., facial recognition, medical imaging, or fraud detection) and extending into entirely new domains (e.g., code generation), becoming the primary interface through which businesses access a range of underlying capabilities and a resource they can use to build or distill new tools. For example, customer service chatbots increasingly rely on generative models not only for dialogue but also for classification, retrieval, and personalization—functions that once required separate models. This explains the development of domains and functions: Generative AI acts as a front-end layer, while specialized AI domains continue to provide the intelligence that enables end uses.

In the same survey, the most common enterprise use case is not advanced robotics or scientific modeling, but marketing strategy support, where AI helps generate ideas, draft content, and provide knowledge scaffolding. Next come knowledge management and personalization, tasks centered on content manipulation and retrieval rather than deep automation or reasoning. Recent labor market reports suggest that entry-level coding roles are among the first to feel pressure from generative AI, pointing to a divergence between enterprise use cases (which remain customer-facing) and workforce impacts (which are already visible in engineering job markets).

From multiplicity to strategy

A July 2025 roundtable hosted by the Forum for Cooperation on AI, a collaboration between Brookings and the Centre for European Policy Studies, convened global experts to explore the burgeoning concept of “national sovereignty” in AI, featuring national and regional iterations of the infrastructure, software, and processing that comprise the “AI stack.” One recurring theme was the increasing desire of nations to develop domestic or regional AI capacity that reflects local languages, values, and use cases, and that preserves national autonomy and security. An LLM-centric agenda can crowd out investment in these and other consequential domains (vision, robotics, time-series, scientific machine learning), creating policy blind spots and technical fragility if the hype cycle cools.

Sovereignty begins with clarity, so AI should be treated as plural. National AI plans will need to move beyond a narrow focus on LLMs or generic, one-size-fits-all approaches that center national compute capacity or chip manufacturing. Some governments already emphasize application-specific or small-model strategies, such as the EU in industrial automation or Canada in agriculture and mining, but sovereignty debates still tend to privilege generative AI. Strategies should be sector-specific and support a diverse range of model types, grounded in real-world use cases and patterns of adoption. In a modern industrial strategy, countries can use AI to reinforce their existing comparative advantages while also diversifying into adjacent industries and services. These strategies will also demand an understanding of cognitive needs, infrastructure realities, and responsible integration. The path to sovereignty is not just about controlling the stack, but about understanding which types of intelligence matter, for whom, and for what.

The future of AI is not a monolith; it is a mosaic.

Acknowledgements and disclosures

Meta, Google, and Amazon are general, unrestricted donors to the Brookings Institution. The findings, interpretations, and conclusions posted in this piece are solely those of the authors and are not influenced by any donation.
