Since October 2019, the Brookings Institution’s Foreign Policy program and Tsinghua University’s Center for International Security and Strategy (CISS) have convened the U.S.-China Track II Dialogue on Artificial Intelligence and National Security. This piece was authored by members of the U.S. and Chinese delegations who participate in this dialogue.

Melanie W. Sisson

Rapid advances in the sophistication and functionality of military platforms enabled by artificial intelligence (AI) make it necessary and urgent to minimize the likelihood that states will use them in ways that cause harm to civilians. Of particular concern is the possibility that AI-powered military capabilities might cause harm to whole societies and call into question the survival of the human species.

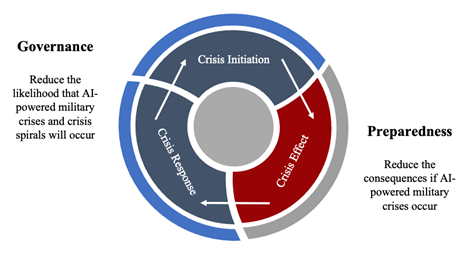

China and the United States are the leaders of AI development and diffusion, and so their governments have a special responsibility to seek to prevent uses of AI in the military domain from harming civilians. They can achieve this together by pursuing mechanisms believed to reduce the likelihood that AI-powered military crises will occur. This is the goal of governance regimes. The United States and China can also seek to protect civilians in an AI-powered military crisis by implementing mechanisms believed to reduce the consequences of those crises, if they do occur. This is the goal of preparedness regimes (Figure 1).

Governance regimes can seek to address technical and use-based causes of AI-powered military crises by requiring or prohibiting certain behaviors. Mechanisms can range from mutual statements to binding agreements and confidence-building measures that enhance communication and transparency. For such governance measures to be durable and effective, they must be based upon a mutually acceptable, analytical method for determining what to seek to govern.

Governance addresses initiators of and responses to AI-powered military crises

The U.S. Department of Defense and China’s People’s Liberation Army are actively developing military platforms that incorporate AI models. These AI models are computer programs, generally referred to as software, that get integrated into hardware: sensors, data management programs, and command and control systems. Governments can use AI-enabled military platforms to conduct intelligence, surveillance, and reconnaissance (ISR); to process, analyze, and visualize data; and to deliver conventional, cyber, and nuclear weapons.

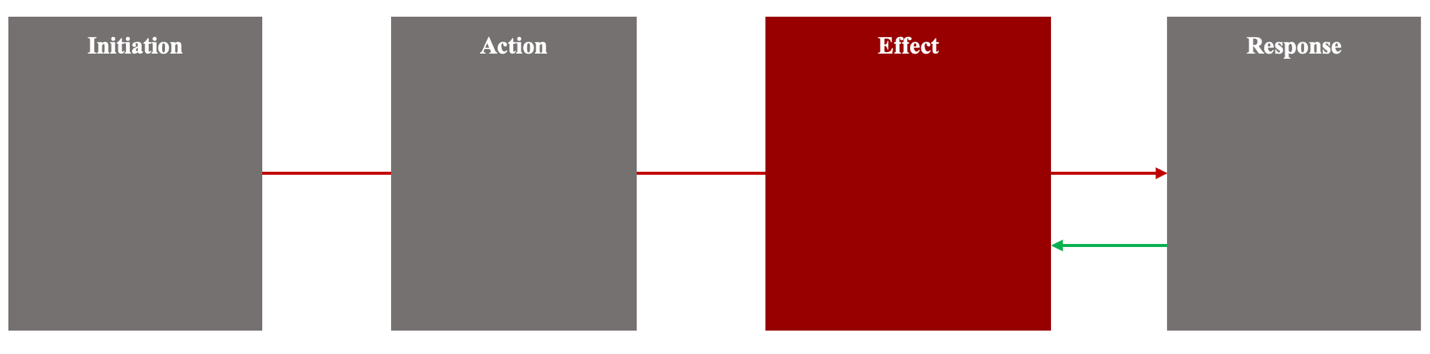

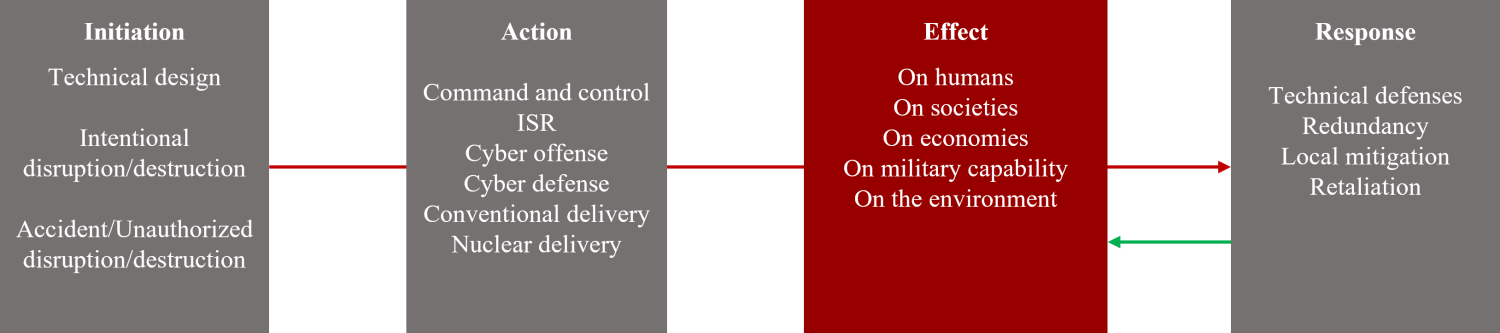

Each addition of an AI-enabled military platform into a national military’s arsenal increases the opportunity for an interaction to turn into an AI-powered military crisis. An AI-powered military crisis is a state-level interaction that occurs in four phases: initiation, action, effect, and response (Figure 2).

An AI-powered military crisis

The initiation phase contains the source of the AI-powered military crisis. In the military domain, the source can be the technical failure of an AI-enabled military application, or it can be a government decision to use an AI-powered military application in a knowingly or unknowingly provocative or dangerous way.

There are three general types of technical failures, with many specific variations, that might result in an AI-powered military crisis:

- Identifiable error in the AI model (innocent/normal error)

- Unidentifiable error in the AI model (black-box problem, malicious AI)

- Unintentional signal distortion (from the natural world, including space)

Similarly, there are four general types of government uses of AI-enabled military platforms, with many specific variations, that might initiate an AI-powered military crisis:

- Intentional disruption of function (corruption, distortion, denial, flooding)

- Intentional destruction of function (cyber, kinetic)

- Accidental/unauthorized disruption of function (corruption, distortion, denial, flooding)

- Accidental/unauthorized destruction of function (cyber, kinetic)
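The two lists above can be read as a small taxonomy of crisis initiators. The following is a minimal sketch of how that taxonomy might be encoded for shared record-keeping. The class and member names are hypothetical labels chosen for illustration and do not come from any agreed standard.

```python
from enum import Enum


class TechnicalFailure(Enum):
    """The three general types of technical failure listed above."""
    IDENTIFIABLE_MODEL_ERROR = "identifiable error in the AI model"
    UNIDENTIFIABLE_MODEL_ERROR = "unidentifiable error in the AI model"
    UNINTENTIONAL_SIGNAL_DISTORTION = "unintentional signal distortion"


class GovernmentUse(Enum):
    """The four general types of government use listed above."""
    INTENTIONAL_DISRUPTION = "intentional disruption of function"
    INTENTIONAL_DESTRUCTION = "intentional destruction of function"
    ACCIDENTAL_DISRUPTION = "accidental/unauthorized disruption of function"
    ACCIDENTAL_DESTRUCTION = "accidental/unauthorized destruction of function"


def is_intentional(initiator: Enum) -> bool:
    """Return True only for government uses that are deliberate.

    Technical failures are treated as unintentional by definition
    (an assumption of this sketch).
    """
    return initiator in (
        GovernmentUse.INTENTIONAL_DISRUPTION,
        GovernmentUse.INTENTIONAL_DESTRUCTION,
    )
```

Separate enumerations keep the technical and use-based causes distinct, which mirrors the way governance regimes are described as addressing each.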

States observe technical failures of AI models and government uses of AI models as actions undertaken by AI-enabled military platforms. These actions produce effects that can kill or injure civilians, damage or destroy the physical infrastructure that supports civil society and economic activity, damage or destroy national military capabilities, and damage or destroy the natural environment. These effects can prompt the harmed nation to seek to mitigate damage locally, or to use its own military to engage directly with the military it identifies as having attacked its territory and/or population. Governments in this situation might also choose to retaliate.

Direct military engagement and retaliatory action have the potential to escalate the initial crisis. The use of AI-enabled military platforms in those responses creates additional opportunity for an AI-powered military platform to fail, or to be used in ways that provoke an escalatory or out-of-control spiral (Figure 3).

The four phases of an AI-powered military crisis

How states choose to cooperate to try to minimize the likelihood of AI-powered crises and crisis spirals will depend upon whether they first can agree on a method for creating a hierarchy to prioritize which initiators and responses to govern. There are many alternative methods for defining such a hierarchy.

One common method for creating a hierarchy to address the possibility that a harmful event will occur is to consider risk. Risk is the product of the probability that an event will occur and the seriousness of its harmful effects (Probability x Seriousness). However, there is no widely accepted analytical means through which to estimate the probability that any one, or even that any broad category of, AI-powered military crisis will occur.

An alternative method is to consider the number, or volume, of foreseeable scenarios in which a technical failure or government use of each category of AI-enabled military platform (e.g., ISR, command and control, cyber activity) can result in an AI-powered military crisis. This method can produce relative weights, but not all scenarios are foreseeable, and so these weights will be incomplete approximations.

A third method is to consider the relative maturity of AI-enabled military platforms. In this method, the characteristic of interest is time: how near or how distant into the future is the AI capability’s readiness for integration into a military platform? This method relies upon predictions about the pace of product development, which is a dynamic process, and so predictions must be updated as new information becomes available.

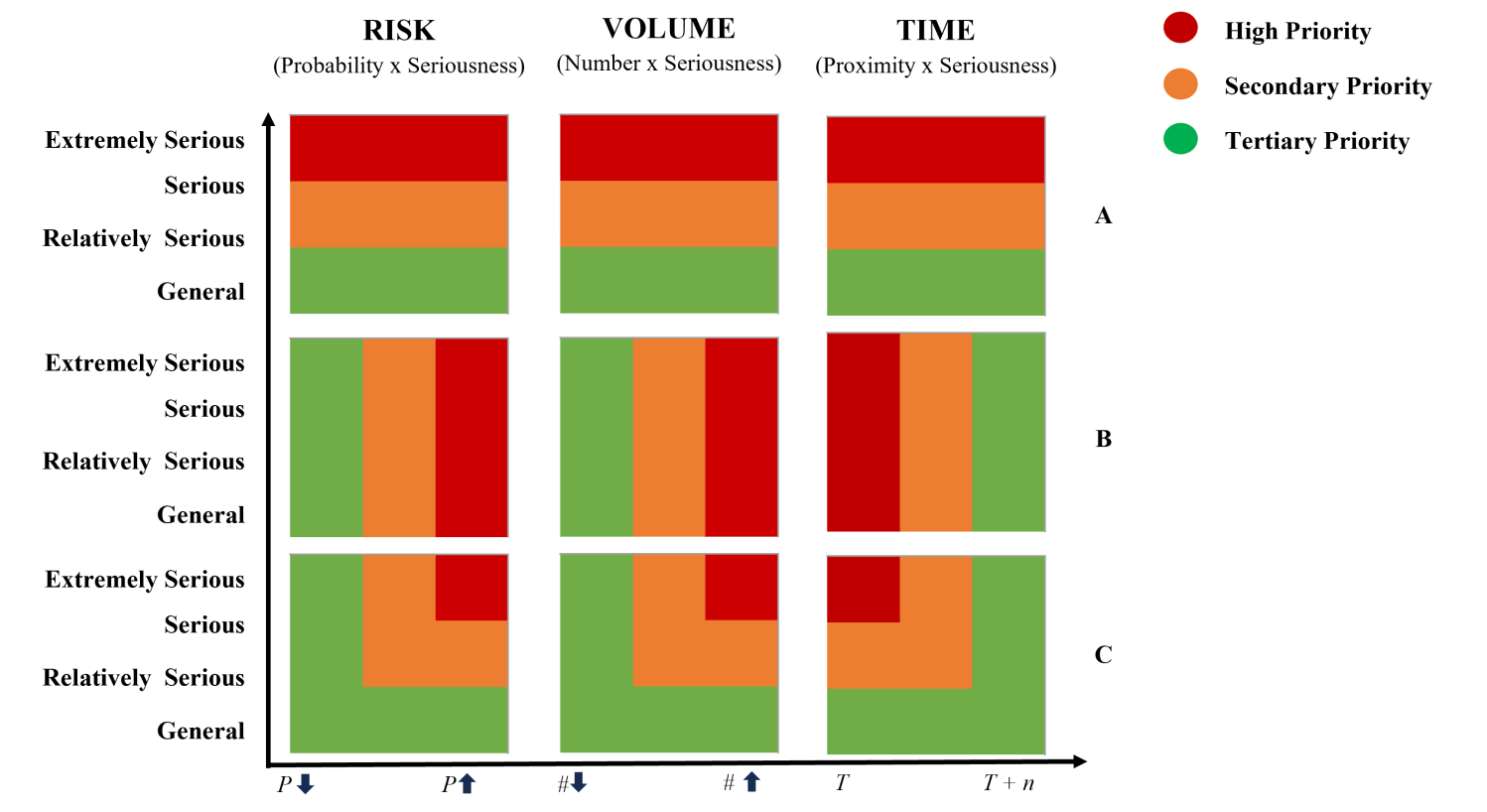

Each of these methods can accommodate different prioritization hierarchies. For all of them, states can choose to prioritize based on informed predictions of the severity of the effects they will have on civilians (Figure 4-A). Alternatively, they can choose to prioritize based on other criteria. They might decide, for example, to govern technical and use-based causes of AI-powered military crises on the basis of the probability that they will occur, the number of foreseeable scenarios, or the estimates that they will be ready for military use in the near term, regardless of the severity of the effects they might produce (Figure 4-B). They can also prioritize using a combination of features (Figure 4-C).

Alternative hierarchies and example prioritization schemas
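To make the three methods concrete, the sketch below ranks the same set of candidate initiators by risk (probability x seriousness), by volume (number of foreseeable scenarios), and by time (nearness to readiness). Everything in it is an assumption for illustration: the field names, the scoring scales, and the placeholder entries A, B, and C with their values are invented and carry no empirical meaning. As noted above, no accepted method exists for estimating these probabilities.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Initiator:
    name: str
    probability: float         # hypothetical estimate in [0, 1]
    seriousness: float         # hypothetical severity score for civilian harm
    scenario_count: int        # number of foreseeable crisis scenarios
    years_to_readiness: float  # predicted time until military integration


def risk(item: Initiator) -> float:
    """Risk as defined in the text: probability times seriousness."""
    return item.probability * item.seriousness


def rank_by_risk(items):
    return sorted(items, key=risk, reverse=True)


def rank_by_volume(items):
    return sorted(items, key=lambda i: i.scenario_count, reverse=True)


def rank_by_time(items):
    # Nearest-term capabilities come first.
    return sorted(items, key=lambda i: i.years_to_readiness)


# Placeholder entries with invented values, for illustration only.
EXAMPLES = [
    Initiator("A", probability=0.10, seriousness=9.0,
              scenario_count=4, years_to_readiness=5.0),
    Initiator("B", probability=0.30, seriousness=2.0,
              scenario_count=12, years_to_readiness=1.0),
    Initiator("C", probability=0.20, seriousness=6.0,
              scenario_count=7, years_to_readiness=0.5),
]

if __name__ == "__main__":
    for label, ranker in [("risk", rank_by_risk),
                          ("volume", rank_by_volume),
                          ("time", rank_by_time)]:
        print(label, [i.name for i in ranker(EXAMPLES)])
```

With these placeholder values the three methods produce three different orderings, which illustrates why agreement on the method has to come before agreement on what to govern.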

These methods for defining a hierarchy are imperfect, and there is no basis for having greater confidence that one will be more effective at minimizing the frequency and intensity of AI-powered military crises than any other. This is unavoidable given the current state of technology and of bilateral relations. Policymakers and stakeholders cannot, however, allow this uncertainty to impede dialogue or use it as an excuse for inaction.

Colin Kahl

Melanie Sisson’s useful framework invites the United States and China to think creatively about mechanisms to mitigate emergent risks from AI-enabled military systems, including the prospect of crisis instability, harm to civilians, and broader threats to humanity.

As Sisson’s paper observes, “How states choose to cooperate to try to minimize the likelihood of AI-powered crises and crisis spirals will depend upon whether they first can agree on a method for creating a hierarchy to prioritize which initiators and responses to govern.” The paper suggests different ways to weight shared concerns based on the probability and seriousness of occurrence (risk), the anticipated frequency of occurrence (volume), or the proximity of occurrence (time). All are vital issues for the United States and China to discuss.

But even if agreement on what to prioritize proves possible, identifying technically and politically feasible mechanisms for reducing risks from AI-enabled military systems faces inherent challenges. Research on past attempts to forge arms control regimes covering dual-use technologies suggests that cooperation is particularly difficult when military and civilian uses are difficult to distinguish from one another. In the case of AI, there is a double-distinguishability problem: not only is AI software with potential military applications (such as offensive cyber tools) likely to reside in both military and civilian networks, but even in the military domain, observably distinguishing between military platforms such as drones or satellites that integrate AI software and those that do not is extremely difficult. The eventual integration of AI across almost all military and civilian systems further complicates matters because the degree of transparency required to build confidence or ensure compliance with agreements may produce real or perceived security vulnerabilities and espionage concerns, discouraging one or both parties from entering cooperative arrangements.

Despite these difficulties, we should not succumb to fatalism. Hard, narrow paths do not imply hopeless, closed ones. Given the stakes involved and the rapid integration of AI into military and national security systems, Washington and Beijing should seek to identify possible “no-regrets” risk mitigation and preparedness measures. While verifiable agreements on AI deployment in military systems may be difficult to devise and enforce, the two AI superpowers should still seek to develop a common baseline of shared AI risks. They should also explore steps to make certain uses of AI in military platforms more transparent, and they should share information and best practices on AI safety in the military realm. Together, these measures could reduce the prospect for misperceptions, miscalculations, and inadvertent escalation, while building the foundation for a broader AI dialogue aimed at strengthening strategic stability.

Some possible avenues to explore include:

- Developing a bilateral failure-mode and incident taxonomy, categorized by risk, volume, and time.

- Developing mutual definitions of dangerous AI-enabled military actions, including what constitutes an “intentional disruption of function,” “intentional destruction of function,” “accidental/unauthorized disruption of function,” and “accidental/unauthorized destruction of function.”

- Exchanging testing, evaluation, verification, and validation (TEVV) principles and documenting processes (not code) for AI integrated into ISR, command and control, and weapons platforms.

- Agreeing to mutual notification of AI‑enabled military exercises (such as those involving drone swarms and other autonomous systems) during peacetime, with post‑exercise readouts on safety lessons learned and best practices.

- Developing standardized communication procedures when AI-enabled military platforms cause unintended effects.

- Taking mutual steps to ensure the integrity of official U.S.-China political and military-to-military channels (including crisis communications) against synthetic media, including commitments that neither side will simulate or manipulate communications with AI, and agreed-upon text/voice verification protocols.

- Making mutual pledges to ensure human control in peacetime and routine encounters between U.S. and People’s Republic of China military forces (e.g., air intercepts, maritime shadowing), requiring affirmative human authorization for any weapons employment, including by AI-enabled platforms.

- Supplementing the 2024 commitment between U.S. President Joe Biden and Chinese President Xi Jinping that nuclear weapons use decisions should be controlled by humans, not AI, with a mutual pledge not to use AI to interfere with each other’s nuclear command, control, and communications.

By forging common understandings, establishing norms, and building channels of communication, steps such as these could help mitigate the prospects of an AI-induced military crisis and lower the likelihood of escalation if incidents occur.

In parallel, the United States and China should explore ways to cooperate on various catastrophic “loss of control” scenarios emerging from frontier AI models. Both superpowers share an interest in preventing malign nonstate actors from utilizing advanced AI models to develop devastating cyber, biological, or other weapons of mass destruction. And as AI approaches the threshold of artificial general intelligence or artificial superintelligence, both countries (along with the rest of humanity) share an interest in ensuring these powerful systems remain aligned with their interests and values and remain under human control.

Given the intensity of U.S.-China military and AI competition, cooperation on these issues may be difficult to imagine. But it is worth recalling that, even at the height of the Cold War, the United States and the Soviet Union were able to reduce the prospect of Armageddon by cooperating on the Nuclear Non-Proliferation Treaty and limiting their own nuclear arsenals and other destabilizing strategic systems via arms control. The United States and China owe each other, and the world, nothing less when it comes to reducing the security risks arising from AI.

Sun Chenghao

Sisson’s article provides a timely and significant contribution to the debate on how to reduce the risks associated with AI in military applications. Its four-phase model of crisis (Initiation, Action, Effect, and Response) offers a clear and systematic way to conceptualize how technical malfunctions and human decisions may escalate into large-scale crises. Equally important is its proposal to establish hierarchies of risks through analytical methods such as probability-seriousness calculations, scenario counting, and assessments of technological maturity. Building on this contribution, the next stage of research and policy development should focus on translating these analytical insights into multilevel governance mechanisms that link technical safeguards with political and institutional arrangements. I believe future efforts in AI crisis governance should go further in three directions: refining the technical mechanisms at the micro level, more closely scrutinizing risk hierarchy methods, and then situating them within a political-institutional context at the macro level.

On the technical side, the proposed frameworks might require additional specification to distinguish more precisely between comprehensible and incomprehensible errors, and to rethink the role of distinct human-machine interaction patterns in different systems (e.g., nuclear command and control versus ISR). Beyond identifying comprehensible errors such as algorithmic flaws, data imperfections, and sensor disconnections, we could also introduce practical ways to record different types of errors. One approach would be to establish a detailed error-logging system that categorizes failures based on their nature and impact. Incomprehensible errors, such as black-box opacity and uncontrolled self-learning, could be tracked through regular assessment reports. These reports would log instances where an AI system’s decisions diverge significantly from expected outcomes, such as an autonomous drone misidentifying a target or a missile defense system failing to recognize an incoming threat. By systematically recording such mismatches, we can begin to differentiate between AI failures that are caused by model limitations and those that emerge from unexpected external factors, providing greater insight into their causes and potential solutions.
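A minimal sketch of what one entry in such an error-logging system might look like follows. The record fields, category names, and the cause assigned to each sample entry are hypothetical and serve only to show how mismatches between expected and observed behavior could be categorized and counted. They do not describe any existing reporting scheme.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class ErrorNature(Enum):
    COMPREHENSIBLE = "comprehensible"      # e.g., algorithmic flaw, bad data
    INCOMPREHENSIBLE = "incomprehensible"  # e.g., black-box opacity


class SuspectedCause(Enum):
    MODEL_LIMITATION = "model limitation"
    EXTERNAL_FACTOR = "unexpected external factor"
    UNDETERMINED = "undetermined"


@dataclass(frozen=True)
class ErrorRecord:
    system: str            # platform or system type involved
    nature: ErrorNature
    cause: SuspectedCause
    expected: str          # the outcome operators expected
    observed: str          # what the system actually did
    impact: str            # free-text description of consequences


def summarize(records):
    """Count records by (nature, suspected cause) for a periodic report."""
    return Counter((r.nature, r.cause) for r in records)


# Sample entries based on the two examples in the text; the assigned
# natures, causes, and impacts are invented for illustration.
SAMPLE_LOG = [
    ErrorRecord("autonomous drone", ErrorNature.INCOMPREHENSIBLE,
                SuspectedCause.MODEL_LIMITATION,
                expected="target correctly identified",
                observed="target misidentified",
                impact="none (exercise)"),
    ErrorRecord("missile defense system", ErrorNature.COMPREHENSIBLE,
                SuspectedCause.EXTERNAL_FACTOR,
                expected="incoming threat recognized",
                observed="incoming threat not recognized",
                impact="none (test)"),
]

if __name__ == "__main__":
    for (nature, cause), count in summarize(SAMPLE_LOG).items():
        print(nature.value, "/", cause.value, ":", count)
```

Keeping the nature of the error separate from its suspected cause lets a report show, for instance, how many opaque failures remain undetermined after review.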

Furthermore, making progress requires considering the role of human-machine interaction in preventing crisis escalation. The distinctions among human-in-the-loop (HITL), human-on-the-loop (HOTL), and human-out-of-the-loop (HOOTL) models are not merely technical but have direct implications for accountability and escalation control. Responsible governance must recognize that these models are not interchangeable and should be domain-specific. For example, nuclear command and control, as well as target identification in lethal operations, must adhere to strict HITL or HOTL architectures to ensure human accountability and compliance with international humanitarian law. By contrast, certain ISR or logistical support systems may gradually evolve toward responsibly managed HOOTL configurations, where autonomous decisionmaking can improve speed and operational efficiency without directly triggering lethal consequences. In these domains, the emphasis should be on ensuring reliability, verifiability, and post-action traceability, rather than maintaining constant human control. Yet, asserting the existence of “human control” is not sufficient; the credibility and authenticity of that control must be demonstrable. In many systems nominally labeled as HITL or HOTL, the human role is often reduced to formal approval or symbolic oversight, offering little real opportunity for intervention.

On risk hierarchy methods, a hybrid approach also merits consideration. The probability-seriousness formula is attractive in principle, but probability remains incalculable given the absence of sufficient data on real-world AI failures in military contexts. A more feasible approach may be scenario-based simulations, but these too are vulnerable to incompleteness, since not all plausible futures can be foreseen. The maturity-based method is perhaps the most operational, since it links governance to technologies closest to deployment, but it risks ignoring “immature” technologies that may suddenly leapfrog into use. A hybrid approach that prioritizes near-term mature applications while maintaining adaptive monitoring of emerging technologies may offer a more balanced path forward.

At the Action stage, governance mechanisms should acknowledge that distinguishing between conventional and nuclear delivery systems is often technically infeasible. Many delivery platforms, such as dual-capable missiles, bombers, and shared satellite communication channels, serve both nuclear and conventional missions. Consequently, efforts to impose technical separation may be impractical or even counterproductive. Instead, the focus should shift toward establishing robust assessment and crisis-management mechanisms capable of evaluating the nature and intent of system activation in real time. For instance, enhanced verification protocols, cross-domain data fusion, and joint situational awareness frameworks could help decisionmakers interpret whether a system failure or activation signal carries nuclear intentions. In parallel, crisis communication channels should incorporate technical verification mechanisms to distinguish between genuine system failures and deliberate hostile acts—a critical distinction in avoiding escalation.

On promoting state-level cooperation on AI-powered crises, the risk-based approach is a necessary starting point for building consensus in AI crisis governance. Framing cooperation around shared perceptions of risk can help align states with diverse strategic priorities. To make this method more operational, risks could be classified by initiating actors, for instance, governmental decisions, nonstate interventions, or inherent technical flaws. Meanwhile, risks can also be ranked by urgency and potential harm. Yet, as current rating systems largely rely on subjective expert judgment, there is an urgent need to develop more standardized, data-driven risk evaluation frameworks.

The scenario-based method is equally valuable because it directs attention to context-specific risks. For example, unintentional escalation along land borders or in contested maritime zones could serve as focal cases for preventive governance and joint exercises. Finally, maturity-based hierarchies also add an important dimension. The relative readiness and reliability of AI-enabled systems, such as varying levels of automation in autonomous weapons, may influence crisis thresholds differently. Integrating maturity assessments into risk frameworks would allow states to anticipate where AI deployment is most destabilizing and where confidence-building measures are most urgently needed.

Yet, beyond these micro-level refinements, macro-level conditions will ultimately determine whether governance mechanisms succeed. Even the most sophisticated risk hierarchies or technical taxonomies will not ensure stability in the absence of political trust, strategic stability, and legal norms. The triggers of crises rarely stem solely from unilateral actions or machine failures; more often, they arise from both intended and unintended behaviors among multiple actors, such as misperception and misreading of the other side’s intentions, or deliberate, malicious actions by other actors. Thus, governance arrangements cannot rest on technical rationality alone but must be embedded within a broader political and institutional framework.

Three areas deserve particular emphasis in this regard. First, red-line consensus: the United States, China, and other major technological powers should agree on categories of AI military applications that are strictly off limits. These “red lines” could serve as the functional equivalent of taboo norms, clarifying thresholds that must not be crossed and reducing the risk of uncontrolled escalation in controversial areas. Second, arms race restraint: the growing signs of an “intelligentized arms race” risk pushing states into a spiral of ever more complex and destabilizing AI deployments. Without deliberate measures to constrain this dynamic, unilateral pursuit of advantage will undermine stability. Practical steps might include advance notification of deployments, post-operation reporting, and joint crisis simulations designed to increase transparency and reduce suspicion. Third, political trust and international legal frameworks: technical agreements will remain fragile unless supported by confidence-building measures and, eventually, international legal instruments. The United Nations and other multilateral platforms provide useful venues for developing baseline principles, while Track 1.5 and Track 2 dialogues can facilitate the incremental accumulation of expertise and consensus. Over time, such efforts could lay the groundwork for binding arrangements that lend durability and legitimacy to governance.

China and the United States bear particular responsibility in this regard. As the leading developers and deployers of AI military systems, their interactions will set precedents for the rest of the world. By converging on technical standards for risk classification, transparency, and human-machine interaction protocols, while simultaneously building political commitments to restraint, they can reduce both the probability and the consequences of AI-driven crises. Conversely, failure to do so risks fueling an unconstrained arms race with destabilizing global effects.

Xiao Qian

Sisson has made a commendable effort to develop a hierarchy for prioritizing which initiators and responses to govern, one on which the United States and China may be able to agree as a first step toward AI governance in the military domain. Her work is both admirable and deserving of respect.

I share her view that China and the United States, as the two leading countries in AI development and diffusion, have a special responsibility to govern AI in the military domain and to prevent it from harming civilians. However, I am also acutely aware of the significant challenges that hinder genuine dialogue and cooperation between the two nations in the current climate of strategic rivalry. To move forward, both sides must break free from the existing security dilemma.

The concept of the security dilemma was proposed by American political scientist John Herz in 1950 and describes a situation in which states, in pursuing their own security, inadvertently undermine the security of others. The absence of mutual trust often leads to arms races, escalating tensions, and potential conflict. Its root cause lies in uncertainty about others’ intentions and the pervasive sense of insecurity inherent in an anarchic international system.

According to Herz, the security dilemma involves several defining elements:

- An anarchic international environment lacking authoritative institutions to regulate state behavior or ensure protection.

- Mutual suspicion and fear arising from uncertainty about other actors’ intentions.

- Competitive behaviors and security measures adopted in pursuit of self-protection.

- A resulting decrease in the overall security of all actors involved.

The ongoing competition between China and the United States in the field of artificial intelligence exhibits many characteristics of such a security dilemma.

First, despite the rapid advancement of artificial intelligence, there is still no unified or binding international treaty governing AI technologies. Global AI governance remains fragmented and regionally driven, with no universally authoritative institution in place. Major powers differ widely in their core concepts, value orientations, and regulatory approaches, leaving the international system in a state of governance anarchy.

Second, mutual suspicion, fear, and uncertainty about intentions have long shaped the trajectory of U.S.-China relations. Since the early 21st century, China’s rapid economic and military rise has sparked intense debate within the United States on how to respond. Beijing’s launch of “Made in China 2025,” its active promotion of the Belt and Road Initiative, and its increased investment in high-tech industries prompted U.S. policymakers to view China with growing caution, fearing a challenge to U.S. global leadership.

In the technological domain, Washington’s AI Action Plan explicitly emphasizes “winning the AI race” against China. To this end, the United States has broadened its definition of national security, strengthened foreign investment screening, tightened export controls on critical technologies, restricted technology transfers to China, and placed several Chinese firms on the Entity List—all in the name of safeguarding national security.

From China’s perspective, these actions are viewed as efforts to contain its AI development, preserve U.S. hegemony, and deny China its legitimate right to technological progress. Within the broader context of strategic rivalry and geopolitical competition, such deepening mistrust and uncertainty have severely narrowed the space for dialogue and cooperation on AI governance between the two powers.

Third, the world has entered an era of profound uncertainty and systemic risk. Regional conflicts and geopolitical tensions have intensified—the Ukraine crisis continues to undermine European security, while the Israel-Palestine conflict has further destabilized the Middle East. Simultaneously, emerging technologies such as AI, 5G, and quantum computing are reshaping global power structures. Non-traditional security threats—including climate change, terrorism, cybersecurity, and public health challenges—are exerting increasing influence on international politics.

In this context, many countries are reassessing the economic and security risks associated with globalization and are turning toward protectionist or security-oriented policies, thereby weakening global supply chain resilience and eroding transnational cooperation. The growing tendency of states to prioritize security competition has become a defining feature of global politics.

Fourth, the strategic rivalry between China and the United States—exemplified by Washington’s policy of constructing “small yards with high fences” and forming exclusive technology alliances with like-minded partners—has not enhanced global security. Both the Reagan National Defense Survey and the Munich Security Report 2025 reveal a widespread sense of insecurity across nations. Meanwhile, the global technological ecosystem’s fragmentation has further complicated international cooperation, heightening the risks of intensified AI competition and potential loss of control over technological development.

Sisson has, in fact, touched upon the security dilemma in her article, when she observed that cooperation depends on whether states can first agree on a “method for creating a hierarchy to prioritize which initiators and responses to govern,” and that “there is no widely accepted analytical means through which to estimate the probability that any one, or even that any broad category of, AI-powered military crisis will occur.” Her three proposed methods for defining the hierarchy offer some hints on how to move beyond the security dilemma.

It is fair to say that any confidence-building measures between China and the United States on AI governance require efforts to move beyond the security dilemma, and in the current context, rebuilding basic mutual trust is the only viable path forward. Both sides must take concrete measures and make incremental adjustments.

Here are some of the suggested steps:

Reframing perceptions and managing competition.

Both countries need to reassess how they perceive and position each other in the AI domain. While a certain degree of strategic competition may be unavoidable, it is essential to avoid framing it as a zero-sum or existential struggle. Managed competition—grounded in transparency and predictability—can coexist with selective cooperation. The technology we are living with is characterized by a high degree of unpredictability and an unprecedented pace of development—traits unparalleled in human history. China and the United States, as two leading countries in AI development, need to find a way to communicate on such important issues as how to prevent existential risks and how to maintain human control. Initiating dialogue through a rigorously academic and technically grounded discussion—such as constructing a hierarchy of risks, as proposed by Sisson—offers a constructive starting point. Such an approach not only facilitates mutual understanding but also contributes to reducing misperceptions and misunderstandings between the two sides.

Establishing clear red lines for national security.

Defining and mutually recognizing security “red lines” is critical. Both sides should clearly delineate sensitive areas where cooperation is infeasible, while avoiding the tendency to continuously expand these boundaries. On this basis, they can pursue exchanges and cooperation in low-sensitivity areas, such as AI safety principles, best practices, and capacity-building efforts. Such engagement would enhance mutual understanding and foster interpretability and predictability in each other’s actions. At the same time, it is essential for both sides to keep official channels of communication open, even in times of crisis. The understanding reached between Xi and Biden, that humans must always maintain control over the decision to use nuclear weapons, sets a valuable precedent. It demonstrates that national security concerns should not preclude the two countries from achieving agreements on issues of existential significance that bear directly on the future of humanity.

Strengthening collaboration in AI safety.

Beyond the imperative of building trust between nations, we must also confront the challenge of cultivating trust in technology itself. The International AI Safety Report, led by Professor Yoshua Bengio, classifies the risks associated with general-purpose AI into three broad categories: malicious use risks, risks arising from malfunctions, and systemic risks. Addressing these challenges demands collective, coordinated, and truly global efforts. Without effective guardrails or commonly accepted standards, humanity will struggle to feel secure in its reliance on advanced technologies. Encouragingly, a growing community of scientists and experts, drawn from both China and the United States and from both industry and academia, has already devoted itself to this shared endeavor, offering promising avenues for constructive collaboration across borders.

Advancing global AI capacity and development goals.

In 2024, China and the United States each cosponsored resolutions on AI at the United Nations General Assembly, setting a positive precedent for multilateral engagement. Building on this momentum, the two sides should continue to promote global AI governance under the U.N. framework and explore the creation of an authoritative international mechanism to regulate AI-related behavior and safeguard all actors. As the two leading powers in AI technology and innovation, the United States and China share a responsibility to contribute to AI capacity-building in developing countries, help narrow the global digital divide, and advance the United Nations Sustainable Development Goals. Joint initiatives in these areas would not only strengthen global governance but also demonstrate that responsible AI development can serve common human interests.

Closing comment

Melanie W. Sisson

The original intent of this document was to propose a method for thinking analytically about the risks that integrating AI into military assets and operations can pose to civilians. It was motivated by the observation that much of the discourse on this topic is either supremely technical, and therefore supremely narrow, or occurs at a high level of abstraction where it becomes difficult to discern any general principles. Both avenues ultimately impede meaningful action—the former because it is impenetrable to decisionmakers and unmanageable at the level of policy, and the latter because it is overly inductive and often circular.

The model described here is therefore deductive. It seeks to use the scaffolding of simplification to structure dialogue in a way that discourages descriptions of events (actual and imagined) and instead encourages intellectual engagement with the concepts, and values, that will be central to the decisions leaders will, or will not, choose to make about whether and how to govern military applications of AI.

Sun Chenghao, Colin Kahl, and Xiao Qian are therefore correct to observe that the model, as Sun aptly puts it, does not delve into “the micro level” or fully situate analysis “within a political-institutional context at the macro level.” The goal for this model, however, is not to prove or to predict. It is to be useful in a world that will continue to be restless and complex: a world in which technology will keep advancing, and in which the politics between the United States and China might not always be as rigid as they appear to be today. The goal, in other words, is for the model to help well-intentioned people from both countries lay the groundwork for a path forward, when the time is right.


Acknowledgements and disclosures

SUN Chenghao would like to acknowledge ZHANG Xueyu and BAI Xuhan, who contributed to this article by providing research support and editorial suggestions.

The Brookings Institution is committed to quality, independence, and impact.

We are supported by a diverse array of funders. In line with our values and policies, each Brookings publication represents the sole views of its author(s).