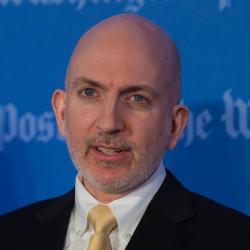

Cade Metz’s article for Wired titled “Hackers Don’t Have to Be Human Anymore. This Bot Battle Proves It” described a curious event that took place in Las Vegas on August 4, 2016. The first Defense Advanced Research Projects Agency (DARPA) Cyber Grand Challenge witnessed seven teams compete for cyber security supremacy. Unlike traditional hacking contests, however, the participants engaging in digital combat were autonomous programs running on supercomputers, and the targets were the other digital participants. In other words, this was not a human versus human contest, but a “bot” (short for “robot”) versus bot competition. According to DARPA, “[f]or nearly twelve hours teams were scored based on how capably their systems protected hosts, scanned the network for vulnerabilities and maintained the correct function of software.” This was clearly an innovative and creative event, which was ultimately won by “Mayhem,” software created by a startup called ForAllSecure. Just how relevant is it to the challenges facing organizations, governments, and individuals in cyberspace?

Opportunity and a threat

From the perspective of attackers, the Challenge is an opportunity and a threat. As an opportunity, the contest demonstrates that software can be programmed to identify and exploit previously unknown vulnerabilities in previously unseen code bases. This is a force multiplier for offensive security teams, although it is not an unprecedented development. For years offensive security teams have relied upon a variety of automated tools to hunt for code flaws. Code from the Challenge may accelerate the bug hunting process.

As a threat, some might wonder if automated code could put some offensive security personnel out of business. Could a high-priced “red team,” paid to simulate potential adversaries, be replaced by a cheaper bot? In the short-to-medium term, this seems unlikely. There is much more to security research and professional consulting engagements than grinding away to identify and exploit vulnerabilities. A human touch will remain a key component, if only for liability reasons, for the foreseeable future.

Problems are seldom technological

From the perspective of defenders, the Challenge is less helpful. At first glance it would seem that the bots’ capability to identify and patch vulnerabilities would be a benefit to defensive security teams. While rapid patching is a key element of proper defense, the problem facing enterprise security staffs is seldom technological. The problems are complex and sometimes interrelated. First, software vendors may be slow to provide patches, and they seldom allow customers to alter or even access code, as might be the case with open source alternatives. Therefore, a security bot that finds a vulnerability will not have the ability to fix it until the vendor codes and publishes a patch. Discovering a vulnerability may prompt the security team to implement their own work-arounds, such as limiting access via network-based controls, but that process can be cumbersome or ultimately ineffective.

Second, security teams are creatures of the business or organization hosting them. They are not free to act on their security programs independently. On occasion they may be able to rush the deployment of a security countermeasure or software patch when the underlying vulnerability presents sufficient risk or has received extreme media attention. More often, the security team must wait to apply patches or configuration changes, and weekly or even monthly change windows are standard.

Third, security teams are usually not allowed to have free rein on their business or organization networks. They must gingerly step around potential landmines, where disruption to a fragile but important server or application could cause millions in lost revenue. To this day there remain networks where it is forbidden to run standard network mapping or scanning tools for fear they will “knock over” that server in the closet that does something important, though no one is quite sure what. Unfortunately, the world beyond corporate enterprise computing is even worse, with so-called Internet of Things and Industrial Control Systems networks running code that dates to the 1990s and earlier.

A hodge-podge of equipment and code

The DARPA Cyber Grand Challenge, then, could be viewed as the technology demonstration project that it was, and not necessarily a model for future security paradigms. The biggest problems in real-life networks are based upon the fact that they are a hodge-podge of legacy equipment running an assortment of mix-and-match code, administered by staff struggling to perform basic functions like inventory management and so-called “cyber hygiene.” The high-tech world of “bot-on-bot” combat will remain largely separate, although elements of that world have already been present in cyberspace for decades. Anyone remembering the outbreaks of so-called network “worms” in the 1990s and early 2000s may wonder about the fuss over the DARPA Cyber Grand Challenge. However, it is worth congratulating the seven participating teams for pushing the limits of automation to show how creative programming can interact in offensive and defensive ways in artificial environments.

Commentary

Cyber Grand Challenge contrasts today’s cybersecurity risks

September 14, 2016