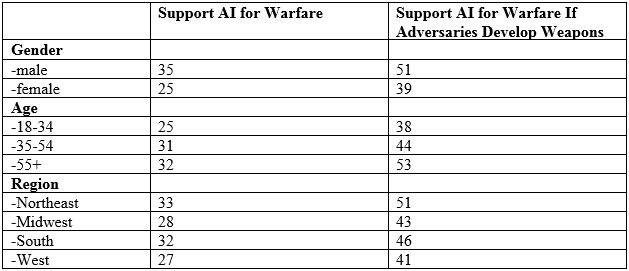

Thirty percent of adult internet users believe AI technologies should be developed for warfare, 39 percent do not, and 32 percent are unsure, according to a survey undertaken by researchers at the Brookings Institution. However, if adversaries already are developing such weapons, 45 percent believe the United States should do so, 25 percent do not, and 30 percent don’t know.

There are substantial differences in attitudes by gender, age, and geography. Men (51 percent) are much more likely than women (39 percent) to support AI for warfare if adversaries develop these kinds of weapons. The same is true for senior citizens (53 percent) compared to those aged 18 to 34. Those living in the Northeast (51 percent) were the most likely to support these weapons, compared to people living in the West (41 percent).

The Brookings survey was an online U.S. national poll undertaken with 2,000 adult internet users between August 19 and 21, 2018. It was overseen by Darrell M. West, vice president of Governance Studies and director of the Center for Technology Innovation at the Brookings Institution and the author of The Future of Work: Robots, AI, and Automation. Responses were weighted using gender, age, and region to match the demographics of the national internet population as estimated by the U.S. Census Bureau’s Current Population Survey.

AI should be based on human values

Sixty-two percent believe that artificial intelligence should be guided by human values, while 21 percent do not and 17 percent are unsure. Men (64 percent) were slightly more likely than women (60 percent) to feel this way. There are also some age differences: 64 percent of those aged 35 to 54 hold this view, compared to 59 percent of those over the age of 65.

Who should decide on AI deployment?

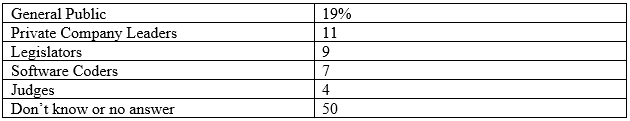

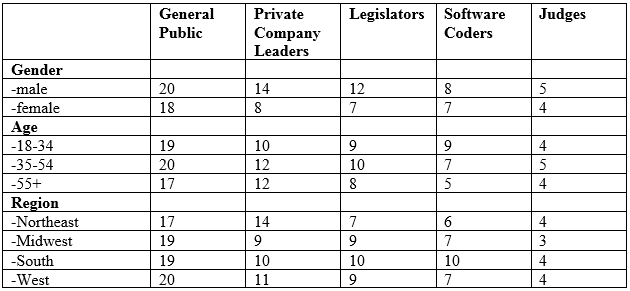

The survey asked who should decide on how AI systems are designed and deployed. Nineteen percent name the general public, followed by 11 percent who say private company leaders, 9 percent who believe legislators should decide, 7 percent who feel software coders should make the decisions, and 4 percent who cite judges. Half of those questioned are unsure who should make these decisions.

But there are some small differences by gender and geography. For example, men (14 percent) are more likely than women (8 percent) to want private company leaders to make AI decisions. In addition, those from the Northeast (14 percent) are more likely than those from the Midwest (9 percent) to say they prefer private company leaders.

Ethical safeguards

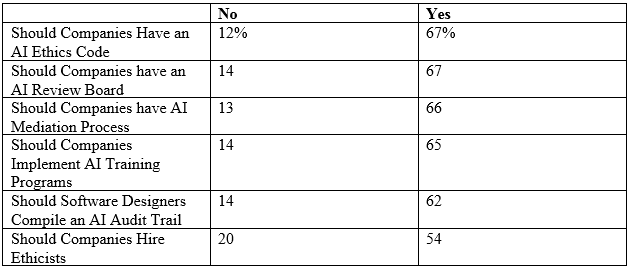

We asked people about a variety of ethical safeguards. A majority favors each proposal. Ranked from most to least popular, they are: having an AI ethics code (67 percent), having an AI review board (67 percent), having an AI mediation process in case of harm (66 percent), AI training programs (65 percent), having AI audit trails (62 percent), and hiring ethicists (54 percent).
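The support figures above appear to be the sum of the "possibly yes" and "definitely yes" shares reported for questions 2 through 7 in the Survey Questions and Answers section. The following Python sketch reproduces that arithmetic under that assumption. The short labels and the `support` helper are illustrative names, not part of the survey.

```python
# Answer shares (percent) for questions 2-7, copied from the
# "Survey Questions and Answers" section of this report.
# The short labels used as keys are illustrative, not survey wording.
responses = {
    "hiring ethicists": {
        "definitely no": 12, "possibly no": 8,
        "possibly yes": 20, "definitely yes": 34, "don't know": 26,
    },
    "AI ethics code": {
        "definitely no": 7, "possibly no": 5,
        "possibly yes": 22, "definitely yes": 45, "don't know": 21,
    },
    "AI review board": {
        "definitely no": 8, "possibly no": 6,
        "possibly yes": 22, "definitely yes": 45, "don't know": 19,
    },
    "AI audit trails": {
        "definitely no": 8, "possibly no": 6,
        "possibly yes": 20, "definitely yes": 42, "don't know": 24,
    },
    "AI training programs": {
        "definitely no": 8, "possibly no": 6,
        "possibly yes": 23, "definitely yes": 42, "don't know": 21,
    },
    "AI mediation process": {
        "definitely no": 8, "possibly no": 5,
        "possibly yes": 19, "definitely yes": 47, "don't know": 21,
    },
}


def support(answers):
    """Assumed definition of support: 'possibly yes' plus 'definitely yes'."""
    return answers["possibly yes"] + answers["definitely yes"]


# Rank the safeguards from most to least supported.
ranked = sorted(responses, key=lambda name: support(responses[name]), reverse=True)

for name in ranked:
    print(f"{name}: {support(responses[name])}% in favor")
```

Each set of shares sums to 100, so the same dictionary can also be used to recompute the opposed and undecided totals.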

Survey Questions and Answers

1. How important is it that artificial intelligence be guided by human values?

- 15% very unimportant

- 6% somewhat unimportant

- 15% somewhat important

- 47% very important

- 17% don’t know or no answer

2. Should companies be required to hire ethicists to advise them on major decisions involving computer software?

- 12% definitely no

- 8% possibly no

- 20% possibly yes

- 34% definitely yes

- 26% don’t know or no answer

3. Should companies have a code of AI ethics that lays out how various ethical issues will be handled?

- 7% definitely no

- 5% possibly no

- 22% possibly yes

- 45% definitely yes

- 21% don’t know or no answer

4. Should companies have an AI review board that regularly addresses corporate ethical decisions?

- 8% definitely no

- 6% possibly no

- 22% possibly yes

- 45% definitely yes

- 19% don’t know or no answer

5. Should software designers compile an AI audit trail that shows how various coding decisions are made?

- 8% definitely no

- 6% possibly no

- 20% possibly yes

- 42% definitely yes

- 24% don’t know or no answer

6. Should companies implement AI training programs so staff incorporates ethical considerations in their daily work?

- 8% definitely no

- 6% possibly no

- 23% possibly yes

- 42% definitely yes

- 21% don’t know or no answer

7. Should companies have a means for mediation when AI solutions inflict harm or damages on people?

- 8% definitely no

- 5% possibly no

- 19% possibly yes

- 47% definitely yes

- 21% don’t know or no answer

8. Who should decide on how AI systems are designed and deployed? (responses rotated)

- 19% the general public

- 11% private company leaders

- 9% legislators

- 7% software coders

- 4% judges

- 50% don’t know or no answer

9. Should AI technologies be developed for warfare?

- 27% definitely no

- 11% possibly no

- 12% possibly yes

- 18% definitely yes

- 32% don’t know or no answer

10. Should AI technologies be developed for warfare if we know adversaries already are developing them?

- 16% definitely no

- 9% possibly no

- 16% possibly yes

- 29% definitely yes

- 30% don’t know or no answer

Gender:

- 55.9% male, 44.1% female in sample

- 47.9% male, 52.1% female in target population

Age:

- 6.9% 18-24, 15.3% 25-34, 19.0% 35-44, 20.0% 45-54, 22.2% 55-64, 16.6% 65+ in sample

- 13.9% 18-24, 19.4% 25-34, 17.8% 35-44, 18.3% 45-54, 16.4% 55-64, 14.2% 65+ in target population

Region:

- 13.8% Northeast, 25.7% Midwest, 35.7% South, 24.8% West in sample

- 18.0% Northeast, 22.0% Midwest, 36.4% South, 23.6% West in target population

Survey Methodology

This online survey polled 2,000 adult internet users in the United States from August 19 to 21, 2018, through the Google Surveys platform. Responses were weighted using gender, age, and region to match the demographics of the national internet population as estimated by the U.S. Census Bureau’s Current Population Survey.
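To illustrate what weighting to a target population involves, the sketch below computes a simple ratio weight (target share divided by sample share) for each group, using the gender, age, and region shares listed above. This is an assumption for illustration only: the report does not describe the exact weighting procedure, and an actual procedure would need to combine the three dimensions rather than treat each one separately. The function name `ratio_weights` is hypothetical.

```python
# Sample and target-population shares (percent), copied from the
# Gender, Age, and Region lists in this report.
sample = {
    "gender": {"male": 55.9, "female": 44.1},
    "age": {"18-24": 6.9, "25-34": 15.3, "35-44": 19.0,
            "45-54": 20.0, "55-64": 22.2, "65+": 16.6},
    "region": {"Northeast": 13.8, "Midwest": 25.7, "South": 35.7, "West": 24.8},
}
target = {
    "gender": {"male": 47.9, "female": 52.1},
    "age": {"18-24": 13.9, "25-34": 19.4, "35-44": 17.8,
            "45-54": 18.3, "55-64": 16.4, "65+": 14.2},
    "region": {"Northeast": 18.0, "Midwest": 22.0, "South": 36.4, "West": 23.6},
}


def ratio_weights(sample_share, target_share):
    """Hypothetical helper: weight each group by target share / sample share.

    Groups under-represented in the sample get a weight above 1,
    over-represented groups get a weight below 1.
    """
    return {group: target_share[group] / sample_share[group]
            for group in sample_share}


# One set of weights per dimension, computed independently (a simplification).
weights = {dim: ratio_weights(sample[dim], target[dim]) for dim in sample}

for dim, groups in weights.items():
    for group, weight in groups.items():
        print(f"{dim:>6} {group:<10} weight = {weight:.2f}")
```

Under this simplification, men in the sample receive a weight of roughly 0.86 and women roughly 1.18, while respondents aged 18 to 24, the most under-represented group, receive a weight of roughly 2.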

In the 2012 presidential election, Google Surveys was the second most accurate of the national polls as judged by polling expert Nate Silver. In addition, the Pew Research Center undertook a detailed assessment of Google Surveys and found them generally to be representative of the demographic profile of national internet users. In comparing Google Surveys results to its own telephone polls on 43 different substantive issues, Pew researchers found a median difference of about three percentage points between Google online surveys and Pew telephone polls. A 2016 analysis of Google Surveys published in the peer-reviewed methodology journal Political Analysis by political scientists at Rice University replicated a number of research results and concluded “GCS [Google Consumer Surveys] is likely to be a useful platform for survey experimentalists.”

This research was made possible by Google Surveys, which donated use of its online survey platform. The questions and findings are solely those of the researchers and not influenced by any donation. For more detailed information on the methodology, see the Google Surveys Whitepaper.

Commentary

Brookings survey finds divided views on artificial intelligence for warfare, but support rises if adversaries are developing it

August 29, 2018