This report is part of “A Blueprint for the Future of AI,” a series from the Brookings Institution that analyzes the new challenges and potential policy solutions introduced by artificial intelligence and other emerging technologies.

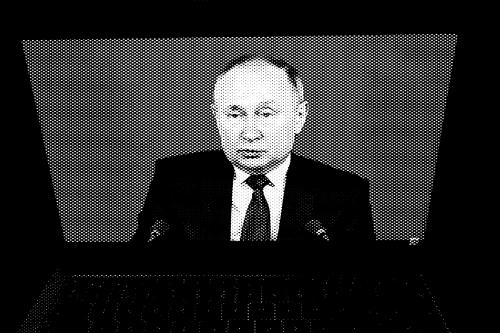

“Artificial intelligence is the future, not only for Russia, but for all humankind. It comes with colossal opportunities, but also threats that are difficult to predict. Whoever becomes the leader in this sphere will become the ruler of the world.”1 – Russian President Vladimir Putin, 2017.

“A people that no longer can believe anything cannot make up its mind. It is deprived not only of its capacity to act but also of its capacity to think and to judge. And with such a people you can then do what you please.”2 – Hannah Arendt, 1978

Speaking to Russian students on the first day of the school year in September 2017, Putin squarely positioned Russia in the technological arms race for artificial intelligence (AI). His comment (see above) signaled that, like China and the United States, Russia sees itself engaged in direct geopolitical competition with the world’s great powers, and that AI is the currency on which Russia is betting. But, unlike the United States and China, Russia lags behind in research and development on AI and other emerging technologies. Russia’s economy makes up less than 2 percent of global GDP, compared to 24 percent for the United States and 15 percent for China, which puts Russia on par with a country like Spain.3 Despite Putin’s focus on AI, the Russian government, unlike China’s, has not released a strategy on how the country plans to lead in this area. How much the Russian government will invest in AI research in the future is unknown, but reports estimate that it currently spends approximately $12.5 million a year4 on AI research, far behind China’s plan to invest $150 billion through 2030. The U.S. Department of Defense alone spends $7.4 billion annually on unclassified research and development on AI and related fields.5

Russia’s public corruption, declining rule of law, and increasingly oppressive government regulations have produced a poor business environment. As a consequence, the country trails the United States and China in private investment, scientific research, and the number of AI start-ups.6 In 2018, no Russian city entered the top 20 global regional hubs for the AI sector,7 despite the much-hyped opening of the “Skolkovo Innovation Center” in 2010, which was designed to be Russia’s answer to Silicon Valley. Skolkovo did not spur the private investment and innovation that the Kremlin had hoped for and has since fizzled out. Russia’s new venture, a “technopolis” named Era, set to open in the fall of 2018, now promises to be the new hub for emerging technologies, but it too is unlikely to spur Silicon Valley-like innovation.8 It is telling that, despite high-level presidential and administrative support, there is scant Russian-language academic research on AI.

The country’s stagnant, hydrocarbon-dependent economy is unlikely to do much to improve the government’s ability to ramp up investment in emerging technologies. In the longer term, Russia’s demographic crisis (Russia is projected to lose 8 percent of its population by 2050, according to the UN)9 will likely lead to shortages of highly skilled workers, many of whom have already left Russia for better pay and opportunities elsewhere.10 Western sanctions on key parts of the Russian financial sector and defense industry, which Europe and the United States imposed after Russia’s annexation of Crimea in 2014 and which the United States has continued to ramp up since, put extra pressure on the Russian economy. Taken together, these economic and demographic trends signal that in the AI race, Russia will be unable to match China on government investment or compete with the United States on private sector innovation.

The Kremlin is undoubtedly aware of the country’s unfavorable position in the global AI competition, even if it is unlikely ever to admit as much publicly. Strategically, such a wide gap between ambition and capacity means that Russia will need to invest its limited resources carefully. Currently, Moscow is pursuing investments in at least two directions: select conventional military and defense technologies in which the Kremlin believes it can still hold a comparative advantage over the West; and high-impact, low-cost asymmetric warfare to correct the imbalance between Russia and the West in the conventional domain. The former, Russia’s development and use of AI-driven military technologies and weapons, has received significant attention.11

The latter, the implications of AI for asymmetric political warfare, remains largely unexplored.12 Yet such nonconventional tools (cyber-attacks, disinformation campaigns, political influence, and illicit finance) have become a central tenet of Russia’s strategy toward the West, and one with which Russia has been able to project power and influence beyond its immediate neighborhood. In particular, AI has the potential to hyperpower Russia’s use of disinformation: the intentional spread of false and misleading information for the purpose of influencing politics and societies. And unlike in the conventional military space, the United States and Europe are ill-equipped to respond to AI-driven asymmetric warfare (ADAW) in the information space.

Russian information warfare at home and abroad

Putin came to power in 2000, and since then, information control and manipulation have become key elements of the Kremlin’s domestic and foreign policy. At home, this has meant repression of independent media and civil society, state control of traditional and digital media, and deepening government surveillance. For example, Russia’s surveillance system, SORM (System of Operative-Search Measures), allows the FSB (Federal Security Service) and other government agencies to monitor and remotely access ISP servers and communications without the ISPs’ knowledge.13 In 2016, a new package of laws, the so-called Yarovaya amendments, required telecom providers, social media platforms, and messaging services to store user data for three years and allow the FSB access to users’ metadata and encrypted communications.14 While little is known about how Russian intelligence agencies are using these data, their very collection suggests that the Kremlin is experimenting with AI-driven analysis to identify potential political dissenters. The government is also experimenting with facial recognition technologies in conjunction with CCTV. Moscow alone has approximately 170,000 cameras, at least 5,000 of which have been outfitted with facial recognition technology from NtechLab.15

Still, Moscow’s capacity to control and surveil the digital domain at home remains limited, as the battle between the messaging app Telegram and the Russian government in early 2018 demonstrated. Telegram, one of the few homegrown Russian tech companies, refused to hand over its encryption keys to the FSB.16 What followed was a haphazard government attempt to ban Telegram by blocking tens of millions of IP addresses, which caused massive disruptions to unrelated services, such as cloud providers, online games, and mobile banking apps. Unlike Beijing, which has effectively censored and controlled the internet as new technologies have developed, Moscow has not been able to implement similar controls preemptively. The result is that even a relatively small company like Telegram can outmaneuver and embarrass the Russian state. Despite such setbacks, however, Moscow seems set to continue on a path toward “digital authoritarianism,” using its increasingly unfettered access to citizens’ personal data to build better microtargeting capabilities that enhance social control, censor behavior and speech, and curtail counter-regime activities.17

Externally, Russian information warfare (informatsionaya voyna) has become part and parcel of Russian strategic thinking in foreign policy. Moscow has long seen the West as waging an information war against it, a notion enshrined in Russia’s 2015 national security strategy, which sees the United States and its allies as seeking to contain Russia by exerting “informational pressure…” in an “intensifying confrontation in the global information arena.”18 Under Putin, Cold War-era “active measures” (overt or covert operations aimed at influencing public opinion and politics abroad) have been revived and adapted to the digital age. Information warfare (or information manipulation)19 has emerged as a core component of a broader influence strategy. At the same time, the line between conventional (or traditional) and nonconventional (or asymmetric) warfare has blurred in Russian military thinking. “The erosion of the distinction between war and peace, and the emergence of a grey zone” has been one of the most striking developments in the Russian approach to warfare, according to Chatham House’s Keir Giles.20 Warfare, from this perspective, exists on a spectrum in which “political, economic, informational, humanitarian, and other nonmilitary measures” are used to lay the groundwork for last-resort military operations.21 According to contemporary Russian military thought, information warfare has become considerably more important on this spectrum in the 21st century.22

Maskirovka, the Soviet/Russian term for the art of deception and concealment in both military and nonmilitary operations, is a key concept that figures prominently in Russian strategic thinking. The concept is broader than the narrow definition of military deception. In the conventional military domain, it includes the deployment of decoys, camouflage, and misleading information to deceive the enemy on the battlefield. The use of “little green men,” or unmarked soldiers and mercenaries, in Russia’s annexation of Crimea in 2014 is one example of maskirovka in military practice. So is the use of fake weapons and heavy machinery: one Russian company is producing an army of inflatable missiles, tanks, and jets that appear real in satellite imagery.23

Maskirovka, as a theory and operational practice, also applies to nonmilitary asymmetric operations. Modern Russian disinformation and cyber attacks against the West rely on obfuscation and deception in line with the guiding principles of maskirovka. During the 2016 U.S. presidential election, for example, Russian citizens working in a troll factory in St. Petersburg, known as the Internet Research Agency (IRA), set up fake social media accounts posing as real Americans. These personas then spread conspiracy theories, disinformation, and divisive content meant to amplify societal polarization by pitting opposing groups against each other.24 The IRA troll factory itself, while operating with the knowledge and support of the Kremlin and the Russian intelligence services, was founded and managed through a proxy: Yevgeny Prigozhin, a Russian oligarch known as “Putin’s chef.” Concord, a catering company controlled by Prigozhin, was the main funder and manager of the IRA, and it went to great lengths to conceal its involvement, including setting up a web of fourteen bank accounts to transfer funding to the IRA.25 Such obfuscation tactics were designed to conceal the true source and goals of the influence operations in the United States while allowing the Kremlin to retain plausible deniability if the operations were uncovered: nonconventional maskirovka in practice.

On the whole, Russia’s limited financial resources, the shift in its strategic thinking toward information warfare, and the continued prevalence of maskirovka as a guiding principle of engagement strongly suggest that, in the near term, Moscow will ramp up the development of AI-enabled information warfare. Russia will not be the driver or innovator of these new technologies due to its financial and human capital constraints. But, as it has already done in its attacks against the West, it will continue to co-opt existing commercially available technologies as weapons of asymmetric warfare.

AI-driven asymmetric warfare

The Kremlin’s greatest innovation in its information operations against the West has not been technical. Rather, Moscow’s savviness has been to recognize that: (1) ready-made commercial tools and digital platforms can be easily weaponized; and (2) digital information warfare is cost-effective and high-impact, making it the perfect weapon for a technologically and economically weak power. AI-driven asymmetric warfare (ADAW) capabilities could give Russia an additional comparative advantage.

U.S. government and independent investigations into Russia’s influence campaign against the United States during the 2016 elections reveal how little that effort cost. Based on publicly available information,26 we know that the Russian effort included: the purchase of ads on Facebook (estimated cost $100,000)27 and Google (approximate cost $4,700); the setup of approximately 36,000 automated bot accounts on Twitter; the operation of the IRA troll farm (estimated cost $3 million over the course of two years);28 an intelligence-gathering trip carried out by two Russian agents posing as tourists in 2014 (estimated cost $50,000);29 the production of misleading or divisive content (pictures, memes, etc.); and additional costs related to the cyber attacks on the Democratic National Committee and the Clinton campaign. In sum, the total known cost of the most high-profile influence operation against the United States is likely around four million dollars.
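The itemized figures above can be tallied with simple arithmetic. The sketch below is illustrative only: it assumes that the IRA's English-language operation consumed the same share (roughly 10 percent) of the IRA's approximately $1.25 million monthly budget as its share of staff, both figures cited in note 28, and that this rate held for 24 months. The variable and function names are hypothetical. Only priced items are summed; the bot accounts, content production, and cyber attacks are listed in the text without a cost and are left out.

```python
# Illustrative tally of the priced 2016 cost items cited in the text (USD).
# Assumption (not stated in the sources): the English-language operation's
# share of the IRA budget equals its ~10 percent share of staff.

IRA_MONTHLY_BUDGET_USD = 1_250_000   # total IRA monthly budget, 2016 (note 28)
ENGLISH_OPS_SHARE_PCT = 10           # ~80 of ~800-900 employees (note 28)
MONTHS = 24                          # "over the course of two years"


def ira_english_ops_cost() -> int:
    """Estimate the English-language operation's cost using integer math."""
    monthly = IRA_MONTHLY_BUDGET_USD * ENGLISH_OPS_SHARE_PCT // 100
    return monthly * MONTHS


ITEMIZED_COSTS_USD = {
    "Facebook ads": 100_000,
    "Google ads": 4_700,
    "IRA English-language operations": ira_english_ops_cost(),
    "2014 intelligence-gathering trip": 50_000,
}


def itemized_total(costs: dict[str, int]) -> int:
    """Sum only the line items that have a published or estimated price."""
    return sum(costs.values())


if __name__ == "__main__":
    for item, cost in ITEMIZED_COSTS_USD.items():
        print(f"{item:<34} ${cost:>12,}")
    print(f"{'Itemized total':<34} ${itemized_total(ITEMIZED_COSTS_USD):>12,}")
```

Under these assumptions the priced items come to about $3.15 million; the estimate of roughly $4 million leaves room for the items the public record does not price.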

The relatively small investment produced high returns. On Facebook alone, Russian-linked content from the IRA reached 125 million Americans.30 This is because the Russian strategy relied on ready-made tools designed for commercial online marketing and advertising: the Kremlin simply took the same online advertising tools that companies use to sell and promote their products and adapted them to spread disinformation. Since the 2016 operation, these and other tools have evolved, presenting new opportunities for far more damaging, lower-cost, and harder-to-attribute ADAW operations.
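The scale of those returns can be made concrete by dividing the estimated total cost of roughly $4 million by the 125 million Americans reached on Facebook. This is a rough upper bound under a stated assumption: the sketch charges the entire budget to Facebook reach alone, ignoring reach on other platforms and any overlap in who was counted. The function name is hypothetical; `fractions.Fraction` keeps the division exact.

```python
from fractions import Fraction

# Rough cost per American reached via Facebook, using figures from the text.
# Assumption: the whole ~$4 million estimate is attributed to Facebook reach,
# which overstates the true per-person cost.

TOTAL_COST_USD = 4_000_000       # approximate total cost of the 2016 operation
FACEBOOK_REACH = 125_000_000     # Americans reached by IRA content on Facebook


def cost_per_person_usd(total_usd: int, reach: int) -> Fraction:
    """Return the exact cost per person reached, in dollars."""
    return Fraction(total_usd, reach)


if __name__ == "__main__":
    cents = cost_per_person_usd(TOTAL_COST_USD, FACEBOOK_REACH) * 100
    print(f"about {float(cents):.1f} cents per person reached")
```

Even as an upper bound, this works out to a few cents per person reached.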

Three threat vectors in particular require immediate attention. First, advances in deep learning are making synthetic media content quick, cheap, and easy to produce. AI-enabled audio and video manipulation, so-called “deep fakes,” is already possible with tools such as Face2Face,31 which maps one person’s facial expressions onto another person’s face in a target video. Video-to-Video Synthesis32 can synthesize realistic video from a baseline set of inputs. Other tools can synthesize realistic photographs of AI-rendered faces, reproduce video and audio of world leaders,33 and render street scenes as if they appeared in a different season.34 Using similar tools, China recently unveiled an AI-generated news anchor.35 As the barriers to entry for accessing such tools continue to fall, their appeal to low-resource actors will grow. Whereas most Russian disinformation content has been static (e.g., false news stories, memes, graphically designed ads), advances in deep learning will make disinformation dynamic (e.g., video, audio).

Because audio and video can easily be shared on smartphones and do not require literacy, dynamic disinformation content will be able to reach a broader audience in more countries. In India, for example, false videos shared through WhatsApp incited riots and murders.36 Unlike Facebook or Twitter, WhatsApp (owned by Facebook) is an end-to-end encrypted messaging platform, which means that content shared via the platform is effectively unmonitored and untraceable. This “democratization of disinformation” will make it difficult for governments to counter AI-driven disinformation. Advances in machine learning are producing algorithms that “continuously learn how to more effectively replicate the appearance of reality,” which means that “deep fakes cannot easily be detected by other algorithms.”37

Russia, China, and others could harness these new publicly available technologies to undermine Western soft power or public diplomacy efforts around the world. Debunking or attributing such content will require far more resources than producing it, and doing so in real time will be difficult if not impossible.

Second, advances in affective computing and natural language processing will make it easier to manipulate human emotions and extract sensitive information without ever hacking an email account. In 2017, Chinese researchers created an “emotional chatting machine” based on data users shared on Weibo, the Chinese social media site.38 As AI gains access to more personal data, it will become increasingly customized and personalized to appeal to and manipulate specific users. Coupled with advances in natural language processing, such as voice recognition, this means that affective systems will be able to mimic, respond to, and predict human emotions expressed through text, voice, or facial expressions. Some evidence suggests that humans are quite willing to form personal relationships, share deeply personal information, and interact for long periods of time with AI designed to form relationships.39 These systems could be used to gather information from high-value targets, such as intelligence officers or political figures, by exploiting their vices and patterns of behavior.

Third, deep fakes and emotionally manipulative content will be able to reach the intended audience with a high degree of precision due to advances in content distribution networks. “Precision propaganda” is the set of interconnected tools that comprise an “ecosystem of services that enable highly targeted political communications that reach millions of people with customized messages.”40 This ecosystem, which includes data collection, advertising platforms, and search engine optimization, aims to parse audiences in granular detail and identify new receptive audiences, and its full scope will be “supercharged” by advances in AI. The content that users see online is the end product of an underlying multibillion-dollar industry in which thousands of companies work together to assess individuals’ preferences, attitudes, and tastes to maximize the efficiency, profitability, and real-time responsiveness of content delivery. Russian operations, as far as we know, relied on the most basic of these tools. But, as Ghosh and Scott suggest, a more advanced operation could use the full suite of services available to companies to track political attitudes on social media across all congressional districts, analyze who is most likely to vote and where, and then launch, almost instantly, a customized campaign at a highly localized level to discourage voting in the most vulnerable districts. Because of its highly personalized structure, such a campaign would likely have a significant impact on voting behavior.41

Once the precision of this distribution ecosystem is paired with emotionally manipulative deep fake content delivered by online entities that appear to be human, the line between fact and fiction will cease to exist. And Hannah Arendt’s prediction of a world in which there is no truth and no trust may still come to pass.

Responding to AI-driven asymmetric warfare

Caught by surprise by Russia’s influence operations against the West, governments in the United States and Europe are now establishing processes, agencies, and laws to respond to disinformation and other forms of state-sponsored influence operations.42 However, these initiatives, while long overdue, will not address the next generation of ADAW threats emanating from Russia or other actors. So far, Western attempts to deter Russian malign influence, such as economic sanctions, have not changed Russian behavior: cyber-attacks, intelligence operations, and disinformation attacks have continued in Europe and the United States.

To get ahead of AI-driven hyper war,43 policymakers should focus on designing a deterrence strategy for nonconventional warfare.44

The first step toward such a strategy should be to identify and then seek to remedy the information asymmetries between policymakers and the tech industry. In 2015, the U.S. Department of Defense launched the Defense Innovation Unit (DIU) to fund the development of new technologies with defense implications.45 DIU’s mission could be expanded to include piloting AI research and development on tools to identify and counter dynamic disinformation and other asymmetric threats.

The U.S. Congress has established an AI Caucus, co-chaired by Congressman John Delaney and Congressman Pete Olson. The Caucus should make it a priority to pass legislation setting out the U.S. government’s strategy on AI, much as China, France, and the EU have done. The FUTURE of Artificial Intelligence Act, introduced in 2017, begins this process, but it should be expanded to include asymmetric threats and incorporated into the broader national security strategy and the National Defense Authorization Act. Such legislation should also mandate an immediate review of the tools the U.S. government currently has to respond to an advanced disinformation attack. Part of the review should include a report, in both classified and unclassified versions, on investments in AI research and development across the U.S. government and on the preparedness of various agencies to respond to future attacks. As attribution becomes more difficult, such a report should also recommend a set of baselines and metrics that would warrant a governmental response, and what form that response should take.

Step two toward a deterrence strategy would involve informing the Russian government and other adversarial regimes of the consequences of deploying AI-enabled disinformation attacks. Such messaging should be carried out publicly by high-level cabinet officials and in communications between intelligence agencies. Deterrence can only be effective if each side is aware of the implications of its actions. It also requires a nuanced understanding of the adversary’s strategic intentions and tactical capabilities: Russian strategic thinking differs from Chinese strategic thinking. Deterrence is not a one-size-fits-all model. Policymakers will need to develop a more in-depth understanding of Russian (or Chinese) culture, perceptions, and thinking. To that end, the U.S. government should reinvest significantly in cultivating expertise and training the next generation of regional experts.

Inevitably, policy will not be able to keep pace with technological advances. Tech companies, research foundations, governments, private foundations, and major non-profit policy organizations should invest in research that will assess the short- and long-term consequences of emerging AI technologies for foreign policy, national security, and geopolitical competition. Research and strategic thinking at the intersection of technology and geopolitics are sorely lacking, even as Russia, a country identified as a direct competitor to the United States in the 2017 National Security Strategy, prioritizes expanding its capacities in this area. The United States is still far ahead of its competitors, especially Russia. But even with limited capabilities, the Kremlin can quickly gain a comparative advantage in AI-driven information warfare, leaving the West to once again be caught off guard.

Footnotes

- “’Whoever leads in AI will rule the world’: Putin to Russian children on Knowledge Day,” RT, September 1, 2017, https://www.rt.com/news/401731-ai-rule-world-putin/.

- Hannah Arendt, “Hannah Arendt: From an Interview,” The New York Review of Books 25, no. 16 (October 26, 1978), https://www.nybooks.com/articles/1978/10/26/hannah-arendt-from-an-interview/.

- World Bank, “Gross domestic product 2017,” (Washington, DC: World Bank, September 2018), http://databank.worldbank.org/data/download/GDP.pdf.

- Samuel Bendett, “In AI, Russia Is Hustling to Catch Up,” Defense One, April 4, 2018, https://www.defenseone.com/ideas/2018/04/russia-races-forward-ai-development/147178/.

- Julian E. Barnes and Josh Chin, “The New Arms Race in AI,” The Wall Street Journal, March 2, 2018, https://www.wsj.com/articles/the-new-arms-race-in-ai-1520009261.

- Asgard and Roland Berger, “The Global Artificial Intelligence Landscape,” Asgard, May 14, 2018, https://asgard.vc/global-ai/.

- Ibid.

- The optimistic projection is for private investment in Russia to reach $500 million by 2020, but this will do little to put Russia on the innovation map. See Bendett, “In AI, Russia Is Hustling to Catch Up.”

- Department of Economic and Social Affairs, Population Division, “World Population Prospects: The 2017 Revision, Key Findings and Advance Tables,” (New York, NY: United Nations, 2017): 26, https://esa.un.org/unpd/wpp/Publications/Files/WPP2017_KeyFindings.pdf.

- “Russia’s Brain Drain on the Rise Over Economic Woes — Report,” The Moscow Times, January 24, 2018, https://themoscowtimes.com/news/russias-brain-drain-on-the-rise-over-economic-woes-report-60263.

- An analysis of the implications of AI for the Russian military is beyond the scope of this short paper. But see Michael Horowitz et al., “Strategic Competition in an Era of Artificial Intelligence,” (Washington, DC: Center for a New American Security, July 2018): 15-17, http://files.cnas.org.s3.amazonaws.com/documents/CNAS-Strategic-Competition-in-an-Era-of-AI-July-2018_v2.pdf; Samuel Bendett, “Here’s How the Russian Military Is Organizing to Develop AI,” Defense One, July 20, 2018, https://www.defenseone.com/ideas/2018/07/russian-militarys-ai-development-roadmap/149900/; Bendett, “In AI, Russia Is Hustling to Catch Up;” Samuel Bendett, “Should the U.S. Army Fear Russia’s Killer Robots?,” The National Interest, November 8, 2017, https://nationalinterest.org/blog/the-buzz/should-the-us-army-fear-russias-killer-robots-23098; Samuel Bendett, “Red Robots Rising: Behind the Rapid Development of Russian Unmanned Military Systems,” Real Clear Defense, December 12, 2017, https://www.realcleardefense.com/articles/2017/12/12/red_robots_risin_112770.html.

- See, however, Alina Polyakova and Spencer Boyer, The Future of Political Warfare: Russia, the West, and the Coming Age of Global Digital Competition, Brookings Institution, March 2018.

- James Andrew Lewis, “Reference Note on Russian Communications Surveillance,” Center for Strategic and International Studies, April 18, 2014, https://www.csis.org/analysis/reference-note-russian-communications-surveillance.

- “Overview of the Package of Changes into a Number of Laws of the Russian Federation Designed to Provide for Additional Measures to Counteract Terrorism,” (Washington, DC: The International Center for Not-for-Profit Law, July 21, 2016), http://www.icnl.org/research/library/files/Russia/Yarovaya.pdf.

- Cara McGoogan, “Facial recognition fitted to 5,000 CCTV cameras in Moscow,” The Telegraph, September 29, 2017, https://www.telegraph.co.uk/technology/2017/09/29/facial-recognition-fitted-5000-cctv-cameras-moscow/.

- Dani Deahl, “Russia orders Telegram to hand over users’ encryption keys,” The Verge, March 20, 2018, https://www.theverge.com/2018/3/20/17142482/russia-orders-telegram-hand-over-user-encryption-keys.

- Nicholas Wright, “How Artificial Intelligence Will Reshape the Global Order,” Foreign Affairs, July 10, 2018, https://www.foreignaffairs.com/articles/world/2018-07-10/how-artificial-intelligence-will-reshape-global-order.

- For a Russian-language version of the 2015 National Security Strategy see “Указ Президента Российской Федерации от 31 декабря 2015 года N 683 “О Стратегии национальной безопасности Российской Федерации,” Rossiyskaya Gazeta, December 31, 2015, https://rg.ru/2015/12/31/nac-bezopasnost-site-dok.html. For an English translation of the 2015 Russian National Security Strategy see “Russian National Security Strategy, December 2015 – Full-text Translation,” Spanish Institute for Strategic Studies, http://www.ieee.es/Galerias/fichero/OtrasPublicaciones/Internacional/2016/Russian-National-Security-Strategy-31Dec2015.pdf. In addition, it is important to note that the oft-cited “Gerasimov Doctrine,” named for Russian General Valery Gerasimov, the Chief of the General Staff of the Russian Armed Forces, which lays out the strategy of “hybrid warfare,” was primarily written as a description of what the Russians thought the West (led by the United States) had done during the Color Revolutions and the Arab Spring.

- Jean-Baptiste Jeangène Vilmer et al., “Information Manipulation: A Challenge for Our Democracies,” (Paris, France: Ministry for Europe and Foreign Affairs and the Institute for Strategic Research of the Ministry for the Armed Forces, August 2018), https://www.diplomatie.gouv.fr/IMG/pdf/information_manipulation_rvb_cle838736.pdf.

- Keir Giles, “The Next Phase of Russian Information Warfare,” (Riga, Latvia: NATO Strategic Communications Centre of Excellence, 2016), https://www.stratcomcoe.org/next-phase-russian-information-warfare-keir-giles.

- General Valery Gerasimov, Chief of the General Staff of the Russian Federation, as quoted in Mark Galeotti, “The ‘Gerasimov Doctrine’ and Russian Non-Linear War,” In Moscow’s Shadows (blog), July 6, 2014, https://inmoscowsshadows.wordpress.com/2014/07/06/the-gerasimov-doctrine-and-russian-non-linear-war/.

- See Jolanta Darczewska, “The Devil is in the Details: Information Warfare in the Light of Russia’s Military Doctrine,” OSW Point of View, no. 50 (May 2015), https://www.osw.waw.pl/sites/default/files/pw_50_ang_the-devil-is-in_net.pdf.

- Kyle Mizokami, “A Look at Russia’s Army of Inflatable Weapons,” Popular Mechanics, October 12, 2016, https://www.popularmechanics.com/military/weapons/a23348/russias-army-inflatable-weapons/.

- Alicia Parlapiano and Jasmine C. Lee, “The Propaganda Tools Used by Russians to Influence the 2016 Election,” The New York Times, February 16, 2018, https://www.nytimes.com/interactive/2018/02/16/us/politics/russia-propaganda-election-2016.html.

- UNITED STATES OF AMERICA v. INTERNET RESEARCH AGENCY LLC A/K/A MEDIASINTEZ LLC A/K/A GLAVSET LLC A/K/A MIXINFO LLC A/K/A AZIMUT LLC A/K/A NOVINFO LLC et al. 18 U.S.C. §§ 2, 371, 1349, 1028A (2018). https://www.justice.gov/file/1035477/download.

- This includes the indictments from the Department of Justice’s Special Counsel Investigation, findings of the US intelligence community, disclosures made by Facebook, Twitter, and Google in Congressional testimony and elsewhere, and investigative reports.

- Jessica Guynn, Elizabeth Weise, and Erin Kelly, “Thousands of Facebook ads bought by Russians to fool U.S. voters released by Congress,” USA Today, May 10, 2018, https://www.usatoday.com/story/tech/2018/05/10/thousands-russian-bought-facebook-social-media-ads-released-congress/849959001/.

- According to the Department of Justice, the total monthly operating budget for the IRA was approximately $1.25 million in 2016 (UNITED STATES OF AMERICA v. INTERNET RESEARCH AGENCY LLC A/K/A MEDIASINTEZ LLC A/K/A GLAVSET LLC A/K/A MIXINFO LLC A/K/A AZIMUT LLC A/K/A NOVINFO LLC, et al. 18 U.S.C. §§ 2, 371, 1349, 1028A (2018). https://www.justice.gov/file/1035477/download.), which included approximately 800-900 employees (Polina Rusyaeva and Andrey Zakharov, “Расследование РБК: как «фабрика троллей» поработала на выборах в США,” RBC, October 17, 2017, https://www.rbc.ru/magazine/2017/11/59e0c17d9a79470e05a9e6c1.). Out of those employees, more than 80 worked for the English language operations, which is approximately 10 percent of the overall organization (Ben Collins, Gideon Resnick, Spencer Ackerman, “Leaked: Secret Documents From Russia’s Election Trolls,” The Daily Beast, March 1, 2018, https://www.thedailybeast.com/exclusive-secret-documents-from-russias-election-trolls-leak).

- Author’s estimate based on duration and activities of the travel.

- Craig Timberg and Elizabeth Dwoskin, “Russian content on Facebook, Google and Twitter reached far more users than companies first disclosed, congressional testimony says,” The Washington Post, October 30, 2017, https://www.washingtonpost.com/business/technology/2017/10/30/4509587e-bd84-11e7-97d9-bdab5a0ab381_story.html?utm_term=.804086c68482.

- Justus Thies et al., “Face2Face: Real-time Face Capture and Reenactment of RGB Videos,” (Las Vegas, US: The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016), http://www.graphics.stanford.edu/~niessner/thies2016face.html.

- Ting-Chun Wang et al., “Video-to-Video Synthesis,” arXiv (2018), https://tcwang0509.github.io/vid2vid/.

- Supasorn Suwajanakorn, “Synthesizing Obama: Learning Lip Sync from Audio,” YouTube video, 8:00, July 11, 2017, https://www.youtube.com/watch?time_continue=201&v=9Yq67CjDqvw.

- I would like to thank Matt Chessen for bringing these new tools to my attention. See Ming-Yu Liu, “Snow2SummerImageTranslation-06,” YouTube video, 0:10, October 3, 2017, https://www.youtube.com/watch?v=9VC0c3pndbI.

- Will Knight, “This is fake news! China’s ‘AI news anchor’ isn’t intelligent at all,” MIT Technology Review, November 9, 2018, https://www.technologyreview.com/the-download/612401/this-is-fake-news-chinas-ai-news-anchor-isnt-intelligent-at-all/.

- Cory Doctorow, “A viral hoax video has inspired Indian mobs to multiple, brutal murders,” BoingBoing, June 20, 2018, https://boingboing.net/2018/06/20/indian-pizzagate.html.

- Chris Meserole and Alina Polyakova, “Disinformation Wars,” Foreign Policy, May 25, 2018, https://foreignpolicy.com/2018/05/25/disinformation-wars/.

- Hao Zhou et al., “Emotional Chatting Machine: Emotional Conversation Generation with Internal and External Memory,” (Ithaca, NY: Cornell University, April 4, 2017, last revised June 1, 2018), https://arxiv.org/abs/1704.01074.

- Liesl Yearsley, “We Need to Talk About the Power of AI to Manipulate Humans,” A View from Liesl Yearsley (blog), MIT Technology Review, June 5, 2017, https://www.technologyreview.com/s/608036/we-need-to-talk-about-the-power-of-ai-to-manipulate-humans/.

- Dipayan Ghosh and Ben Scott, “Digital Deceit: The Technologies Behind Precision Propaganda on the Internet,” (Washington, DC: New America, January 23, 2018), https://www.newamerica.org/public-interest-technology/policy-papers/digitaldeceit/.

- Ibid.

- Daniel Fried and Alina Polyakova, “Democratic Defense Against Disinformation,” (Washington, DC: The Atlantic Council, March 5, 2018), http://www.atlanticcouncil.org/publications/reports/democratic-defense-against-disinformation.

- Darrell M. West and John R. Allen, “How artificial intelligence is transforming the world,” (Washington, DC: Brookings Institution, April 24, 2018), https://www.brookings.edu/research/how-artificial-intelligence-is-transforming-the-world/.

- For a cyber deterrence framework for the Department of Defense, see Christopher Paul and Rand Waltzman, “How the Pentagon Should Deter Cyber Attacks,” The RAND Blog, January 10, 2018, https://www.rand.org/blog/2018/01/how-the-pentagon-should-deter-cyber-attacks.html.

- “Work with us,” Defense Innovation Unit, https://www.diux.mil/work-with-us/companies.

The Brookings Institution is committed to quality, independence, and impact.

We are supported by a diverse array of funders. In line with our values and policies, each Brookings publication represents the sole views of its author(s).