The Latam-GPT team is refining its model with locally sourced, Spanish-language materials including court decisions from Buenos Aires, library records from Peru, school textbooks from Colombia, and other regional datasets. Coordinated by Chile’s National Center for Artificial Intelligence (CENIA), the project tests whether Latin America can move from digital consumption to creation—building AI that reflects local languages, cultures, and histories rather than importing Silicon Valley’s assumptions.

Latin America’s regional AI initiative, Latam-GPT, is being built with around 50 billion parameters—placing it in the same order of magnitude as GPT-3.5—and draws on a corpus of over 8 terabytes of text gathered from more than 30 institutions across Latin America. While precise comparisons are difficult, the training data volume appears markedly smaller than that of large global models. Behind these specs lies a larger question: Can a region that has long imported technology now become its architect?

The technical gambit: Build or borrow?

The sovereignty debate in AI is often reduced to a binary choice: train from scratch or adapt existing models.

Switzerland recently followed the first route; its open-source, multilingual large language model (LLM) shows that sovereign AI—understood as the capacity of a country or region to control its AI systems so that they are aligned with national strategic interests, legal and ethical norms, cultural priorities, and security needs—is technically feasible. But it also highlights the demands: sustained public investment, strong institutional coordination, and centralized capacity.

Fine-tuning existing models offers a pragmatic alternative. “Head tuning” modifies the model’s final layer without changing how it processes information. Low-Rank Adaptation (LoRA) adds small, trainable low-rank matrices alongside the frozen weights of the model’s inner layers. If the model were a radio, head tuning would adjust the speaker, whereas LoRA would tweak the equalizer. Ultimately, both shape behavior without rebuilding the system.
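To make the LoRA idea concrete, here is a minimal sketch in plain Python, assuming the standard formulation in which a frozen weight matrix W is left untouched and only two small matrices, A and B, are trained. The layer sizes, rank, and scaling value are illustrative assumptions, not details of Latam-GPT.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, alpha, rank):
    """Frozen base output plus a scaled low-rank correction: W x + (alpha / rank) * B (A x)."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]


def trainable_params(d_in, d_out, rank):
    """Return (adapter parameters, full weight-matrix parameters) for one layer."""
    return rank * (d_in + d_out), d_in * d_out


random.seed(0)
d_in, d_out, rank, alpha = 8, 6, 2, 4.0  # toy sizes chosen for illustration only

W = [[random.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]    # frozen base weights
A = [[random.gauss(0, 0.01) for _ in range(d_in)] for _ in range(rank)]  # trainable, small random init
B = [[0.0] * rank for _ in range(d_out)]                                 # trainable, starts at zero
x = [random.gauss(0, 1) for _ in range(d_in)]

# Because B starts at zero, the adapted layer initially reproduces the base model exactly.
assert lora_forward(W, A, B, x, alpha, rank) == matvec(W, x)

adapter, full = trainable_params(4096, 4096, 8)
print(f"adapter: {adapter:,} parameters vs. full matrix: {full:,}")
```

For a 4,096-by-4,096 layer at rank 8, the adapter holds 65,536 trainable values against roughly 16.8 million in the full matrix, which is why this kind of adaptation needs so much less compute than retraining.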

Now imagine a base Latam-GPT with LoRA adapters fine-tuned for slang like Argentina’s “pibe,” Brazil’s “cara,” or Chile’s “cabro.” You could also apply adapters tuned to country-specific routines, like Argentina’s ARCA filings, Brazil’s PIX payments, or Chile’s Fonasa health system. In other words, imagine adapting an open-source model to local contexts with far less compute power—and in a matter of days instead of months.

Yet this path comes with hidden dependencies. Fine-tuning inherits the foundational assumptions of the base model: its tokenization strategies, attention mechanisms, and embedded cultural biases. A model trained primarily on English text may struggle with the morphological complexity of Spanish or the contextual richness of indigenous languages, no matter how sophisticated the fine-tuning.

CENIA’s approach splits the difference. Rather than relying purely on adaptation, the project follows a middle path: training on regional data while leveraging a multilingual architecture. Built on Llama 3, the model draws from a corpus spanning over 1,500 languages in a deliberate attempt to balance global coverage with regional specificity.

The cultural dimension: Beyond translation

The technical challenges pale beside the cultural ones. Language models don’t just process text; they encode values and worldviews. When ChatGPT struggles to understand Argentine politics or Colombian social movements, it’s not a bug—it’s a feature of its training data.

Global AI models often falter in local contexts. In Haitian Creole, ChatGPT once translated “asylum” as “Ewoyezi,” a made-up word. In Latin America, users report tone-deaf responses in regional Spanish, with models defaulting to Iberian phrases or misreading local acronyms.

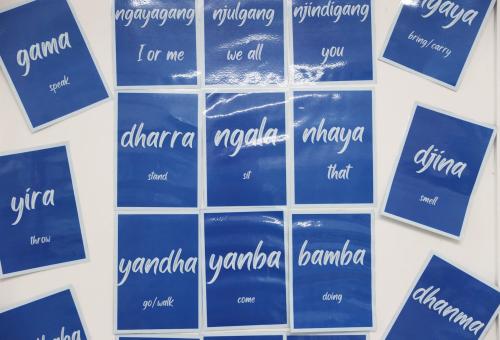

The tokenization problem is equally revealing: Language models break text into tiny pieces called tokens, and when those pieces don’t match how a language naturally works, meaning and nuance get lost. Western models tend to over-fragment non-Latin scripts, but in Latin America the challenge runs deeper. How do you capture code-switching—the fluid movement between Spanish, Portuguese, and indigenous languages—or reflect the semantic richness of “mañana” in its many cultural contexts?
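A toy example can illustrate how fragmentation happens. The sketch below assumes a greedy longest-match tokenizer with a tiny, English-leaning vocabulary that falls back to raw UTF-8 bytes for anything it does not recognize. The function name and vocabulary are hypothetical and far simpler than any production tokenizer, but the fallback behavior mirrors what byte-level schemes do with unfamiliar characters.

```python
def greedy_tokenize(text, vocab):
    """Greedy longest-match over a fixed vocabulary, falling back to raw UTF-8 bytes."""
    tokens = []
    i = 0
    max_len = max(len(piece) for piece in vocab)
    while i < len(text):
        # Try the longest candidate first, then progressively shorter ones.
        for length in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + length]
            if piece in vocab:
                tokens.append(piece)
                i += length
                break
        else:
            # No vocabulary entry covers this character: emit one token per UTF-8 byte.
            tokens.extend(f"<0x{b:02X}>" for b in text[i].encode("utf-8"))
            i += 1
    return tokens


# Hypothetical vocabulary: whole English words, but only fragments of Spanish.
TOY_VOCAB = {"tomorrow", "morning", "ma", "na", "a", "n"}

english = greedy_tokenize("tomorrow", TOY_VOCAB)
spanish = greedy_tokenize("ma\u00f1ana", TOY_VOCAB)  # "\u00f1" is the single character ñ

print(english)  # ['tomorrow']
print(spanish)  # ['ma', '<0xC3>', '<0xB1>', 'a', 'na']
```

The English word survives as one token, while the Spanish word splits into five, two of which are bare bytes that carry no meaning on their own. A vocabulary learned from regional text would be expected to keep such words intact.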

When I ask ChatGPT to describe Latin American culture in 180 characters, its response is tellingly generic: “Vibrant, diverse, and rooted in indigenous, African, and European heritage, Latin American culture blends tradition, family, music, and passion in everyday life.” Latam-GPT’s team hopes to move beyond such superficial descriptions.

Latam-GPT’s training corpus includes not just Spanish and Portuguese but indigenous languages, each with distinct grammatical structures. Quechua’s agglutinative morphology, Guaraní’s evidential markers, and Mapudungun’s complex verbal system represent computational hurdles beyond vocabulary expansion.

CENIA’s experiment

What makes Latam-GPT remarkable isn’t its technical architecture, but its institutional coordination. In a region where cross-border collaboration often stalls, Latam-GPT’s design reflects the region’s institutional diversity. It provides a shared base that can be fine-tuned for national priorities—Argentine legal documents, Colombian health records, and Mexican curricula, among other use cases. This federated logic acknowledges that common linguistic roots coexist with wide differences in governance and user needs.

Unlike commercial AI development, which prioritizes proprietary advantages, Latam-GPT will be open-source and available to the public. This reflects both necessity—the region lacks resources for proprietary development—and strategic choice.

The skeptical view

For all its ambition, Latam-GPT faces structural obstacles. The most fundamental is the attention economy. AI models require constant updates and refinement. Silicon Valley’s advantage isn’t just initial capital but sustained investment. Can Latin America’s institutions maintain long-term commitment?

The talent drain problem looms large. Most countries see their best AI researchers migrate to higher-paying opportunities in the Global North, making it difficult to build the deep expertise required for sustained model development. Latam-GPT’s success depends on reversing this trend.

User adoption poses a classic chicken-or-egg dilemma. Developers build only if users switch, and users switch only if the tools are better. Solving this takes more than performance: it requires institutional support and a regional ecosystem, especially from the countries that are designing the model and embedding these choices in it.

AI as infrastructure

Infrastructure used to mean roads and dams. Today it includes graphics processing units (GPUs) and structured data.

Latam-GPT tests whether AI can function as public infrastructure—managed for collective benefit, not private profit. While commercial AI chases engagement, public models should serve education, health, and cultural preservation.

Early public-sector applications are particularly promising. Several Chilean municipalities are testing Latam-GPT to help reduce school dropout rates and improve public health services. The model’s regional training data should make it more effective than global alternatives at understanding local contexts.

Estimates place Brazil among the world’s leading ChatGPT users, while businesses across the region increasingly deploy AI for customer support. But this adoption highlights the dependency problem. And, as Singapore’s comparable SEA-LION initiative shows, cross-country AI efforts succeed when governance is centralized and resources are deep—conditions Latin America mostly lacks.

The path forward: pragmatic sovereignty

Latam-GPT faces the same representation problems that plague global models: ensuring indigenous peoples, migrant communities, and marginalized groups participate in the model’s validation. CENIA’s general manager admits that “it will likely take at least a decade” to achieve meaningful representation of the region’s full diversity.

Latam-GPT’s test will be whether it can provide better directions in Santiago, more accurate historical context for regional events, and nuanced understandings of local political dynamics. But its bigger challenge is sustainability: Its federated model depends on coordination mechanisms that outlast political and funding cycles.

Most importantly, Latam-GPT’s impact will be measured not solely in parameters or performance metrics but in its contribution to development. If the model helps teachers in rural Colombia or bureaucrats in urban Mexico do their jobs better, it will have succeeded regardless of technical specifications.

Ultimately, Latin America’s AI sovereignty depends on the political will to invest in long-term technological development and institutional capacity to sustain it. Latam-GPT offers a promising start—but the real test lies ahead. The goal isn’t to match Silicon Valley but to build AI that serves Latin America’s own.


Acknowledgements and disclosures

Google is a general, unrestricted donor to the Brookings Institution. The findings, interpretations, and conclusions posted in this piece are solely those of the authors and are not influenced by any donation.


Commentary

Latam-GPT and the search for AI sovereignty

November 25, 2025