In 2008, researchers at Google noticed a startling phenomenon: search queries about the flu correlated with the illness’s spread in a given region, and a machine-learning model could use them to predict outbreaks. The model Google built was initially accurate, but by 2013 it had run into trouble, overestimating the amount of flu by roughly a factor of two. After several failed attempts to salvage the model, the project was quietly discontinued in 2015. The exact causes of the failure remain unknown, since Google has released limited details, but the company’s algorithm appears to have struggled with changes in user behavior over time, changes caused partly by Google’s own modifications to its product.

Machine learning, currently the dominant approach to artificial intelligence, contributes to technologies as diverse as autonomous driving, medical screening, and supply-chain management. Yet despite its successes, it often fails in unintuitive ways. If we are to use machine learning effectively and responsibly in policy-critical settings such as bail decisions, or in security-critical settings such as cyberdefense, we must accurately understand the strengths and limitations of the technology.

The takeaway for policymakers, at least for now, is that machine learning (ML) is a risky choice in high-stakes settings. “Robustness,” that is, building reliable and secure ML systems, is an active area of research. But until robustness research has made much more progress, or we have developed other ways to be confident that a model will fail gracefully, we should be cautious about relying on these methods when accuracy really matters.

When machine learning fails, the results can be spectacular. On April 23, 2013, Syrian hackers compromised the Associated Press Twitter account and tweeted, “Breaking: Two Explosions in the White House and Barack Obama is injured.” In response to the tweet, U.S. stock markets briefly lost about $136 billion in value (although the drop was reversed within about three minutes). We still do not know the full story behind the drop, but one likely culprit was automated analytics algorithms (a precursor to today’s ML) that rated the tweet as reliable and thereby led many people to make trades based on its content.

Similar predictive challenges are playing out in today’s COVID-19 crisis on a compressed time scale. Machine-learning systems have to operate under dynamic, real-world conditions, and when those conditions change rapidly, the results can be disappointing. Early in the crisis, the Institute for Health Metrics and Evaluation at the University of Washington built a model of COVID-related deaths that was widely adopted by policymakers trying to understand the outbreak. But that model, which includes some machine-learning elements, has repeatedly failed to match what actually happened, and most other models haven’t done much better.

The problem is that policy interventions to tackle the crisis continuously change not only the outcome the model is trying to predict (by changing how many people get sick), but also the data fed into the model (by changing how many tests are available and who gets tested). The models that have worked are mostly those with the modest goal of predicting only five days ahead.

COVID-19 has caused problems for unrelated predictive models, too. Online retail algorithms are flummoxed by abrupt changes in what people are buying, and the same shifts in consumer behavior are disrupting algorithms for inventory management and fraud detection.

In all these situations, the common cause of failure is that a previously reliable signal became unreliable. The Associated Press, which had rarely been wrong before, was suddenly wrong on a grand scale. User search behavior changed in response both to changes in Google’s own product and to news coverage. And during a crisis such as COVID-19, there are few constants anywhere.

In general, current machine-learning systems are not good at handling these changes and, more problematically, are not even good at noticing them. In the AP case, trading algorithms appear not to have noticed that no other reliable source was reporting an explosion. Several active research areas are trying to solve these problems, publishing papers on topics such as “out-of-distribution robustness,” “out-of-distribution detection,” “anomaly detection,” and “uncertainty estimation.” But until these fields make significant progress, we must grapple with the limitations of ML models.
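To make “noticing” a change more concrete, here is a deliberately simple sketch of the kind of check that falls under the anomaly-detection heading: compare the recent average of a single input signal with the average seen when the model was built, and raise a flag when the gap is too large to be explained by chance. The function names, the example numbers, and the threshold of three standard errors are illustrative assumptions, not a method taken from the research areas named above. Real monitoring has to watch many signals at once and catch far subtler shifts than a moved average.

```python
import math
import statistics


def drift_score(reference, recent):
    """How many standard errors the recent mean sits from the reference mean.

    The standard error uses the reference data's spread, i.e. it asks how
    unusual the recent average would be if nothing had changed.
    """
    ref_mean = statistics.mean(reference)
    ref_sd = statistics.stdev(reference)  # needs at least two reference values
    std_error = ref_sd / math.sqrt(len(recent))
    return abs(statistics.mean(recent) - ref_mean) / std_error


def has_drifted(reference, recent, threshold=3.0):
    """Flag the input as shifted when the score exceeds the (assumed) threshold."""
    return drift_score(reference, recent) > threshold


# Hypothetical daily values of one input signal.
reference = [10, 12, 11, 9, 10, 11, 12, 10]  # period the model was built on
stable = [11, 10, 12, 10]                    # recent data, similar behavior
shifted = [20, 22, 19, 21]                   # recent data after a sudden change

print(has_drifted(reference, stable))   # False
print(has_drifted(reference, shifted))  # True
```

A flag like this only says that something has changed; it does not say whether the model’s predictions are still trustworthy or how to repair them, which is the harder problem the robustness research above is trying to address.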

Of course, the world is never constant, and machine learning doesn’t always fail when situations change. For policymakers, we offer the following rules of thumb for assessing risks of failure: two factors that make ML more likely to fail, and one that makes success easier.

First, ML will struggle most in adversarial situations, where other agents are incentivized to subvert the model. On the military side, this includes cyberdefense, intelligence collection, and any use of ML on the battlefield. On the civilian side, it applies to detecting fraud, human trafficking, poaching, or other illegal behaviors. In such settings, we must also weigh the cost of failure and how quickly a failure can be detected and corrected. In a military setting, even temporary failure of an ML system can be catastrophic, while poachers who temporarily evade detection may still be caught eventually. Economic or political motivations can also incentivize actors to subvert a model, as in the case of fake news, clickbait, or other sensational content designed to reach the top of newsfeed rankings whether or not it is helpful for users to see.

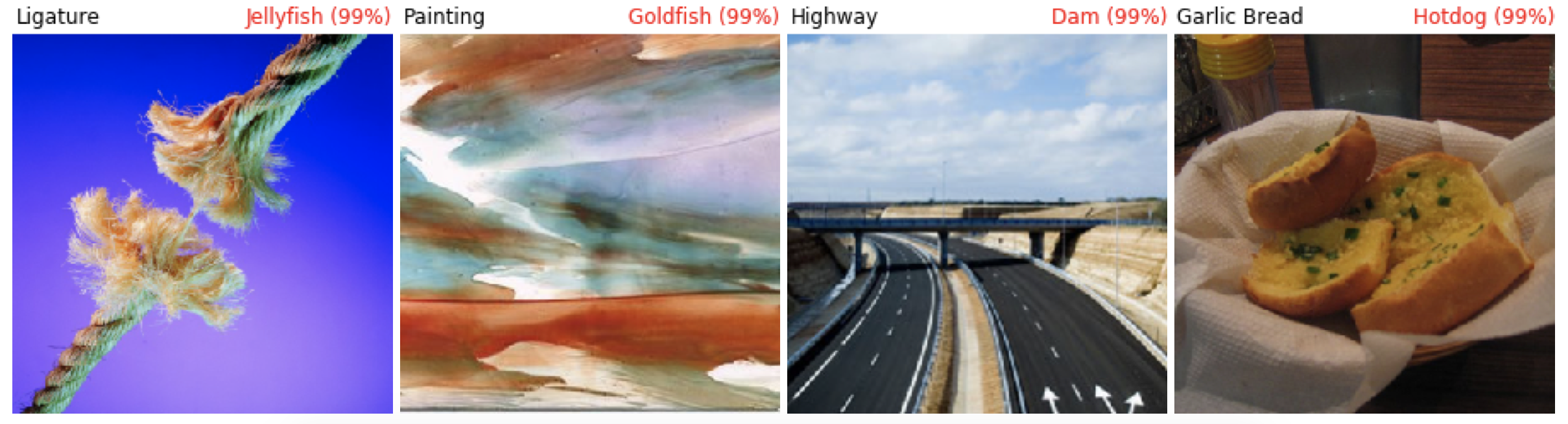

Second, problems will arise when relationships we take for granted suddenly break, as they have in the COVID-19 era. ML is excellent at finding proxies that predict whatever it is we ultimately care about, as when shopping algorithms figured out that large purchases of unscented lotion are a good indicator of pregnancy. But machine-learning models generally cannot tell when or how a proxy stops being a good predictor, so sudden changes, like the enormous behavioral shifts we’ve seen over the past few months, can severely degrade these models’ performance.

By contrast, the best cases for machine learning are those where continuous data is available to update a model and no single decision carries high stakes. Then, even as the world changes, the model can incorporate the new data to make better predictions, and being slightly out of date is unlikely to be catastrophic. This is the case for services such as speech recognition, machine translation, and web search, where large, continually updated models can improve accessibility with little downside. Google Translate, whose remarkable improvements in computer translation have made foreign languages far more accessible, is a testament to the promise of machine learning.

In higher-stakes settings, machine learning can still improve on human judgment, but doing so requires vigilance in the design of these algorithms. Studies have shown, for example, that judges tend to be racially biased when setting bail for defendants. An algorithmic system could in theory be fairer than human judgment, yet bail and sentencing algorithms have in recent years sometimes worsened rather than reduced racial disparities in the justice system. Machine learning could be used to counter human bias, but only if systems are built and audited with that goal in mind.

Building and testing robust machine-learning systems is sure to be a challenge for years to come. As ML and AI become increasingly integral to different facets of society, it is crucial that we bear in mind their limitations and risks, and design processes to ameliorate them.

Jacob Steinhardt is an assistant professor of statistics at the University of California, Berkeley.

Helen Toner is the director of strategy at the Center for Security and Emerging Technology at Georgetown University.

Google provides financial support to the Brookings Institution, a nonprofit organization devoted to rigorous, independent, in-depth public policy research.

Commentary

Why robustness is key to deploying AI

June 8, 2020