Introduction

In June 2014, the Supreme Court handed down its decision in Riley v. California, in which the justices unanimously ruled that police officers may not, without a warrant, search the data on a cell phone seized during an arrest. Writing for eight justices, Chief Justice John Roberts declared that “modern cell phones . . . are now such a pervasive and insistent part of daily life that the proverbial visitor from Mars might conclude they were an important feature of human anatomy.”1

This may be the first time the Supreme Court has explicitly contemplated the cyborg in case law—admittedly as a kind of metaphor. But the idea that the law will have to accommodate the integration of technology into the human being has actually been kicking around for a while.

Speaking at the Brookings Institution in 2011 at an event on the future of the Constitution in the face of technological change, Columbia Law Professor Tim Wu mused that “we’re talking about something different than we realize.” Because our cell phones are not attached to us, not embedded in us, Wu argued, we are missing the magnitude of the questions we contemplate as we make law and policy regulating human interactions with these ubiquitous machines that mediate so much of our lives. We are, in fact, he argued, reaching “the very beginnings of [a] sort of understanding [of] cyborg law, that is to say the law of augmented humans.” As Wu explained,

[I]n all these science fiction stories, there’s always this thing that bolts into somebody’s head or you become half robot or you have a really strong arm that can throw boulders or something. But what is the difference between that and having a phone with you—sorry, a computer with you—all the time that is tracking where you are, which you’re using for storing all of your personal information, your memories, your friends, your communications, that knows where you are and does all kinds of powerful things and speaks different languages? I mean, with our phones we are actually technologically enhanced creatures, and those technological enhancements, which we have basically attached to our bodies, also make us vulnerable to more government supervision, privacy invasions, and so on and so forth.

And so what we’re doing now is taking the very first, very confusing steps in what is actually a law of cyborgs as opposed to human law, which is what we’ve been used to. And what we’re confused about is that this cyborg thing, you know, the part of us that’s not human, non-organic, has no rights. But we as humans have rights, but the divide is becoming very small. I mean, it’s on your body at all times.2

Humans have rights, under which they retain some measure of dominion over their bodies.3 Machines, meanwhile, remain slaves with uncertain masters. Our laws may, directly and indirectly, protect people’s right to use certain machines—freedom of the press, the right to keep and bear arms. But our laws do not recognize the rights of machines themselves.4 Nor do the laws recognize cyborgs—hybrids that add machine functionalities and capabilities to human bodies and consciousness.5

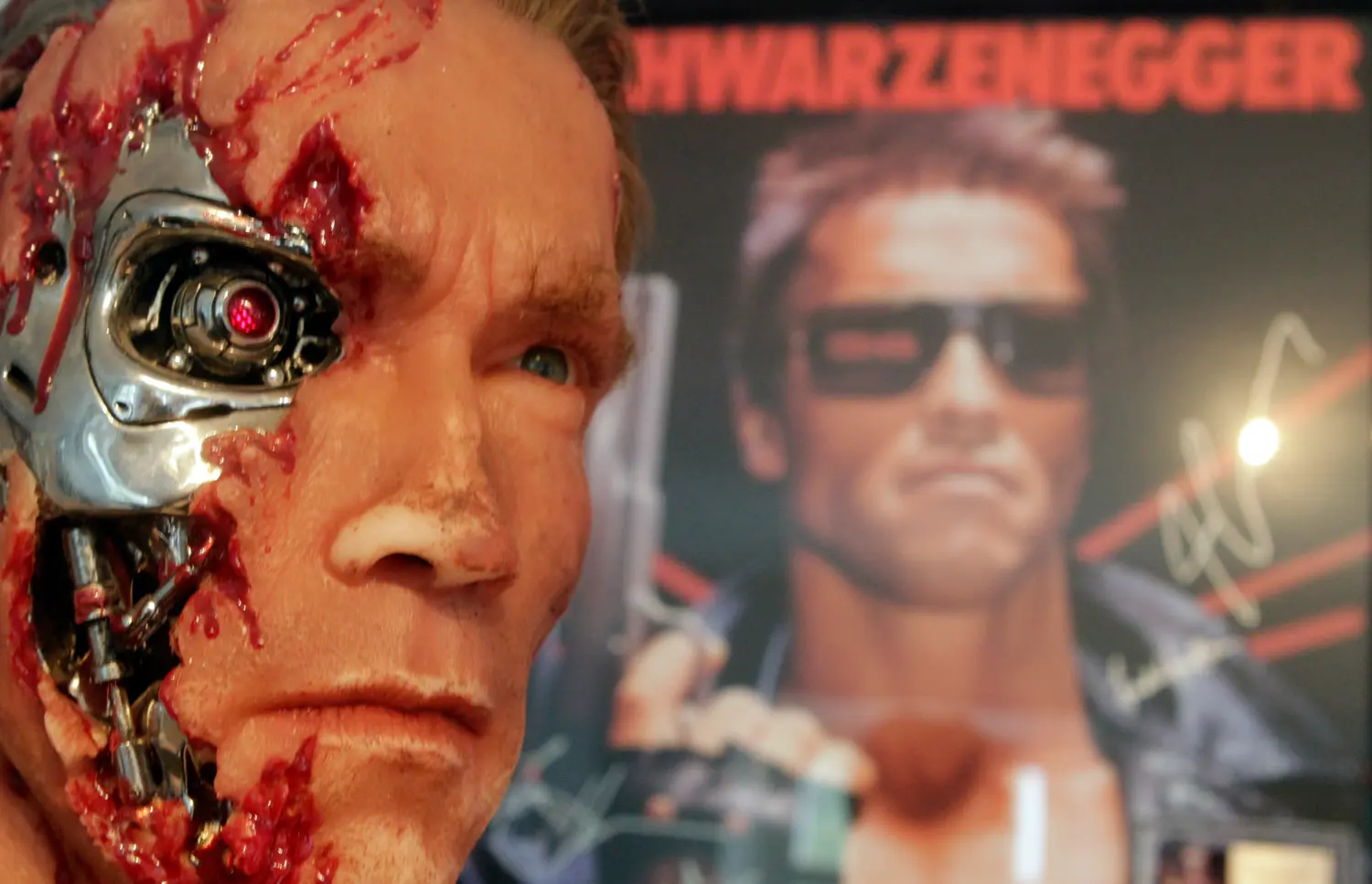

As the Riley case illustrates, our political vocabulary and public debates about data, privacy, and surveillance sometimes approach an understanding that we are—if not yet Terminators—at least a little more integrated with our machines than are the farmer wielding a plow, the soldier bearing a rifle, or the driver in a car. We recognize that our legal doctrines make a wide swath of transactional data available to government on thin legal showings—telephone call records, credit card transactions, banking records, and geolocation data, for example—and we worry that these doctrines make surveillance the price of existence in a data-driven society. We fret that the channels of communication between our machines might not be free, and that this might encumber human communications using those machines.

That said, as the Supreme Court did in Riley, we nearly always stop short of Wu’s arresting point. We don’t, after all, think of ourselves as cyborgs. The cyborg instead remains metaphor.

But should it? The question is a surprisingly important one, for reasons that are partly descriptive and partly normative. As a descriptive matter, sharp legal divisions between man and machine are turning into something of a contrivance. Look at people on the street, at the degree to which human-machine integrations have fundamentally altered the shape of our daily lives. Even beyond the pacemakers and the occasional robotic prosthetics, we increasingly wear our computers—whether Google Glass or Samsung Galaxy Gear. We strap on devices that record our steps and our heart rates. We take pictures by winking. Even relatively old-school humans are glued to their cell phones, using them not just as communications portals, but also for directions, to spend money, for informational feeds of varying sorts, and as recorders of data seen and heard and formerly—but no longer—memorized. Writes one commentator:

[E]ven as we rebel against the idea of robotic enhancement, we’re becoming cyborgs of a subtler sort: the advent of smartphones and wearable electronics has augmented our abilities in ways that would seem superhuman to humans of even a couple decades ago, all without us having to swap out a limb or neuron-bundle for their synthetic equivalents. Instead, we slip on our wristbands and smart-watches and augmented-reality headsets, tuck our increasingly powerful smartphones into our pockets, and off we go—the world’s knowledge a voice-command away, our body-metrics and daily activity displayable with a few button-taps.6

No, the phones are not encased in our tissue, but our reliance on them could hardly be more narcotic if they were. Watch your fellow passengers the next time your plane lands. No sooner does it touch down than nearly everyone reaches for their phones, as though a part of themselves had been shut down during the flight. Look at people on a bus or on a subway car. What percentage of them are in some way using phones, either sending information or receiving some stimulus from an electronic device? Does it really matter that the chip is not implanted in our heads—yet? How much of your day do you spend engaged with some communications device? Is there an intelligible difference between tracking it and tracking you?

This brings us to the normative half of the inquiry. Should we recognize our increasing cyborgization as more than just a metaphor, given the legal and policy implications both of doing so and of failing to do so? Our law sees you and your cell phone as two separate entities, a person who is using a machine. But robust protections for one may be vitiated in the absence of concurrent protections for the other. Our law also sees the woman with a pacemaker and the veteran with a robotic prosthesis or orthosis as people using machines. Where certain machines are physically incorporated into or onto the body, or restore the body to its “normal” functionality rather than enhance it, we might assume they are more a part of the person than a cell phone. Yet current laws offer no such guarantees. The woman is afforded no rights with respect to the data produced by her pacemaker,7 and the quadriplegic veteran has few rights beyond restitution for property damage when an airline destroys his mobility assistance device and leaves him for months without replacement.8

As we will explain, the general observation that humans are becoming cyborgs is not new.9 But commentators have largely used the term “cyborg” to capture, as a descriptive matter, what they see as an unprecedented merger between humans and machines, and to express concerns about the ways in which the body and brain are increasingly becoming sites of control and commodification. In contrast, we push the concept in a normative direction, suggesting how conceptualizing our changing relationship to technology in terms of our cyborgization may facilitate the development of law and policy that sensitively accommodates that change.

The shift that comes of understanding ourselves as cyborgs is nowhere more apparent than in the surveillance realm, where discussion of the legal implications of our technology dependence is often couched in and restricted to privacy terms. Under this conventional construction, it is privacy that is key to our identities, and technology is the poisoned chalice that enables, on the one hand, our most basic functioning in a highly networked world, and on the other, the constant monitoring of our activities. For example, in a 2013 Christmas Day broadcast, Edward Snowden borrowed a familiar trope to portend a dark fate for a society threatened by the popularization of technologies that George Orwell had never contemplated. “We have sensors in our pockets that track us everywhere we go. Think about what this means for the privacy of the average person,”10 he urged. With some poignancy, Snowden went on to pay special homage to all that privacy makes possible. Privacy matters, according to Snowden, because “privacy is what allows us to determine who we are and who we want to be.”11

There is, however, another way to think about all of this: what if we were to understand technology itself as increasingly part of our very being?

Indeed, might we care so much about whether and how the government accesses our data precisely because the line between ourselves and the machines that generate the data is getting fuzzier? Perhaps the NSA disclosures have struck such a chord with so many people because on a visceral level we know what our law has not yet begun to recognize: that we are already juvenile cyborgs, and fast becoming adolescent cyborgs; we fear that as adult cyborgs, we will get from the state nothing more than the rights of the machine with respect to those areas of our lives that are bound up with the capabilities of the machine.

In this paper, we try to take Wu’s challenge seriously and think about how the law will respond as the divide between human and machine becomes ever-more unstable. We survey a variety of areas in which the law will have to respond as we become more cyborg-like. In particular, we consider how the law of surveillance will shift as we develop from humans who use machines into humans who partially are machines or, at least, who depend on machines pervasively for our most human-like activities.

We proceed in a number of steps. First, we try to usefully define cyborgs and examine the question of to what extent modern humans represent an early phase of cyborg development. Next we turn to a number of controversies—some of them social, some of them legal—that have arisen as the process of cyborgization has gotten under way. Lastly, we take an initial stab at identifying key facets of life among cyborgs, looking in particular at the surveillance context and the stress that cyborgization is likely to put on modern Fourth Amendment law’s so-called third-party doctrine—the idea that transactional data voluntarily given to third parties is not protected by the guarantee against unreasonable search and seizure.

What Is a Cyborg and Are We Already Cyborgs?

Human fascination with man-machine hybrids spans centuries and civilizations.12 From this rich history we extract two lineages of modern thought to help elucidate the theoretical underpinnings of the cyborg.

In 1960, Manfred Clynes coined the term “cyborg”13 for a paper he coauthored with Nathan Kline for a NASA conference on space exploration.14 As conceived by Clynes and Kline, the cyborg—a portmanteau of “cybernetics” and “organism”14—was not merely an amalgam of synthetic and organic parts. It represented, rather, a particular approach to the technical challenges of space travel—physically adapting man to survive a hostile environment, rather than modifying the environment alone.15

The proposal would prove influential. Soon after the publication of Clynes and Kline’s paper, NASA commissioned “The Cyborg Study.” Released in 1963, the study was designed to assess “the theoretical possibility of incorporating artificial organs, drugs, and/or hypothermia as integral parts of the life support systems in space craft design of the future, and of reducing metabolic demands and the attendant life support requirements.”16 This sort of cyborg can be understood as a commitment to a larger project. As a “self-regulating man-machine,” the cyborg was designed “to provide an organization system in which . . . robot-like problems are taken care of automatically and unconsciously, leaving man free to explore, to create, to think, and to feel.”17 Distinguishing man’s “robot-like” functions from the higher-order processes that rendered him uniquely human, Clynes and Kline presented the cyborg as the realization of a concrete transhumanist goal: man liberated from the strictly mechanical (“robot-like”) limitations of his organism and the conditions of his environment by means of mechanization.

Outside the realm of space exploration, use of the term “cyborg” has evolved to encompass an expansive mesh of the mythological, metaphorical and technical.18 According to Chris Hables Gray, who has written extensively on cyborgs and the politics of cyborgization, “cyborg” has become “as specific, as general, as powerful, and as useless a term as tool or machine.”20 Perhaps because of its plasticity, the term has become more popular among science-fiction writers and political theorists than among scientists, who prefer more exacting vocabularies—using terms like biotelemetry, teleoperators, bionics and the like.19

The idea that we are already cyborgs—indeed, that we have always been cyborgs—has been out there for some time. For example, in her seminal 1991 feminist manifesto, Donna Haraway deployed the term for purposes of building an “ironic political myth,” one that rejected the bright-line identity markers purporting to separate human from animal, animal from machine. She famously declared, “[W]e are all chimeras, theorized and fabricated hybrids of machine and organism; in short, we are cyborgs.”20

Periodically repackaged as a radical idea, the claim has not remained confined to the figurative or sociopolitical realms. Technologists, too, have proposed that humans have already made the transition to cyborgs. In 1998, Andy Clark and David Chalmers proposed that where “the human organism is linked with an external entity in a two-way interaction” the result is “a coupled system that can be seen as a cognitive system in its own right.”21 Clark expanded on these ideas in his 2003 book Natural-Born Cyborgs:

My body is an electronic virgin. I incorporate no silicon chips, no retinal or cochlear implants, no pacemaker. I don’t even wear glasses (though I do wear clothes), but I am slowly becoming more and more a cyborg. So are you. Pretty soon, and still without the need for wires, surgery, or bodily alterations, we shall all be kin to the Terminator, to Eve 8, to Cable . . . just fill in your favorite fictional cyborg. Perhaps we already are. For we shall be cyborgs not in the merely superficial sense of combining flesh and wires but in the more profound sense of being human-technology symbionts: thinking and reasoning systems whose minds and selves are spread across biological brain and nonbiological circuitry.22

The idea that humans are already cyborgs has met with resistance from those who note that “[p]ointing to something like cell-phone use and saying ‘we’re all cyborgs’ is not substantially different from pointing to cooking or writing and saying ‘we’re all cyborgs.’”23 But this is actually Clark’s point. As suggested by the title of his book, Clark does not regard the human “tendency toward cognitive hybridization” as a modern phenomenon. He sees the history of humanity as marked by a series of “mindware upgrades,” from the development of speech and counting, to the production of moveable typefaces and digital encodings.24 Although he recognizes the particular postmodern appeal of the cyborg, “a potent cultural icon of the late twentieth century,” Clark suggests that when whittled down, our futuristic conception of human-machine hybrids amounts to nothing more than “a disguised vision of (oddly) our own biological nature.”25

Not all cyborg theorists find the notion that we have always been cyborgs compelling. In the introduction to his influential 1995 collection The Cyborg Handbook, Chris Hables Gray addresses, and rejects, this conflation and essentialization of the primitive and the modern:

But haven’t people always been cyborgs? At least back to the bicycle, eyeglasses, and stone hammers? This is an argument many people make, including early cyborgologists like Manfred Clynes and J.E. Steele. The answer is, in a word, no . . . . Cyborgian elements of previous human-tool and human-machine relationships are only visible from our current point of view. In quantity, and quality, the relationship is new.26

Similarly, cyborg anthropologist27 Amber Case maintains that there is a meaningful distinction to be made between past technologies and those developed in recent decades, based on the ways in which and extent to which they shape and change how humans connect to one another.28

There are those who have suggested that we may not yet be cyborgs but that, given the exponential growth of computing power and technological development, we will soon be. According to Ray Kurzweil—the preeminent inventor, futurist and now Google’s director of engineering—we are at the “knee of the curve.” Kurzweil’s exploration of the coming obliteration of the distinction between human and machine recalls many of the bio-transcendent ideas and ambitions of Clynes and Kline and their intellectual predecessor, Norbert Wiener. Kurzweil states,

Our version 1.0 biological bodies are likewise frail and subject to a myriad of failure modes . . . . While human intelligence is sometimes capable of soaring in its creativity and expressiveness, much human thought is derivative, petty and circumscribed. The Singularity will allow us to transcend these limitations of our biological bodies and brains.29

A second history runs parallel to the Clynes-and-Kline narrative, one that begins around the same time but in a slightly different theoretical space. Wu, an apparent proponent of the “subtler cyborg” theory, is among those who attribute the beginnings of the “project of human augmentation” to J.C.R. Licklider.30 Licklider is sometimes referred to as the father of the Internet in part for his role in shaping the Pentagon’s funding priorities as head of the Information Processing Techniques Office, a division of the Advanced Research Projects Agency (ARPA).31 In 1960—the same year Clynes and Kline published “Cyborgs and Space”—Licklider published “Man-Computer Symbiosis,” in which he predicted the “close coupling” of man and the electronic computer. Together they would constitute a “semiautomatic system,” in contradistinction to the symbiotic system that was the mechanically extended man.32 Licklider wrote, “‘Mechanical extension’ has given way to replacement of men, to automation, and the men who remain are there more to help than to be helped.”33

Licklider’s ideas offer a fundamentally different way of thinking about the cyborg. In his view, the human does not remain a central part of the picture. Where Clynes, Kline and Clark arguably see fusion (between machine and man), a Lickliderite might be said to see substitution (machine for man). Under the Licklider view, no longer is the cyborg a project centered on unleashing man’s potentiality; the cyborg is man getting out of the way.

We don’t mean to settle these largely philosophical arguments about the nature of the cyborg’s technological trajectory here. For present purposes, let us begin with a working definition of the term “cyborg” and posit that a process of cyborgization of society is taking place. Steve Mann, inventor of the “wearable computer,” defines cyborg in terms of hybridization, as “a person whose physiological functioning is aided by or dependent upon a mechanical or electronic device.”34 The Oxford English Dictionary, meanwhile, defines cyborg more explicitly in terms of augmentation: as “[a] person whose physical tolerances or capabilities are extended beyond normal human limitations by a machine or other external agency that modifies the body’s functioning; an integrated man–machine system.”35 Under either definition, different people fall in different places on the spectrum of pure human to consummate cyborg. But quite a number of us are inching closer to a subtle Arnold Schwarzenegger. And the increasing cyborgization of the populace—however we choose to define the phenomenon—raises important questions about access,36 discrimination,37 military action,38 privacy,39 bodily integrity,40 autonomy,41 property42 and citizenship.43 These are questions that, ultimately, the law will have to address.

Cyborgs Among Us: Controversies and Policy Implications

One way to think about the policy issues that cyborgs will force us to address is to examine the controversies already arising out of our incipient cyborgization.

Many of these controversies track familiar binaries, such as substitution for missing or defective human parts versus enhancement or extension of normal human capabilities. The significance of this particular distinction has long been recognized in the bioethics sphere. Dieter Sturma, director of the German Reference Centre for Ethics in the Life Sciences, has stated that we should be open to “technical systems [that] reduce the suffering of a patient,” while warning that enhancement technology could become a problem.44

For starters, human enhancement is often associated with cheating and unfair advantage. Everything from professional athletes’ use of performance-enhancing drugs to government investment in military superwarriors has been criticized on these grounds.45 Vaccines, on the other hand, go largely unchallenged, no doubt because, though they might be said to enhance the immune system, their function is to serve as prophylactic protection against disease, and they are ideally administered to all.

But the distinction between substitution and enhancement is less stable than might be assumed.46 For it turns on how society chooses to define what constitutes health and what constitutes deficiency. That distinction is itself subject to variation depending on social context and individual goals, and subject to change with advances in science that allow for the manipulation of previously unalterable biological conditions.47 VSP, the country’s largest optical health insurance provider, for example, recently signed on to offer subsidized frames and prescription lenses for Google Glass.48 By giving wearable devices “a medical stamp of approval,”51 the move suggests the beginnings of a breakdown in the distinction between mediated vision and mediated reality.

Neil Harbisson, a cyborg activist and artist born with a form of extreme colorblindness that limits him to seeing in only black and white, is equipped with an “eyeborg,” a device implanted in his head that allows him to “hear” color.49 In 2012, police officers attacked Harbisson and broke the camera off his head because they believed, mistakenly, that he was filming them during a street demonstration.50

The functionality of the eyeborg and the injuries Harbisson sustained raise questions not only about the enhancement-substitution distinction but also about the distinction between embedded and external devices. Our intuition might be to separate embedded from external technologies on the grounds that the difference often tracks whether the technologies are “integral” to the functioning of the human body. This could seem reasonable depending on the particular technologies we decide to compare: for example, smartphones are external to the body and presently not allowed in many federal courtrooms; barring someone fitted with a pacemaker from entry, on the other hand, would seem untenable.

But the difference between the embedded and the external is not so easily reduced to the difference between the integral and the superficial. Consider devices designed to compensate for physical deficiency but in ways not readily perceived by our society as prosthetic in form and function.51 Linda MacDonald Glenn, a bioethicist and lawyer, cites the case of a disabled Vietnam veteran who, as an incomplete quadriplegic, is entirely dependent on a powered mobility assistance device (MAD) not only to move and travel but also to protect himself against hypotensive episodes.52 An airline damaged his MAD beyond repair in October 2009 and left him without a replacement for a year, causing him to be bedridden for eleven months and suffer ulcers as a result.53 The airline offered minimal damages—$1500 in compensation—on the grounds that it had harmed not its customer but only his device.54 Glenn argues that modern day assistive devices are no longer “inanimate separate objects” but “interactive prosthetics”: these include implants, transplants, embedded devices, nanotechnology, neural prosthetics, wearables and bioengineering.55 As such, liability rules that cover damage to or interference with use of traditional property could need reassessment in the interactive-prosthetic context.

This is not to dismiss the difference between what is embedded and what is external. After all, whether or not a technology can be considered medically superficial in function, once we incorporate it into the body such that it is no longer easily removed, it is integral to the person in fact. A number of bars,56 strip clubs57 and casinos58 have banned the use of Google Glass based on privacy protection concerns, and movie theaters have banned it for reasons related to copyright protection.59 But such bans could pose problems when the equivalent of Google Glass is physically screwed into an individual’s head.

Take Steve Mann. Unlike Harbisson, Mann suffers no visual impairment. But Mann wears an EyeTap, a small camera that can be connected to his skull to mediate what he sees—for instance, he can filter out annoying billboards—and stream what he sees onto the Internet. In 2012, Mann was physically assaulted by McDonald’s employees in what the press described as the world’s first anti-cyborg hate crime; Mann was able to prove the attack happened by releasing images taken with the EyeTap.60 The episode naturally raised questions about Mann’s rights as a cyborg, but the fact that Mann’s eyepiece affords him the ability to record everything he sees also raises questions about the privacy rights of noncyborgs when faced with individuals embedded with technologies with potentially invasive capabilities. These technologies are potentially invasive not only for third parties but also for the “users” themselves. In 2004, tiny RFID chips were implanted in 160 of Mexico’s top federal prosecutors and investigators to grant them automatic access to restricted areas. Ostensibly a security measure, the chips ensured certainty as to who accessed sensitive data and when,61 but they also raised questions about when an individual may be compelled to undergo modification—perhaps as a condition of sensitive employment, or perhaps for other, murkier reasons.

A 2009 U.S. National Science Foundation report on the “Ethics of Human Enhancement” suggests moral significance in the distinction between a tool incorporated as part of our bodies and a tool used externally to the body, although a neural implant that gives an individual access to the Internet, for example, may not seem different in kind from a laptop.62 Specifically, the report argues that “assimilating tools into our persons creates an intimate or enhanced connection with our tools that evolves our notion of personal identity.” The report does, however, note that the “always-on or 24/7 access characteristic” might work against attempts to distinguish a neural chip from a Google Glass-like wearable computer.63

The “always-on” aspect of certain intimate technologies, embedded or not, gives people pause when considering the ownership rights, data rights and security needs of cyborgs. Southwestern Law Professor Gowri Ramachandran has emphasized the potential need to specially regulate technologies that aid bodily function and mobility:

[I]t is completely unremarkable for property rights to exist in electronic gadgets. But we might be concerned if owners of patents on products such as pacemakers and robotic arms were permitted to enforce “end user license agreements” (“EULAs”) against patients. These EULAs could in effect restrict what patients can do with products that have become merged with their own bodies. And we should rightly, I argue, be similarly concerned with the effects of property rights in wheelchairs, cochlear implants, tools used in labor, and other such devices on the bodies of those who need or desire to use them.64

As it turns out, the state of the law with respect to pacemakers and other implanted medical devices provides a particularly vivid illustration of a cyborg gap. Most pacemakers and defibrillators are outfitted with wireless capabilities that communicate with home transmitters that then send the data to the patient’s physician.65 Experts have demonstrated the existence of enormous vulnerabilities in these software-controlled, Internet-connected medical devices, but the government has failed to adopt or enforce regulations to protect patients against hacking attempts.66 To date there have been no reports of such hacking—but then again, it would be extremely difficult to detect this type of foul play.67 The threat is sufficiently credible that former Vice President Dick Cheney’s doctor ordered the disabling of his heart implant’s wireless capability, apparently to prevent a hacking attempt, while Cheney was in office.68

And then there’s the more mundane question of the rights that should be afforded to people who engage in cyborgism that is seemingly simply recreational in nature. The explosive popularity of “wearable” technology points to the coming seamless integration of bodily and technological functions and suggests not a trend but an emerging way of life. In December 2013, Google introduced a new update to Google Glass—a feature that allows users to take a photo by simply winking.69 And this is only the beginning: one day a computer that interfaces with human perception may be able to overlay machine-generated insights onto our own perceptions, imperceptibly enhancing our powers of analysis.70 This stuff will be great fun for lots of people who do not in any strict sense need it. It will also be inexpensive and readily available. And its use will be highly annoying—and intrusive—to other people, at least some of the time. The result will be disputes that the law will need to mediate.

Commentators who favor an expansive interpretation of who qualifies as a cyborg reject the idea that the physical body must undergo modification, focusing instead on the ways in which technology changes the brain. This approach introduces a third dimension to the otherwise contrived binaries between restorative and enhancing technologies and between embedded and external ones. For example, the invention of the printing press has been distinguished from the development of other tools in that it is “an invention that boosts our cognitive abilities by allowing us to off-load and communicate ideas out into the environment.”71 Of course, the smartphone takes off-loading to a new level. As Wu suggests, off-loading is a major feature of our relationship with, and growing reliance upon, modern tools that significantly enhance our abilities as cyborgs but reduce our capabilities as man qua man. Recently he wrote:

With our machines, we are augmented humans and prosthetic gods, though we’re remarkably blasé about the fact, like anything we’re used to. Take away our tools, the argument goes, and we’re likely stupider than our friend from the early twentieth century, who has a longer attention span, may read and write Latin, and does arithmetic faster.72

How exactly we will mediate between the rights of cyborgs and the rights of anti-cyborgs remains to be seen—but we are already seeing some basic principles emerge. For example, the proposition that individuals should have special rights with respect to the use of therapeutic or restorative technologies appears to be so accepted that it has prompted a kind of intuitive carve-out for those who otherwise oppose wearable and similar technologies. Such is the case with Stop the Cyborgs, an organization that emerged directly in response to the public adoption of “wearable” technologies such as Google Glass. On its website, the group promotes “Google Glass ban signs” for owners of restaurants, bars and cafes to download and encourages the creation of “surveillance-free” zones.73 Yet the site also expressly asks those who choose to ban Google Glass and “similar devices” from their property to respect the rights of those who rely on assistive devices.74

Principles for Juvenile Cyborgs: Surveillance and Beyond

We can expect our increasing cyborgization to have the most significant immediate effects on the law in the surveillance arena. And here cyborgism is a two-edged sword. For the cyborg both enables surveillance and is unusually subject to it.

We are, at this stage, at most juvenile cyborgs—more likely still infant cyborgs. We do not yet have a detailed sense of the scope, speed, or depth of our ongoing integration with machines. Will it remain, as it mostly is now, a sort of consumer dependence on objects and devices that make themselves useful, and eventually essential? Or will it evolve into something deeper—a physically more intimate connection between human and machine, and a dependence among more people for functions that we regard, or come to regard, as core human activity?

The cliché goes that an order-of-magnitude quantitative change is a qualitative change. Put differently, it is not merely that technology gets faster or more sophisticated; when the original speed or complexity is multiplied by a factor of ten, the change is one in kind, rather than simply in degree.

Today we may be baby cyborgs, our reliance on certain technologies increasing quantifiably, but at some point we will be looking at a qualitative change—a point at which we are truly no longer using those technologies but have sufficiently fused with them so as to reduce the government’s claims of tracking “them” and not “us” to an untenable legal fiction. Contrary to the layman’s assumption, that need not be the point at which we surgically implant chips into our wrists or introduce nanobots into our bloodstreams; it could be simply a question of the extent of our reliance or the frequency and pervasiveness of our use.

Until we know how many of us are going to travel how far down the cyborg spectrum, it is folly to imagine that we can fashion definitive policy for surveillance—or anything else—in a world of cyborgs. It is more plausible, however, to imagine that we might discern certain principles and considerations that should inform policy as we mature into adolescent cyborgs and ultimately into adult cyborgs. Indeed, such an examination is vital if we are to make deliberate choices about whether and to what extent the protections and liberties we enjoy as humans are properly afforded to—or forfeited by—cyborgs.

1. Data Generation

The first consideration that must factor into our discussions is that cyborgs inherently generate data. Human activity by default does not—at least, not beyond footprints and fingerprints and DNA traces. We can think and move without leaving meaningful traces; we can speak without creating a record. Digital activity, by contrast, creates transactional records. A cyborg’s activity is thus presumptively recorded and that data may be stored or transmitted. To record or to transmit data is also to enable collection or interception of that data. Unless one specifically engineers the cyborg to resist such collection or interception, it will by default facilitate surveillance. And even if one does engineer the cyborg to resist surveillance, the data still gets created. In other words, a world of cyborgs is a world awash in data about individuals, data of enormous sensitivity and, the further cyborgization progresses, ever-increasing granularity.

Thus the most immediate impact of cyborgization on the law of surveillance will likely be to put additional pressure on the so-called third-party doctrine, which underlies a great deal of government collection of transactional data and business records. Under the third-party doctrine, an individual does not have a reasonable expectation of privacy with respect to information he voluntarily discloses to a third party, like a bank or a telecommunications carrier, and the Fourth Amendment therefore does not regulate the acquisition of such transactional data from those third parties by government investigators. The Supreme Court declined to extend constitutional protections to bank records in United States v. Miller75 based on the theory that “the Fourth Amendment does not prohibit the obtaining of information revealed to a third party and conveyed by [the third party] to Government authorities, even if the information is revealed on the assumption that it will be used only for a limited purpose and the confidence placed in the third party will not be betrayed.”76 The third-party doctrine underlies a huge array of collection, everything from the basic building blocks of routine criminal investigations to the NSA’s bulk telephony metadata program.

The third-party doctrine has long been controversial, even among humans. It has attracted particular criticism as backward in an era in which third-party service providers hold increasing amounts of what was previously considered personal information. Commentators have urged everything from overruling the doctrine entirely77 to adapting the doctrine to extend constitutional protections to Internet searches.78

But the doctrine seems particularly ill-suited to cyborgs. A world of humans can, after all, indulge the fiction that we each have a meaningful choice about whether to engage the modern banking system or the telephone infrastructure. It can adopt the position—however unrealistic in practice—that we have the option of not using telephones if we prefer not to give our metadata to telephone companies, and that we can pay cash for everything if we do not like the idea of the FBI getting our credit card records without a warrant. But the cyborg does not meaningfully have choice. Digital machines produce data as an inherent feature of their existence. The more we come to see the machine as an extension of the person—first by the pervasiveness of its use, then by its physical integration with its user, and ultimately through cybernetic integration with the user—the less plausible will seem the notion that these are simply tools which we choose to use and whose data we thus voluntarily turn over to service providers. The more like cyborgs we become, the more that data will seem like the inevitable byproduct of our lives, and thus entitled to heightened legal protection.

For an example, let’s return to the pacemaker. The pacemaker does not just control a patient’s heartbeat. It also monitors it, along with blood temperature, breathing, and heart electrical activity. In some very technical sense, we might consider this extracted data to be information voluntarily given to a third party. One does not have to get a pacemaker, after all. Nor does one have to get it monitored. These are choices, albeit choices with unusually high stakes. Yet even to formulate the issue thus brings a smile to one’s lips. And it’s hard to believe that Smith v. Maryland79 or Miller would have come out the same way had the data in question been produced by a device necessary to keep someone alive, had it quite literally borne on the person’s most vital bodily functions, and had it been physically embedded within the person’s chest.

It is not merely that pacemaker users lack control over who accesses their data. Current laws impose no affirmative duty on manufacturers to give pacemaker users access to their own data, and the top five manufacturers do not allow patients to access the data produced by their pacemakers at all.83 This situation has prompted one activist, Hugo Campo, to fight for years, without success, for the right to access the data collected by his own defibrillator.84 This perverse state of affairs is an outgrowth of our failure to conceive of the relationship between patient and pacemaker as one of integration rather than mere use. The same logic would treat pacemaker data as covered by the third-party doctrine.

The cyborg, in other words, is uniquely vulnerable to surveillance. The explosion in wearable technology underscores how dated current laws are in addressing the privacy concerns of technology users, specifically concerns about secondary uses of inherently intimate consumer data.85 The information collected by wearables ranges from an individual’s physiological responses to environmental factors to data more commonly associated with computer use—geolocation and personal interests. To a lesser extent, this is also true of smartphones, which contain, and collect in real time, data that people did not previously carry with them in traceable form. All of this is collectable—the issue that bothered the justices in Riley.

2. Data Collection

While cyborgs generate the kind of comprehensive data that subjects them to surveillance, cyborgs also collect data, making them a powerful instrument of surveillance.

Cyborg data collection can be benign; much of it consists these days of people posting a lot of selfies and pictures of their kids on Facebook, for example, or people recording their own experiences. But the result is also a world in which one has to interact with others on the assumption that they are, or that they may be, recording aspects of the engagement. This is what animates those—like the people who run the Stop the Cyborgs website—who fear the ubiquitous presence of small, low-visibility surveillance devices. Cyborgization inherently transforms people into agents capable of collecting, retaining, and processing large volumes of information.

The cyborg is, indeed, an instrument of highly distributed surveillance. Mann’s wearable computer is an outgrowth of a political stance: he has long advocated using technology to “invert the panopticon” and turn the tables on surveillance authorities. A play on the term “surveillance,” which translates from the French as watching from above, “sousveillance” reflects the idea of a populace watching the state from below.80 And wearable technologies with recording functions could indeed secure individuals in a number of ways, notably by deterring and documenting crime.

Sousveillance may secure the cyborg but it also imposes costs on cyborgs and noncyborgs alike. “Google Glass is possibly the most significant technological threat to ‘privacy in public’ I’ve seen,” Woodrow Hartzog, an affiliate scholar at the Center for Internet and Society at Stanford Law School, told Ars Technica last year. “In order to protect our privacy, we will need more than just effective laws and countermeasures. We will need to change our societal notions of what ‘privacy’ is.”81 But efforts to combat the perceived privacy threat posed by certain technologies raise their own set of ethical and legal issues. For instance, technologies have been developed to detect and blind cameras, as well as neutralize vision aids and other assistive technologies.82 The cyborg thus raises the problem of how society—and its law—will respond to large numbers of people recording their routine interactions with others.

In short, the further down the cyborg spectrum we go, the more we are both agents of and subject to surveillance.

3. Constructive Integration

When dealing with a world of cyborgs, to conduct surveillance against a machine is to conduct surveillance against a person. There will often be no distinction between the two. This does not necessarily mean one should be more reticent about the surveillance of machines; we may well decide as a society to lighten up about the surveillance of people. The point is that we should not kid ourselves about what we are doing when we collect on the non-biological side of the cyborg. When we learn the GPS coordinates of a phone, there is a person attached to that phone. When we check how our Fitbit data compares to that of our friends, we are not comparing our wristbands to other wristbands but our physical performance to that of other people. When we examine a computer’s search history, we are looking at the trajectory of a person’s thought.

Imagine for a moment the logical technological terminus of the cellphone revolution. Instead of carrying a device, dialing it, and speaking into it, the individual would simply have the ability to talk—as though her interlocutor were present—with any individual in the world. She would merely identify mentally to whom she was talking, and the telecommunications chip in her head would connect with the telecommunications chip in the recipient’s head. She would not even be conscious of using technology. What would separate this system from magic is that there would be actual telecommunications going on. Two communications devices, each interfacing with a human brain, would connect before sending and receiving signals. That means that they would produce metadata about which systems they had connected to, and the signals they sent would be subject to interception. That, in turn, would mean that a list of everyone you had talked to was readily available, at least in some technological sense. We submit, however, that if this were our technical reality, the notion that users voluntarily chose to communicate using a device they knew entrusted metadata to a phone company and that they had no reasonable expectation of privacy in those data would be as silly as is the idea that you have no expectation of privacy in the data produced by your heart.

In other words, the more essential the role our machines play in our lives, the more integral the data they produce are to our human existences, and the more inextricably intertwined the devices become with us—socially, physically, and biologically—the less plausible will seem the notion that the data they produce is material we voluntarily turn over to a third party like some file cabinet we give to a friend. A society of cyborgs—or a society that understands itself as on the cyborg spectrum—will have a whole different cultural engagement with the idea of electronic surveillance than will a society that understands itself as composed of humans using tools.

This shift may explain, at a subconscious level anyway, some of the fury over the past year about the NSA disclosures. People around the world infuriated by what they have learned about American intelligence collection practices are certainly not consciously thinking of themselves as cyborgs. But the point is that we no longer experience surveillance of the phone networks simply as surveillance of machines either. There was a time, not that long ago, when NSA coverage of large volumes of overseas calls—including those with one end in the U.S.—did not bother people all that much. It was no secret that NSA captured a huge amount of such material and that it incidentally captured U.S. person communications along the way, weeding out these communications using minimization procedures. But we did not make that many international calls and we did not Skype with people overseas very often. We did not send emails all over the world many times a day. We were not constantly engaging the network as though it were part of ourselves. Today, as juvenile cyborgs, we experience surveillance of that architecture very directly as surveillance of us. We can no longer disassociate ourselves from those machines. Our engagement with them is pervasive enough that systematic collection of data from those networks—even if accompanied by appropriate procedures and limiting rules—inevitably appears as collection on our innermost thoughts and private lives, closer and more oppressive than it did when the network and we were further apart.

As cyborgization progresses, we will therefore be faced with constant choices about whether to invest the machines we are integrating with some measure of the rights of humans or whether to divest humans of some rights they expected before they developed machine parts. The construction we have traditionally given this problem, that of the rights of humans in the use of machines, will break down as the line between human activity and machine activity continues to blur. The person who carries a smartphone we might still construe as using a machine. And perhaps we might even think that of the person who wears an electronic insulin pump. But an eyeborg or a pacemaker?

After losing her left arm in a motorcycle accident, Claudia Mitchell became the first woman to receive a bionic arm. The device detects the movements of a chest muscle rewired to the nerve stumps that once connected to her former limb.83 Would we really say she is using a machine? Or would we say she has machine parts? And if the latter, do we think about those machine parts as sharing in Mitchell’s rights as a human or do we think about her rights as a human as limited by our surveillance capabilities with respect to those machine parts?

The answers to these questions will not always be the same. We will not feel the same way about the privacy of what you see with your bionic eye—and your right not to incriminate yourself with the images it collects—as we will feel about your right to shield physiological data you collect on yourself recreationally or to incentivize your own fitness. To the extent you opt to film everything you see with Google Glass, you may be out of luck. Our choices will hinge on the depth of integration of human and technology, the function the technology is playing in our lives and the seriousness we attach to that function, and probably the perceived but ineffable inherency of that function to the irreducibly human.

About the Authors

Benjamin Wittes is a senior fellow in Governance Studies at The Brookings Institution. He co-founded and is the editor-in-chief of the Lawfare blog, which is devoted to sober and serious discussion of “Hard National Security Choices.”

Jane Chong is a 2014 graduate of Yale Law School, where she was an editor of the Yale Law Journal. She spent a summer researching national security issues at Brookings as a Ford Foundation Law School Fellow.

Footnotes

- 573 U. S. ____ (2014). Justice Alito wrote a separate opinion concurring in part and concurring in the judgment.

- Timothy Wu, Professor of Law, Colum. L. School, Brookings Inst. Judicial Issues Forum: Constitution 3.0: Freedom, Technological Change and the Law (Dec. 13, 2011), https://www.brookings.edu/~/media/events/2011/12/13%20constitution%20technology/20111213_constitution_technology.pdf.

- Some of the most contentious political issues of the day might be described as a struggle over the abrogation of individuals’ ability to exercise that dominion—for example, as with laws concerning abortion or euthanasia.

- We have already seen fit to take the leap of wondering when machines might be elevated to the status of humans—that is, whether machines one day endowed with artificial intelligence should be granted the rights and recognition that accompany personhood. See, e.g., Alex Knapp, Should Artificial Intelligences Be Granted Civil Rights?, Forbes (Apr. 4, 2011, 1:42 AM), http://www.forbes.com/sites/alexknapp/2011/04/04/should-artificial-intelligences-be-granted-civil-rights. Yet relatively little has been said about the status of humans mediated by machines.

- We use “machine” as shorthand but recognize that the “machine” components of a man-machine hybrid need not comprise inorganic material. In fields like neurobotics and nanobiotechnology, scientists increasingly derive from biomolecular materials the blueprint and the physical building blocks for developing next-level computational power. See Chris Hables Gray, Cyborg Citizen: Politics in the Posthuman Age 183 (2002).

- Vasi Van Deventer sums it up this way: “If first-order cybernetics modeled the person after the machine, second-order cybernetics explores the machine in terms of the characteristics of living systems.” Vasi Van Deventer, Cyborg Theory and Learning, in Connected Minds, Emerging Cultures: Cybercultures in Online Learning 173 (Steve Wheeler, ed. 2009). One interesting corollary is that the organism need not be the beneficiary of the functions provided by the artificial components in question; the organism can itself be converted into a machinic means to an end. Researchers have already succeeded in inserting electrodes into rats and using their baroreflex systems to run complex biochemical calculations that digital computers cannot yet handle. Gray, supra, at 183.

- Nick Kolakowski, We’re Already Cyborgs, Slashdot, Jan. 14, 2014, slashdot.org/topic/cloud/were-already-cyborgs.

- See note 88, infra.

- See note 55, infra.

- For example, the Rathenau Instituut in the Netherlands has dubbed the merger of humans and technology an “intimate technological revolution.” See generally Rinie van Est, Virgil Rerimassie, Ira van Keulen & Gaston Dorren, Intimate Technology—The Battle for our Body and Behavior, Rathenau Instituut (2014), available at http://www.rathenau.nl/uploads/tx_tferathenau/Intimate_Technology_-_the_battle_for_our_body_and_behaviourpdf_01.pdf.

- Costas Pitas, Snowden Warns of Loss of Privacy in Christmas Message, Reuters (Dec. 25, 2013, 6:42 PM), http://www.reuters.com/article/2013/12/25/us-usa-snowden-privacy-idUSBRE9BO09020131225.

- Id.

- See Gray, supra note 5, at 4 (tracing the idea of man-made sentient creatures to ancient Greek and Hindi folklore and sixteenth-century Japan, China and Europe); Hugh Herr et al., Cyborg Technology—Biomimetic Orthotic and Prosthetic Technology, available at biomech.media.mit.edu/wp-content/uploads/sites/3/2013/07/Biologicall_Inspired_Intell.pdf (noting that the ancient Egyptians and early Romans used prostheses and simple walking aids).

- See Rolf Pfeifer & Josh Bongard, How the Body Shapes the Way We Think: A New View of Intelligence 265 (2007); Chris Hables Gray, Steven Mentor & Heidi J. Figueroa-Sarriera, Cyborgology: Constructing the Knowledge of Cybernetic Organisms, in The Cyborg Handbook, supra note 13, at 6.

- Stuart A. Umpleby, Science of Cybernetics and the Cybernetics of Science, Cybernetics and Systems 120 (2007), http://www.tandfonline.com/doi/pdf/10.1080/01969729008902227; see also Manfred E. Clynes, Cyborg II: Sentic Space Travel, in The Cyborg Handbook, supra note 13, at 35.

- Clynes’s “cyborg” concept builds off the work of Norbert Wiener, one of the greatest mathematicians of the twentieth century. Wiener is credited with pioneering the concept of “cybernetics” (though he did not coin the term) and giving coherence to a literature that up until that point had not acknowledged “the essential unity of the set of problems centering about communication, control, and statistical mechanics, whether in the machine or in living tissue.” See Norbert Wiener, Cybernetics: Or Control and Communication in the Animal and the Machine 19 (1948). Though its applications have proven diverse and wide-ranging, the thesis of his magnum opus was specific. Wiener posited: “[S]ociety can only be understood through a study of the messages and the communication facilities which belong to it; and . . . in the future development of these messages and communication facilities, messages between man and machines, between machines and man, and between machine and machine, are destined to play an ever-increasing part.”

- Id. Wiener’s idea that man and machine rely on “precisely parallel” principles for their physical function, Norbert Wiener, The Human Use of Human Beings: Cybernetics and Society 26 (1954), would prove foundational to Clynes and Kline’s idea of fusing man and machine as part of an effort to “adapt[] man’s body to any environment he may choose,” Clynes & Kline, supra note 13.

- Clynes & Kline, supra note 13, at 27 (“For the exogenously extended organizational complex functioning as an integrated homeostatic system unconsciously, we propose the term ‘Cyborg’. The Cyborg deliberately incorporates exogenous components extending the self-regulatory control function of the organism in order to adapt it to new environments.”).

- See, e.g., David J. Hess, On Low-Tech Cyborgs, in The Cyborg Handbook, supra note 13, at 371 (“The cyborg is a symbol of news on the cultural landscape; it is a metaphor of the possibilities engendered by the events of biotechnology and artificial intelligence.”).

- Gray, supra note 5, at 19-20.

- See id. See also Vasi Van Deventer, Cyborg Theory and Learning, in Connected Minds, Emerging Cultures: Cybercultures in Online Learning 170 (Steve Wheeler, ed. 2009) (“The cyborg has become a symbol for adaptability, intelligent application of information, and elective physical augmentation, but . . . [i]n popular consciousness the cyborg remains a superhuman who can pass as an ordinary person in everyday life . . . .”).

- Donna Haraway, Simians, Cyborgs and Women: The Reinvention of Nature 150 (1991).

- Andy Clark & David J. Chalmers, The Extended Mind, 58 Analysis 10 (1998), available at consc.net/papers/extended.html.

- Andy Clark, Natural-Born Cyborgs: Minds, Technologies, and the Future of Human Intelligence 3 (2003).

- Bruce Sterling, Eight Theses on Cyborgism, Wired (Feb. 4, 2011, 11:21 PM), http://www.wired.com/beyond_the_beyond/2011/02/eight-theses-on-cyborgism.

- Clark, supra note 24, at 4.

- Id. at 5. See also Are We Becoming Cyborgs?, N.Y. Times, Nov. 30, 2012, http://www.nytimes.com/2012/11/30/opinion/global/maria-popova-evgeny-morozov-susan-greenfield-are-we-becoming-cyborgs.html (“You know, anyone who wears glasses, in one sense or another, is a cyborg. And anyone who relies on technology in daily life to extend their human capacity is a cyborg as well. So I don’t think that there is anything to be feared from the very category of cyborg. We have always been cyborgs and always will be.”).

- Gray, Mentor & Figueroa-Sarriera, supra note 14, at 6.

- Cyborg anthropology, a subspecialty launched at the Annual Meetings of the American Anthropological Association (AAA) in 1993, focuses on how humans and nonhuman objects interact and the resulting cultural changes. What is Cyborg Anthropology?, Cyborg Anthropology, http://cyborganthropology.com/What_is_Cyborg_Anthropology%3F (last visited Jan. 26, 2014). See generally Gary Lee Downey, Joseph Dumit & Sarah Williams, Cyborg Anthropology, in The Cyborg Handbook, supra note 7 (offering a brief overview of the purpose and dangers of cyborg anthropology).

- Amber Case, We Are All Cyborgs Now, TED, Dec. 2010, http://www.ted.com/talks/amber_case_we_are_all_cyborgs_now.html.

- Ray Kurzweil, The Singularity Is Near: When Humans Transcend Biology 9 (2005).

- Tim Wu, If a Time Traveller Saw a Smartphone, New Yorker, Jan. 13, 2014, http://www.newyorker.com/online/blogs/elements/2014/01/if-a-time-traveller-saw-a-smartphone.html.

- M. Mitchell Waldrop, The Dream Machine: J.C.R. Licklider and the Revolution that Made Computing Personal (2001).

- J.C.R. Licklider, Man-Computer Symbiosis (1960), available at http://groups.csail.mit.edu/medg/people/psz/Licklider.html.

- Id.

- Steve Mann & Hal Niedzviecki, Cyborg: Digital Destiny and Human Possibility in the Age of the Wearable Computer (2001).

- Oxford English Dictionary (2014).

- We have already seen fit to take the leap of wondering when machines might be elevated to the status of humans—that is, whether machines one day endowed with artificial intelligence should be granted the rights and recognition that accompany personhood. See, e.g., Alex Knapp, Should Artificial Intelligences Be Granted Civil Rights?, Forbes (Apr. 4, 2011, 1:42 AM), http://www.forbes.com/sites/alexknapp/2011/04/04/should-artificial-intelligences-be-granted-civil-rights. Yet relatively little has been said about the status of humans mediated by machines.

- Issues of access are closely linked to concerns about discrimination against those unable to afford or unwilling to undergo certain modifications. Antidiscrimination laws may be necessary to prevent cyborgs from being denied employment as a result of their modifications and unenhanced humans from being discriminated against for opposite reasons. Joseph Guyer, Cyborgs in the Workplace: Why We Will Need New Labor Laws, Future Culturalist, Apr. 12, 2013, http://futureculturalist.wordpress.com/2013/04/12/cyborgs-in-the-workplace-why-we-will-need-new-labor-laws; see also Andy Greenberg, Cyborg Discrimination? Scientist Says McDonald’s Staff Tried To Pull Off His Google-Glass-Like Eyepiece, Then Threw Him Out, Forbes (July 17, 2012, 8:00 AM), http://www.forbes.com/sites/andygreenberg/2012/07/17/cyborg-discrimination-scientist-says-mcdonalds-staff-tried-to-pull-off-his-google-glass-like-eyepiece-then-threw-him-out.

- See John Armitage, Militarized Bodies: An Introduction, 9 Body & Soc’y, no. 4, at 2 (2003), available at bod.sagepub.com/content/9/4/1.short (“[T]he social allure of the humanoid cyborg warrior, of new levels of militarized machinic incorporation and even of human-machine weapon systems, shows no sign of abating.”).

- Anti-Google Glass Site Wants to Fight a Future Full of Cyborgs, DVice (Mar. 18, 2013, 11:41 AM), http://www.dvice.com/2013-3-18/anti-google-glass-site-wants-fight-future-full-cyborgs.

- Id.

- See Gray, supra note 7, at 33 (describing the work of distinguished professor Jose M. R. Delgado, who has implanted electrodes in the brains of animals to control them).

- See, e.g., Moore v. Regents of the University of California, 793 P.2d 479 (Cal. 1990) (holding that a patient whose spleen cells were used to develop a commercially profitable cell line had no property rights in his excised cells).

- See Gray, supra note 5, at 33.

- Are We Living Among Cyborgs?, Deutsche Welle, http://www.dw.de/are-we-living-among-cyborgs/a-17361266 (last visited Jan. 18, 2014).

- Brad Allenby, Is Human Enhancement Cheating?, Slate (May 9, 2013, 7:30 AM), http://www.slate.com/articles/technology/superman/2013/05/human_enhancement_ethics_is_it_cheating.html.

- See Peter Conrad & Deborah Potter, Human Growth Hormone and the Temptations of Biomedical Enhancement, 26 Sociology of Health & Illness 184 (2004). For example, when synthetic versions of naturally occurring human growth hormone became available in the form of injections, the ethicist John Lantos suggested that shortness was becoming a “disease” so that doctors and insurance companies could justify its treatment. Gray, supra note 1, at 174; see also Barry Werth, How Short Is Too Short?, N.Y. Times Magazine, June 16, 1991. Lantos’s point relates to the larger phenomenon of “medicalization,” the social process by which problems are defined and treated as medical problems subject to medical intervention. The rise of medicalization, described as “one of the most potent transformations of the last half of the twentieth century in the West,” see Peter Conrad, The Medicalization of Society: On the Transformation of Human Conditions into Treatable Disorders 5 (2007), roughly coincides with the emergence of cyborg discourse. See generally id. (examining the social construction of disease and the corresponding expansion of medical jurisdiction over conditions only newly identified as subject to treatment).

- Claire Cain Miller, Google Glass to Be Covered by Vision Care Insurer VSP, N.Y. Times, Jan. 28, 2014, http://www.nytimes.com/2014/01/28/technology/google-glass-to-be-covered-by-vision-care-insurer-vsp.html.

- Id.

- Harbisson is the founder of the Cyborg Foundation, an international organization that assists humans in becoming cyborgs, as well as a vocal proponent of cyborg rights. Annalee Newitz, The First Person in the World to Become a Government-Recognized Cyborg, io9 (Dec. 2, 2013, 2:58 PM), http://io9.com/the-first-person-in-the-world-to-become-a-government-re-1474975237; see also Cyborg Foundation, http://www.cyborgfoundation.com (last visited Jan. 28, 2014).

- The Man Who Hears Colour, BBC (Feb. 15, 2012, 10:37 ET), http://www.bbc.co.uk/news/magazine-16681630.

- The distinction between prosthetics and assistive devices, however tenuous, is written into statute. For example, the Federal Employees’ Compensation Act (FECA) covers damage or destruction to prosthetic devices, categorizing it under “injury.” Eyeglasses and hearing aids, on the other hand, are replaced or otherwise compensated for only if the damage or destruction “is incident to a personal injury requiring medical services.” 5 U.S.C. § 8101(5).

- Linda MacDonald Glenn, Case Study: Ethical and Legal Issues in Human Machine Mergers (Or the Cyborgs Cometh), Annals of Health Law 175, 176 (2012), available at http://lawecommons.luc.edu/cgi/viewcontent.cgi?article=1024&context=annals.

- Id. at 176-77.

- Id. at 177.

- Id.

- See, e.g., Todd Bishop, No Google Glasses Allowed, Declares Seattle Dive Bar, GeekWire (Mar. 8, 2013, 1:27 PM), http://www.geekwire.com/2013/google-glasses-allowed-declares-seattle-dive-bar.

- See, e.g., Evan Schwartz, Google Glass Strip Clubs: Forget It!, Vibe, Apr. 8, 2013, http://www.vibe.com/article/google-glass-strip-clubs-forget-it.

- Steven Rosenbaum, Can You Really ‘Ban’ Google Glass?, Forbes (June 6, 2013, 8:58 PM), http://www.forbes.com/sites/stevenrosenbaum/2013/06/09/can-you-really-ban-google-glass.

- See, e.g., Rosa Golijan, From Strip Clubs to Theaters, Google Glass Won’t Be Welcome Everywhere, NBC, http://www.nbcnews.com/technology/strip-clubs-theaters-google-glass-wont-be-welcome-everywhere-1B9231620.

- Claire Evans, Panopticon In Reverse: Steve Mann Is Fighting for Your Cyborg Rights, Motherboard Vice, http://motherboard.vice.com/blog/panopticon-in-reverse-steve-mann-is-fighting-for-your-post-human-rights.

- Microchips Implanted in Mexican Officials, Associated Press (July 14, 2004, 9:21 ET), http://www.nbcnews.com/id/5439055/ns/technology_and_science-tech_and_gadgets/t/microchips-implanted-mexican-officials/#.UyJNS-ddVVM.

- NSF Report, supra note 38, at 9-10.

- Emmet Cole, The Cyborg Agenda: Extreme Users, Robotics Bus. Rev., Nov. 13, 2012, http://www.roboticsbusinessreview.com/article/the_cyborg_agenda_extreme_users.

- Ramachandran, supra note 42.

- European Soc’y of Cardiology, Remote Monitoring and Follow-up of Pacemakers and Implantable Cardioverter Defibrillators, 11 Europace 701, 701 (2009).

- What’s To Stop Hackers From Infecting Medical Devices?, Forbes (Apr. 20, 2012, 12:08 PM), http://www.forbes.com/sites/marcwebertobias/2012/04/20/whats-to-stop-hackers-from-infecting-medical-devices; see Tarun Wadhwa, Yes, You Can Hack A Pacemaker (And Other Medical Devices Too), Forbes (Dec. 6, 2012, 8:31 AM), http://www.forbes.com/sites/singularity/2012/12/06/yes-you-can-hack-a-pacemaker-and-other-medical-devices-too.

- Haran Burri & David Senouf, Remote Monitoring and Follow-up of Pacemakers and Implantable Cardioverter Defibrillators, 11 Europace 701, 701, 708 (2009).

- Andrea Peterson, Yes, Terrorists Could Have Hacked Dick Cheney’s Heart, Wash. Post (Oct. 21, 2013, 8:58 AM), http://www.washingtonpost.com/blogs/the-switch/wp/2013/10/21/yes-terrorists-could-have-hacked-dick-cheneys-heart.

- Google Glass Update Lets Users Wink and Take Photos, BBC, Dec. 18, 2013, http://www.bbc.com/news/technology-25426052.

- See Naam, supra note 48.

- Pfeifer & Bongard, supra note 14, at 265.

- Wu, supra note 32.

- Stop the Cyborgs, http://stopthecyborgs.org (last visited Mar. 12, 2014).

- Id.

- 425 U.S. 435 (1976).

- Id. at 443.

- See Cory Doctorow, Why Can’t Pacemaker Users Read Their Own Medical Data?, BoingBoing (Sept. 28, 2012, 8:08 PM), http://boingboing.net/2012/09/28/why-cant-pacemaker-users-rea.html.

- Health Care in the Digital Age: Who Owns the Data?, Wall St. J. (Nov. 28, 2012, 10:30 PM), http://live.wsj.com/video/health-care-in-the-digital-age-who-owns-the-data/28B6E0AD-8506-40B2-A659-20A9B696F524.html#!28B6E0AD-8506-40B2-A659-20A9B696F524 (“Who owns the rights to a patient’s digital footprint, and who should control that information? Not just for medical implants, but also for smartphone apps, and over-the-counter monitors that track things like sleep patterns and hours of physical activity.”). Campo must pay out of pocket for biannual meetings with his doctor in order to receive short summaries of his data. Id. The law requires doctors to hand over traditional medical records to patients who request them within 30 days, but it is unclear whether data collected outside the medical facility counts as part of the medical record. Id. And the Department of Health and Human Services has so far issued no guidelines addressing these questions. Id.

- Michael Millar, How Wearable Technology Could Transform Business, BBC (Aug. 5, 2013, 19:00 ET), http://www.bbc.com/news/business-23579404.

- See Steve Mann, Jason Nolan & Barry Wellman, Sousveillance: Inventing and Using Wearable Computing Devices for Data Collection in Surveillance Environments, Surveillance & Society 331 (2003), http://www.surveillance-and-society.org/articles1(3)/sousveillance.pdf.