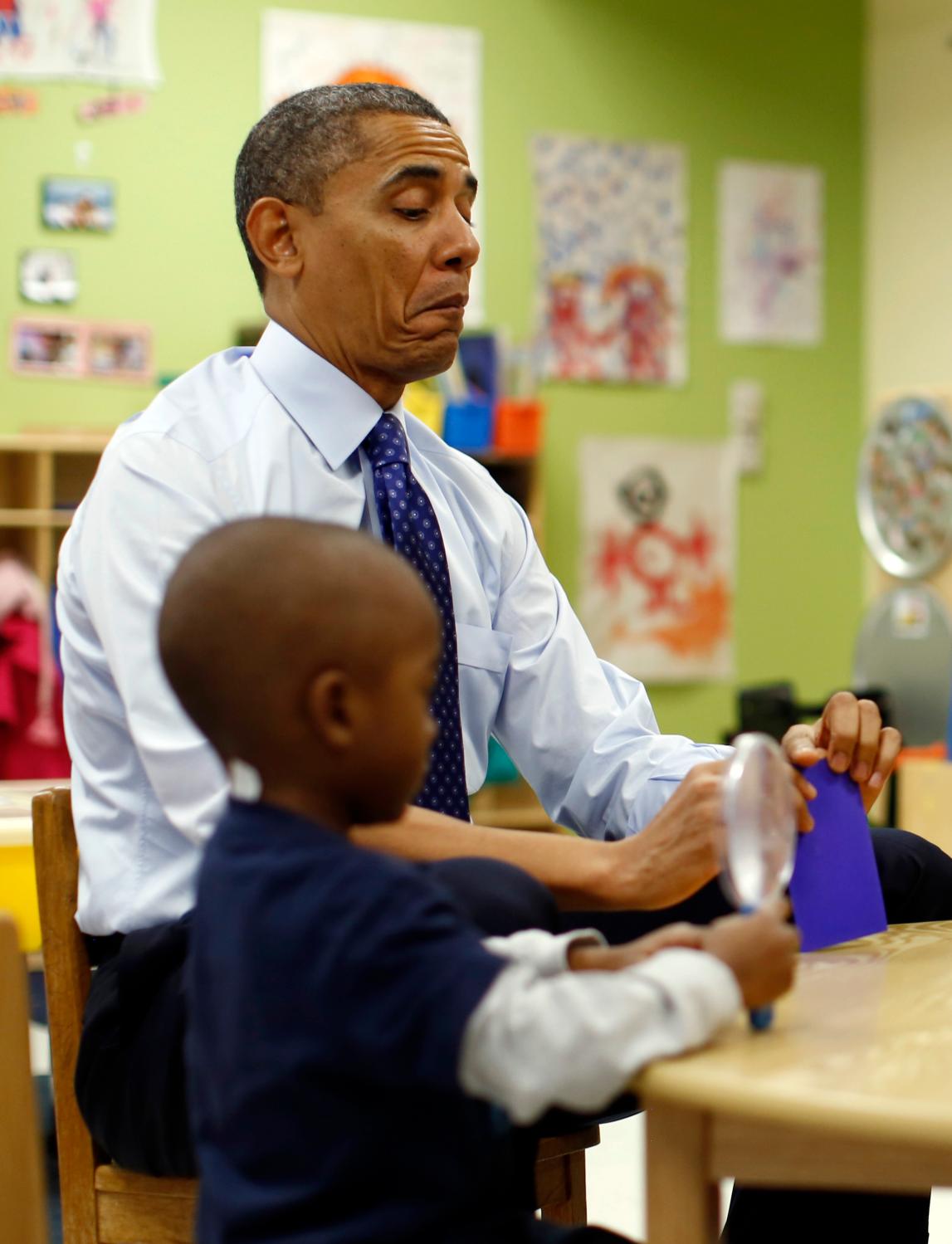

The state pre-k program in Georgia is put forward by supporters of universal pre-k as a model for the nation. No less an advocate than President Obama touted the Georgia program in his 2013 state of the union address:

“In states that make it a priority to educate our youngest children—like Georgia or Oklahoma—studies show students grow up more likely to read and do math at grade level, graduate high school, hold a job, form more stable families of their own.”

Unfortunately, the president misspoke. Georgia established its universal pre-k program in 1995. There have been no long-term follow-up studies of its participants into adulthood. Further, the first participants would have been about 21 years of age at the time of the president’s address, and thus would have been too young for any evidence on their job histories or family formation to have been collected, analyzed, and reported.

What does research tell us about the impact of Georgia’s universal pre-k on child outcomes? Two studies have examined the scores of Georgia students on the 4th and 8th grade assessments of reading and mathematics that are administered to a representative sample of students in every state every few years as part of the National Assessment of Educational Progress (NAEP). The question is whether the trend line for Georgia went up relative to other states as a result of Georgia’s introduction of universal pre-k. That is a reasonable question since the investment in pre-k is typically justified by advocates as producing long-term academic benefits for participants.

The author of the first study concludes that:

“The estimated effects of Universal Pre-K availability on test scores and grade retention are positive, but are not statistically significant, suggesting there were no discernible effects on statewide academic achievement.”

The authors of the second study state that “None of the basic … estimates is statistically significant,” but go on to suggest that there were positive effects for children from low-income families that were offset by negative effects for children from higher-income families.

Both these studies are careful econometric analyses, but their methods fall far short of providing the confidence in causal conclusions that could be expected from a well-designed and implemented randomized trial. Thus, while the studies don’t find statistically significant overall impacts of universal pre-k on 4th and 8th grade achievement, they do not, in my view, rule out the possibility that such impacts exist and could be detected with more sensitive methods.

In the absence of best evidence from a well-designed and implemented randomized trial, it is particularly important to have several sources of plausible evidence with which to triangulate an informed judgment of whether a program is achieving its goals. In that context, and given the importance of Georgia’s program as a model for national expansion of state pre-k, the newly released study of Georgia’s universal pre-k program, conducted by the reputable Frank Porter Graham Child Development Institute at the University of North Carolina at Chapel Hill, deserves serious attention. The authors of the study conclude that their findings:

“provide strong evidence that Georgia’s Pre-K provides a beneficial experience for enhancing school readiness skills for all children—boys and girls, those from families of different income levels, and children with differing levels of English language proficiency.”

I disagree.

This is the research design of the study as described by the authors:

“This study utilized the strongest type of quasi‐experimental research design for examining treatment effects, comparing two groups of children based on the existing age requirement for the pre‐k program. The treated group had completed Georgia’s Pre‐K Program (and was just entering kindergarten in the study year) and the untreated group was not eligible for Georgia’s Pre‐K Program the previous year (and was just entering pre‐k in the study year). Because the families of both groups of children chose Georgia’s Pre‐K and the children were selected from the same set of classrooms, the two groups were equivalent on many important characteristics; the only difference was whether the child’s birth date fell before or after the cut‐off date for eligibility for the pre‐k program.”

This is the regression-discontinuity design (RDD) that David Armor and I critiqued in a post from a couple of months ago – the design that has been used in high-visibility studies of district programs in Tulsa, the Abbott Districts in New Jersey, and Boston.

The Georgia study brings into stark relief one of the issues Armor and I raised previously, the implausibility of the root assumption of the RDD, that there are no systematic differences between the treatment group and the control group other than their falling on one side or the other of the arbitrary age cutoff for entry into pre-k. If the treatment and control groups in the RDD studies differ systematically on experiences and characteristics other than when they are eligible to enter pre-k, then any differences in school readiness in the two groups may be due to these other experiences and characteristics rather than to pre-k.

The children in the treatment group in the RDD studies have all successfully completed pre-k and entered kindergarten when they are tested for the one and only time, whereas the children in the control group have just started pre-k when they are tested for the one and only time. One of the reasons the children in the two groups may differ in ways that could bias the results is that some of the children who start pre-k will drop out, or transfer to a center not in the study, or finish pre-k but be held back from kindergarten by their parents. These children don’t find their way into the sample of children that is defined as the treatment group because they don’t make it to kindergarten to be tested, but they are there in the control group that is tested when the children are just starting pre-k.

The methodological problem for RDD studies that is caused by the leaky pipeline between pre-k entry and kindergarten entry was highlighted by the March report from the U.S. Department of Education’s Office for Civil Rights documenting the large number of children who are suspended from pre-k. Children who are going to be sent home from their pre-k center because they bite other kids, aren’t toilet trained, or have other behavioral problems are in the control group in the pre-k RDD studies. They’re gone from the treatment group. Voila! What looks like a huge treatment effect could be nothing more than differential attrition.
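To make the attrition argument concrete, here is a minimal simulation sketch. Everything in it is an assumption for illustration: the sample size, the dropout shares, and the rule that children are lost according to readiness plus random noise are hypothetical and are not taken from the Georgia study or any other RDD study. The true program effect is set to zero, so any difference that appears comes from who is missing from the tested treatment group.

```python
import random
import statistics


def attrition_only_effect(n=20000, dropout_share=0.2, noise_sd=1.0, seed=0):
    """Standardized mean difference when the true program effect is zero."""
    rng = random.Random(seed)

    # Control group: every child entering pre-k is tested.
    control = [rng.gauss(0.0, 1.0) for _ in range(n)]

    # Treatment cohort: same distribution, with no program effect added.
    cohort = [rng.gauss(0.0, 1.0) for _ in range(n)]

    # Hypothetical attrition rule: rank children on readiness plus random
    # noise and drop the lowest-ranked share before kindergarten testing.
    ranked = sorted(cohort, key=lambda score: score + rng.gauss(0.0, noise_sd))
    tested = ranked[int(dropout_share * n):]

    pooled_sd = (
        (statistics.pvariance(tested) + statistics.pvariance(control)) / 2
    ) ** 0.5
    return (statistics.mean(tested) - statistics.mean(control)) / pooled_sd


if __name__ == "__main__":
    for share in (0.0, 0.1, 0.2, 0.3):
        effect = attrition_only_effect(dropout_share=share)
        print(f"dropout {share:.0%}: apparent effect size {effect:.2f}")
```

Under these assumed settings the apparent effect grows as the dropout share grows, even though the simulated program does nothing. The sketch shows only the direction of the bias; it says nothing about how large the bias is in any actual study.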

The problem of non-equivalent groups in the RDD studies is exacerbated in the Georgia study by wildly different parental consent rates: 77% for children in the control group vs. 45% for children in the treatment group, further reduced to 30% because testing of the treatment group occurred in kindergarten classrooms and only 90% of the schools housing the kindergarten classrooms and 85% of the kindergarten teachers within those schools signed onto the study. Remember that for an age-cutoff RDD study to generate a valid estimate of the impact of a pre-k program, the treatment and control groups must be the same, except for the difference in their age-based eligibility for entry into pre-k. I’m sorry, but when 77% of the subjects in the control group volunteer to be tested, whereas only 30% of the treatment group subjects can be tested, the study is fatally flawed.

An important indirect signal of flaws in initial group equivalence in a program evaluation is implausibly large estimates of the program effect. As an anchor for thinking about the effects in the Georgia RDD study, consider that the effect on student learning over the course of a year of having a teacher in the top quartile vs. a teacher in the bottom quartile is 0.36 standard deviations. Such teacher effects are among the largest in education that lie outside the family. But the Georgia RDD study reports much larger effect sizes for pre-k, e.g., 0.89 for letter knowledge, 1.05 for letter-word identification, 1.20 for phonemic awareness, and 0.86 for counting.

Another point of comparison is from the recent meta-analysis of 84 preschool studies by Duncan and Magnuson. Included studies were published between 1960 and 2007, had a comparison group demographically similar to the treatment group, and had one or more measures of children’s cognition or achievement collected close to the end of the program treatment period. Almost all of the studies have serious flaws, but as a group they give a sense of the size of the effects that are expectable at the end of a pre-k program. For programs established after 1980, the meta-analysis finds an average effect size of 0.16.
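For a quick sense of scale, the arithmetic can be sketched as below. The effect sizes are the ones quoted in this post; the dictionary keys and the `ratios` helper are just illustrative names.

```python
# Effect sizes quoted in the post, in standard deviation units.
georgia_rdd = {
    "letter knowledge": 0.89,
    "letter-word identification": 1.05,
    "phonemic awareness": 1.20,
    "counting": 0.86,
}
benchmarks = {
    "top vs. bottom quartile teacher": 0.36,
    "post-1980 pre-k meta-analysis average": 0.16,
}


def ratios(effects, reference_points):
    """Return each reported effect divided by each benchmark effect."""
    return {
        (measure, name): effect / reference
        for measure, effect in effects.items()
        for name, reference in reference_points.items()
    }


if __name__ == "__main__":
    for (measure, name), ratio in ratios(georgia_rdd, benchmarks).items():
        print(f"{measure} vs. {name}: {ratio:.1f}x")
```

Each reported Georgia effect is more than twice the teacher-quartile benchmark and more than five times the meta-analytic average.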

In the context of either research on teacher effects or prior pre-k research, the effect sizes reported in the Georgia RDD study are remarkably large and consistent with the likely direction of the attrition biases introduced by the data collection strategy. The treatment group gets favored because mobility, suspensions, and consents (parents, schools, and teachers) change the composition of the treatment group toward higher achievers. As a result, I can’t place any credence in the findings.

In 1999, the National Academies of Science issued an important report on the state of education research, concluding that “in no other field is the research base so inadequate and little used.” We seem to have fixed the “little used” part when it comes to pre-k policy.

Surely early childhood, a period of life for which evolutionary processes have endowed the human species with the need for extended social dependency and opportunities to learn, and that brain science indicates is our most active period of neurological development, is critically important. But this intuition and the evidence behind it do not mean that social programs intended to enhance early development will do so, or that they will do so in the most productive way. We need strong research to guide early childhood policy, and we should not be hobbled by consensus views that are built on a mountain of empirical mush. What we don’t know in this area will hurt us, and there is a huge amount we don’t know.

P.S.

Some of my friends have quibbled with the A grade I gave to the Vanderbilt study of the Tennessee Voluntary Pre-K Program (TN-VPK) in a previous post. And some advocates of universal pre-k have responded to that rating much more strongly. The issue is that while the Vanderbilt study is a randomized trial, some of the analyses aren’t based on that randomization. In particular, for the analysis of tested cognitive outcomes at the end of kindergarten and first grade, the research team elected to compare outcomes only for the children who actually participated in TN-VPK with those who did not participate, irrespective of the conditions designated for them on the original randomized applicant list. In other words, children who won the lottery to attend TN-VPK but did not enroll weren’t included in the treatment group for the analysis. Dropping nonparticipants from the treatment condition for an analysis of differences in outcomes typically increases the size of an estimated effect, but not necessarily so. In any case, my critics are right: This analysis turns the Vanderbilt study into a quasi-experiment. I should have given it a lower grade for internal validity. I think an A- for internal validity is appropriate, which is the grade I gave Perry. I’ve updated my original post to that effect.